This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

By: Aimee Garcia, Program Manager, AI Benchmarking and Sonal Doomra, Program Manager 2, AI Benchmarking

The Azure NC-series is composed of virtual machines (VMs) available with single, multiple, or fractional NVIDIA GPUs. The different VM sizes are designed to run compute-intensive workloads. Some of these challenging workloads include running AI training and inference models that are integrated with NVIDIA CUDA, a parallel computing platform and programming model.

In this technical blog, we will use three NVIDIA Deep Learning Examples for training and inference to compare the NC-series VMs with 1 GPU each.

The three VM series tested are:

- NCas_T4_v3 powered by NVIDIA T4 Tensor Core GPUs and AMD EPYC 7V12 (Rome) CPUs

- NCsv3 powered by NVIDIA V100 Tensor Core GPUs and Intel Xeon E5-2690 v4 (Broadwell) CPUs

- NCads A100 v4 powered by NVIDIA A100 PCIe Tensor Core GPUs and 3rd-generation AMD EPYC 7V13 (Milan) processors

For this benchmarking activity, we ran BERT, SSD, and ResNet-50 from the NVIDIA Deep Learning Examples repository. We ran both training and inference for all three benchmarks on the NC-series VMs listed above.

BERT

Bidirectional Encoder Representations from Transformers (BERT) is a transformer-based machine learning technique for natural language processing (NLP) pre-training developed by Google. In the NVIDIA Deep Learning Examples, two different datasets were used to train the models. These datasets are General Language Understanding Evaluation (GLUE) and Stanford Question Answering Dataset (SQuAD) benchmarks. GLUE is a collection of resources for training, evaluating, and analyzing natural language understanding systems. SQuAD is a reading comprehension dataset that consists of questions on a set of Wikipedia articles, where the answer to every question is a segment of text from the corresponding reading passage.

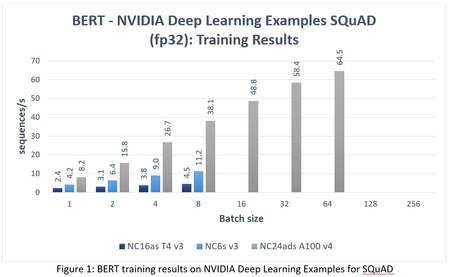

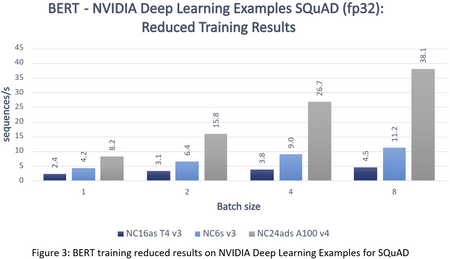

Training

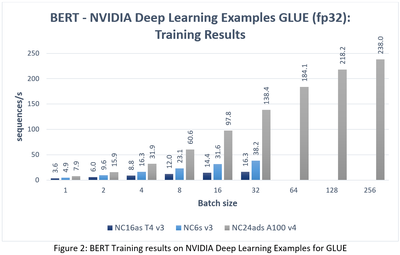

We compared the performance of BERT on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for training. The graphs below show the results obtained for BERT for both the SQuAD and GLUE datasets.

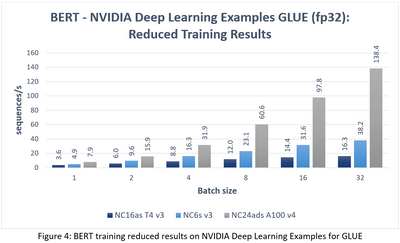

Due to the different amounts of memory per GPU type, BERT was unable to run for larger batch sizes on NC16as T4 v3 and NC6s v3 instances. The graphs below show the reduced set of results with batch sizes that were common for all SKUs.

As the graphs above show, the NC24ads A100 v4 has the best throughput (sequences/s) for BERT training, followed by the NC6s v3 and the NC16as T4 v3, respectively. The gain over the other NC-series VMs is largest at higher batch sizes: at batch size 32, it is almost 9 times faster than the NC16as T4 v3 and 4 times faster than the NC6s v3. At batch sizes of 2 or less, it is only slightly faster than the other NC-series VMs. Throughput increases with batch size until the GPU saturates at the largest batch sizes.
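The throughput and "times faster" figures discussed here can be derived as in the following minimal sketch. The helper names `throughput` and `speedup` are hypothetical, and the numbers in the usage lines are illustrative placeholders, not measurements from this post.

```python
def throughput(batch_size: int, num_batches: int, elapsed_s: float) -> float:
    """Items (sequences or images) processed per second."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return batch_size * num_batches / elapsed_s


def speedup(candidate: float, baseline: float) -> float:
    """How many times higher the candidate throughput is than the baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return candidate / baseline


# Illustrative placeholder timings only (assumed, not measured).
fast_vm = throughput(batch_size=32, num_batches=100, elapsed_s=10.0)
slow_vm = throughput(batch_size=32, num_batches=100, elapsed_s=80.0)
print(f"speedup: {speedup(fast_vm, slow_vm):.1f}x")
```

Comparing throughput at the same batch size, as done above, keeps the ratio meaningful, since throughput itself varies with batch size.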

Inference

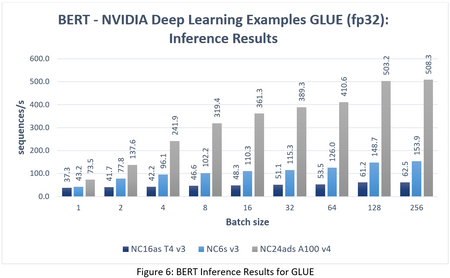

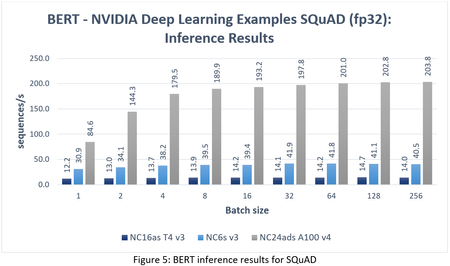

We compared the performance of BERT on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for inference. The graphs below show the results obtained for BERT for the SQuAD and GLUE datasets.

The NC24ads A100 v4 significantly outperforms all other VMs on BERT inference in terms of throughput (sequences/s). Inference throughput on the BERT benchmark increases with batch size on all NC-series VMs up to a certain batch size, and then levels off because of the limited GPU memory. The NC16as T4 v3 VM has the lowest throughput of the NC-series VMs compared in this document.
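One way to locate the batch size at which throughput levels off is sketched below. This is an assumption-laden illustration rather than the method used for the charts: the function name `saturation_batch_size` and the 5% gain threshold are hypothetical choices, and the sample values are placeholders.

```python
def saturation_batch_size(results: dict[int, float], min_gain: float = 0.05) -> int:
    """Return the smallest batch size after which moving to the next larger
    batch size improves throughput by less than `min_gain` (relative)."""
    sizes = sorted(results)
    if not sizes:
        raise ValueError("results must not be empty")
    for current, nxt in zip(sizes, sizes[1:]):
        gain = (results[nxt] - results[current]) / results[current]
        if gain < min_gain:
            return current
    # Throughput was still growing at the largest batch size tested.
    return sizes[-1]


# Placeholder throughput values keyed by batch size (assumed, not measured).
sample = {1: 100.0, 2: 190.0, 4: 350.0, 8: 500.0, 16: 510.0, 32: 512.0}
print(saturation_batch_size(sample))
```

A looser or tighter threshold shifts the reported saturation point, so the value is best read as a rough indicator.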

SSD

Single Shot Multibox Detector (SSD) is a method for detecting objects in images using a single deep neural network. SSD has two components: the backbone model and the SSD head. In the NVIDIA Deep Learning Examples, the backbone model is a ResNet-50 used as a feature extractor. The SSD head is another set of convolutional layers added to this backbone, and its outputs are interpreted as the bounding boxes and classes of objects in the spatial location of the final layer's activations. The dataset used to train the SSD model is the COCO 2017 dataset (COCO stands for Common Objects in Context).

Training

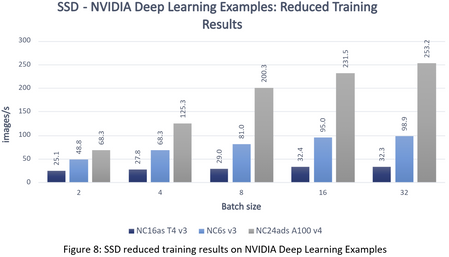

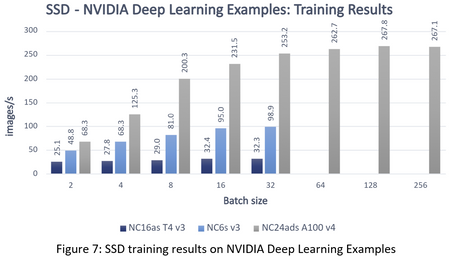

We compared the performance of SSD on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for training. The graphs below show the obtained results.

Due to the different amounts of memory per GPU type, SSD was unable to run for larger batch sizes on NC16as T4 v3 and NC6s v3 VMs. Figures 8 and 9 show the reduced results with batch sizes that were common for all SKUs.
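Reducing the results to the batch sizes common to all SKUs amounts to a set intersection, as in this minimal sketch. The function name `common_batch_sizes` is hypothetical, and the batch-size lists are placeholders rather than the sizes that actually ran in these tests.

```python
def common_batch_sizes(completed: dict[str, list[int]]) -> list[int]:
    """Return the batch sizes that ran successfully on every VM size."""
    if not completed:
        return []
    per_vm = [set(sizes) for sizes in completed.values()]
    return sorted(set.intersection(*per_vm))


# Placeholder data (assumed): batch sizes that completed on each VM size.
completed = {
    "NC24ads A100 v4": [1, 2, 4, 8, 16, 32, 64, 128],
    "NC6s v3": [1, 2, 4, 8, 16, 32],
    "NC16as T4 v3": [1, 2, 4, 8, 16, 32],
}
print(common_batch_sizes(completed))
```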

The NC24ads A100 v4 VM has the best throughput (images/s) for the SSD training workload. The gain over the other NC-series VMs is significant at higher batch sizes. At batch sizes of 2 or less, its performance is only slightly better than that of the other NC-series VMs.

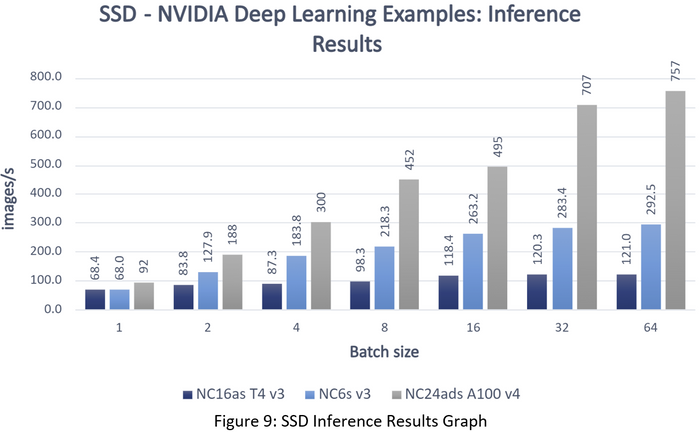

Inference

We compared the performance of SSD on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for inference. The graphs below show the obtained results.

The NC24ads A100 v4 outperforms all other VMs significantly on SSD inference in terms of the throughput (images/s) for higher batch sizes. While in general the NC6s v3 outperforms the NC16as T4 v3, for a single batch size the NC16as T4 v3 outperforms the NC6s v3.

ResNet-50

ResNet-50 is a variant of the ResNet model which has 48 convolution layers along with 1 MaxPool and 1 Average Pool layer. The ResNet-50 network can classify images in 1,000 object categories.

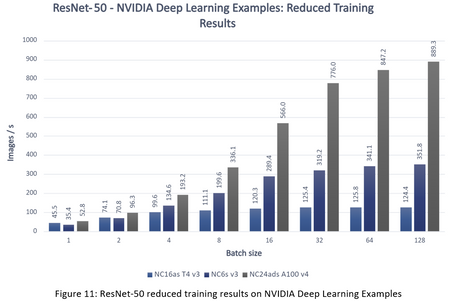

Training

We compared the performance of ResNet-50 on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for training. The graphs below show the obtained results.

Due to the different amounts of memory per GPU type, ResNet-50 was unable to run for the largest batch size on NC16as T4 v3 and NC6s v3 VMs. Figures 11 and 12 show the reduced results with batch sizes that were common for all SKUs.

The NC24ads A100 v4 VM has the best throughput (images/s) for the ResNet-50 training workload. The gain over the other NC-series VMs is significant at higher batch sizes. On all VMs except the NC16as T4 v3, throughput increases with batch size until GPU memory reaches capacity.

Inference

We compared the performance of ResNet-50 on the NC A100 v4, NCsv3 and NCas_T4_v3 series VMs for inference. The graphs below show the obtained results.

The NC24ads A100 v4 outperforms all other VMs significantly on ResNet-50 inference in terms of the throughput (images/s) for batch sizes >=8. NC6s v3 VMs follow the same trend on corresponding batch sizes, though performance is slower for smaller batches.

Benchmarking Results with Multi-Instance GPU Instances Enabled

Multi-Instance GPU (MIG), a key technology introduced in the NVIDIA Ampere architecture, enables the NVIDIA A100 Tensor Core GPUs, which power the NC A100 v4 VM series on Azure, to be partitioned into as many as seven instances, each fully isolated with high-bandwidth memory, cache, and compute cores. To read more about MIG, see NVIDIA's MIG documentation.

When multiple models run in parallel, A100 GPUs with MIG enabled deliver higher overall GPU performance at larger batch sizes across all 3 benchmarks and for every number of MIG instances tested. If you only have a single inference model to run, MIG is not recommended. Enabling more MIG instances also increases latency. Please note that when 3 MIG instances are enabled, 1/7th of the GPU isn’t used, which explains the lower results compared with 2 or 7 MIG instances at higher batch sizes.

With 7 MIG instances enabled, customers get more versatility, as this configuration matches or beats the full GPU across most batch sizes in each benchmark.

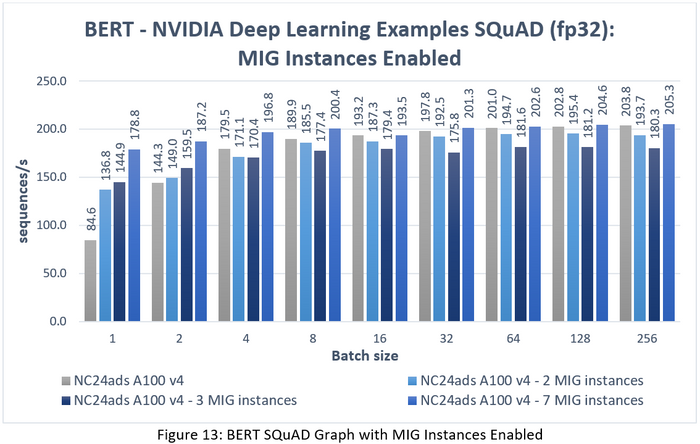

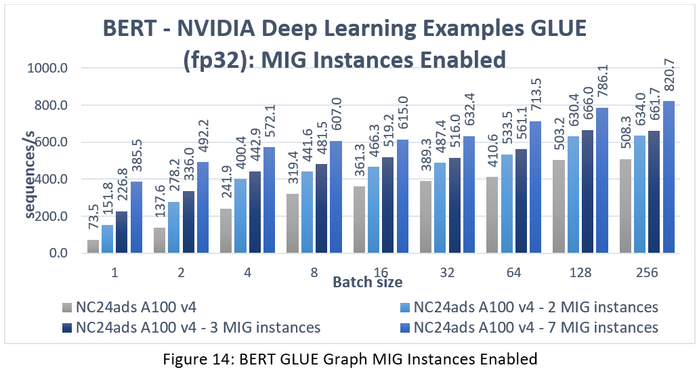

BERT

To understand how the performance varies on BERT with different MIG configurations enabled, we ran the SQuAD and GLUE datasets.

As noted above, and as the graphs show, the configuration with 3 MIG instances enabled takes a performance hit because 1/7th of the GPU is not utilized. With MIG enabled, the BERT models run in parallel on each instance, and the total number of sequences/s processed across all instances is reported. With one full GPU and no MIG enabled, performance is lower than with MIG enabled at lower batch sizes, although there is little difference at higher batch sizes for this benchmark. This could be because, for a large model like BERT on the SQuAD dataset, higher batch sizes are more efficient and make good use of the system’s resources.

For the GLUE benchmark, we observed that BERT does much better when MIG instances are enabled.
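The reported MIG figure is the total across instances, which can be sketched as a simple sum and then compared with the full-GPU result. The function names here are hypothetical and the numbers are placeholders, not results from these benchmarks.

```python
def aggregate_throughput(per_instance: list[float]) -> float:
    """Total items/s across all MIG instances running in parallel."""
    return sum(per_instance)


def gain_over_full_gpu(per_instance: list[float], full_gpu: float) -> float:
    """Ratio of aggregate MIG throughput to the throughput of one full GPU."""
    if full_gpu <= 0:
        raise ValueError("full_gpu must be positive")
    return aggregate_throughput(per_instance) / full_gpu


# Placeholder values (assumed): 7 instances at 50 sequences/s each,
# compared with a full GPU at 100 sequences/s for the same batch size.
seven_mig = [50.0] * 7
print(aggregate_throughput(seven_mig))
print(gain_over_full_gpu(seven_mig, full_gpu=100.0))
```

A ratio above 1 means the MIG configuration processes more in total than the full GPU at that batch size, even though each individual instance is slower.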

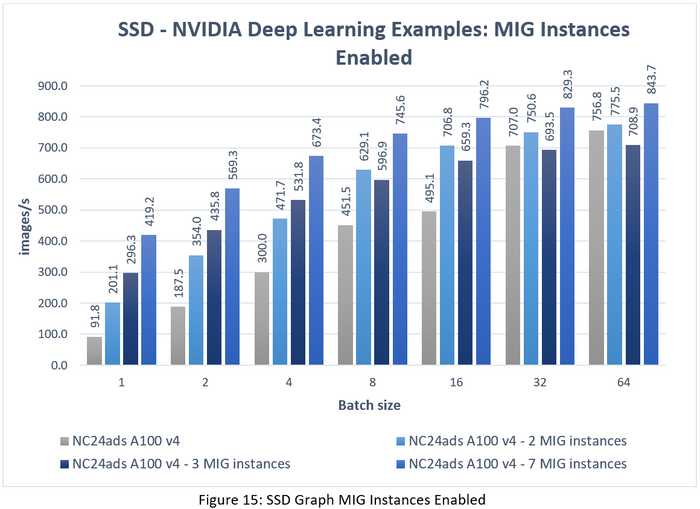

SSD

To understand how the performance varies on SSD with the different MIG configurations enabled, we ran the benchmark with 2, 3, and 7 MIG instances.

For the SSD benchmark, throughput is much higher with MIG enabled (4X with 7 MIG instances enabled) at lower batch sizes. In this case, MIG gives customers more flexibility to run models in parallel when the memory consumption of their workloads is low. At higher batch sizes, however, the performance gain is less significant. Here as well, the configuration with 3 MIG instances enabled is slower than those with 2 and 7 MIG instances enabled for batch sizes >=8.

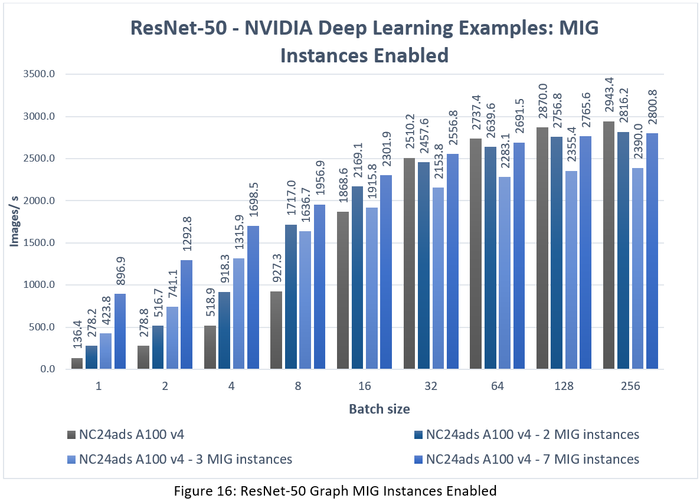

ResNet-50

To understand how the performance varies on ResNet-50 with the different MIG configurations enabled, we ran the benchmark with 2, 3, and 7 MIG instances. The results are shown in Figure 16.

For the ResNet-50 benchmark, latency comes into play at higher batch sizes, so the performance with 7 MIG instances enabled is slightly lower than that of one full GPU. The rest of the observations are the same as for the other benchmarks.

Recreate the results in Azure

To recreate the results shown in the charts above, please see the following links and choose the correct ones for the desired VM type and benchmark.