This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

Content originally authored by Brandon Dixon, Microsoft Partner of Product Management, on May 10, 2016.

Did you know that Microsoft crawls the internet's web pages every day, issuing up to 2 billion HTTP requests daily? Because of this, Defender TI users can leverage derived datasets such as Trackers, Components, Host Pairs, and Cookies during their investigations. Microsoft's web crawling technology is powerful because it's not just a simulation but a fully instrumented browser. To show what this means, we thought it would be useful to go behind the scenes and look at a sample crawl response from the Microsoft Defender Threat Intelligence (Defender TI) toolset.

Web Crawling Process

A web crawl is reasonably straightforward to understand. Much as you digest data on the pages you browse online, Microsoft's web crawlers do the same, only faster, automated, and built to store the entire chain of events. When web crawlers process web pages, they take note of links, images, dependent content, and other details to construct a sequence of events and relationships. Web crawls are driven by an extensive set of configuration parameters that can dictate an exact URL starting point or something more complex, like a search engine query.

Once they have a starting point, most web crawls perform the initial crawl, note all the links within the page, and then crawl those follow-on pages, repeating the same process. To avoid crawling forever, most web crawls have a depth limit that stops them 25 or so links away from the initial starting point. Microsoft's configuration allows different parameters to be set that dictate the operation of the web crawl.
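To make the depth limit concrete, here is a minimal sketch of a breadth-first crawl that stops following links past a configured depth. It is not Microsoft's crawler: the `FAKE_WEB` dictionary, the `crawl` function, and its parameters are hypothetical stand-ins, and the "web" is an in-memory map rather than real HTTP requests.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
FAKE_WEB = {
    "http://start.example/": ["http://a.example/", "http://b.example/"],
    "http://a.example/": ["http://c.example/"],
    "http://b.example/": ["http://start.example/"],
    "http://c.example/": ["http://d.example/"],
    "http://d.example/": [],
}


def crawl(start_url, max_depth, fetch_links=lambda url: FAKE_WEB.get(url, [])):
    """Breadth-first crawl that does not follow links beyond max_depth."""
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited = []  # (url, depth) in the order pages were crawled
    while queue:
        url, depth = queue.popleft()
        visited.append((url, depth))
        if depth == max_depth:
            continue  # depth limit reached: record the page, follow nothing
        for link in fetch_links(url):
            if link not in seen:  # never crawl the same page twice
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


for url, depth in crawl("http://start.example/", max_depth=2):
    print(depth, url)
```

With `max_depth=2`, the page at `http://d.example/` is never reached because it sits three links away from the starting point.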

Knowing that malicious actors are always trying to avoid detection, Microsoft has invested a great deal of time and effort into its proxy infrastructure. This includes a combination of standard servers and mobile cell providers being used as egress points deployed worldwide. The ability to simulate a mobile device in the region where it's being targeted means that Microsoft crawlers are more likely to observe the complete exploitation chain.

Collected Data

Microsoft has an internal web front-end tool that takes the raw data collected from a web crawl and slices it into the following sections.

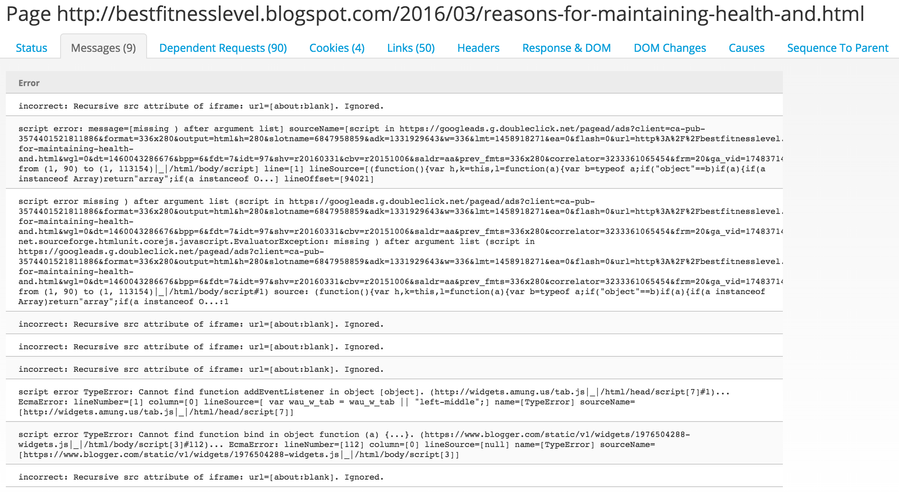

Messages

If you ever view a web page in Microsoft Edge and fire up the Microsoft Edge Developer Tools, you might notice a few messages in the console. Sometimes these are leftover debugging statements from the site author, and other times they are errors encountered when rendering the page. These messages are stored with the web crawl details and presented in raw form. While not always helpful, it's possible that unique messages could be buried inside of the messages pane that may show signs of a common author or malicious tactic.

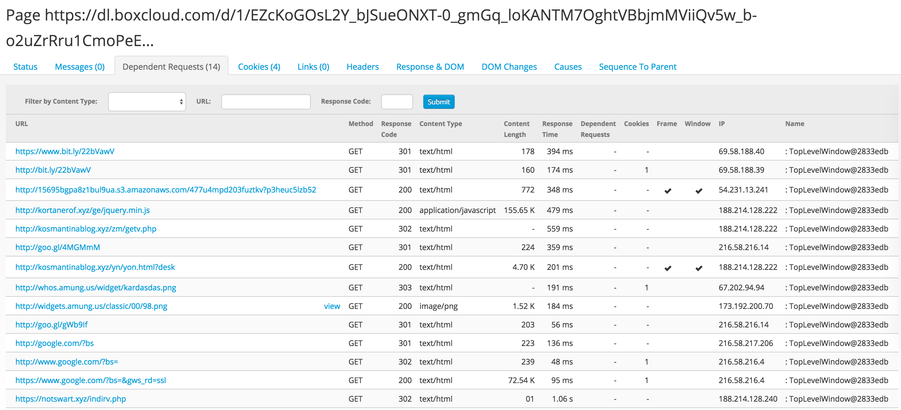

Dependent Requests

Most modern web pages are not created from just one local source. Instead, they are constructed from many different remote resources that get assembled to form a cohesive user experience. Requests for images, stylesheets, JavaScript files, and other resources are logged under dependent requests and noted accordingly. Metadata from the request itself is associated with each entry and could include things like the HTTP response code, content length, cookie count, content header, and load data.

Dependent requests are a great place to start when investigating a suspicious website. Seeing all the requests made to create the page, and how it was loaded, can sometimes reveal things like malvertising through injected script tags or iframes. Additionally, seeing the resolving IP address means we can use that as another reference point for our investigation. Passive DNS data may reveal additional hostnames that the team has already actioned.

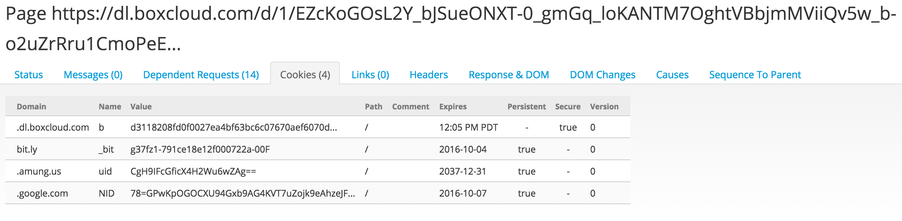

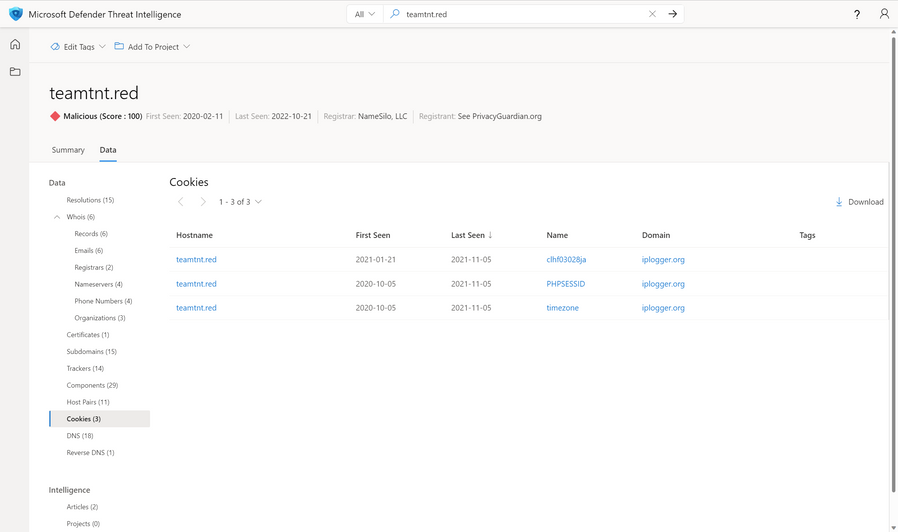

Cookies

Just like a standard browsing experience, Microsoft web crawlers understand and support the concept of cookies. These are stored with the requests and include the original names and values, supported domains, and whether or not the cookie has been flagged as secure. Often associated with legitimate web behavior, cookies are also used by malicious actors to keep track of infected victims or store data to be utilized later. Using a cookie's unique names or values allows an analyst to begin making correlations that would otherwise be lost in datasets like passive DNS or web scraping.
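The correlation idea can be sketched with Python's standard `http.cookies` module. This is only an illustration under stated assumptions: the record layout, the function names `parse_set_cookie` and `hosts_by_cookie_name`, and the sample hostnames and cookie values are all hypothetical, not the Defender TI schema.

```python
from collections import defaultdict
from http.cookies import SimpleCookie


def parse_set_cookie(header_value):
    """Turn one Set-Cookie header value into simple records."""
    cookie = SimpleCookie()
    cookie.load(header_value)
    records = []
    for name, morsel in cookie.items():
        records.append({
            "name": name,
            "value": morsel.value,
            "domain": morsel["domain"],
            "secure": bool(morsel["secure"]),  # True only if the Secure flag was set
        })
    return records


def hosts_by_cookie_name(observations):
    """Index hostnames by cookie name; observations are (hostname, header) pairs."""
    index = defaultdict(set)
    for hostname, header_value in observations:
        for record in parse_set_cookie(header_value):
            index[record["name"]].add(hostname)
    return index


# Hypothetical observations: two unrelated-looking hosts set the same cookie name.
observations = [
    ("a.example", "trk_id=victim42; Domain=tracker.example; Path=/; Secure"),
    ("b.example", "trk_id=victim77; Domain=tracker.example; Path=/"),
    ("c.example", "session=xyz; Path=/"),
]
index = hosts_by_cookie_name(observations)
print(sorted(index["trk_id"]))
```

A shared, unusual cookie name links `a.example` and `b.example` even when nothing in their DNS records would connect them.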

Links

To crawl a web page thoroughly, Microsoft crawlers need to understand the HTML Document Object Model (DOM) to extract additional links. Each A element shows the original href and text properties while preserving the XPath location where it was found within the DOM. While a bit abstract, having the XPath location allows an analyst to begin thinking about the structure of the web page in a different way. Longer path locations imply deeper nesting of link elements, while shorter locations could be top-level references.

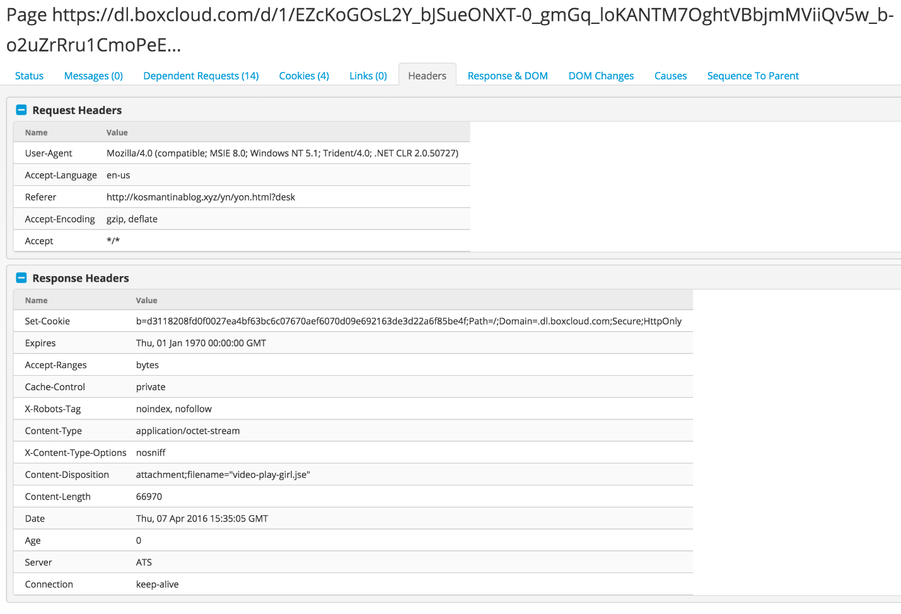
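As a rough sketch of how link records with XPath locations could be produced, the example below uses Python's standard `html.parser`. The `LinkExtractor` class, `extract_links` helper, and sample markup are hypothetical, and the parser deliberately ignores malformed markup that a real browser would repair.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class LinkExtractor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.stack = []       # (tag, position among same-tag siblings) per open element
        self.counters = [{}]  # per open element: how many children of each tag seen
        self.links = []
        self._current = None  # the <a> element being read, if any

    def handle_starttag(self, tag, attrs):
        siblings = self.counters[-1]
        siblings[tag] = siblings.get(tag, 0) + 1
        if tag in VOID_TAGS:
            return
        self.stack.append((tag, siblings[tag]))
        self.counters.append({})
        if tag == "a":
            xpath = "".join(f"/{t}[{i}]" for t, i in self.stack)
            self._current = {"href": dict(attrs).get("href"), "text": "", "xpath": xpath}

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.stack or self.stack[-1][0] != tag:
            return  # this sketch ignores stray or mismatched end tags
        if tag == "a" and self._current is not None:
            self._current["text"] = self._current["text"].strip()
            self.links.append(self._current)
            self._current = None
        self.stack.pop()
        self.counters.pop()

    def handle_data(self, data):
        if self._current is not None:
            self._current["text"] += data


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


SAMPLE_HTML = (
    '<html><body><a href="/top">Top</a><br>'
    '<div><ul><li><a href="http://x.example/">Deep</a></li></ul></div>'
    "</body></html>"
)
for link in extract_links(SAMPLE_HTML):
    print(link["xpath"].count("/"), link["xpath"], link["href"], link["text"])
```

Counting the path segments gives a quick nesting depth: the first link sits three levels down, the second six.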

Headers

Headers make it possible for the modern web to work correctly. They dictate the rules of engagement and describe what the client is requesting and how the server responds. Microsoft keeps both the request and response headers and preserves the keys, the values, and the order in which they were observed. This process captures standard and custom headers, creating the opportunity for unique fingerprinting of specific servers or services.

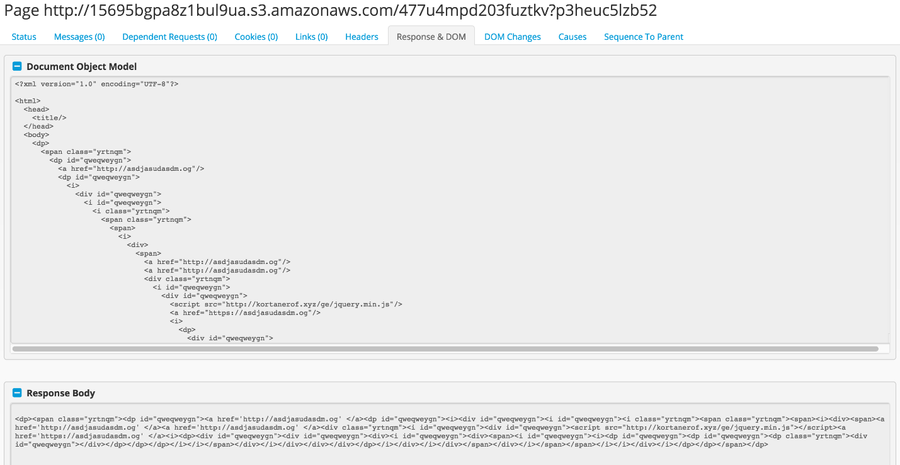
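One way header order could feed a fingerprint is to hash the ordered list of header names. The sketch below is an assumption for illustration, not the method Defender TI uses; `header_order_fingerprint` and the sample headers are hypothetical.

```python
import hashlib


def header_order_fingerprint(headers):
    """Hash the header names in observed order; values are deliberately ignored.

    headers is a list of (name, value) tuples in the order they were received.
    """
    names = [name.lower() for name, _value in headers]
    digest = hashlib.sha256(",".join(names).encode("utf-8")).hexdigest()
    return digest[:16]  # shortened for readability


server_a = [("Server", "nginx"), ("X-Powered-By", "PHP/7.4"), ("Content-Type", "text/html")]
server_b = [("Server", "nginx"), ("X-Powered-By", "PHP/8.1"), ("Content-Type", "text/plain")]
server_c = [("Content-Type", "text/html"), ("Server", "nginx"), ("X-Powered-By", "PHP/7.4")]

# Same names in the same order match even when the values differ.
print(header_order_fingerprint(server_a) == header_order_fingerprint(server_b))
# Same names in a different order do not match.
print(header_order_fingerprint(server_a) == header_order_fingerprint(server_c))
```

Ignoring values keeps the fingerprint stable across version bumps, while the ordering captures how a particular server or kit emits its headers.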

Response and DOM

Web crawling technology is arguably most useful for preserving what a page looked like at a certain point in time. Microsoft not only keeps the entire HTML content from the crawled page but also saves any dependent file used in the loading process. This means things like stylesheets, images, JavaScript, and other details are also kept. Having all the local contents means that Microsoft can recall or recreate the web page as it was crawled. When it comes to malicious campaigns, it's not uncommon for actors to keep their infrastructure up for only a short period to avoid attracting attention. From an analyst's perspective, being able not only to see but also to interact with a page that may no longer exist is invaluable in understanding the nature of the attack.

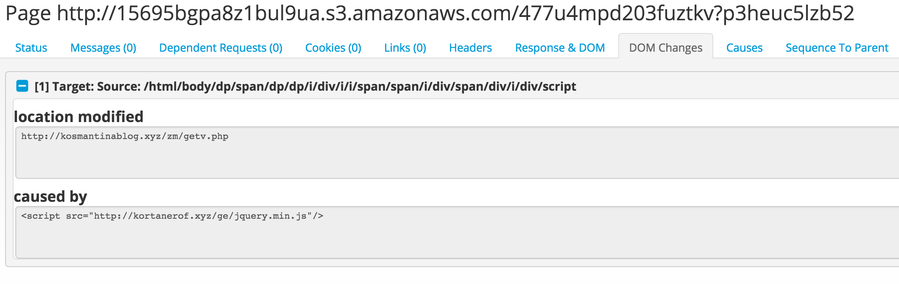

DOM Changes

Today's web experience is mainly built on dynamic content. Web pages are fluid and may change hundreds of times after their initial load. Some web pages are simply shells that only become populated after a user has requested the page. If the Microsoft web crawlers only downloaded the initial pages, many would appear blank or lack substantial content. Because the crawlers act as a full browser, they can observe changes made to the page's structure and log them in a running list. This log becomes extremely powerful when determining what happened inside the browser after the page loaded. What might start as a benign shell of a page may eventually become a platform for exploit delivery or the start of a long redirection sequence to a maze of subsequent pages.

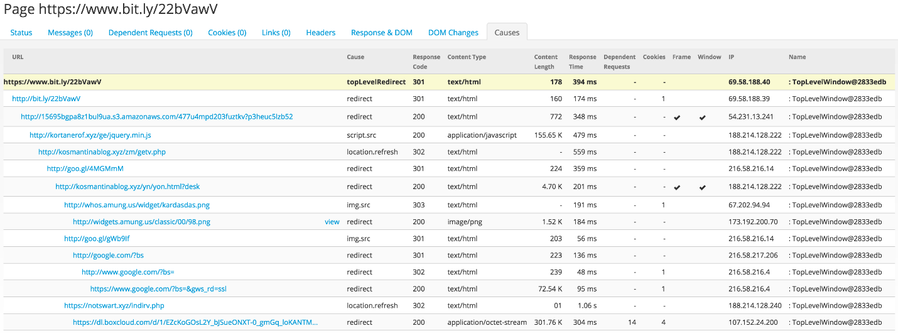

Causes

If you think of a web crawl as a tree structure, you may have an initial trunk with multiple branches where each additional branch could have its own set of branches. The cause section of Defender TI content subscribes to this idea of a tree and shows all web requests in the order of how they were called. Viewing the relationships to multiple web pages this way allows us to gain an understanding of the role of each page. The above example shows a long-running chain of redirection sequences starting with bit.ly, then Google, and eventually ending at Dropbox.

Data from the cause tree is what we use to create the host pair dataset inside Defender TI. We can be flexible in our detection methods by seeing the entire chain of events. For example, if we had the final page of an exploit kit, we could just use the cause chain to walk back up to the initial page that caused it. Along the way, this could reveal more malicious infrastructure or maybe even point to something like a compromised site.
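Walking back up the cause chain can be sketched as a simple parent lookup. The `cause` mapping, the `walk_to_root` function, and the URLs below are hypothetical; the real cause tree is richer than a single parent pointer per URL.

```python
def walk_to_root(url, cause):
    """Follow parent pointers from a page back to the page that started the chain.

    cause maps each URL to the URL that caused it to load (None for the root).
    """
    chain = [url]
    while True:
        parent = cause.get(chain[-1])
        if parent is None or parent in chain:  # root reached, or a loop detected
            break
        chain.append(parent)
    return chain


# Hypothetical redirection sequence ending at an exploit kit landing page.
cause = {
    "https://short.example/abc": None,
    "https://redirect.example/r": "https://short.example/abc",
    "https://landing.example/kit": "https://redirect.example/r",
}
for step in walk_to_root("https://landing.example/kit", cause):
    print(step)
```

Each URL printed on the way up is a candidate for further investigation, since any of them could be malicious infrastructure or a compromised site.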

Web Crawls for the Masses

As you can see from the above screenshots and explanations, Microsoft has a lot of data that can be used for analysis purposes. Our goal with Microsoft Defender Threat Intelligence is to introduce this data in a format that is easy to use and understand.

Disclaimer

Many of the indicators noted in the subsequent sections may be malicious. As a precaution, Microsoft has defanged these indicators for your protection. Indicator searches performed in Microsoft Defender Threat Intelligence are safe to execute.

Host Pairs

In the "cause" section of the crawl, it was easy to see how all the links related to each other and formed a tree structure. By keeping this structure, we can derive a set of host pairs based on the presence of referencing images and redirection sequences between two hosts. For example, teamtnt[.]red (parent host) references an image hosted by iplogger[.]org (child host). The two hosts also seem to have a symbiotic relationship, because another host pair shows iplogger[.]org redirecting users to teamtnt[.]red as well.

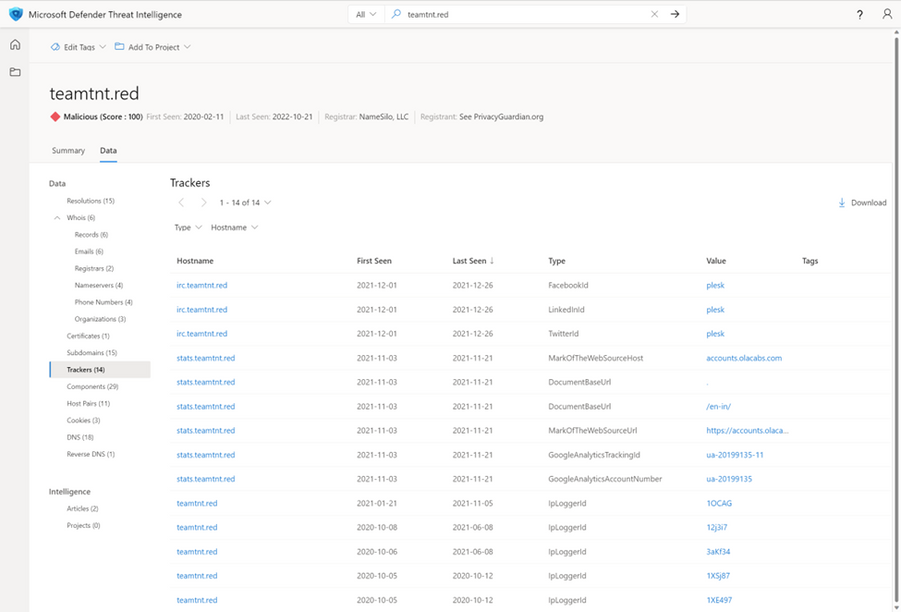
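Deriving host pairs from cause-tree edges could look roughly like the sketch below. The edge format, the cause labels, the `host_pairs` function, and the hostnames are assumptions made for illustration rather than the actual Defender TI data model.

```python
from urllib.parse import urlsplit


def host_pairs(edges):
    """Reduce (parent_url, child_url, cause) edges to unique cross-host pairs."""
    pairs = set()
    for parent_url, child_url, cause in edges:
        parent = urlsplit(parent_url).hostname
        child = urlsplit(child_url).hostname
        if parent and child and parent != child:  # same-host edges are not pairs
            pairs.add((parent, child, cause))
    return sorted(pairs)


# Hypothetical edges: one host embeds an image from another, which redirects back.
edges = [
    ("http://parent.example/", "http://parent.example/style.css", "link.href"),
    ("http://parent.example/", "https://logger.example/pixel.png", "img.src"),
    ("http://parent.example/page2", "https://logger.example/pixel.png", "img.src"),
    ("https://logger.example/go", "http://parent.example/", "redirect"),
]
for parent, child, cause in host_pairs(edges):
    print(parent, "->", child, f"({cause})")
```

Pairs pointing in both directions, as in this sample, are the kind of mutual relationship described above.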

Trackers

Since Microsoft stores all the page content from the web crawls, it's easy to go back in time to extract content from the DOM. Trackers are generated from inline processing during the crawl and from post-processing of data based on extraction patterns. Using regular expressions or code-defined logic, we can extract codes from the web page content, pull the timestamp of the occurrence, and record the hostname it was associated with.

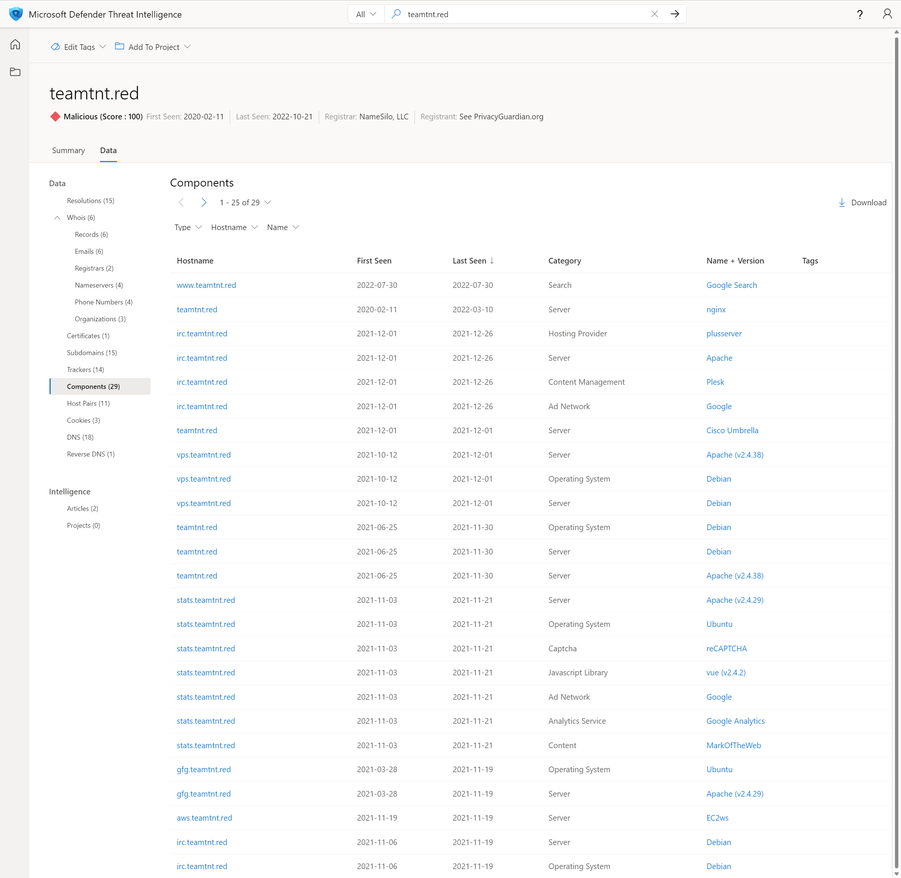
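A regular-expression extraction of this kind might look like the following sketch. The single pattern shown (a classic `UA-` style analytics ID), the tracker type label, the `extract_trackers` function, and the sample page are illustrative assumptions; the patterns Microsoft actually uses are not described here.

```python
import re
from datetime import datetime, timezone

# Hypothetical pattern table: tracker type -> compiled extraction pattern.
TRACKER_PATTERNS = {
    "GoogleAnalyticsAccountNumber": re.compile(r"\bUA-\d{4,10}-\d{1,4}\b"),
}


def extract_trackers(hostname, html, seen_at):
    """Return one record per unique tracker value found in the page content."""
    found = []
    for tracker_type, pattern in TRACKER_PATTERNS.items():
        for value in sorted(set(pattern.findall(html))):
            found.append({
                "type": tracker_type,
                "value": value,
                "hostname": hostname,
                "seen_at": seen_at,
            })
    return found


page = """
<script>ga('create', 'UA-1234567-2', 'auto');</script>
<script>ga('send', 'pageview'); // UA-1234567-2</script>
"""
crawl_time = datetime(2016, 5, 10, tzinfo=timezone.utc)
for record in extract_trackers("shop.example", page, crawl_time):
    print(record["type"], record["value"], record["hostname"], record["seen_at"].isoformat())
```

Because the same ID is often reused across sites run by one operator, pivoting on the extracted value can surface related hostnames.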

Components

Creating the Components dataset is a combination of header and DOM content parsing similar to that of the Trackers dataset. Regular expressions and code-defined logic help extract details about the server infrastructure and which web libraries were associated with a page.
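Combining header and DOM parsing might be sketched like this. The two patterns, the category labels, and the `extract_components` function are hypothetical examples and cover only a `Server` header and a jQuery script reference.

```python
import re

# Matches values such as "nginx/1.18.0" or a bare "Apache".
SERVER_RE = re.compile(r"^(?P<name>[A-Za-z][\w-]*)(?:/(?P<version>[\d.]+))?")
# Matches script file names such as "jquery-3.6.0.min.js".
JQUERY_RE = re.compile(r"jquery[-.](?P<version>\d+(?:\.\d+)+)(?:\.min)?\.js", re.IGNORECASE)


def extract_components(headers, html):
    """Return (category, name, version) tuples found in headers and page content."""
    components = []
    for name, value in headers:
        if name.lower() == "server":
            match = SERVER_RE.match(value)
            if match:
                components.append(("Server", match.group("name"), match.group("version")))
    for match in JQUERY_RE.finditer(html):
        components.append(("JavaScript Library", "jQuery", match.group("version")))
    return components


headers = [("Content-Type", "text/html"), ("Server", "nginx/1.18.0")]
html = '<script src="/js/jquery-3.6.0.min.js"></script>'
for component in extract_components(headers, html):
    print(component)
```

A server that omits its version, such as a bare `Apache` value, still yields a component record with the version left as `None`.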

Cookies

Earlier, we learned about how Microsoft collects cookies and how actors may use them to track their victims. Above is a screenshot of cookies observed by teamtnt[.]red, which had originated from iplogger[.]org.

Want to further your learning?

Check out the Microsoft Defender Threat Intelligence Level 400 Ninja Training and Product Documentation on Microsoft Learn.