This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Confidential Computing with Serverless Functions

By Roy Hopkins, R3

Serverless functions are a cloud-native development model that allows customers to build applications and services without having to manage infrastructure. Services such as Azure Functions automatically scale based on demand and charge only for the actual time spent executing the application code.

The simplicity of provisioning, managing, and scaling serverless functions makes them extremely popular and provides a compelling reason to move workloads to the cloud. However, there are downsides and concerns when working with Function as a Service (FaaS) platforms which can prevent some enterprises from migrating to the cloud. How can we help address those concerns?

Trust in serverless functions

Let's take a look at a diagram that shows how a FaaS platform might be architected so we can better understand some of these concerns.

Firstly, the developer's code that implements the serverless functions must be handed over to the platform for storage in a database, ready to be invoked by the user. For some highly sensitive applications, service providers may want to keep their code, which is their intellectual property, private even from normally trusted entities such as the cloud service provider (CSP).

Even if we do trust our CSP, what happens if an attacker uploads a modified version of the service code, designed to manipulate results, or even steal data sent to the service?

When it comes to invoking a function, there is a fairly complicated pipeline and set of services involved. The user makes a request which is then queued and subsequently allocated to a container that can run the request. The code is then provisioned into the container from storage and parsed, and the function is executed. Any return value or result from the function is then sent back to the user. Every stage in this process increases the attack surface.

In summary, there are a number of areas that can be hardened in order to provide a higher level of trust in FaaS implementations:

- Privacy of developer code, protecting it from the FaaS platform.

- Integrity of the developer code: ensuring that the code executed by the platform is exactly the code that was provisioned by the developer.

- Privacy and integrity of user-provided data: Increasing the level of protection so that the data provided by the user can only be accessed and processed by the developer code and cannot be observed or modified by any other entity.

- Privacy and integrity of the result: Protecting data returned by the service so that it can only be seen by the user and that it cannot be modified by any other entity.

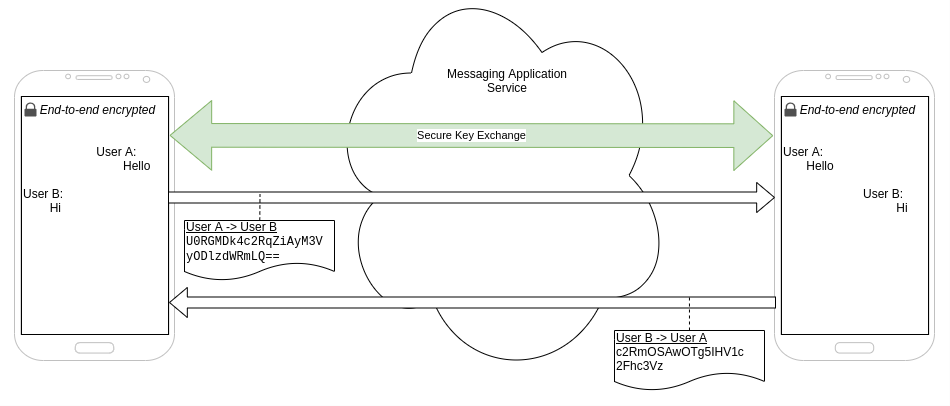

Can end-to-end encryption help?

Some messaging services use end-to-end encryption to secure users' conversations. This means that when a new conversation is established, the parties agree upon an encryption key using a secure key exchange, which ensures that only the approved parties have access to the key, and not the developer of the messaging service.

Whenever a message is sent over the network between parties, the message is encrypted on the sender's device and decrypted on the recipient's device. The solution is designed to prevent unauthorized parties from decrypting or seeing the plaintext contents of the message: neither the developer nor the CSP hosting the service can read it. This works because all of the parties that require access to the decrypted data (the sender and recipient) are trusted. The developer and CSP are untrusted, but they also do not need access to the decrypted data in order to provide the service.
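To make the key agreement step concrete, here is a minimal sketch of a Diffie-Hellman style exchange using only the Python standard library. The group parameters `P` and `G` and the names `make_keypair` and `shared_key` are illustrative assumptions, not taken from any particular messaging service, and the parameters are far too weak for real use. A production system would rely on a vetted cryptographic library.

```python
import hashlib
import secrets

# Toy group parameters, for illustration only (assumption, not a secure choice).
P = 2**127 - 1  # a Mersenne prime
G = 3


def make_keypair():
    """Return a (private, public) pair for one party."""
    private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_key(own_private, peer_public):
    """Derive a 32-byte symmetric key from the shared secret."""
    secret = pow(peer_public, own_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


sender_private, sender_public = make_keypair()
recipient_private, recipient_public = make_keypair()

# Only the public values travel over the network, so the untrusted
# service in the middle never sees enough to compute the key.
sender_key = shared_key(sender_private, recipient_public)
recipient_key = shared_key(recipient_private, sender_public)
```

Both parties end up with the same key without it ever being transmitted, which is why the service operator can relay messages without being able to read them.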

So, can we apply a similar approach to serverless functions? Who is the sender and the recipient in this case? Looking back at the FaaS architecture diagram above, can we identify which actors need to be trusted?

The model is slightly more complicated as we need to consider different levels of trust - different actors need to be trusted with different data as is shown in this table.

| Actor | Data they are trusted with |
| --- | --- |
| User | Data they want to send to the service. Response data from the service. |
| Developer | The service code. |
| JavaScript Engine | The service code. The user's data. The response data. |
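The trust model in the table can also be written down as a small lookup structure. This is only a sketch to make the relationships explicit: the names `Data`, `TRUSTED_WITH` and `is_trusted_with` are hypothetical and do not come from any platform API.

```python
from enum import Enum, auto


class Data(Enum):
    USER_INPUT = auto()    # data the user sends to the service
    RESPONSE = auto()      # data returned by the service
    SERVICE_CODE = auto()  # the developer's function code


# Direct transcription of the table above.
TRUSTED_WITH = {
    "User": {Data.USER_INPUT, Data.RESPONSE},
    "Developer": {Data.SERVICE_CODE},
    "JavaScript Engine": {Data.SERVICE_CODE, Data.USER_INPUT, Data.RESPONSE},
}


def is_trusted_with(actor, data):
    """Actors missing from the table (for example the CSP) are trusted with nothing."""
    return data in TRUSTED_WITH.get(actor, set())
```

Written this way, it is easy to see that the JavaScript engine is the only actor trusted with every kind of data.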

There are a few things to note here. The developer is not trusted with the user's data and, vice versa, the user is not trusted with the developer's code. However, so that the user can trust that the developer's service performs the actions they expect, the developer may choose to trust the user with their code.

Finally, and most importantly, the actor with the highest level of trust is the JavaScript engine as this requires access to the code and the data. But how can we trust the JavaScript engine when it is running on untrusted infrastructure in a FaaS that is under the control of the cloud service provider? End-to-end encryption is not enough. We can certainly end-to-end encrypt the data between the actors, but if we cannot trust one of the 'ends', we have failed to add trust to the platform.

Confidential computing to the rescue

Let's take a look at what confidential computing offers us:

- Protection of confidentiality and integrity of data using Trusted Execution Environments (TEEs).

- Integrity of the code that is executing within the Trusted Execution Environment.

Trusted Execution Environments (TEEs) use hardware to provide a secure processor in which code and data are physically isolated from processes outside the TEE. There are a number of different vendors that provide TEE implementations, with the most relevant ones for server workloads being Intel's Software Guard Extensions (Intel SGX) and AMD's Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP).

When we write code to target a TEE, we call it an 'enclave'. When an enclave is running in the context of a TEE, we have assurance that the initial codebase of the enclave is a known, exact version and that any data processed in the enclave cannot be observed or modified from the outside. We can run our enclave on a server that supports TEEs in the cloud and still get these same protections, even in the presence of untrusted firmware or an untrusted kernel on the host server.

So, for our FaaS platform, as long as we create an enclave to run our JavaScript engine and we run it in the context of a TEE on the CSP's servers, we can expect that exactly the same version of code is executed, and that the user's data and the response are only accessible within the enclave itself.

Now that we know about enclaves, we can revisit the list above of areas that need to be hardened:

- Privacy of the Developer's code can be maintained by securely sending it to an enclave, which is designed not to disclose the service code to actors outside the enclave.

- Integrity of the Developer's service code can be maintained by the assured code integrity of the JavaScript engine. The JavaScript engine will only execute the service code if it has not been modified or tampered with.

- Privacy and integrity of user data can be maintained by only processing the data within the boundaries of the TEE hosting the enclave.

- Privacy and integrity of the result can be maintained by encrypting it within the TEE using a key accessible by the end user.

This makes the JavaScript Engine a trusted actor, right?

Actually - we are not quite there yet. Certainly, by running the JavaScript engine in an enclave we harden all the areas we identified. But how do we know that the (untrusted) FaaS platform provider is really running it inside a TEE? They may claim they are, but how do we know that?

Using attestation to prove integrity

The final piece of the puzzle is attestation - the evidence provided by the TEE to prove that an enclave is genuinely running within a hardened context. Without this, there is nothing to stop the FaaS platform from saying they are running inside a TEE when they are not, which would break the protection offered by a confidential computing platform.

Both Intel SGX and AMD SEV-SNP include architecture to provide the required attestation, giving cryptographic evidence bound to the CPU hardware that a genuine TEE is hosting a particular configuration of an enclave, including the initial code that is executing within the enclave. Further, a cryptographic public key can be bound to this attestation that allows any data encrypted with the key to be decrypted by that exact enclave running in a valid TEE.
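The shape of an attestation check can be sketched in a few lines. This is a simulation under loud assumptions: `HARDWARE_KEY`, `Report`, `produce_report` and `verify_report` are hypothetical names, and an HMAC stands in for the hardware-rooted signature purely so the example runs with the standard library. Real Intel SGX and AMD SEV-SNP attestation uses asymmetric signatures that are verified against the vendor's certificate chain, so a verifier never holds the signing key.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Stand-in for a key rooted in the CPU hardware (assumption for this sketch).
HARDWARE_KEY = b"simulated-hardware-root-key"


@dataclass(frozen=True)
class Report:
    measurement: bytes         # hash of the enclave's initial code
    enclave_public_key: bytes  # public key bound to this enclave
    signature: bytes           # evidence produced by the (simulated) hardware


def measure(enclave_code):
    """Hash the initial enclave code to obtain its measurement."""
    return hashlib.sha256(enclave_code).digest()


def _sign(measurement, enclave_public_key):
    message = measurement + enclave_public_key
    return hmac.new(HARDWARE_KEY, message, hashlib.sha256).digest()


def produce_report(enclave_code, enclave_public_key):
    """What the TEE would do: measure the code and sign the result."""
    measurement = measure(enclave_code)
    return Report(measurement, enclave_public_key,
                  _sign(measurement, enclave_public_key))


def verify_report(report, expected_measurement):
    """What a user or developer would do before trusting the enclave."""
    expected_signature = _sign(report.measurement, report.enclave_public_key)
    signature_ok = hmac.compare_digest(report.signature, expected_signature)
    code_ok = hmac.compare_digest(report.measurement, expected_measurement)
    return signature_ok and code_ok
```

Because the public key is covered by the signature, a verifier that accepts the report can encrypt data to that key knowing that only the attested enclave should be able to decrypt it.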

With all the confidential computing components in place we can finally build our hardened serverless platform.

Conclave Functions - a Hardened FaaS running on Azure

R3's Conclave Cloud is a platform for hosting privacy-preserving applications. It is built on top of R3's Conclave SDK, which in turn takes advantage of Intel SGX hosted on the Azure confidential computing infrastructure. Conclave Cloud gives customers the tools necessary to ensure that access to data is provided only to authorised parties.

The Conclave Cloud platform includes the Conclave Functions service which provides a realisation of a hardened Function as a Service platform as described in this article. When using Conclave Functions to host serverless applications, the application code is executed by a JavaScript engine running inside an enclave. The Intel SGX attestation service is used to provide users and developers with the tools to verify the integrity of the platform.

This diagram shows how all the concepts we have talked about fit together to provide this service.

Firstly, in order to gain trust in the JavaScript Engine, the Developer and User both need to obtain the attestation from the platform. This gives evidence that the platform is indeed using a valid set of TEEs and allows them to verify the code running inside the enclaves. The root of trust for Conclave Cloud is the gatekeeper for all the platform keys - the Key Derivation Service (KDS). By making the KDS run inside an enclave, keys will be securely released only to other approved enclaves running in valid TEEs, thus establishing a chain of trust.

When the Developer uploads code to the platform, the code is first encrypted using the public key. Only the JavaScript Engine enclave will be given access to the private key needed to decrypt it.

When the User wants to invoke a function, they also use the public key, this time to encrypt the data that is sent to the function for processing inside the JavaScript engine. Again, only the JavaScript Engine enclave will be given access to the private key to decrypt this. The data sent to the JavaScript engine also includes a signature - a cryptographic hash of the Developer's code that is expected to be running inside the JavaScript Engine. The JavaScript Engine will refuse to pass the data to the function if this signature differs from the actual code inside the engine, ensuring the code cannot be modified undetected by the Developer.
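The code hash check described above might look something like the following. This is a sketch only: `code_hash`, `invoke` and `CodeMismatchError` are hypothetical names, SHA-256 is an assumed choice of hash, and the decryption of the payload is left out. The `run` callable stands in for the JavaScript engine.

```python
import hashlib


class CodeMismatchError(Exception):
    """Raised when the loaded code is not the code the user expected."""


def code_hash(source):
    """Hash of the function source, computed the same way by user and engine."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def invoke(loaded_source, expected_hash, payload, run):
    """Pass the payload to the function only if the code hash matches."""
    if code_hash(loaded_source) != expected_hash:
        raise CodeMismatchError(
            "function code does not match the hash supplied by the user")
    return run(loaded_source, payload)


# Usage: the user computes the hash from the code they reviewed.
source = "function double(x) { return x * 2; }"
result = invoke(source, code_hash(source), 21, lambda src, x: x * 2)
```

If the code held by the engine changes in any way after the user computed their hash, `invoke` raises instead of handing over the data.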

When the JavaScript engine is invoked, it requests the private key from the KDS which verifies the attestation from the JavaScript Engine enclave to see if it is entitled to the private key before securely transferring it. Once in possession of the key, the JavaScript engine can run the function code and encrypt a result to send back to the User.
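As a rough illustration of this gatekeeping role, the sketch below models a service that releases a key only to approved, attested enclaves. Everything here is an assumption made for the example: the class and method names are hypothetical, the `attestation_ok` flag stands in for a real attestation verification, and a derived symmetric key stands in for the private key. It does not describe how the Conclave KDS is actually implemented.

```python
import hashlib
import hmac
import secrets


class KeyDerivationService:
    """Toy gatekeeper that releases keys only to approved enclaves."""

    def __init__(self, approved_measurements):
        # Master secret never leaves the service (which itself runs in an enclave).
        self._master = secrets.token_bytes(32)
        self._approved = set(approved_measurements)

    def release_key(self, measurement, attestation_ok, key_name):
        """Return the named key if the requesting enclave is entitled to it."""
        if not attestation_ok or measurement not in self._approved:
            raise PermissionError("enclave is not entitled to this key")
        # Deterministic derivation: the same name always yields the same key,
        # so data encrypted earlier can still be decrypted later.
        return hmac.new(self._master, key_name.encode("utf-8"),
                        hashlib.sha256).digest()


engine_measurement = hashlib.sha256(b"js-engine-v1").digest()
kds = KeyDerivationService({engine_measurement})
key = kds.release_key(engine_measurement, True, "function-key")
```

An enclave with an unknown measurement, or one whose attestation fails verification, gets a `PermissionError` rather than a key.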

Try it out!

All of this is available today! You can create a free Beta account to try Conclave Cloud here, and experience a hardened serverless platform for yourself taking advantage of infrastructure provided by Azure confidential computing!

For a demo, please check out the Conclave webinar.

For more information, please check out the documentation.