This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Steps for installing third-party .whl packages into DEP-enabled Azure Synapse Spark instances

Installing third-party .whl packages into a DEP-enabled (data exfiltration protection) Azure Synapse Spark instance can be challenging.

According to the documentation, https://learn.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-azure-portal-add-libraries#pool-packages installing packages from PyPI is not supported within DEP-enabled workspaces. Because the cluster cannot reach PyPI to resolve dependencies, uploading only the .whl package is not enough. We need to upload all of its dependencies along with the .whl package, which makes this an offline installation. Synapse Spark clusters also come with built-in packages, so we may run into conflicts when we try to install some third-party packages.

This document shows how to prepare a .whl package together with its dependencies and upload it successfully to the Spark cluster. The package used as an example here is azure-storage-file-datalake.

The PyPI link: azure-storage-file-datalake · PyPI

The steps are as follows:

- First, we need to create a VM with internet access.

Keep in mind that for Spark 3.1 and 3.2 the OS should be Ubuntu 18.04, and for Spark 2.4 the OS should be Ubuntu 16.04. This is critical: if the OS is different, the .whl packages that pip selects will be different, and we will face issues when uploading them to our Spark clusters.

Please refer to:

Azure Synapse Runtime for Apache Spark 3.2 - Azure Synapse Analytics | Microsoft Learn

Azure Synapse Runtime for Apache Spark 3.1 - Azure Synapse Analytics | Microsoft Learn

Azure Synapse Runtime for Apache Spark 2.4 - Azure Synapse Analytics | Microsoft Learn
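As a quick sanity check of the OS requirement above, the following is a minimal sketch that compares the contents of the VM's `/etc/os-release` file with the Ubuntu release this post lists for each Spark version. The function names and the mapping are illustrative assumptions, not part of any Synapse tooling.

```python
# Ubuntu release expected for each Synapse Spark runtime, as stated in this post.
EXPECTED_UBUNTU = {"3.1": "18.04", "3.2": "18.04", "2.4": "16.04"}


def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')
    return fields


def vm_matches_runtime(os_release_text, spark_version):
    """Return True if the OS is the Ubuntu release expected for spark_version."""
    expected = EXPECTED_UBUNTU.get(spark_version)
    if expected is None:
        return False  # Spark version not covered by this post
    fields = parse_os_release(os_release_text)
    return fields.get("ID") == "ubuntu" and fields.get("VERSION_ID") == expected
```

On the VM, you could pass the text read from `/etc/os-release` together with the Spark version of your pool, for example `vm_matches_runtime(open("/etc/os-release").read(), "3.2")`.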

- Then create a file named Synapse-Python38-CPU.yml (for Spark 3.1 and 3.2), which has been attached here.

- Now run the commands below in the VM (you can also put them in a script):

#Downloading & running the miniforge shell script

wget https://github.com/conda-forge/miniforge/releases/download/4.9.2-7/Miniforge3-Linux-x86_64.sh

sudo bash Miniforge3-Linux-x86_64.sh -b -p /usr/lib/miniforge3

#Exporting to the Path variable to use conda

export PATH="/usr/lib/miniforge3/bin:$PATH"

#Install the gcc g++

sudo apt-get -yq install gcc g++

#Creating the conda environment and activating it

conda env create -n synapse-env -f Synapse-Python38-CPU.yml

source activate synapse-env

- Create a file named input-user-req.txt and list the third-party wheel packages you need, one per line. For example, for Azure Data Lake, add the line below:

azure-storage-file-datalake

- Run the command below to install the packages and capture the pip output, which identifies the dependent packages:

pip install -r input-user-req.txt > pip_output.txt

- Push the list of dependent .whl packages into a file named Files.txt:

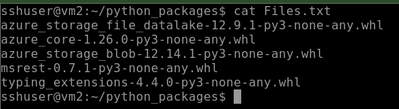

cat pip_output.txt | grep -E "Downloading *" | awk -F" " '{print $2}' > Files.txt

If this is not a fresh pip install, some packages may already have been downloaded earlier, and pip will report them as cached instead of downloading them again. To append those cached packages to the list, run the command below:

cat pip_output.txt | grep -E "Using cached *" | awk -F" " '{print $3}'>>Files.txt

- You will find the list of dependent wheel files that you need to download
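For readers who prefer to do this filtering in Python, here is a minimal sketch of the same extraction as the two grep/awk commands above. The function name is a hypothetical helper, and unlike grep it only matches lines whose first words are "Downloading" or "Using cached", which is how pip normally prints them.

```python
def extract_package_files(pip_output):
    """Return the file names pip reported as downloaded or taken from its cache."""
    files = []
    for line in pip_output.splitlines():
        tokens = line.split()  # like awk, ignores leading whitespace
        if len(tokens) >= 2 and tokens[0] == "Downloading":
            files.append(tokens[1])  # awk's $2
        elif len(tokens) >= 3 and tokens[:2] == ["Using", "cached"]:
            files.append(tokens[2])  # awk's $3
    return files
```

Calling it with the text of pip_output.txt and writing the result out one name per line gives a file equivalent to Files.txt, with downloaded and cached entries kept in the order pip printed them.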

- To download the packages, I used the Python code below. This is optional, and you are free to do it any other way.

Python code:

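import subprocess

import sys

# Read the package file names, one per line, from the file passed as the first argument

with open(sys.argv[1], "r") as f:

    for line in f:

        # The text before the first '-' in the file name is the package name

        package = line.strip().split('-')[0]

        if package:

            subprocess.check_call([sys.executable, '-m', 'pip', 'download', '--no-deps', package])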
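One caveat with the script above: it passes only the package name to pip, so pip downloads the newest release it finds, which may differ from the version recorded in Files.txt. The following is a minimal sketch of a helper (the name is hypothetical) that turns a file name into a pinned `name==version` requirement. It assumes the file names follow the standard wheel and source-archive naming conventions.

```python
def requirement_from_filename(filename):
    """Turn a wheel or source-archive file name into a 'name==version' string."""
    if filename.endswith(".whl"):
        # Wheel names start with {distribution}-{version}-...
        name, version = filename.split("-")[:2]
        return f"{name}=={version}"
    for ext in (".tar.gz", ".zip"):
        if filename.endswith(ext):
            # Source archives are {name}-{version}{ext}; the name may contain '-'.
            name, version = filename[: -len(ext)].rsplit("-", 1)
            return f"{name}=={version}"
    raise ValueError(f"Unrecognized package file name: {filename}")
```

The returned string could be passed to `pip download --no-deps` in place of the bare package name, so that the downloaded file matches the version pip resolved during the install step.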

- Once you have downloaded the packages, you can push them to Azure Storage or directly to the Azure Synapse workspace.

Please refer to: Manage packages outside Synapse Analytics Studio UIs - Azure Synapse Analytics | Microsoft Learn to automate the process through either PowerShell or REST API calls.

Apart from this, please check the links below to upload packages manually to Azure Synapse:

Manage workspace libraries for Apache Spark - Azure Synapse Analytics | Microsoft Learn

Manage Spark pool level libraries for Apache Spark - Azure Synapse Analytics | Microsoft Learn

- At the end of these steps, you should find that the package is installed in your Synapse Spark cluster.

A few more points to keep in mind:

There are a few things to keep in mind when you debug or troubleshoot package installation.

- Uploading the same package twice to the same Spark cluster causes an error. Look for any file name ending in (1).whl or (any numeric digit).whl, which indicates a duplicate copy. Upload the other packages without that file and the upload should succeed.

- A few packages are only available as .tar.gz files, and these fail during installation. Build those files in the VM to create a .whl file from each of them. Once you have the .whl file, the installation will succeed.
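The two troubleshooting points above can be sketched in Python as follows. Both helper names and the default `wheels` output directory are illustrative assumptions. The first helper flags file names that look like duplicate downloads, and the second builds a `pip wheel` command that converts a .tar.gz source archive into a .whl file on the VM.

```python
import re
import sys

# Matches names such as "pkg-1.0-py3-none-any (1).whl" or "pkg-1.0-py3-none-any(2).whl".
DUPLICATE_SUFFIX = re.compile(r"\s*\(\d+\)\.whl$")


def find_duplicate_wheels(filenames):
    """Return the file names that end with a '(digits).whl' duplicate marker."""
    return [name for name in filenames if DUPLICATE_SUFFIX.search(name)]


def build_wheel_command(sdist_path, wheel_dir="wheels"):
    """Return a pip command that builds a wheel from a source archive."""
    return [
        sys.executable, "-m", "pip", "wheel",
        "--no-deps",              # build only this package, not its dependencies
        "--wheel-dir", wheel_dir,  # where the resulting .whl is written
        sdist_path,
    ]
```

The command list can be run with `subprocess.check_call(...)` inside the activated conda environment, so that the wheel is built against the same Python version as the Spark pool.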

Another approach is to create a custom conda channel, but that requires a public Azure storage account. If you want to explore that path, please refer to: Create custom Conda channel for package management - Azure Synapse Analytics | Microsoft Learn.