This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Today, I worked on a performance case where our customer got the following error message: ('40001', '[40001] [Microsoft][ODBC Driver 17 for SQL Server][SQL Server]Transaction (Process ID NNN) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction. (1205) (SQLExecute)').

Following I would like to share with you how we found out the problem, solved and improve it.

First, we have the following Python code:

import csv

import pyodbc

import threading

import os

import datetime

SQL_server = 'tcp:servername.database.windows.net,1433'

SQL_database = 'DatabaseName'

SQL_user = 'username'

SQL_password = 'Password'

filepath = 'C:\SourceCode\\PYTHON'

filelog = filepath + '\\Error.log'

chunksize = 10000

class ThreadsOrder:

Threads = []

def Clean(self,NumberThreads):

for t in range(0,NumberThreads):

self.Threads.append(0)

def Available(self):

slot=-1

for t in self.Threads:

slot+= 1

if( t == 0 ):

self.ChangeStatus(slot,1)

return slot

return -1

def ExecuteSQL(self,n,a):

TExecutor = threading.Thread(target=ExecuteSQLcc,args=(a,n,))

TExecutor.name = "Process - " + str(n)

TExecutor.start()

def ChangeStatus(self,n,value):

threading.Lock()

SaveResults('Thread ' + str(n) + ' changed to ' + str(value),False)

self.Threads[n]=value

def SaveResults( Message, bSaveFile):

try:

print(Message)

if (bSaveFile==True):

t = datetime.datetime.now()

file_object = open(filelog, "a")

file_object.write(datetime.datetime.strftime(t, '%d/%m/%y %H:%M:%S') + '-' + Message + '\n' )

file_object.close()

except BaseException as e:

print('And error occurred - ' , format(e))

def ExecuteSQLcc(a,n):

try:

pThreadOrder.ChangeStatus(n,-2)

cnxn1 = pyodbc.connect("DRIVER={ODBC Driver 17 for SQL Server};SERVER=" + SQL_server + ";DATABASE=" + SQL_database + ";UID=" +SQL_user+';PWD='+ SQL_password, autocommit=False)

cursor = cnxn1.cursor()

cursor.fast_executemany = True

cursor.executemany("MERGE INTO [dbo].[test_data] AS T USING (SELECT ? as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?);",a)

cursor.commit()

cnxn1.close()

except BaseException as e:

SaveResults('Executing SQL - an error occurred - ' + format(e),True)

finally:

pThreadOrder.ChangeStatus(n,0)

def ExecuteSQLLimpia():

try:

SaveResults('Truncating the table',True)

cnxn1 = pyodbc.connect("DRIVER={ODBC Driver 17 for SQL Server};SERVER=" + SQL_server + ";DATABASE=" + SQL_database + ";UID=" +SQL_user+';PWD='+ SQL_password, autocommit=False)

cursor = cnxn1.cursor()

cursor.execute("TRUNCATE TABLE [test_data]")

cursor.commit()

cnxn1.close()

except BaseException as e:

SaveResults('Truncating - an error occurred - ' + format(e),True)

pThreadOrder = ThreadsOrder()

pThreadOrder.Clean(100)

##ExecuteSQLLimpia()

nThread=0

line_total = 0

for directory, subdirectories, files in os.walk(filepath):

for file in files:

name, ext = os.path.splitext(file)

if ext == '.csv':

a=[]

line_count = 0

SaveResults('Reading the file ' + name ,True)

with open(os.path.join(directory,file), mode='r') as csv_file:

csv_reader = csv.reader(csv_file, delimiter=',')

for row in csv_reader:

line_count+= 1

line_total+=1

if line_count>1:

row.append(row[0])

a.append(row)

if (line_count%chunksize)==0:

nThread = pThreadOrder.Available()

while(nThread==-1):

nThread = pThreadOrder.Available()

print('Waiting for available Thread ' + str(line_total))

SaveResults('Review Thread ' + str(nThread) + ' process rows ' + str(line_count),True)

pThreadOrder.ExecuteSQL(nThread,a)

a=[]

if(a.count!=0):

pThreadOrder.ExecuteSQL(nThread,a)

SaveResults('Processed ' + str(line_count) + ' lines for the file ' + name, True)

- This Python code is reading a csv file that contains 23 columns.

- Every 10000 rows read of this csv file, Python will execute in another thread (up to 100 threads at the same time) a merge TSQL command with the following objetive:

- Verify if the Key exists already in the table.

- If YES, update the column duplicate

- if NO, insert the information in the table.

Definition of the table

/****** Object: Table [dbo].[test_data] Script Date: 02/01/2023 17:36:34 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TABLE [dbo].[test_data](

[Key] [int] NOT NULL,

[Num_TEST] [int] NULL,

[TEST_01] [varchar](6) NULL,

[TEST_02] [varchar](6) NULL,

[TEST_03] [varchar](6) NULL,

[TEST_04] [varchar](6) NULL,

[TEST_05] [varchar](6) NULL,

[TEST_06] [varchar](6) NULL,

[TEST_07] [varchar](6) NULL,

[TEST_08] [varchar](6) NULL,

[TEST_09] [varchar](6) NULL,

[TEST_10] [varchar](6) NULL,

[TEST_11] [varchar](6) NULL,

[TEST_12] [varchar](6) NULL,

[TEST_13] [varchar](6) NULL,

[TEST_14] [varchar](6) NULL,

[TEST_15] [varchar](6) NULL,

[TEST_16] [varchar](6) NULL,

[TEST_17] [varchar](6) NULL,

[TEST_18] [varchar](6) NULL,

[TEST_19] [varchar](6) NULL,

[TEST_20] [varchar](6) NULL,

[Duplicate] [bit] NULL

) ON [PRIMARY]

GO

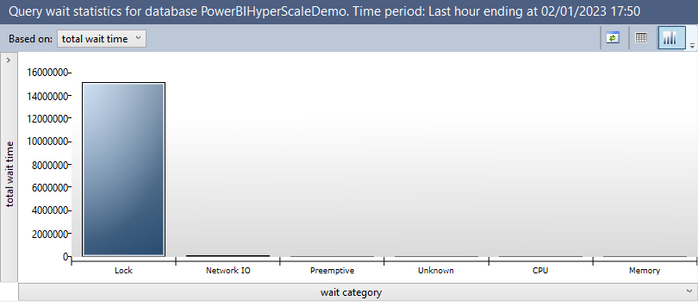

We analyzed what is the dominant wait stat, as expected, the wait stats is Lock:

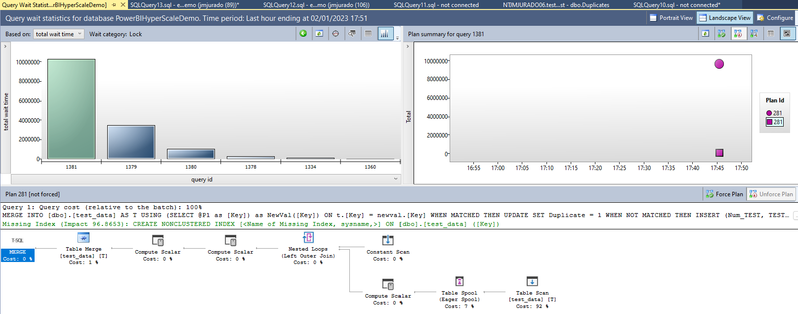

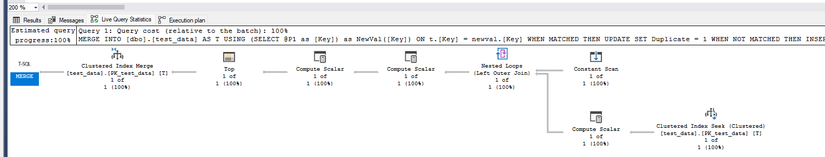

And the query and execution plan is:

declare @p1 int

set @p1=NULL

exec sp_prepexec @p1 output,N'@P1 nvarchar(10),@P2 nvarchar(2),@P3 nvarchar(12),@P4 nvarchar(12),@P5 nvarchar(1),@P6 nvarchar(1),@P7 nvarchar(1),@P8 nvarchar(1),@P9 nvarchar(1),@P10 nvarchar(1),@P11 nvarchar(1),@P12 nvarchar(1),@P13 nvarchar(1),@P14 nvarchar(1),@P15 nvarchar(1),@P16 nvarchar(1),@P17 nvarchar(1),@P18 nvarchar(1),@P19 nvarchar(1),@P20 nvarchar(1),@P21 nvarchar(1),@P22 nvarchar(1),@P23 nvarchar(10)',N'MERGE INTO [dbo].[test_data] AS T USING (SELECT @P1 as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(@P2,@P3,@P4,@P5,@P6,@P7,@P8,@P9,@P10,@P11,@P12,@P13,@P14,@P15,@P16,@P17,@P18,@P19,@P20,@P21,@P22,@P23);',N'30000',N'2',N'D33579',N'D62074',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'',N'30000'

select @p1

MERGE INTO [dbo].[test_data] AS T USING (SELECT @P1 as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(@P2,@P3,@P4,@P5,@P6,@P7,@P8,@P9,@P10,@P11,@P12,@P13,@P14,@P15,@P16,@P17,@P18,@P19,@P20,@P21,@P22,@P23);

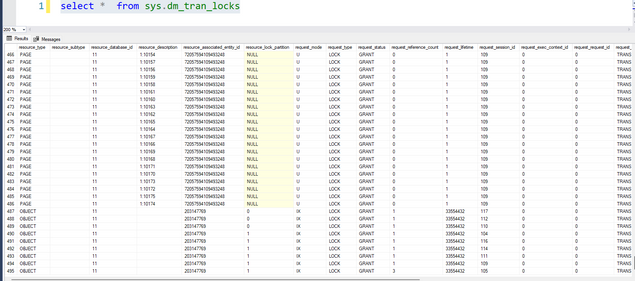

Using select * from sys.dm_tran_locks we could see the reason of the deadlock: we have transactions with a lock in between the updates of others in a single transaction.

- I saw several things to work:

- Why we have a table scan with 92%?

- We saw that our customer is using a HeapTable without any index, to find out the value. So, in this case, we are going to create an index for that, reducing and avoid any table scan and deadlock for resources.

- We could create a nonclustered index maintaining the heap table or

- We could create a clustered index.

- In this case, after creating a clustered index.

- We saw that our customer is using a HeapTable without any index, to find out the value. So, in this case, we are going to create an index for that, reducing and avoid any table scan and deadlock for resources.

- Why we have a table scan with 92%?

- Why the parameters are nvarchar when the table structure is varchar and Why we have a convert implicit seeking the key.

- I found that Python3 are sending all the parameters in Unicode, so, converting the values in the query to the right format, I could save a few miliseconds per query.

def ExecuteSQLcc(a,n):

try:

pThreadOrder.ChangeStatus(n,-2)

cnxn1 = pyodbc.connect("DRIVER={ODBC Driver 17 for SQL Server};SERVER=" + SQL_server + ";DATABASE=" + SQL_database + ";UID=" +SQL_user+';PWD='+ SQL_password, autocommit=False)

cursor = cnxn1.cursor()

cursor.fast_executemany = True

cursor.executemany("MERGE INTO [dbo].[test_data] AS T USING (SELECT CONVERT(int,?) as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(CONVERT(int,?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(int,?));",a)

cursor.commit()

cnxn1.close()

except BaseException as e:

SaveResults('Executing SQL - an error occurred - ' + format(e),True)

finally:

pThreadOrder.ChangeStatus(n,0)declare @p1 int

set @p1=null

exec sp_prepexec @p1 output,N'@P1 nvarchar(10),@P2 nvarchar(4),@P3 nvarchar(12),@P4 nvarchar(12),@P5 nvarchar(12),@P6 nvarchar(12),@P7 nvarchar(12),@P8 nvarchar(12),@P9 nvarchar(12),@P10 nvarchar(12),@P11 nvarchar(12),@P12 nvarchar(12),@P13 nvarchar(12),@P14 nvarchar(12),@P15 nvarchar(12),@P16 nvarchar(12),@P17 nvarchar(12),@P18 nvarchar(12),@P19 nvarchar(12),@P20 nvarchar(12),@P21 nvarchar(12),@P22 nvarchar(12),@P23 nvarchar(10)',N'MERGE INTO [dbo].[test_data] AS T USING (SELECT CONVERT(int,@P1) as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(CONVERT(int,@P2),convert(varchar(6),@P3),convert(varchar(6),@P4),convert(varchar(6),@P5),convert(varchar(6),@P6),convert(varchar(6),@P7),convert(varchar(6),@P8),convert(varchar(6),@P9),convert(varchar(6),@P10),convert(varchar(6),@P11),convert(varchar(6),@P12),convert(varchar(6),@P13),convert(varchar(6),@P14),convert(varchar(6),@P15),convert(varchar(6),@P16),convert(varchar(6),@P17),convert(varchar(6),@P18),convert(varchar(6),@P19),convert(varchar(6),@P20),convert(varchar(6),@P21),convert(varchar(6),@P22),convert(int,@P23));',N'99739',N'20',N'D23063',N'D92294',N'D31858',N'D58259',N'D25084',N'D38396',N'D60646',N'D54678',N'D61377',N'D35637',N'D89828',N'D44396',N'D26608',N'D71205',N'D91092',N'D43897',N'D32687',N'D12671',N'D36501',N'D90202',N'99739'

select @p1

- Finally, in order to improve the performance more, we suggested to use an explicit conversion in the code:

def ExecuteSQLcc(a,n):

try:

pThreadOrder.ChangeStatus(n,-2)

cnxn1 = pyodbc.connect("DRIVER={ODBC Driver 17 for SQL Server};SERVER=" + SQL_server + ";DATABASE=" + SQL_database + ";UID=" +SQL_user+';PWD='+ SQL_password, autocommit=False)

cursor = cnxn1.cursor()

cursor.fast_executemany = True

##cursor.executemany("MERGE INTO [dbo].[test_data] AS T USING (SELECT CONVERT(int,?) as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?);",a)

cursor.executemany("MERGE INTO [dbo].[test_data] AS T USING (SELECT CONVERT(int,?) as [Key]) as NewVal([Key]) ON t.[Key] = newval.[Key] WHEN MATCHED THEN UPDATE SET Duplicate = 1 WHEN NOT MATCHED THEN INSERT (Num_TEST, TEST_01, TEST_02, TEST_03, TEST_04, TEST_05, TEST_06, TEST_07, TEST_08, TEST_09, TEST_10, TEST_11, TEST_12, TEST_13, TEST_14, TEST_15, TEST_16, TEST_17, TEST_18, TEST_19, TEST_20, [Key]) values(CONVERT(int,?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(varchar(6),?),convert(int,?));",a)

cursor.commit()

cnxn1.close()

except BaseException as e:

SaveResults('Executing SQL - an error occurred - ' + format(e),True)

finally:

pThreadOrder.ChangeStatus(n,0)

exec sp_execute 1,89991,10,'D46524','D57566','D69960','D86195','D20493','D17178','D40703','D60016','D24064','D69131','','','','','','','','','','',89991

Right now, we reduced a lot the performance but, our customer needs to reduce more the time, in this case, as all threads in SQL Server is SOS_SCHEDULER_YIELD and runable, means that we need more CPUs, in this case, we changed from 2 vCores to 4 vCores and we reduced this time.

SELECT

substring(REPLACE(REPLACE(SUBSTRING(ST.text, (req.statement_start_offset/2) + 1, (

(CASE statement_end_offset WHEN -1 THEN DATALENGTH(ST.text) ELSE req.statement_end_offset END

- req.statement_start_offset)/2) + 1) , CHAR(10), ' '), CHAR(13), ' '), 1, 512) AS statement_text

,req.database_id

,sess.program_name

,req.session_id

, sysproc.ecid

,sysproc.status

,sysproc.waittime

,sysproc.lastwaittype

, sysproc.cpu

, sysproc.sql_handle

, req.cpu_time 'cpu_time_ms'

, req.status

, wait_time

, wait_resource

, wait_type

, last_wait_type

, req.total_elapsed_time

, total_scheduled_time

, req.row_count as [Row Count]

, command

, scheduler_id

, memory_usage

, req.writes

, req.reads

, req.logical_reads, blocking_session_id

FROM sys.dm_exec_requests AS req

inner join sys.dm_exec_sessions as sess on sess.session_id = req.session_id

inner join sys.sysprocesses as sysproc on sess.session_id = sysproc.spid

CROSS APPLY sys.dm_exec_sql_text(req.sql_handle) as ST

where req.session_id <> @@SPID and sysproc.status <> 'background'

order by sess.session_id, sysproc.ecid

Enjoy!