This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

The financial services industry (FSI) is one of the largest consumers of HPC environments in the cloud. To evaluate the performance of these systems, it is important to use benchmarks that accurately reflect the workloads they run. In this article, we benchmark the virtual machine types most commonly used for these environments in Azure, using benchmarks relevant to the industry.

One of the key considerations when choosing an HPC benchmark test is the type of workload that the system will be expected to handle. Different HPC applications have different computational needs, so it is important to select a benchmark test that reflects the specific requirements of the system. For example, a system that will be used for scientific simulations may require different benchmark tests than a system that will be used for data analytics or financial modeling.

The Azure HPC product group has produced many articles comparing the virtual machine options, or SKUs, across a variety of benchmarks. (The latest one covered the upcoming AMD-based HBv4 and HX options.) In general, these articles have focused on tightly coupled real-world HPC workloads, such as computational fluid dynamics (CFD, for example Ansys Fluent, OpenFOAM, or Star-CCM+), finite element analysis (FEA, for example Altair RADIOSS), and even weather modeling with WRF. For HPC professionals, having benchmarks that demonstrate their workloads scaling up to 80k cores is unique within the industry. However, one industry not typically covered to date is financial services. FSI is one of the most demanding industries in terms of computational power and data storage, with high expectations for uptime, availability, and performance.

In some cases, the general assumption is that any general purpose (GP) SKU would be good enough. However, in my role as a Global Black Belt for FSI in the Americas at Microsoft, we have done this analysis for both customers and service providers offering HPC as a Service (HPCaaS) to their own customers. Selecting the right SKU can have a large impact not only on performance but also on memory-to-core ratio and on cost, where cost per core hour is important. In some organizations, local disk performance is a major determinant of overall workload speed.

First and foremost, Microsoft recommends testing your specific workloads and code against a variety of SKUs; we offer these sample benchmark results to guide your choices. Your application might scale differently than these sample results indicate.

Most popular Azure VM SKUs to run FSI HPC Workloads:

| VM Name | HC44rs | HB120rs_v3 | HB120rs_v2 | D64ds_v5 | D64ads_v5 |
| --- | --- | --- | --- | --- | --- |
| Number of pCPUs | 44 (constrained-core options: 16, 32) | 120 (constrained-core options: 16, 32, 64, 96) | 120 (constrained-core options: 16, 32, 64, 96) | 32 | 32 |
| InfiniBand | 100 Gb/s EDR | 200 Gb/s HDR | 200 Gb/s HDR | N/A | N/A |
| Processor | Intel Xeon Platinum 8168 | AMD EPYC 7V73X (“Milan-X”) | AMD EPYC 7742 | Intel® Xeon® Platinum 8370C (Ice Lake) | AMD EPYC 7763v |
| Peak CPU Frequency | 3.70 GHz | 3.5 GHz | 3.4 GHz | 3.5 GHz | 3.5 GHz |
| RAM per VM | 352 GB | 448 GB | 456 GB | 256 GB | 256 GB |
| RAM per core | 8 GB | 3.75 GB | 3.8 GB | 8 GB | 8 GB |
| Memory B/W per core | 4.3 GB/s | 5.25 GB/s | 2.9 GB/s | 4.26 GB/s | 4.26 GB/s |
| L3 Cache per VM | 33 MB | 768 MB | 256 MB | 48 MB | 256 MB |
| L3 Cache per core | 0.9 MB | 6.4 MB | 2.1 MB | 1 MB | 5.3 MB |
| Attached Disk | 1 x 700 GB NVMe | 2 x 0.9 TB NVMe | 1 x 0.9 TB NVMe | 2400 GB SSD | 2400 GB SSD |

Table 1: Technical specifications of FSI HPC VM SKUs
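The memory-to-core ratio in Table 1 is simple arithmetic, and it is worth recomputing when a constrained-core size is under consideration. The sketch below uses the RAM and core counts from the table and assumes that a constrained-core size keeps the full VM memory while exposing fewer cores; the function name `ram_per_core` is illustrative only.

```python
# RAM per VM (GB) and physical core counts, copied from Table 1.
RAM_GB = {
    "HC44rs": 352,
    "HB120rs_v3": 448,
    "HB120rs_v2": 456,
    "D64ds_v5": 256,
    "D64ads_v5": 256,
}
PCPUS = {
    "HC44rs": 44,
    "HB120rs_v3": 120,
    "HB120rs_v2": 120,
    "D64ds_v5": 32,
    "D64ads_v5": 32,
}


def ram_per_core(sku, active_cores=None):
    """Return GB of RAM per active physical core.

    active_cores defaults to the full core count of the SKU. Passing a
    smaller number models a constrained-core size (assumption: the VM keeps
    all of its memory while exposing fewer cores).
    """
    cores = PCPUS[sku] if active_cores is None else active_cores
    if not 0 < cores <= PCPUS[sku]:
        raise ValueError(f"active_cores must be between 1 and {PCPUS[sku]}")
    return RAM_GB[sku] / cores


for sku in RAM_GB:
    print(f"{sku:12s} {ram_per_core(sku):5.2f} GB/core")

# Constrained-core example: 32 of the 120 cores active on HB120rs_v3.
print(f"HB120rs_v3 with 32 cores: {ram_per_core('HB120rs_v3', 32):.2f} GB/core")
```

For memory-hungry single-threaded jobs, the constrained-core figure is often the more relevant one, since it shows how much memory each concurrently running job can actually claim.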

Important note: Most GP VM options in Azure (for example Dv3, Dv4, Ddsv5, and Dasv5) have hyperthreading enabled. Hyperthreading is generally discouraged for most HPC workloads because the underlying physical cores, not the logical threads, are the bottleneck, and excess operations can lead to paging. I recommend that most customers create a support ticket to disable hyperthreading for the VMs and Virtual Machine Scale Sets (VMSS) running their HPC environments.
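To see whether a given Linux VM exposes more logical CPUs than physical cores, one option is to count both from `/proc/cpuinfo`. The following is a minimal sketch, assuming an x86 Linux guest whose `/proc/cpuinfo` reports `physical id` and `core id` fields; the helper name `count_cores` and the sample text are illustrative, not part of any Azure tooling.

```python
from pathlib import Path


def count_cores(cpuinfo_text):
    """Return (logical_cpus, physical_cores) from /proc/cpuinfo-style text."""
    logical = 0
    physical = set()
    socket_id = None
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1  # one entry per logical CPU
        elif key == "physical id":
            socket_id = value  # socket that the current entry belongs to
        elif key == "core id":
            physical.add((socket_id, value))  # unique (socket, core) pairs
    return logical, len(physical)


# Illustrative sample: 4 logical CPUs sharing 2 physical cores on 1 socket.
SAMPLE = """\
processor : 0
physical id : 0
core id : 0

processor : 1
physical id : 0
core id : 0

processor : 2
physical id : 0
core id : 1

processor : 3
physical id : 0
core id : 1
"""

print(count_cores(SAMPLE))

# On a real Linux VM, inspect the actual topology if the file is present.
cpuinfo = Path("/proc/cpuinfo")
if cpuinfo.exists():
    print(count_cores(cpuinfo.read_text()))
```

If the logical count is twice the physical count, two threads share each core, and a scheduler configured with one job per logical CPU would place two jobs on every physical core.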

Second note: In most cases, these workloads are single-threaded, so these benchmarks effectively test the processor choice alone, not the scalability of a particular node type. In a production HPC scheduled job, HPC SKUs would run multiple instances of these tasks at a time.

Python-Based Benchmarks:

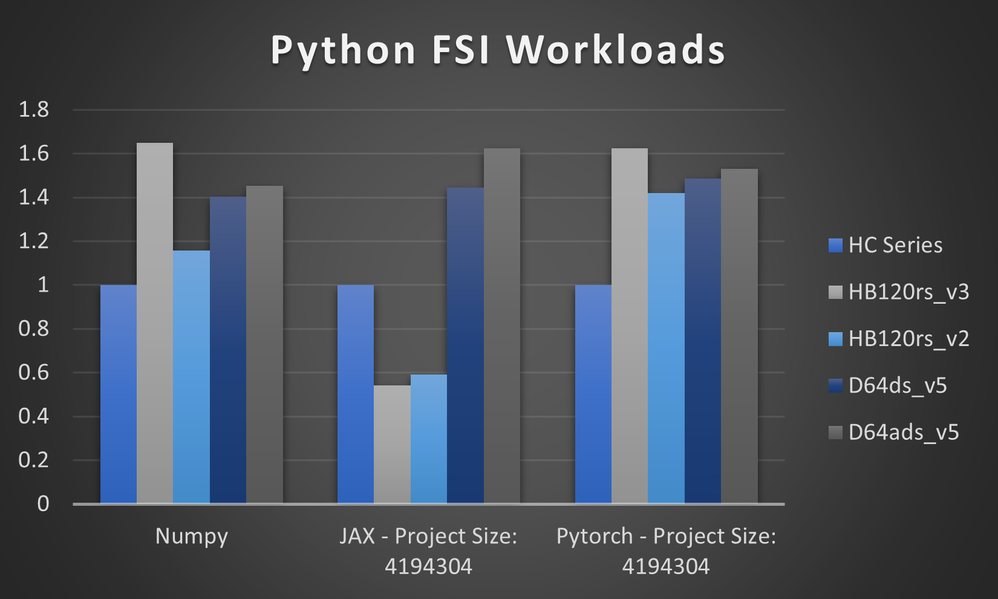

Many FinServ companies are using a variety of Python technologies to perform their calculations.

- NumPy is a Python library used for working with arrays. It has functions for linear algebra, Fourier transforms, and matrices. NumPy is often used to perform numerical calculations on financial data, such as estimating the value of derivatives or simulating the behavior of financial markets. (Single Threaded)

- PyTorch is a popular open-source deep learning framework developed by Facebook's AI Research lab. It is widely used for training and evaluating machine learning models in a variety of fields, including natural language processing and financial services. In the field of financial services, PyTorch has been used for a range of tasks, including credit risk modeling, fraud detection, and trading strategy optimization. As an aside, Azure cloud is the sole cloud with enterprise support for your PyTorch models. (Single Threaded)

- JAX is a Python library designed for high-performance ML research. At its core it is a numerical computing library, like NumPy, with some key improvements: it can target GPUs and TPUs as well as CPUs through the XLA (Accelerated Linear Algebra) compiler. It is often used in financial engineering for tasks such as time series forecasting, risk management, and algorithmic trading. (Single Threaded)

Figure 1 Python FSI Benchmarks, performance comparison to HC-Series

(taller line indicates comparatively better performance)
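As an illustration of the kind of NumPy calculation described above, here is a minimal sketch of a timed Monte Carlo estimate of a European call option price. It is not the workload behind Figure 1; the parameters (spot 100, strike 100, 5% rate, 20% volatility, one year, one million paths) and the function name `mc_european_call` are assumptions chosen only for the example.

```python
import time

import numpy as np


def mc_european_call(spot, strike, rate, vol, maturity, n_paths, seed=0):
    """Estimate a European call price by Monte Carlo under geometric Brownian motion."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(n_paths)
    # Terminal asset price for each simulated path.
    drift = (rate - 0.5 * vol**2) * maturity
    terminal = spot * np.exp(drift + vol * np.sqrt(maturity) * z)
    # Discounted average payoff.
    payoff = np.maximum(terminal - strike, 0.0)
    return float(np.exp(-rate * maturity) * payoff.mean())


start = time.perf_counter()
price = mc_european_call(100.0, 100.0, 0.05, 0.2, 1.0, n_paths=1_000_000)
elapsed = time.perf_counter() - start
print(f"price={price:.4f}  elapsed={elapsed:.3f}s")
```

With these inputs the estimate should land close to the analytic Black-Scholes-Merton value of about 10.45. The elapsed time is the number that would differ from one SKU to another, and repeating the run several times gives a steadier figure than a single measurement.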

Application Benchmarks:

In lieu of ISV-proprietary code, we will be benchmarking FinanceBench and QuantLib.

- FinanceBench is a suite of benchmarks developed by the HPC Advisory Council that focuses on evaluating the performance of computing systems for financial applications, such as risk analysis and portfolio management. Its test cases cover the Black-Scholes-Merton process with an analytic European option engine, the QMC (Sobol) Monte Carlo method (equity option example), a fixed-rate bond with a flat forward curve, and a securities repurchase agreement (repo). FinanceBench was originally written by the Cavazos Lab at the University of Delaware. (Does not scale across multiple cores)

- QuantLib is an open-source library and framework for quantitative finance, covering modeling, trading, and risk management scenarios. QuantLib is written in C++ with Boost, and the built-in benchmark used here reports the QuantLib Benchmark Index score. It is respected by the financial services industry (FSI) for its comprehensive collection of tools and algorithms for pricing and risk management of financial instruments, as well as its high level of performance and accuracy. (Does not scale across multiple cores)

Figure 2 Application FSI Benchmarks, performance comparison to HC-Series

(taller line indicates comparatively better performance)

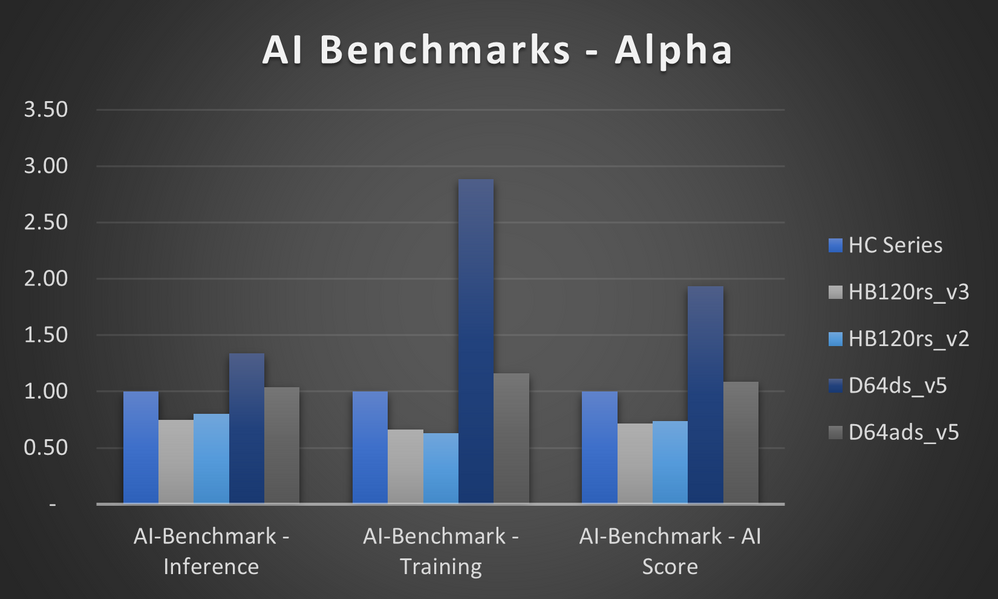
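For readers unfamiliar with the first FinanceBench test case, the analytic European option price under Black-Scholes-Merton has a closed form. The sketch below is a plain Python rendering of that textbook formula, not FinanceBench or QuantLib code; the function names and the sample inputs are assumptions made for illustration.

```python
from math import erf, exp, log, sqrt


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def bsm_price(spot, strike, rate, vol, maturity, kind="call"):
    """Closed-form Black-Scholes-Merton price of a European option (no dividends)."""
    d1 = (log(spot / strike) + (rate + 0.5 * vol**2) * maturity) / (vol * sqrt(maturity))
    d2 = d1 - vol * sqrt(maturity)
    discounted_strike = strike * exp(-rate * maturity)
    if kind == "call":
        return spot * norm_cdf(d1) - discounted_strike * norm_cdf(d2)
    if kind == "put":
        return discounted_strike * norm_cdf(-d2) - spot * norm_cdf(-d1)
    raise ValueError("kind must be 'call' or 'put'")


call = bsm_price(100.0, 100.0, 0.05, 0.2, 1.0, "call")
put = bsm_price(100.0, 100.0, 0.05, 0.2, 1.0, "put")
print(f"call={call:.4f}  put={put:.4f}")
```

Each evaluation is a handful of floating-point operations on one core, which is consistent with the note above that these benchmarks do not scale across multiple cores: throughput on a node comes from pricing many independent options at once.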

AI Benchmarks:

Generic AI benchmarks are still at the alpha stage of development. Certainly, specific AI-type codes, like CUDA, have benchmarks or points of comparison between machines, but generic industry-wide benchmarks are still in their infancy. The benchmark used here relies on the TensorFlow machine learning library. This workload does scale well to multiple cores.

Figure 3 AI *ALPHA* Benchmarks, performance comparison to HC-Series

(taller line indicates comparatively better performance)

Testing Methodology & Commentary:

Testing methodology and assumptions are the two important factors to consider in any benchmarking exercise. Both require knowing the application workload and assessing what value the benchmark will provide. In this article’s case, the primary use is a processor or SKU comparison between the options that exist in Azure, using calculations often done in FSI as representative analogues.

The testing methodology for this project is to benchmark the performance of the HPC workload against a baseline system, which in this case is the HC-Series high-performance SKU in Azure. The assumption is that an HPC grid would run this workload many times, often with a 1:1 job-to-physical-CPU-core ratio. Benchmarking was done on a single machine with a single CPU, which is not representative of a real-world environment. However, future tests may involve multiple systems, as in the notable STAC-A2 benchmark.
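Expressing each result relative to the HC-Series baseline, as the figures in this article do, comes down to a ratio whose direction depends on the metric. The sketch below shows one way to compute it; the function name `relative_performance` is an assumption, and the elapsed times are hypothetical placeholders, not measurements from this article.

```python
def relative_performance(results, baseline, higher_is_better):
    """Normalize per-SKU results so that the baseline SKU scores 1.0.

    For scores (higher is better) the ratio is value / baseline.
    For elapsed times (lower is better) the ratio is baseline / value,
    so a value above 1.0 always means "better than the baseline".
    """
    base = results[baseline]
    if base <= 0 or any(v <= 0 for v in results.values()):
        raise ValueError("results must be positive")
    if higher_is_better:
        return {sku: value / base for sku, value in results.items()}
    return {sku: base / value for sku, value in results.items()}


# Hypothetical elapsed times in seconds (NOT measured results).
elapsed_s = {"HC44rs": 10.0, "HB120rs_v3": 8.0, "D64ds_v5": 12.5}

relative = relative_performance(elapsed_s, "HC44rs", higher_is_better=False)
for sku, ratio in relative.items():
    print(f"{sku:12s} {ratio:.2f}x")
```

Inverting the ratio for time-based metrics keeps every chart readable the same way: a taller bar means better performance, regardless of whether the underlying benchmark reports a score or a duration.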

The baseline system for these benchmarks:

- Ubuntu 20.04 LTS Distro with latest hot fixes installed (Gen 2 Azure Image)

- Python 3.8.9

- Phoronix Test Suite 10.8.4 (latest)

Most testing data was written to the local ephemeral drives for the test. I also specifically excluded two GP SKU families from benchmarking, Fs_v2 and Dds_v4/Dads_v4, because they can be hosted on multiple processor types. Dds_v4 could be hosted on either Cascade Lake or Ice Lake processors, and there is no way to guarantee that an entire grid will be one or the other. In one case with the Fs_v2 SKU family, the number of cores per socket changed with the generation of Intel processor implemented, which affected the customer in question. This is not to say one shouldn’t use these SKUs for FSI grid workloads, only that one should be aware of the multiple supporting processor types.

One of the challenges of HPC benchmark testing is that the workloads these systems are expected to handle are often highly complex and dynamic, with multiple jobs running at the same time. As a result, HPC benchmark tests should be considered a starting point for evaluating the performance of a system, rather than the definitive measure of its capabilities. Benchmarking is also not a one-time activity, given the constant efforts to modernize the cloud and the ability to be dynamic with one’s workloads. For instance, Microsoft urges you to retest your benchmarks as new SKU families arrive, like AMD’s Genoa-X powered HBv4 and HX Series coming in 2023.

In conclusion, HPC benchmark testing is a valuable tool for evaluating the performance of high-performance computing systems. By selecting the appropriate benchmark test and running it in a controlled and consistent manner, it is possible to compare the performance of different systems and make informed decisions about which system is best suited to a particular workload. However, it is important to keep in mind that HPC benchmark tests are not a perfect reflection of real-world workloads, and other factors such as reliability and overall cost should also be considered when choosing an HPC system. Different organizations will have different priorities on where their key performance indicators are, like cost per job or elapsed time per job.
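A KPI such as cost per job can be estimated from a benchmark's elapsed time once a price is known. The sketch below assumes the 1:1 job-to-physical-core ratio described in the methodology, so a node runs as many jobs concurrently as it has cores; the function names, the hourly price, and the job duration are hypothetical placeholders, not Azure prices or measured results.

```python
def cost_per_job(price_per_hour, job_seconds, concurrent_jobs):
    """Estimate the cost of one job on a node running several jobs side by side."""
    if concurrent_jobs < 1:
        raise ValueError("concurrent_jobs must be at least 1")
    node_cost_for_job_duration = price_per_hour * (job_seconds / 3600.0)
    return node_cost_for_job_duration / concurrent_jobs


def jobs_per_hour(job_seconds, concurrent_jobs):
    """Estimate node throughput when every core runs one job at a time."""
    return concurrent_jobs * 3600.0 / job_seconds


# Hypothetical inputs: a 120-core node at 3.60 per hour, 100-second jobs.
example_cost = cost_per_job(price_per_hour=3.60, job_seconds=100.0, concurrent_jobs=120)
example_rate = jobs_per_hour(job_seconds=100.0, concurrent_jobs=120)
print(f"cost per job: {example_cost:.6f}  jobs per hour: {example_rate:.0f}")
```

A SKU that is slower per job can still come out ahead on cost per job if its hourly price is low enough, which is why both indicators are worth tracking side by side.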

#AzureHPCAI