This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Azure Database for MySQL is a relational database service in the Microsoft cloud based on the MySQL Community Edition database engine. MySQL is an advanced, feature-rich, open-source database system that’s popular with developers, used for a wide range of applications, and compatible with most popular application platforms.

Azure Database for MySQL – Flexible Server is the service deployment option that provides the most granular control and flexibility over database management functions and configuration settings. Many customers choose Azure Database for MySQL – Flexible Server to run their production workloads in Azure, and we continue to actively modernize our service to support different workloads at scale.

In this blog post, I’ll go over the various tools available for performing custom migrations from MySQL running on-premises, in Virtual Machines or a third-party cloud, or from Azure Database for MySQL – Single Server to Azure Database for MySQL – Flexible Server. I’ll also discuss complex scenarios to help in planning the migration and performing it with minimal issues.

Importance of benchmarking

There are many tools to choose from, and each has its own pros and cons – some work best for one scenario but perhaps not well in another. As a result, to recommend the best tool when migrating MySQL databases between platforms / clouds, it’s important to do some benchmark tests to better compare the tools. This information provides a solid baseline for selecting the best tool to address your specific needs while planning migrations.

Tools and their best practices (every tool and scenario). Parameter/tuning used for the tool.

MyDumper/MyLoader

MyDumper/MyLoader are MySQL Logical Backup/Restore Tools which are two tools in one package. MyDumper is responsible for exporting a consistent backup of MySQL databases. MyLoader reads the backup from MyDumper, connects to the destination database, and imports the backup. Both tools use multi-threading/parallel processing capabilities.

URL - https://github.com/mydumper/mydumper

MySQLSh

MySQLSh is a powerful tool that provides scripting capabilities for JavaScript and Python and includes APIs for working with MySQL. It also provides parallel dumping with multiple threads, file compression, and progress information display and MySQL Database Service compatibility checks and modifications. Dumps can be easily imported into a MySQL Server instance or a MySQL Database Service DB System using the MySQL Shell load dump utilities.

URL - https://dev.mysql.com/doc/mysql-shell/8.0/en/

MySQLPump

The mysqlpump client utility performs logical backups, producing a set of SQL statements that can be executed to reproduce the original database object definitions and table data. It dumps one or more MySQL databases for backup or transfer to another MySQL Server. It can dump DB in parallel and it is an excellent tool for backup.

URL - https://dev.mysql.com/doc/refman/8.0/en/mysqlpump.html

MySQLDump

The mysqldump client utility performs logical backups, producing a set of SQL statements that can be executed to reproduce the original database object definitions and table data. It dumps one or more MySQL databases for backup or transfer to another MySQL server. The mysqldump command can also generate output in CSV, other delimited text, or XML format.

URL - https://dev.mysql.com/doc/refman/8.0/en/mysqldump.html

Benchmarking commands

The benchmarking we performed with variety of scenarios covering small to large databases in size such as 90 GB to 1Tb and simple to complex databases for example high number of database objects ranging from 400 to 40000. We chose the server SKU to be 16 vCore with 20000 IOPS since this provides good performance/cost for migrations and the servers only need to be scaled up during migration and scaled down post completion to minimize cost. Azure VM with 16 vCore with 7500 IOPS was used for dump and restore.

The following sections provide the commands for dump and load operations associated with each tool.

MyDumper/MyLoader commands

Backup

Command syntax:

|

mydumper --regex='^(?!(mysql|sys|information_schema|performance_schema))' --host=<DB_hostname> --user=<DB_username> --password=<DB_password> --outputdir=<backup_directory>--rows=500000 --compress --build-empty-files --threads=16 --compress-protocol |

Sample output:

|

root@vm1:/backup/mydumper/test2# time mydumper --regex='^(?!(mysql|sys|information_schema|performance_schema))' --host=single2-70gb.mysql.database.azure.com --user=azureuser@single2-70gb --password=************ --outputdir=/backup/mydumper/test2 --rows=500000 --compress --build-empty-files --threads=16 --compress-protocol

real 8m44.974s user 131m30.487s sys 3m14.229s |

Restore

Command syntax:

|

mydumper --regex='^(?!(mysql|sys|information_schema|performance_schema))' --host=<DB_hostname> --user=<DB_username> --password=<DB_password> --outputdir=<backup_directory>--rows=500000 --compress --build-empty-files --threads=16 --compress-protocol |

Sample output:

|

root@vm1:/backup/mydumper/test2# time myloader --host=flex5-restore.mysql.database.azure.com --user=azureuser --password==************ --directory=/datadrive/mydumper/LargeDBSimpleSchema --queries-per-transaction=500 --threads=16 --compress-protocol --verbose=3 -e

** Message: 06:46:22.018: Thread 4 shutting down ** Message: 06:46:23.706: Thread 3 shutting down ** Message: 06:46:23.706: Thread 12 shutting down ** Message: 06:46:25.670: Thread 6 shutting down

real 287m21.490s user 1508m48.761s sys 23m0.890s |

MySQLSH commands

Backup

Command syntax:

|

mysqlsh --uri <DB_username>@<DB_Hostname>:<DB_Port> util.dumpInstance("<backup_directory>", {threads: 16, showProgress: true}) |

Sample output:

|

root@#vm1:/backup/mysqlsh# mysqlsh --uri azureuser%40single90gb@single90gb.mysql.database.azure.com:3306 Please provide the password for 'azureuser%40single90gb@single90gb.mysql.database.azure.com:3306': *************** Save password for 'azureuser%40single90gb@single90gb.mysql.database.azure.com:3306'? [Y]es/[N]o/Ne[v]er (default No): Y MySQL Shell 8.0.31

Copyright (c) 2016, 2022, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit. Creating a session to 'azureuser%40single90gb@single90gb.mysql.database.azure.com:3306' Fetching schema names for auto-completion... Press ^C to stop. Your MySQL connection id is 62629 Server version: 5.7.32-log MySQL Community Server (GPL) No default schema selected; type \use <schema> to set one. MySQL _single90gb.mysql.database.azure.com:3306 ssl__JS_> util.dumpInstance("/backup/mysqlsh/SmallDBSmallSchema", {threads: 16, showProgress: true}) NOTE: Backup lock is not supported in MySQL 5.7 and DDL changes will not be blocked. The dump may fail with an error if schema changes are made while dumping. Acquiring global read lock Global read lock acquired Initializing - done 2 out of 6 schemas will be dumped and within them 400 tables, 0 views. 3 out of 5 users will be dumped. Gathering information - done All transactions have been started Global read lock has been released Writing global DDL files Writing users DDL Running data dump using 16 threads. NOTE: Progress information uses estimated values and may not be accurate. Writing schema metadata - done Writing DDL - done Writing table metadata - done Starting data dump 101% (400.00M rows / ~394.56M rows), 1.28M rows/s, 253.04 MB/s uncompressed, 115.09 MB/s compressed Dump duration: 00:04:35s Total duration: 00:04:52s Schemas dumped: 2 Tables dumped: 400 Uncompressed data size: 77.56 GB Compressed data size: 35.26 GB Compression ratio: 2.2 Rows written: 400000000 Bytes written: 35.26 GB Average uncompressed throughput: 281.23 MB/s Average compressed throughput: 127.86 MB/s |

Restore

Command syntax:

|

mysqlsh --uri <DB_username>@<DB_Hostname>:<DB_Port> util.loadDump(" <backup_directory> ", {threads: 16, showProgress: true}) |

Sample output:

|

root@vm1:/backup/mysqlsh/test1# mysqlsh --uri azureuser@flex5-restore.mysql.database.azure.com:3306 Please provide the password for 'azureuser@flex5-restore.mysql.database.azure.com:3306': *************** Save password for 'azureuser@flex5-restore.mysql.database.azure.com:3306'? [Y]es/[N]o/Ne[v]er (default No): Y MySQL Shell 8.0.31

Copyright (c) 2016, 2022, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit. Creating a session to 'azureuser@flex5-restore.mysql.database.azure.com:3306' Fetching schema names for auto-completion... Press ^C to stop. Your MySQL connection id is 48 Server version: 5.7.39-log MySQL Community Server (GPL) No default schema selected; type \use <schema> to set one. MySQL flex5-restore.mysql.database.azure.com:3306 ssl JS > util.loadDump("/backup/mysqlsh/test1", {threads: 16, showProgress: true}) Loading DDL and Data from '/backup/mysqlsh/test1' using 16 threads. Opening dump... Target is MySQL 5.7.39-log. Dump was produced from MySQL 5.7.39-log Scanning metadata - done Checking for pre-existing objects... Executing common preamble SQL Executing DDL - done Executing view DDL - done Starting data load 2 thds loading | 100% (77.94 GB / 77.94 GB), 21.11 MB/s, 402 / 402 tables done Executing common postamble SQL Recreating indexes - done 1608 chunks (402.00M rows, 77.94 GB) for 402 tables in 3 schemas were loaded in 22 min 20 sec (avg throughput 61.39 MB/s) 0 warnings were reported during the load. |

MySQLPump commands

Backup

Command syntax:

|

mysqlpump -h<DB_Hostname> -u<DB_User> -p<DB_Password> --include-databases=<database_name1,database_name2> --default-parallelism=16 > backup_file_name.sql |

Sample output:

|

root@vm1:~# time mysqlpump -hsingle2-70gb.mysql.database.azure.com -uazureuser@single2-70gb -p********** --include-databases=db1,db2 --default-parallelism=16 > /backup/mysqlpump/test1/mysqlpump3.dmp mysqlpump: [Warning] Using a password on the command line interface can be insecure. Dump progress: 0/4 tables, 250/3945600 rows Dump progress: 0/65 tables, 1371500/64115975 rows Dump progress: 0/130 tables, 2969000/128232159 rows Dump progress: 1/193 tables, 4082750/190375359 rows Dump progress: 1/247 tables, 5064500/243641509 rows Dump progress: 1/305 tables, 6059000/300852709 rows Dump progress: 392/400 tables, 398936000/394561821 rows Dump progress: 395/400 tables, 399410500/394561821 rows Dump progress: 397/400 tables, 399666250/394561821 rows Dump progress: 399/400 tables, 399839250/394561821 rows Dump completed in 404085

real 6m47.945s user 17m28.244s sys 3m30.992s |

Restore

Command syntax:

|

mysql -h<DB_Hostname> -u<DB_User> -p<DB_Password> < backup_file_name.sql |

Sample output:

|

root@vm1:/backup/mysqlsh/test1# time mysql -hflex5-restore.mysql.database.azure.com -uazureuser -p*********** < /backup/mysqlpump/test1/mysqlpump1.dmp mysql: [Warning] Using a password on the command line interface can be insecure.

real 415m44.683s user 16m10.914s sys 2m20.370s |

MySQLDump commands

Backup

Command syntax:

|

mysqldump --column-statistics=0 -h<DB_Hostname> -u<DB_User> -p<DB_Password> --databases <database_name1 database name2> > /backup/mysqldump/SmallDBLargeSchema/mysqldump.sql |

Sample output:

|

root@vm1:~# time mysql -hflex5-restore.mysql.database.azure.com -uazureuser -p********** < /backup/mysqlpump/test1/mysqlpump1.dmp mysql: [Warning] Using a password on the command line interface can be insecure.

real 421m46.576s user 16m4.305s sys 2m31.939s |

Restore

Command syntax:

|

mysql -h<DB_Hostname> -u<DB_User> -p<DB_Password> < backup_file_name.sql |

Sample output:

|

root@vm1:/backup/mysqldump# time mysqldump --column-statistics=0 -hsingle2-70gb.mysql.database.azure.com -uazureuser@single2-70gb -p********* --databases db1 db2 > /backup/mysqldump/test1/mysqldump.sql mysqldump: [Warning] Using a password on the command line interface can be insecure.

real 91m54.313s user 15m45.947s sys 3m4.154s |

Benchmarking results

The benchmarking results for the scenarios with varying database conditions appear in the tables and charts in the following sections. For all scenarios:

- The source is Azure Database for MySQL – Single Server

- The target is Azure Database for MySQL – Flexible Server

NOTE: MySQLPump and MySQLDump can only create the dump, so MySQL is used to restore.

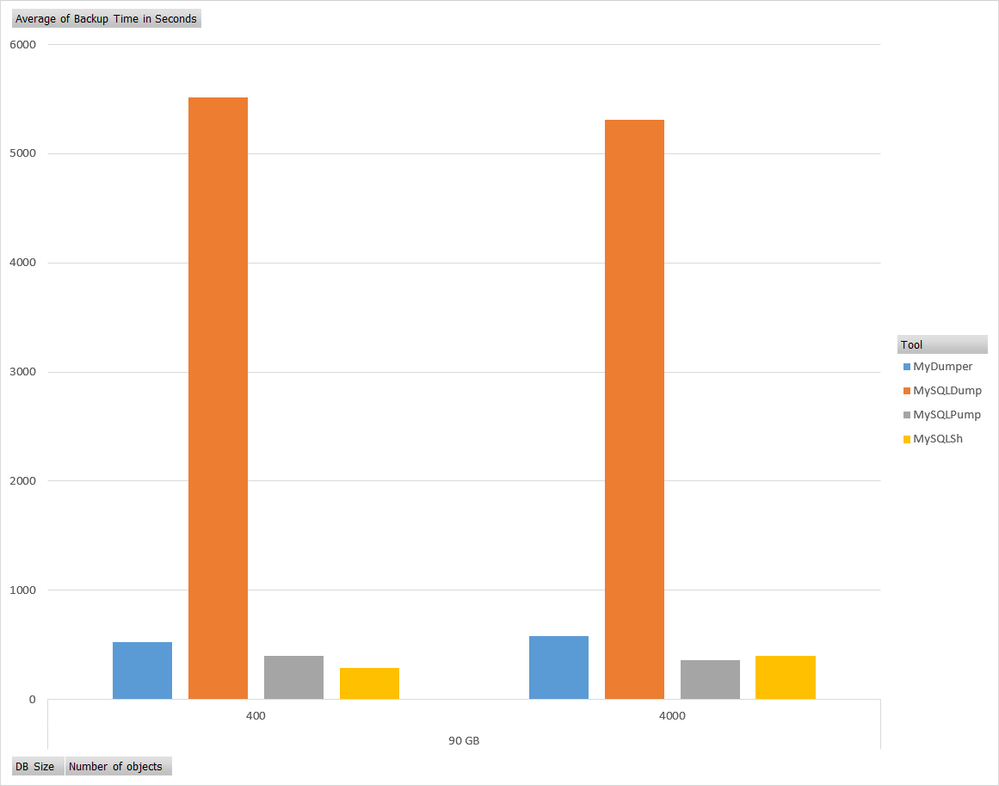

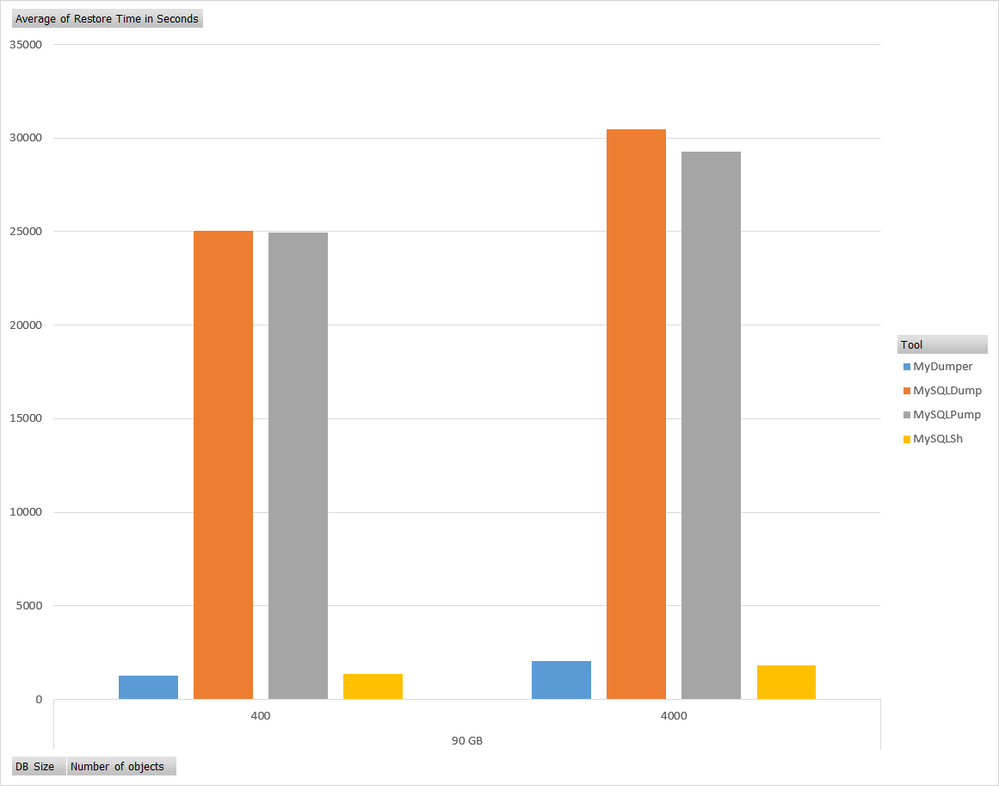

Scenario 1: DB Size = 90 GB

| Tool |

Object Count |

Backup Time (sec) |

Restore Time (sec) |

|

MyDumper |

400 |

524 |

1261 |

|

MySQLSh |

400 |

292 |

1336 |

|

MySQLPump |

400 |

396 |

24944 |

|

MySQLDump |

400 |

5514 |

25059 |

|

MyDumper |

4000 |

581 |

2071 |

|

MySQLSh |

4000 |

396 |

1831 |

|

MySQLPump |

4000 |

358 |

29280 |

|

MySQLDump |

4000 |

5311 |

30451 |

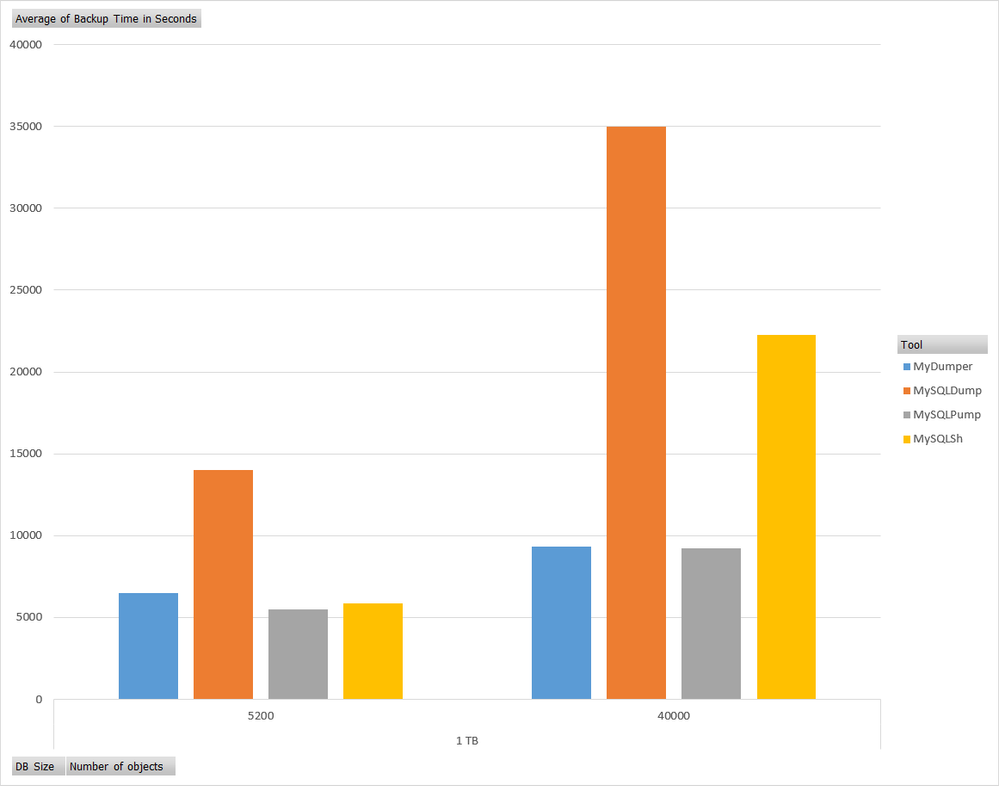

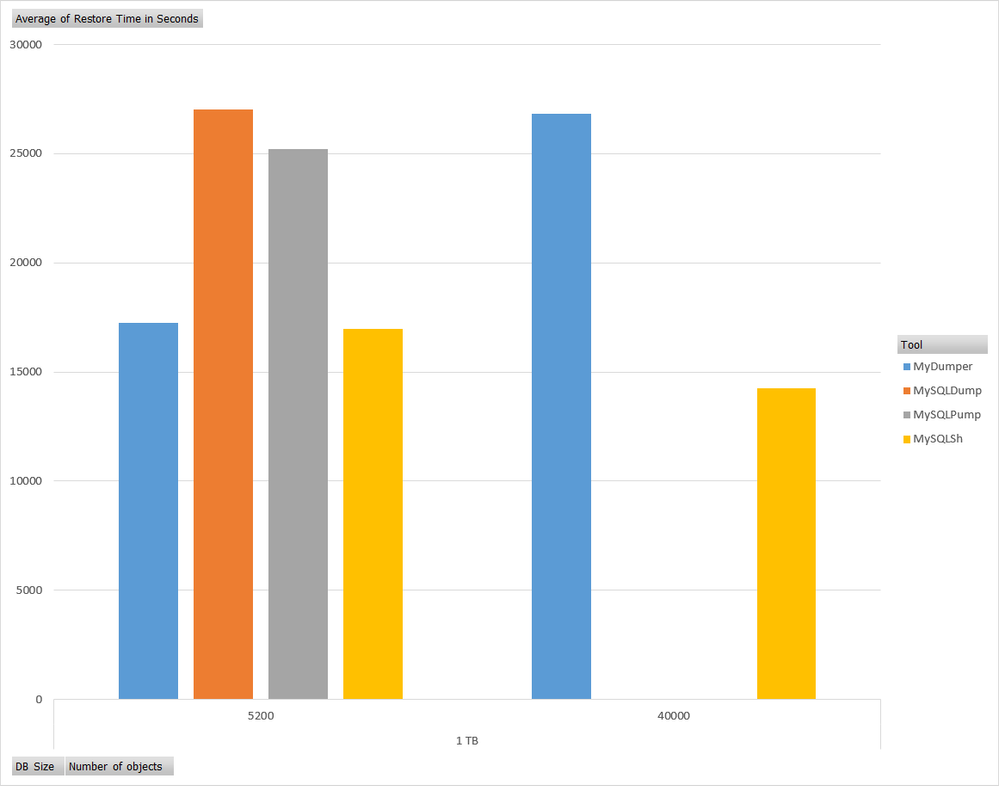

Scenario 2: DB Size = 1 TB

|

Tool |

Object Count |

Backup Time (sec) |

Restore Time (sec) |

|

MyDumper |

5200 |

6491 |

17241 |

|

MySQLSh |

5200 |

5862 |

16980 |

|

MySQLPump |

5200 |

5481 |

25231 |

|

MySQLDump |

5200 |

14016 |

27045 |

|

MyDumper |

40000 |

9345 |

26833 |

|

MySQLSh |

40000 |

22260 |

14254 |

|

MySQLPump |

40000 |

9213 |

> 24 hr |

|

MySQLDump |

40000 |

34980 |

> 24 hr |

Conclusion

Based on benchmarking and testing these popular tools for backing up and restoring a MySQL database, it’s apparent that some options, such as MySQLDump, are legacy tools, lacking in features such as parallelism. As a result, these are only suitable for use with simple migrations of smaller databases, or if a schema dump is all that’s required. With support for parallelism, MyDumper/Myloader has proved to be the most popular tool for dumping/restoring large databases. Today, we also have native MySQL tools such as MySQLPump and MySQLShl, which also offer parallelism with moderately better results, providing additional options for performing a dump/restore.

If you have any feedback or questions about the information provided above, please leave a comment below or email us at AskAzureDBforMySQL@service.microsoft.com. Thank you!