This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Nextflow is a workflow framework that can be used for Genomics analysis. Genomics pipelines are composed of many different interdependent tasks. The output of a task is used as the input of a subsequent downstream task. Each task usually involves running a process that manipulates the data.

To run a Nextflow pipeline on Azure, you must create a config file specifying the compute resources to use. By default, Nextflow uses a single compute configuration for all tasks. In this post we use the nf-core/rnaseq pipeline as an example to demonstrate how custom configurations can be made.

We created configuration files corresponding to Azure Ddv4-series and Edv4-series virtual machines and used them to benchmark the pipeline on a subset of ENCODE data. Benchmarking results show that the cost to run a pipeline can be reduced by up to 35% without significant compromise on pipeline run duration when using Edv4-series virtual machines.

Prerequisites

- Create an Azure Storage account and copy the FASTQ files and references there.

- Create an Azure Batch account, associate your Storage account with it, and adjust your quotas to the pipeline’s needs.

- If you’re new to Nextflow, check this post to get started with Nextflow on Azure or this post provided by Seqera Labs.

Creating configuration files

The simplest Azure configuration file has a few fields that you need to fill in: Storage account name and SAS Token, Batch account location, name and key.

process {

executor = 'azurebatch'

}

azure {

storage {

accountName = "<Your storage account name>"

sasToken = "<Your storage account SAS Token>"

}

batch {

location = "<Your location>"

accountName = "<Your batch account name>"

accountKey = "<Your batch account key>"

autoPoolMode = true

}

}

When the autoPoolMode setting is used, Nextflow automatically creates a pool of compute nodes to execute the jobs run by your pipeline; by default, it uses only one compute node of type Standard_D4_v3. For nf-core pipelines, the azurebatch config uses Standard_D8_v3. For more precise control of compute resources, you must define several pools and choose a pool for each task in your pipeline.

The advantage of the nf-core/rnaseq pipeline is that its tasks are annotated with labels, and its base configuration file defines the compute requirements for each label. We used these requirements to choose general purpose virtual machines from the Ddv4-series and memory optimized virtual machines from the Edv4-series, and defined pools corresponding to the labels (see Table 1). Note that Edv4-series virtual machines have a memory-to-CPU ratio that better fits the pipeline requirements, which allows us to choose smaller Edv4 machines (with fewer CPUs) than in the Ddv4-series.

| Requirements | Ddv4-series | Edv4-series |
|---|---|---|
| Default: CPUs – 1, memory – 6GB | Standard_D2d_v4 | Standard_E2d_v4 |
| Label ‘process_low’: CPUs – 2, memory – 12GB | Standard_D4d_v4 | Standard_E2d_v4 |
| Label ‘process_medium’: CPUs – 6, memory – 36GB | Standard_D16d_v4 | Standard_E8d_v4 |
| Label ‘process_high’: CPUs – 12, memory – 72GB | Standard_D32d_v4 | Standard_E16d_v4 |
| Label ‘process_high_memory’: memory – 200GB | Standard_D48d_v4 | Standard_E32d_v4 |

The updated configuration file for the Edv4-series looks like this:

process {

executor = 'azurebatch'

queue = 'Standard_E2d_v4'

withLabel:process_low {queue = 'Standard_E2d_v4'}

withLabel:process_medium {queue = 'Standard_E8d_v4'}

withLabel:process_high {queue = 'Standard_E16d_v4'}

withLabel:process_high_memory {queue = 'Standard_E32d_v4'}

}

azure {

storage {

accountName = "<Your storage account name>"

sasToken = "<Your storage account SAS Token>"

}

batch {

location = "<Your location>"

accountName = "<Your batch account name>"

accountKey = "<Your batch account key>"

autoPoolMode = false

allowPoolCreation = true

pools {

Standard_E2d_v4 {

autoScale = true

vmType = 'Standard_E2d_v4'

vmCount = 2

maxVmCount = 20

}

Standard_E8d_v4 {

autoScale = true

vmType = 'Standard_E8d_v4'

vmCount = 2

maxVmCount = 20

}

Standard_E16d_v4 {

autoScale = true

vmType = 'Standard_E16d_v4'

vmCount = 2

maxVmCount = 20

}

Standard_E32d_v4 {

autoScale = true

vmType = 'Standard_E32d_v4'

vmCount = 2

maxVmCount = 10

}

}

}

}

The updated configuration file for the Ddv4-series was created the same way, according to the requirements table. Keep in mind that you might need to change vmCount and maxVmCount depending on your Batch account quotas. To learn more about pool settings, please check the Nextflow documentation.
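As an illustration of "created the same way", here is a minimal Python sketch that renders the `process` and `pools` blocks for either series from the label-to-VM mapping in Table 1. The helper names (`SERIES`, `render_process_block`, `render_pools_block`) are hypothetical and not part of Nextflow. The sketch also assumes the same vmCount and maxVmCount for every pool, whereas the Edv4 file above uses a lower maxVmCount for the largest pool.

```python
# Label-to-VM mapping copied from Table 1; the None key holds the default queue.
SERIES = {
    "Ddv4": {
        None: "Standard_D2d_v4",
        "process_low": "Standard_D4d_v4",
        "process_medium": "Standard_D16d_v4",
        "process_high": "Standard_D32d_v4",
        "process_high_memory": "Standard_D48d_v4",
    },
    "Edv4": {
        None: "Standard_E2d_v4",
        "process_low": "Standard_E2d_v4",
        "process_medium": "Standard_E8d_v4",
        "process_high": "Standard_E16d_v4",
        "process_high_memory": "Standard_E32d_v4",
    },
}


def render_process_block(series):
    """Render the process scope: default queue plus one selector per label."""
    mapping = SERIES[series]
    lines = [
        "process {",
        "    executor = 'azurebatch'",
        f"    queue = '{mapping[None]}'",
    ]
    for label, vm in mapping.items():
        if label is not None:
            lines.append(f"    withLabel:{label} {{queue = '{vm}'}}")
    lines.append("}")
    return "\n".join(lines)


def render_pools_block(series, vm_count=2, max_vm_count=20):
    """Render one pool per distinct VM size, named after the size."""
    lines = ["pools {"]
    # dict.fromkeys removes duplicate VM sizes while keeping their order.
    for vm in dict.fromkeys(SERIES[series].values()):
        lines += [
            f"    {vm} {{",
            "        autoScale = true",
            f"        vmType = '{vm}'",
            f"        vmCount = {vm_count}",
            f"        maxVmCount = {max_vm_count}",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_process_block("Ddv4"))
    print(render_pools_block("Ddv4"))
```

The rendered `pools` block belongs inside the `batch` scope of the `azure` section, next to `autoPoolMode = false` and `allowPoolCreation = true`, as in the Edv4 file above.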

Use the -c option to provide the Azure configuration file when running your pipeline:

nextflow run rnaseq -c '<Path to your Azure configuration file>' --input 'az://<Path to input file>' --outdir 'az://<Output directory>' -w 'az://<Work directory>' [<Other pipeline parameters>]

Benchmarking

Dedicated virtual machines: Ddv4-series vs. Edv4-series

We filtered 54 human RNA-seq samples from the ENCODE dataset; all samples were provided by the David Hafler Lab, and each sample has two FASTQ files. For the benchmarking results presented here we used 5 of the 54 samples; their names, total file sizes, and size-based ranks are shown in Table 2.

| Sample | Size (GB) | Rank |
|---|---|---|
| ENCSR764EGD | 2.25 | 0 (minimum) |
| ENCSR377HQQ | 3.36 | 0.26 |
| ENCSR038QZA | 3.84 | 0.5 |
| ENCSR092KKW | 4.34 | 0.76 |
| ENCSR296LJV | 7.09 | 1 (maximum) |

The GRCh38 reference sequence was used. Reference indices were precomputed by a separate nf-core/rnaseq pipeline run (see the --save_reference parameter) and reused in the benchmarking runs. To learn about the pipeline's reference parameters and how to pass them, check the pipeline documentation.

Version 3.9 of the nf-core/rnaseq pipeline was used for the experiments. The pipeline has 3 options for the alignment-and-quantification route: STAR-Salmon, STAR-RSEM and HISAT2; we benchmarked all of them. The DESeq2 quality control step was skipped since each sample was processed separately. Default values were used for all other parameters. The command line for a pipeline run looked like this:

nextflow run rnaseq -c '<Path to your Azure configuration file>' --input 'az://<Path to input file>' --outdir 'az://<Output directory>' -w 'az://<Work directory>' --skip_deseq2_qc --aligner <'star_rsem' or 'star_salmon' or 'hisat2'> <Input parameters specifying reference location>

We ran the pipeline for each alignment option and each sample; each experiment was done twice. One set of experiments was performed with the Ddv4-series configuration file and one with the Edv4-series configuration file, in the West US 2 region. Nextflow v22.10.3 was used to execute the pipeline.

We collected the following statistics for each run: the pipeline duration, extracted from the execution report, and the task durations, extracted from the execution trace file. Pricing information was downloaded from this page. The cost to run a task was estimated as $C_{task} = T_{task} \cdot P^{VM}_{task}$, where $T_{task}$ is the task duration (hr) and $P^{VM}_{task}$ is the price (USD/hr) to run the virtual machine assigned to that task; pay-as-you-go pricing was used. The cost to run a pipeline was computed as the sum of the costs of all its tasks: $C_{pipeline} = \sum_{task} C_{task}$.

Finally, pipeline duration and cost numbers were averaged over repeated runs for each sample.
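The cost estimate described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the script used for the benchmark: the prices and task durations are made-up placeholders rather than real Azure prices or measured values, and the function names and the sample name are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Placeholder pay-as-you-go prices in USD/hr (NOT real Azure prices).
PRICE_PER_HOUR = {
    "Standard_E2d_v4": 0.10,
    "Standard_E8d_v4": 0.50,
}


def task_cost(duration_hr, vm_type, prices=PRICE_PER_HOUR):
    """Cost of one task: task duration (hr) times the price of its VM (USD/hr)."""
    return duration_hr * prices[vm_type]


def pipeline_cost(tasks, prices=PRICE_PER_HOUR):
    """Cost of one pipeline run: the sum of the costs of all its tasks."""
    return sum(task_cost(duration, vm, prices) for duration, vm in tasks)


def average_cost_per_sample(runs, prices=PRICE_PER_HOUR):
    """Average the pipeline cost over repeated runs of each sample.

    `runs` is a list of (sample_name, tasks) pairs, where `tasks` is a list
    of (duration_hr, vm_type) pairs taken from one execution trace.
    """
    costs = defaultdict(list)
    for sample, tasks in runs:
        costs[sample].append(pipeline_cost(tasks, prices))
    return {sample: mean(values) for sample, values in costs.items()}


if __name__ == "__main__":
    # Two repeated runs of one hypothetical sample with made-up durations.
    runs = [
        ("sample_A", [(0.5, "Standard_E2d_v4"), (1.0, "Standard_E8d_v4")]),
        ("sample_A", [(0.7, "Standard_E2d_v4"), (1.0, "Standard_E8d_v4")]),
    ]
    for sample, cost in average_cost_per_sample(runs).items():
        print(f"{sample}: {cost:.2f} USD")
```

Because each task is billed only for its own duration, this estimate does not account for idle node time or pool start-up time.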

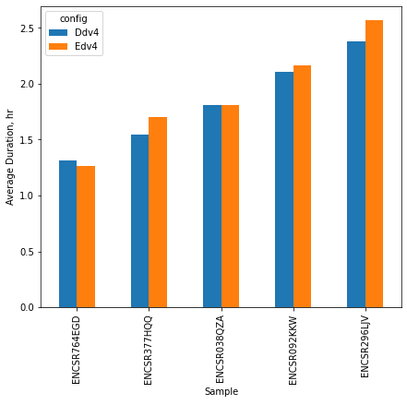

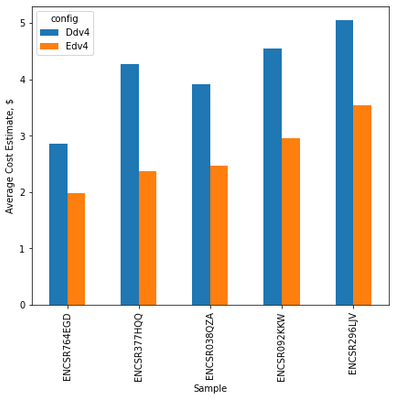

Average cost estimates and durations for each alignment option are shown in Fig. 1-3; average changes are listed in Table 3. The results show that the cost to run a pipeline can be reduced by up to 35% without significant compromise on pipeline completion time by using Edv4-series virtual machines.

Fig. 1: Average cost estimate and pipeline run duration for nf-core/rnaseq pipeline with STAR-Salmon alignment option.

Fig. 2: Average cost estimate and pipeline run duration for nf-core/rnaseq pipeline with STAR-RSEM alignment option.

Fig. 3: Average cost estimate and pipeline run duration for nf-core/rnaseq pipeline with HISAT2 alignment option.

| Alignment option | Average change in cost, % | Average change in duration, % |
|---|---|---|
| STAR-Salmon | -35 | 3.4 |
| STAR-RSEM | -26 | 4.2 |
| HISAT2 | -31 | 3.1 |

The main factor driving the virtual machine choice for the nf-core/rnaseq pipeline is each task’s memory requirement. As a result, we assign machines with extra CPUs for both the Ddv4 and Edv4 series, but those extra CPUs are not allocated to the task. The surplus of CPUs for the Ddv4 series is substantial: for tasks with labels ‘process_medium’ and ‘process_high’ we get 2.67 times as many CPUs as required. That means we are underusing compute resources, which leads to higher cost with almost no time savings.
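The 2.67 figure can be checked with a short Python sketch that divides the vCPUs of the assigned machine by the CPUs the label requests. The requested CPUs and the assigned sizes come from Table 1; the vCPU counts are the numbers encoded in the VM size names. The names `VCPUS`, `REQUESTED_CPUS`, `ASSIGNED_VM` and `cpu_surplus` are hypothetical.

```python
# vCPUs per VM size (the number in the size name).
VCPUS = {
    "Standard_D16d_v4": 16,
    "Standard_D32d_v4": 32,
    "Standard_E8d_v4": 8,
    "Standard_E16d_v4": 16,
}

# CPUs requested by each label (Table 1).
REQUESTED_CPUS = {"process_medium": 6, "process_high": 12}

# VM size assigned to each label in each series (Table 1).
ASSIGNED_VM = {
    "Ddv4": {"process_medium": "Standard_D16d_v4", "process_high": "Standard_D32d_v4"},
    "Edv4": {"process_medium": "Standard_E8d_v4", "process_high": "Standard_E16d_v4"},
}


def cpu_surplus(series, label):
    """Ratio of vCPUs on the assigned VM to the CPUs the task requests."""
    return VCPUS[ASSIGNED_VM[series][label]] / REQUESTED_CPUS[label]


if __name__ == "__main__":
    for series in ASSIGNED_VM:
        for label in REQUESTED_CPUS:
            print(f"{series} {label}: {cpu_surplus(series, label):.2f}x")
```

For the Edv4 series the same ratio is about 1.33 for both labels, so far fewer paid-for CPUs sit idle.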

There are several approaches to solving the “extra CPUs” problem. The first is to choose a virtual machine with a memory-to-CPU ratio that better fits the task requirements, as we did with the Edv4 series. The second is to increase the number of allocated CPUs in the pipeline’s base configuration file, but that requires deep knowledge of the pipeline tools; it might also be impossible without increasing memory requirements, or be limited by the number of CPUs the pipeline tools can use.

Further cost-effectiveness improvements: Spot/Low-priority virtual machines

Azure Batch offers Spot/Low-priority machines to reduce the cost of Batch workloads. Spot/Low-priority compute cost is much lower than dedicated compute cost. The availability of compute instances on the spot market depends on the region and on demand.

We reused the benchmarking experiment statistics to get a rough estimate of the cost to run a pipeline using Spot and Low-priority machines. We assumed that task durations would stay the same as they were on dedicated machines and used the pricing information for the low-cost machines. The estimates show that it might be 5 times cheaper to run the nf-core/rnaseq pipeline using Low-priority nodes and 7.7 times cheaper using Spot machines. That might drop the cost below $1 per sample.

| Virtual machine series | Average change in cost, % (Low-priority) | Average change in cost, % (Spot) |
|---|---|---|
| Ddv4 | -80 | -87 |
| Edv4 | -80 | -87 |
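The percentage changes in the table and the "times cheaper" figures quoted earlier describe the same estimates. A small Python sketch of the conversion (the function name `times_cheaper` is hypothetical):

```python
def times_cheaper(change_percent):
    """Convert a cost change in percent (e.g. -80) into a 'times cheaper' factor."""
    remaining_fraction = 1 + change_percent / 100  # share of the original cost still paid
    if remaining_fraction <= 0:
        raise ValueError("cost change must be greater than -100%")
    return 1 / remaining_fraction


if __name__ == "__main__":
    for name, change in [("Low-priority", -80), ("Spot", -87)]:
        print(f"{name}: {times_cheaper(change):.1f} times cheaper")
```

A change of -80% leaves 20% of the original cost, which is a factor of 5; a change of -87% leaves 13%, which is a factor of about 7.7.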

The tradeoff of using Spot/Low-priority machines is that they may not always be available for allocation and may be preempted at any time. If a pipeline consists of tasks with short execution times and the pipeline completion time is flexible, these nodes might be a great option to reduce the cost. For more accurate cost numbers and a better understanding of changes in pipeline completion times, additional benchmarking experiments are required.