This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Table of Contents

Application volume group multiple partition workflow

Outcome when following best practices

Application volume group for SAP HANA workflow

Adapting GUI proposals to meet the naming convention

Abstract

Larger SAP HANA systems, such as those running on the new 24 TiB VMs, require more performance and capacity than a single data volume can deliver. For such scenarios, SAP supports "multiple partitions" (MP), where the SAP HANA database stripes data files and I/O across multiple data volumes.

This article describes how to use Azure NetApp Files (ANF) application volume group (AVG) for SAP HANA to provision multiple data volumes for an SAP HANA system with multiple partitions (MP).

Co-authors: Bernd Herth, Azure NetApp Files SAP Product Manager

Introduction

Application volume group (AVG) for SAP HANA optimizes ANF volume deployment for SAP HANA systems with a single data volume, but it can also be tailored to deploy multiple data volumes. Related documentation can be found here.

Although AVG currently does not support deploying multiple data volumes in a single-step workflow, customers can still combine the existing AVG workflows to deploy multiple data volumes and benefit from AVG's optimized volume placement.


Application volume group multiple partition workflow

The following workflow describes how to use AVG to deploy the volumes for a single-host SAP HANA system with multiple partitions. It demonstrates how to create four data partitions and adapt the naming convention to match the recommendations.

Outcome when following best practices

Assume a customer scenario in which a 24 TiB single-host SAP HANA system with four HANA partitions needs to be deployed. To meet best-practice recommendations, the volumes below are created with the names shown, and each data volume uses a unique storage endpoint with its own IP address <ipX>.

For <SID> = P01, the deployed volumes and export paths look like this:

HANA shared : <ip1>:/P01-shared

HANA LOG : <ip1>:/P01-log-mnt00001

HANA DATA1 : <ip2>:/P01-data-mnt00001

HANA DATA2 : <ip3>:/P01-data2-mnt00001

HANA DATA3 : <ip4>:/P01-data3-mnt00001

HANA DATA4 : <ip5>:/P01-data4-mnt00001

HANA LOG-Backup : <ip6>:/P01-log-backup

HANA DATA-Backup : <ip6>:/P01-data-backup

The proposed naming convention also provides guidance on what the client-side mount paths should look like. This is especially important because SAP HANA's internal I/O tuning for multiple partitions only takes effect if each data volume is mounted on a different client-side path. For example, the /etc/fstab entries for the data volumes should look like this:

<ip2>:/P01-data-mnt00001 /hana/data/P01/mnt00001 <mount options>

<ip3>:/P01-data2-mnt00001 /hana/data2/P01/mnt00001 <mount options>

<ip4>:/P01-data3-mnt00001 /hana/data3/P01/mnt00001 <mount options>

<ip5>:/P01-data4-mnt00001 /hana/data4/P01/mnt00001 <mount options>
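The fstab pattern above is regular enough to generate. The following minimal Python sketch assumes that partition 1 keeps the default "data" name and partitions 2 to n use "dataN", exactly as in the listing; the function name data_fstab_lines is hypothetical, and the IP addresses and mount options remain placeholders that you must replace with your own values.

```python
def data_fstab_lines(sid: str, endpoint_ips: list[str], mount_options: str) -> list[str]:
    """Return one /etc/fstab line per HANA data partition (hypothetical helper).

    Partition 1 uses the default name "data"; partitions 2..n use "dataN".
    Each partition gets its own export path AND its own client-side mount
    path, which is what lets SAP HANA treat them as separate data volumes.
    """
    lines = []
    for index, ip in enumerate(endpoint_ips, start=1):
        suffix = "" if index == 1 else str(index)
        export = f"{ip}:/{sid}-data{suffix}-mnt00001"
        mount_point = f"/hana/data{suffix}/{sid}/mnt00001"
        lines.append(f"{export} {mount_point} {mount_options}")
    return lines


if __name__ == "__main__":
    # Placeholders only: substitute the real mount target IPs and NFS options.
    for line in data_fstab_lines("P01", ["<ip2>", "<ip3>", "<ip4>", "<ip5>"], "<mount options>"):
        print(line)
```

Running it prints the same four entries shown above, one line per data partition.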

Application volume group for SAP HANA workflow

To deploy the scenario above, combine the following application volume group workflows:

- Deploy a single-host HANA system with the default naming convention.

- Add volumes for three additional hosts (hosts 2 to 4) using the application volume group "multiple host" workflow. This creates three volume groups, of which only the data volumes need to be kept. To match the best-practice naming convention, adjust both the proposed volume names and the proposed export paths.

- After the three volume groups have been created successfully, delete the LOG volume in each additional group.
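To make the combined workflow explicit, the following Python sketch models it as a plan listing which volumes each volume group receives and which are deleted afterwards. It is illustrative only and calls no Azure API; the volume roles per group follow the steps above, the group names assume the SAP-HANA-P01-partition-{HostId} renaming described in the next section, and the names PlannedVolume and workflow_plan are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlannedVolume:
    group: str   # volume group name
    role: str    # volume role within the group
    keep: bool   # False means: delete after the group has been created


def workflow_plan(sid: str, partitions: int) -> list[PlannedVolume]:
    """Hypothetical plan for a single-host HANA system with `partitions` data volumes."""
    plan = []
    # Step 1: the single-host workflow creates the full set for host 00001.
    first = f"SAP-HANA-{sid}-partition-00001"
    for role in ("shared", "log", "data", "log-backup", "data-backup"):
        plan.append(PlannedVolume(first, role, keep=True))
    # Step 2: the multiple-host workflow adds a group per extra "host",
    # each with a data and a log volume.
    for host in range(2, partitions + 1):
        group = f"SAP-HANA-{sid}-partition-{host:05d}"
        plan.append(PlannedVolume(group, "data", keep=True))
        # Step 3: the LOG volume of each additional group is not needed.
        plan.append(PlannedVolume(group, "log", keep=False))
    return plan


if __name__ == "__main__":
    for v in workflow_plan("P01", 4):
        if not v.keep:
            print(f"delete {v.role} volume in {v.group}")
```

With four partitions, the plan keeps four data volumes in total and marks one LOG volume for deletion in each of the three additional groups.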

Adapting GUI proposals to meet the naming convention

To stay as close as possible to the best-practice naming convention, change the proposed volume group name:

- From SAP-HANA-P01-{HostId} to SAP-HANA-P01-partition-{HostId}

For each additional DATA volume (2 to 4) provisioned with the HANA multiple-host workflow for nodes 2, 3, and 4, change the proposed names as well:

- Data volume name:
  - From P01-data-mnt{HostId} to P01-data{HostId}-mnt00001

- Data volume path:
  - From <ipX>:/P01-data-mnt{HostId} to <ipX>:/P01-data{HostId}-mnt00001
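Because the renaming follows a fixed pattern, it can be expressed as simple string rewrites, for example when preparing names for a scripted deployment. The following Python sketch assumes that {HostId} is the five-digit, zero-padded host number seen in the resulting names (for example 00002) and that the SID is three characters starting with a letter; the helper names are hypothetical.

```python
import re

# Assumption: SID = 3 characters starting with a letter, HostId = 5 digits.
_GROUP = re.compile(r"^SAP-HANA-(?P<sid>[A-Z][A-Z0-9]{2})-(?P<host>\d{5})$")
_DATA = re.compile(r"^(?P<sid>[A-Z][A-Z0-9]{2})-data-mnt(?P<host>\d{5})$")


def to_partition_group(proposed: str) -> str:
    """SAP-HANA-P01-00002 -> SAP-HANA-P01-partition-00002"""
    m = _GROUP.match(proposed)
    if m is None:
        raise ValueError(f"unexpected volume group name: {proposed!r}")
    return f"SAP-HANA-{m['sid']}-partition-{m['host']}"


def to_partition_name(proposed: str) -> str:
    """P01-data-mnt00002 -> P01-data00002-mnt00001"""
    m = _DATA.match(proposed)
    if m is None:
        raise ValueError(f"unexpected data volume name: {proposed!r}")
    return f"{m['sid']}-data{m['host']}-mnt00001"


def to_partition_path(proposed_path: str) -> str:
    """<ipX>:/P01-data-mnt00002 -> <ipX>:/P01-data00002-mnt00001"""
    endpoint, sep, volume = proposed_path.partition(":/")
    if not sep:
        raise ValueError(f"expected <ip>:/<path>, got {proposed_path!r}")
    return f"{endpoint}:/{to_partition_name(volume)}"


if __name__ == "__main__":
    print(to_partition_group("SAP-HANA-P01-00002"))
    print(to_partition_name("P01-data-mnt00002"))
    print(to_partition_path("<ip3>:/P01-data-mnt00002"))
```

Raising an error on an unexpected name, instead of passing it through unchanged, keeps a typo in a proposed name from silently producing a volume that breaks the convention.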

Using this pattern, the following volume groups and volumes will be created:

Volume Group SAP-HANA-P01-partition-00001:

HANA shared : <ip1>:/P01-shared

HANA LOG : <ip1>:/P01-log-mnt00001

HANA DATA1 : <ip2>:/P01-data-mnt00001

HANA LOG-Backup : <ip6>:/P01-log-backup

HANA DATA-Backup : <ip6>:/P01-data-backup

Volume Group SAP-HANA-P01-partition-00002:

HANA DATA2 : <ip3>:/P01-data00002-mnt00001

Volume Group SAP-HANA-P01-partition-00003:

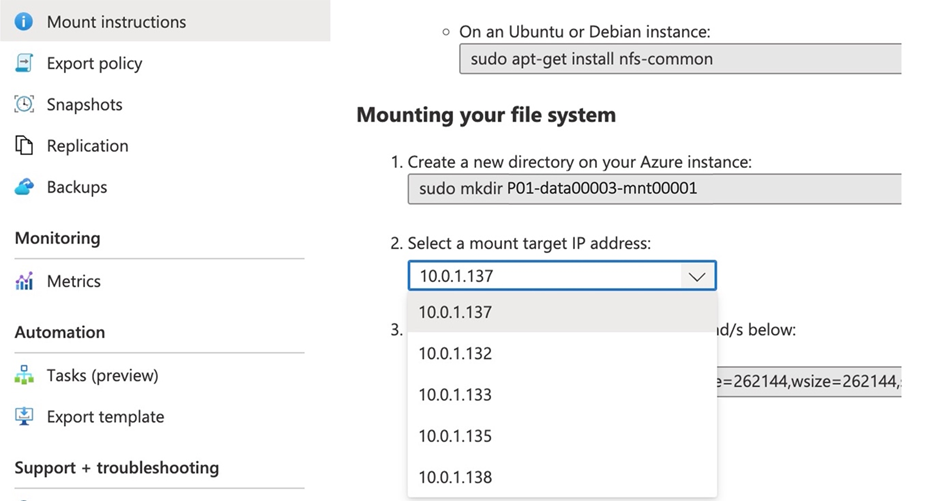

HANA DATA3 : <ip4>:/P01-data00003-mnt00001

Volume Group SAP-HANA-P01-partition-00004:

HANA DATA4 : <ip5>:/P01-data00004-mnt00001

|

(i) Note

|

The following screenshot shows the volumes mount options for one of the additional data volumes. The first IP address in the list of mount target IP’s should be taken as long as this is not the same IP address as for the LOG volume created with the first AVG. In such a case, any other of the displayed IP addresses may be used.
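The IP selection rule in the note above can be stated precisely as a small function. This is a minimal Python sketch; the function name pick_mount_ip and the 10.0.0.x addresses in the usage example are hypothetical, and in practice the list comes from the mount options shown for the volume in the portal.

```python
def pick_mount_ip(mount_target_ips: list[str], log_volume_ip: str) -> str:
    """Choose the IP address for mounting an additional data volume.

    Prefer the first listed mount target IP; if it equals the IP of the
    LOG volume from the first AVG, fall back to any other listed address.
    """
    if not mount_target_ips:
        raise ValueError("no mount target IP addresses listed")
    for ip in mount_target_ips:          # checks the first entry first
        if ip != log_volume_ip:
            return ip
    raise ValueError("every listed IP address matches the LOG volume's IP")


if __name__ == "__main__":
    # Hypothetical addresses for illustration only.
    print(pick_mount_ip(["10.0.0.4", "10.0.0.6"], log_volume_ip="10.0.0.4"))
```

Here the first listed address is the LOG volume's IP, so the function returns the next one, 10.0.0.6.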

(!) Important

The REST API does not enforce a naming convention. It therefore offers even more flexibility to adapt the deployment to a naming convention of your choice.
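As an illustration of that flexibility, the following Python sketch builds a heavily trimmed request body for one additional partition group, using the partition naming from this article. The field names (groupMetaData, applicationType, applicationIdentifier, volumes, creationToken, volumeSpecName) reflect our reading of the ANF volumeGroups REST schema and should be verified against the current API reference. Required settings such as subnetId, capacity (usageThreshold), throughputMibps, and the proximity placement group are deliberately omitted. The group name itself (for example SAP-HANA-P01-partition-00002) would go into the request URL rather than the body. The function partition_group_body is hypothetical.

```python
import json


def partition_group_body(sid: str, host_id: str, location: str) -> dict:
    """Hypothetical, trimmed volume group body containing a single data volume.

    Only naming-related fields are shown; all sizing, network, and placement
    properties required by the real API must be added before use.
    """
    volume_name = f"{sid}-data{host_id}-mnt00001"
    return {
        "location": location,
        "properties": {
            "groupMetaData": {
                "applicationType": "SAP-HANA",
                "applicationIdentifier": sid,
            },
            "volumes": [
                {
                    "name": volume_name,
                    "properties": {
                        # creationToken sets the volume's export path.
                        "creationToken": volume_name,
                        "volumeSpecName": "data",
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(partition_group_body("P01", "00002", "<region>"), indent=2))
```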

Automation with PowerShell

A community-supported PowerShell example script for automation is available at https://github.com/ANFTechTeam/ANF-HANA-multi-partition. This GitHub repository includes documentation, examples, and source code. The code can be used as-is or serve as a starting point for customer-specific workflows.

Additional documentation

- AVG FAQ: Using AVG to deploy multiple data volumes

- Microsoft Blog post: HANA Data File Partitioning - Installation

- AVG documentation: Configure application volume groups for the SAP HANA REST API