This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Author(s): Bhaskar Sharma is a Program Manager in Azure Synapse Customer Success Engineering (CSE) team.

Introduction

In this article, I will discuss how to physically model an Azure Synapse Analytics data warehouse while migrating from an existing on-premises MPP (Massive Parallel Processing) data warehouse solution like Teradata and Netezza. The approach and methodologies discussed in this article are purely based on the knowledge and insight I have gained while migrating these data warehouses to Azure Synapse dedicated SQL pool.

Problem statement

Contoso Corporation is a fictional global healthcare organization with its headquarters in the US. They use Azure Synapse as a platform for their enterprise data warehousing solution. A part of their data modernization efforts to Azure public cloud, is evaluating the migration efforts for their two data warehouse platform Teradata and Netezza to Azure Synapse dedicated SQL pool. Before going through the implementation steps and best practices let us first discuss the architecture components and distribution strategies.

Architecture components and distribution strategies

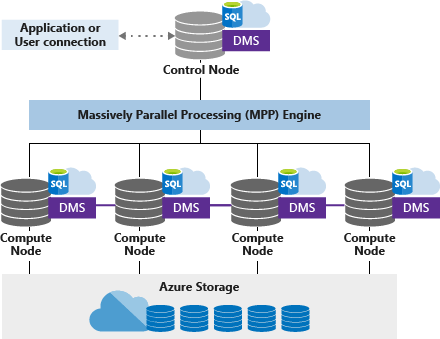

Synapse SQL uses a scale-out architecture to distribute computational processing of data across multiple nodes. The unit of scale is an abstraction of computing power known as a data warehouse unit. Compute is separate from storage, which enables you to scale compute independently of the data in your system.

Control Node - the “Master Node” of the dedicated SQL pool

- The brain of the architecture

- Runs distributed query engine

- Distribute the query to the compute node

Compute Node - the “Worker Node” of the dedicated SQL pool

- Distributions map to Compute nodes for processing

- Compute nodes range from 1 to 60

- Provide the computational power

Data Movement Services (DMS)

- Moves data around as needed

- Enables parallel operations among the compute nodes (queries, loads, etc.)

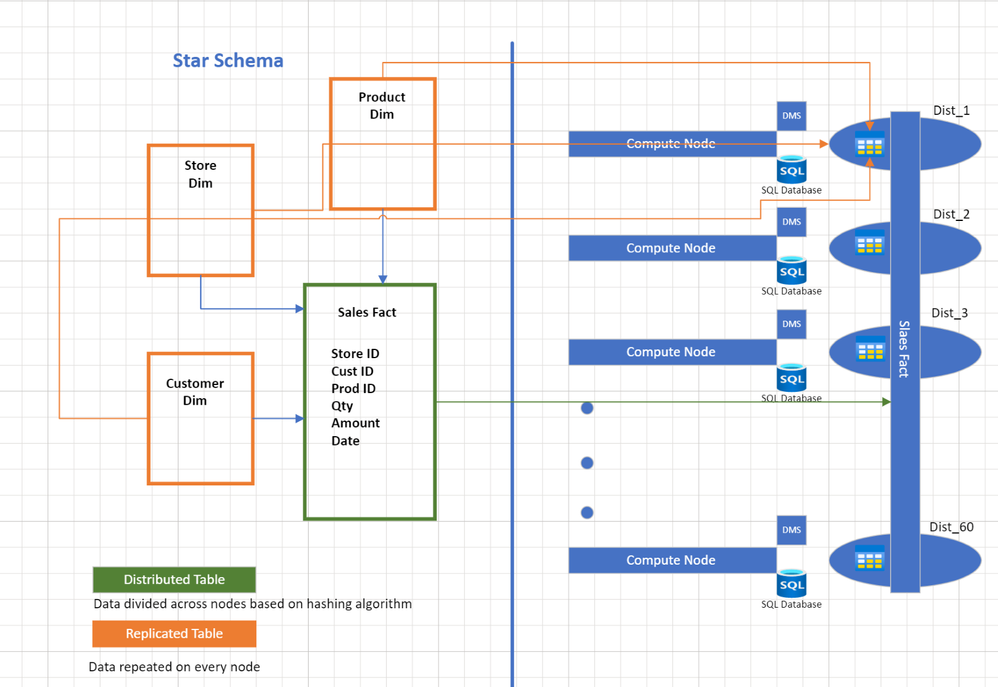

As the diagram suggests, this is an MPP architecture with both compute and storage decoupled, which enables us to scale compute independently of the data in the system. When a dedicated SQL pool runs a query, the work is divided into 60 smaller queries that run in parallel. Each of the 60 smaller queries runs on one of the data distributions. The data is distributed in these storage units based on three distribution strategies.

Distributions Strategies

Azure Synapse Analytics dedicated pool offers the following three data distribution and data movement strategies.

|

Hash Distributed |

Round Robin (default) |

Replicated |

|

Data divided across nodes based on hashing algorithm |

Data distributed evenly across nodes |

Data repeated on every node |

|

Same value will always hash to same distribution |

Easy place to start, do not need to know anything about the data |

Simplifies many query plans and reduces data movement |

|

Single column only (Multi columns distribution is in public preview) |

Simplicity at a cost |

Best with joining hash table |

Azure Synapse dedicated SQL pool data distribution options in a star schema model:

Picking the appropriate types of indexes and partitioning are two additional critical elements of physical modeling in dedicated SQL pools. Let's review them before going into the implementation and operational strategy.

Index type

1: Clustered Columnstore indexes

By default, a dedicated SQL pool creates a clustered columnstore index when no index options are specified on a table. Clustered columnstore tables offer the highest level of data compression and the best overall query performance. As the data is stored in row groups and column segments hence queries often select only a few columns from a table, which reduces total I/O from the physical media.

Clustered column store tables will outperform clustered index or heap tables and are usually the best choice for large tables. For these reasons, a clustered column store is the best place to start when you are unsure of how to index your table.

Rowgroup

A rowgroup is a group of rows that are compressed into columnstore format at the same time. A rowgroup usually has the maximum number of rows per rowgroup, which is 1,048,576 rows.

Column segment

A column segment is a column of data from within the rowgroup. Each rowgroup has one column segment for every column in the table. Each column segment is compressed together and stored on physical media.

Reasons why clustered column store indexes tables are so fast:

- Columns store values from the same domain and commonly have similar values, which result in high compression rates. I/O bottlenecks in your system are minimized or eliminated, and the memory footprint is reduced significantly.

- High compression rates improve query performance by using a smaller in-memory footprint. In turn, query performance can improve because SQL Server can perform more query and data operations in memory.

- Batch execution improves query performance, typically by two to four times, by processing multiple rows together.

- Queries often select only a few columns from a table, which reduces total I/O from the physical media.

2: Heap tables

When you are temporarily landing data in a dedicated SQL pool, you may find that using a heap table makes the overall process faster. Heaps can be used as staging tables for large, unordered insert operations. Because data is inserted without enforcing a strict order, the insert operation is usually faster than the equivalent insert into a clustered index.

Partitioning

Partitioning data is effective for maintaining your data through partition switching or optimizing scans with partition elimination. Table partitions enable you to divide your data into smaller groups of data. In most cases, table partitions are created on a date column. Partitioning is supported on all dedicated SQL pool table types, including clustered column store, clustered index, and heap. Partitioning is also supported on all distribution types, including both hash and round-robin distribution.

Partitioning can help data maintenance and query performance. Whether it helps both or just one is dependent on how data is loaded and whether the same column can be used for both purposes, since partitioning can only be done on one column.

Implementation and best practices

We have investigated architecture components, distribution, index type, and partitioning, now we will see how we can implement these concepts while migrating your data warehouse to the Azure Synapse Analytics.

I will discuss only the consumption/base layer physical modelling and the strategy for ETL landing/staging will be discussed in a separate blog article. Before I started migrating my data from on-premises to the Azure Synapse Analytics environment, I extracted query execution data report for 6 months to analyze how the query pattern and how end users consume the data. The following steps outline my approach.

1: Which column users used for joins to choose the right distribution key (Single Column Distribution vs Multi column distribution)

|

Single column distribution |

Multi-column distribution |

|

The column is frequently used in equality predicate conditions and has a uniform distribution. |

The combination of columns is used in equality predicate conditions and the single column does not have a uniform distribution. |

|

Single column can distribute rows evenly across the 60 distributions. |

Single column introduces a skew and the combination of column distribute rows evenly across the 60 distributions. |

|

Single column consist mainly of unique, distinct values and is most frequently used to access rows. |

If the single column has a small number of distinct values that are repeated frequently or has many nulls values. |

Note: Multicolumn distribution is in public preview and should not be used for a Production workload.

2: What are the filter criteria, to choose the right partitioning key for my data model and to evaluate the need of a secondary index.

3: Which dimension tables qualify for the replicated table distribution strategy.

4: Which tables are best suited to store as HEAP or Clustered Index.

The data below shows Order_date is the right candidate for the partitioning key (used as filter criteria) and Order_id is the right candidate for the distribution key (often used to access data in join condition). I performed a comparable task for each of the Fact tables.

Based on the extracted report I divided the consumption layer into four categories:

1: Fact tables with partitioning key

2: Fact tables without partitioning key

3: Tables with less than 60 million rows

4: Dimensions with less than 2 GB

Let us discuss all four approaches and their use cases.

1: Large Fact tables (with partitioning column)

To optimize performance for large Fact tables, it's recommended to use a Hash Distributed Clustered Columnstore Index along with a partitioning column. However, it's important not to excessively partition the table. Having too many partitions can reduce the effectiveness of clustered columnstore indexes if each partition has fewer than 1 million rows. Dedicated SQL pools automatically partition your data into 60 databases. So, if you create a table with 100 partitions, the result will be 6000 partitions.

If a table contains fewer than the recommended minimum number of rows per partition, consider using fewer partitions in order to increase the number of rows per partition.

Example:

Fact table: Order

Distribution key: Order ID (unique)

Partition Key: Order Date with yearly partition for 10 years i.e., 10 partition/distribution.

Hence, to make the table CCI table with order date and partition column the table should have at least 10 (partition) x 60 (data slices) X 1 million (optimal row group) = 600 million rows.

2: Fact tables without partitioning column

For Fact table > 60 million records, create them as Hash Distributed Clustered Columnstore index without partitioning and make sure you choose the right distribution key to distribute the data evenly across all data slices to reach the optimal threshold of 1 million rows/rowgroup.

3: Tables with less than 60 million rows

For tables that are less than 60 million and greater than 2 GB choose the Hash Distributed Heap/Clustered Index table. As the size of the table is small and will result in smaller rowgroups hence, it will not benefit in performance if created as CCI table and will perform better if stored as a row store table or clustered index table.

Note: It might be possible in some cases you will go for CCI table even with less than 60 million rows based on table width and table usage.

4: Dimension tables with less than 2 GB

For Dimension tables, which are not updated often and are less than 2GB in size, create them as replicated tables. A replicated table has a full copy of the table accessible on each Compute node. Replicating a table removes the need to transfer data among Compute nodes before a join or aggregation. Since the table has multiple copies, replicated tables work best when the table size is less than 2 GB compressed.

Before finalizing the replicated table, please consider the below points.

1: Copy to cache happens on first access

- Queries will execute against the Round-Robin table, until the copy to cache completes.

2: Cached copy invalidated on

- Scale operation

- Any data modification in the table

Best practices

- Indexing cheat sheet

- Distribution cheat sheet

- On top of a clustered columnstore index, you might want to add a non-clustered index to a column heavily used for filtering.

- Be careful how you manage the memory on a table with CCI. When you load data, you want the user (or the query) to benefit from a large resource class. Make sure to avoid trimming and creating many small compressed row groups.

- For CCI, slow performance can happen due to poor compression of your row groups. If this occurs, rebuild or reorganize your CCI. You want at least 100,000 rows per compressed row groups. The ideal is one million rows in a row group. Refer to the Optimizing clustered columnstore indexes for more details.

- Do not over-partition a CCI table.

Our team publishes blog(s) regularly and you can find all these blogs at https://aka.ms/synapsecseblog. For deeper level understanding of Synapse implementation best practices, please refer our Success by Design (SBD) site: https://aka.ms/Synapse-Success-By-Design .