This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The Responsible AI (RAI) dashboard is a core part of the Responsible AI Toolbox, a suite of tools for a customized, responsible AI experience with unique and complementary functionalities. Its capabilities are available both as open source on GitHub and through the Azure Machine Learning platform.

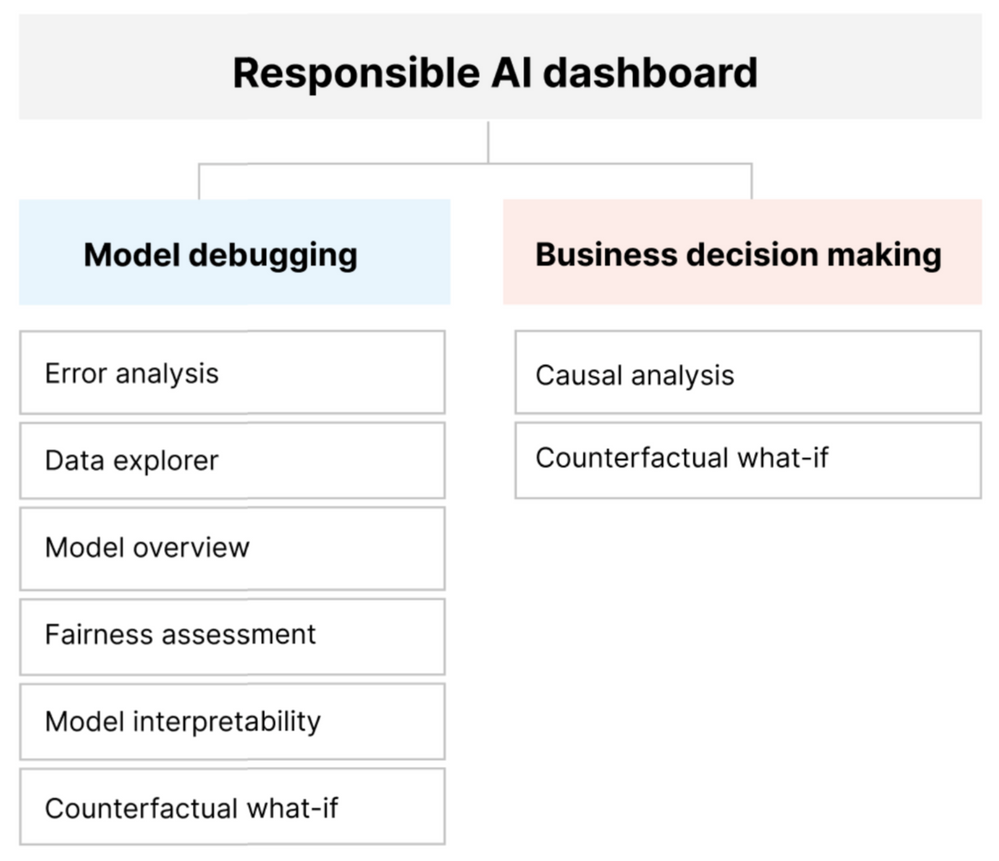

The Responsible AI dashboard brings together a variety of responsible AI capabilities that are meant to easily communicate with each other to facilitate deep-dive investigations, without having to save and reload results in different dashboards. Features include model statistics, data explorer, error analysis, model interpretability, counterfactual analysis, and causal inference.

In this blog series tutorial, we will walk you through how to create an Azure Machine Learning workspace and how to access RAI components including: Error Analysis, Explanations, Fairness Assessment, Counterfactual/What-If, Causal and Scorecard capabilities.

Throughout this series, we’ll use a classification model trained on the publicly available Diabetes Hospital Readmission dataset to illustrate how to use each of the RAI components for further analysis and debugging of your model. The RAI dashboard is built on some of the industry’s leading open-source tools for model debugging, assessment, and responsible decision-making that we’ve been instrumental in developing — including ErrorAnalysis, InterpretML, Fairlearn, DiCE, and EconML.

InterpretML helps data scientists and AI developers access state-of-the-art glassbox (inherently interpretable) models, while also helping them better interpret their opaque-box machine learning models. This leads to a better understanding of how the model is making its predictions. For example, it can help provide answers to questions like, “what factors have influenced the predictions the most?” or “why did my model make a mistake?” or “does my model satisfy compliance and audit requirements?”
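To make the glassbox idea concrete, here is a minimal sketch in plain Python rather than InterpretML's own API. It assumes a hypothetical additive risk score in which each feature contributes weight × value, so the prediction can be read off term by term. The feature names and weights are invented for illustration and are not taken from the dataset or from any trained model.

```python
# Hypothetical weights for a tiny additive "glassbox" risk score.
# The values are exact binary fractions, so the arithmetic below is exact.
WEIGHTS = {
    "prior_inpatient_visits": 0.5,
    "num_medications": 0.125,
    "num_emergency_visits": 0.25,
}


def explain(sample):
    """Return (feature, contribution) pairs, largest absolute effect first."""
    contributions = {name: WEIGHTS[name] * value for name, value in sample.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# One made-up patient record.
patient = {"prior_inpatient_visits": 3, "num_medications": 8, "num_emergency_visits": 1}

ranked = explain(patient)
score = sum(value for _, value in ranked)

for name, value in ranked:
    print(f"{name}: {value:+.3f}")
print(f"total score: {score}")
```

Because the score is a plain sum, the ranked list directly answers “what factors have influenced the prediction the most?” for this one record. Opaque-box models need dedicated explainers to approximate the same kind of breakdown.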

Fairlearn helps identify fairness issues by exploring groups defined in terms of sensitive attributes such as age, gender, ethnicity, and disabilities. In addition, it reveals disparities in how the model performs with one group of people versus another. An example of this would be a model that prevents one group’s access to products, services, or resources. Fairlearn also covers a set of state-of-the-art mitigation algorithms to help address the observed fairness issues.
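The group comparison described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Fairlearn's API (Fairlearn provides `MetricFrame` for this kind of breakdown). The labels, predictions, and age groups below are made-up values.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each value of a sensitive attribute."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Made-up labels and predictions for eight patients in two age groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
age_group = ["<60", "<60", "<60", "<60", "60+", "60+", "60+", "60+"]

per_group = accuracy_by_group(y_true, y_pred, age_group)
gap = accuracy_gap(per_group)
print(per_group)
print(gap)
```

A large gap between groups is the kind of disparity a fairness assessment is meant to surface. Which metric to compare (accuracy, false negative rate, selection rate) depends on the harm you are trying to avoid.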

DiCE helps not only ML (machine learning) engineers but also business decision-makers generate alternative datapoints with different outcomes from a model. DiCE outputs counterfactual datapoints, which are similar datapoints with different or opposite outcomes. For example, imagine a model has predicted that a customer will not be able to pay off a loan and hence should be denied a loan application. The counterfactual analysis generates the closest datapoints to that customer with the opposite prediction outcome from the model (in this case, loan approval), so the loan officer can provide recommendations like “increase your income by 2K” or “pay off 3K of your credit card debt” to help the customer change the model’s prediction the next time they apply.
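The loan example can be sketched as a brute-force search in plain Python. This is not how DiCE works internally and does not use its API. It assumes a hypothetical hand-written approval rule standing in for a trained model, and it changes only one feature at a time to find the smallest change that flips the outcome.

```python
def approve(income_k, debt_k):
    """Hypothetical loan rule standing in for a trained model."""
    return 3 * income_k - 2 * debt_k >= 100


def single_feature_counterfactuals(income_k, debt_k, max_change=10):
    """Find the smallest one-feature change (in thousands) that flips the decision."""
    original = approve(income_k, debt_k)
    suggestions = {}
    for step in range(1, max_change + 1):
        # Try raising income by `step` while holding debt fixed.
        if "income_k" not in suggestions and approve(income_k + step, debt_k) != original:
            suggestions["income_k"] = step
        # Try paying off `step` of debt while holding income fixed.
        if (
            "debt_k" not in suggestions
            and debt_k - step >= 0
            and approve(income_k, debt_k - step) != original
        ):
            suggestions["debt_k"] = -step
    return suggestions


# A made-up applicant who is currently denied: 3 * 38 - 2 * 10 = 94 < 100.
denied = approve(38, 10)
changes = single_feature_counterfactuals(38, 10)
print(denied)
print(changes)
```

For this applicant, the search returns an income increase of 2K or a debt reduction of 3K, mirroring the recommendations in the example above. A real counterfactual generator also has to handle many features at once, keep the suggested changes feasible, and offer a diverse set of options.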

EconML helps business decision-makers explore causal relationships between factors and real-world outcomes. It can help answer questions such as “how would holidays impact our monthly revenue?” It can also help answer real-world what-if scenarios such as “what if we change the product pricing? Would that lead to higher sales?” This type of analysis can help expose potential positive or negative outcomes if action is taken.
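To illustrate why causal analysis differs from simply comparing averages, here is a minimal sketch in plain Python. It does not use EconML, which offers far more capable estimators. It assumes made-up sales records in which promotions ran mostly during holidays, so the holiday acts as a confounder, and it adjusts for that by comparing treated and untreated days within each segment.

```python
from collections import defaultdict

# Made-up records: (segment, promotion_ran, revenue). Promotions ran mostly on holidays.
records = [
    ("weekday", True, 12), ("weekday", False, 10),
    ("weekday", False, 10), ("weekday", False, 10),
    ("holiday", True, 22), ("holiday", True, 22),
    ("holiday", True, 22), ("holiday", False, 20),
]


def mean(values):
    return sum(values) / len(values)


def naive_effect(rows):
    """Difference in average revenue, ignoring the segment entirely."""
    treated = [revenue for _, promo, revenue in rows if promo]
    control = [revenue for _, promo, revenue in rows if not promo]
    return mean(treated) - mean(control)


def stratified_effect(rows):
    """Average the within-segment differences, weighted by segment size."""
    by_segment = defaultdict(list)
    for segment, promo, revenue in rows:
        by_segment[segment].append((promo, revenue))
    weighted_sum = 0.0
    for segment_rows in by_segment.values():
        treated = [revenue for promo, revenue in segment_rows if promo]
        control = [revenue for promo, revenue in segment_rows if not promo]
        weighted_sum += (mean(treated) - mean(control)) * len(segment_rows)
    return weighted_sum / len(rows)


naive = naive_effect(records)
adjusted = stratified_effect(records)
print(naive)
print(adjusted)
```

In this toy data, the naive comparison credits the promotion with revenue that is really due to holidays, while the stratified estimate recovers the smaller within-segment effect. Questions like “what if we change the product pricing?” need this kind of adjustment before the answer can guide a decision.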

All these open-source tools help assess and debug models, enabling ML professionals and business stakeholders to analyze models and make better decisions to produce more responsible AI systems. The RAI dashboard is available as part of the Responsible AI Toolbox via open source or in the Azure Machine Learning platform. Now that these capabilities are available within Azure Machine Learning, ML engineers no longer have to worry about the tedious task of using the open-source libraries separately, in disparate code instances, to troubleshoot how performant, reliable, or fair their models are.

Let’s get started by creating an Azure Machine Learning workspace that we will later use to train a model and create an RAI dashboard.

Prerequisites

- Log in or sign up for a free Azure account

- Create an Azure ML workspace by completing the “Create a Workspace” section and clicking the “Review + Create” button. Note: You can skip the remaining Networking and Advanced sections.

Working with Azure ML workspace

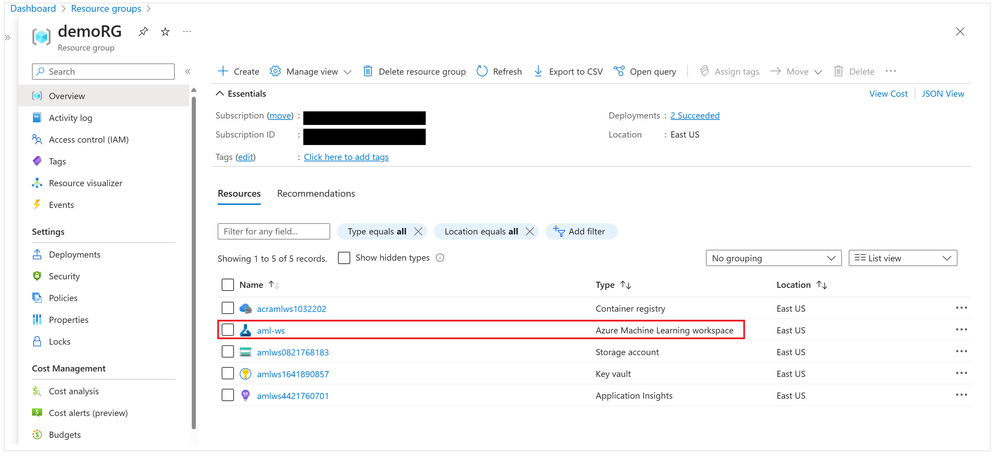

Once your Azure ML workspace has been created, click on “Go to Resource.” You should see all the resources that were created with your workspace instance. Click on the Azure ML workspace name to open the workspace page.

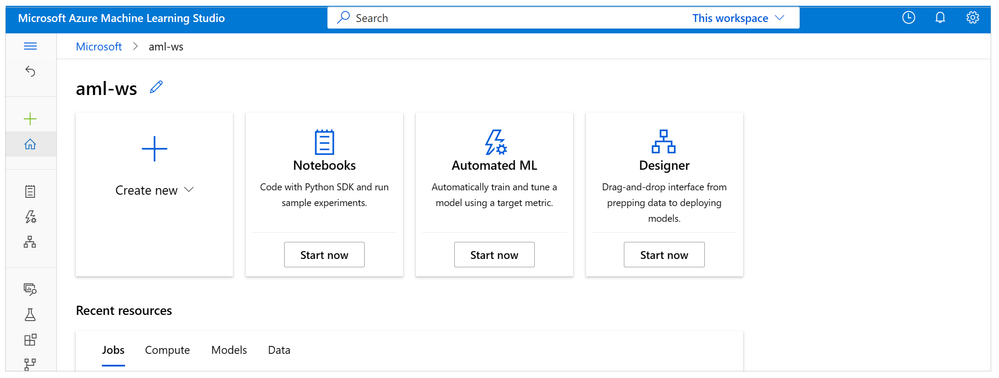

To navigate to the Azure Machine Learning Studio where you’ll be able to train, debug, deploy and manage your model, click on the “Launch Studio” button.

Azure Machine Learning Studio has several useful features for you to complete your end-to-end ML lifecycle tasks.

Verify RAI Insights components

The RAI components are prebuilt and available through a system registry within Azure Machine Learning. To verify that the RAI components are accessible in Azure Machine Learning Studio, click on the curved back-arrow icon at the top left of the workspace studio navigation bar.

On the Azure ML studio welcome page, click on the “Components” tab on the left-hand side of the navigation bar. To view the list of RAI components in the system registry, type “RAI” in the search box to filter the results to responsible AI components.

Great, you are now ready to train a model for the RAI dashboard in the next tutorial!

Stay tuned for Part 2 of the tutorial…