This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

The ChatGPT model, gpt-35-turbo, and the GPT-4 models, gpt-4 and gpt-4-32k, are now available in Azure OpenAI Service in preview. The GPT-4 models are currently in limited preview and require a separate access application, whereas the ChatGPT model is available to everyone who has already been approved for access to Azure OpenAI.

These new models were specifically designed for multi-turn conversations and come with an updated API to facilitate conversational dialogue. When using these models on Azure OpenAI, there are two important changes to keep in mind: the introduction of the Chat Completions API (preview) and changes to how we version models. This blog post walks through both changes and what they mean for you when getting started with the ChatGPT and GPT-4 models.

The Chat Completions API (preview)

The Chat Completions API (preview) is a new API introduced by OpenAI and designed for chat models such as gpt-35-turbo, gpt-4, and gpt-4-32k. With this API, you pass in your prompt as an array of messages instead of as a single string. Each message in the array is a dictionary that contains a “role” and some “content”. The “role” specifies who the message is from (system, user, or assistant), while the “content” is the text of the message itself.

```json
[
  {"role": "system", "content": "You are an AI assistant."},
  {"role": "user", "content": "What is few shot learning?"}
]
```

This gives you an easy way to keep track of the conversation and include previous messages in the prompt for context. You can also use this API in the OpenAI Python SDK as shown below.

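```python
import os

import openai  # pre-1.0 openai package; openai.ChatCompletion was removed in v1.0

openai.api_type = "azure"
openai.api_base = "https://{resource-name}.openai.azure.com/"  # replace {resource-name} with your resource name
openai.api_version = "2023-03-15-preview"
openai.api_key = os.getenv("OPENAI_API_KEY")

response = openai.ChatCompletion.create(
    engine="gpt-35-turbo",  # on Azure OpenAI, this is the name of your model deployment
    messages=[
        {"role": "system", "content": "You are an AI assistant."},
        {"role": "user", "content": "What is few shot learning?"},
    ],
)

# The assistant's reply is in the first choice of the response
print(response["choices"][0]["message"]["content"])
```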
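To keep context across turns, you append each assistant reply and the next user message to the same array and send the whole array with the next request. The sketch below shows only that bookkeeping in plain Python; `add_turn` is a hypothetical helper, and the assistant reply is hard-coded where a real application would read it from `response["choices"][0]["message"]["content"]`.

```python
def add_turn(messages, role, content):
    """Return a new message list with one more message appended (hypothetical helper)."""
    if role not in ("system", "user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    return messages + [{"role": role, "content": content}]


conversation = [{"role": "system", "content": "You are an AI assistant."}]
conversation = add_turn(conversation, "user", "What is few shot learning?")

# Hard-coded stand-in for the reply returned by the Chat Completions API.
conversation = add_turn(
    conversation,
    "assistant",
    "Few-shot learning means giving the model a few examples in the prompt.",
)

# The follow-up is sent together with all earlier messages,
# so the model can work out what "it" refers to.
conversation = add_turn(conversation, "user", "Can you give an example of it?")

for message in conversation:
    print(f'{message["role"]}: {message["content"]}')
```

Returning a new list rather than mutating the input keeps earlier snapshots of the conversation intact, which can be convenient when retrying a turn.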

NOTE: gpt-35-turbo can also be used with the existing Text Completions API. However, we recommend using the Chat Completions API going forward. If you do use gpt-35-turbo with the Text Completions API, make sure to format your prompts using Chat Markup Language (ChatML).
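As a rough illustration of ChatML, the sketch below converts a Chat Completions-style message array into a single prompt string, wrapping each message in `<|im_start|>` and `<|im_end|>` tokens and leaving an open assistant turn for the model to complete. `to_chatml` is a hypothetical helper, and the exact whitespace around the tokens is an assumption that should be checked against the current Azure OpenAI ChatML documentation.

```python
def to_chatml(messages):
    """Convert a list of {"role", "content"} dicts into a ChatML prompt (hypothetical helper)."""
    prompt = ""
    for message in messages:
        # Each message becomes: <|im_start|>{role}\n{content}\n<|im_end|>\n
        prompt += f"<|im_start|>{message['role']}\n{message['content']}\n<|im_end|>\n"
    # Open an assistant turn so the model writes the next reply.
    prompt += "<|im_start|>assistant\n"
    return prompt


messages = [
    {"role": "system", "content": "You are an AI assistant."},
    {"role": "user", "content": "What is few shot learning?"},
]
print(to_chatml(messages))
```

When sending a prompt like this through the Text Completions API, "<|im_end|>" is typically passed as a stop sequence so the model stops at the end of its own turn.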

Model versioning

OpenAI plans to make new versions of the ChatGPT and GPT-4 models available regularly. As a result, you’ll also see some changes to how we version models in Azure OpenAI.

When you create a deployment of gpt-35-turbo, you’ll also specify the version of the model that you want to use. Currently, only version "0301" is available, and it is used by default. Soon, you’ll be able to specify that you want to use the "latest" version of the model. If you choose "latest", we’ll always serve your requests with the newest version of the model, so you won’t have to upgrade to newer versions yourself. The same will apply to GPT-4, whose first model version is "0314".

Another big part of this change is that models will have faster deprecation timelines than in the past, so that we can continue to offer you the latest versions of these models. The "0301" version of the ChatGPT model and the "0314" versions of the GPT-4 models will be deprecated on August 1, 2023, in favor of newer versions. You can find the deprecation dates for models on our Models page and via our Models API.

It’s important to know which model version you’re using and when that version will be deprecated, so that you can move your application to a newer version before the deprecation date.
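One way to act on this is a small check in your deployment tooling that warns when a pinned version is close to deprecation. The sketch below hard-codes the August 1, 2023 dates stated in this post into a hypothetical local lookup table; the Models page and Models API remain the source of truth, and the 30-day warning window is an arbitrary assumption.

```python
from datetime import date

# Hypothetical local lookup built from the dates announced in this post.
DEPRECATION_DATES = {
    ("gpt-35-turbo", "0301"): date(2023, 8, 1),
    ("gpt-4", "0314"): date(2023, 8, 1),
    ("gpt-4-32k", "0314"): date(2023, 8, 1),
}


def days_until_deprecation(model, version, today):
    """Return the days left before (model, version) is deprecated, or None if unknown."""
    deprecation = DEPRECATION_DATES.get((model, version))
    if deprecation is None:
        return None
    return (deprecation - today).days


def needs_upgrade(model, version, today, warning_days=30):
    """Flag a model version that is deprecated or within warning_days of deprecation."""
    remaining = days_until_deprecation(model, version, today)
    return remaining is not None and remaining <= warning_days


print(days_until_deprecation("gpt-35-turbo", "0301", date(2023, 7, 1)))  # 31
print(needs_upgrade("gpt-4", "0314", date(2023, 7, 15)))  # True
```

Passing `today` explicitly, rather than calling `date.today()` inside the functions, keeps the check easy to test.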

Getting started

There are several ways to get started with the ChatGPT and GPT-4 models, including through Azure OpenAI Studio, through our samples repo, and through an end-to-end chat solution.

Azure OpenAI Studio

The easiest way to get started with these models is through our new Chat playground in the Azure OpenAI Studio. You can customize your system message to tailor the model to your use case, then test out the model, and continue to iterate from there.

ChatGPT and GPT-4 Samples

You can also check out our latest samples to see details on how to use the ChatGPT and GPT-4 models, such as managing the flow of a conversation: https://github.com/Azure/openai-samples

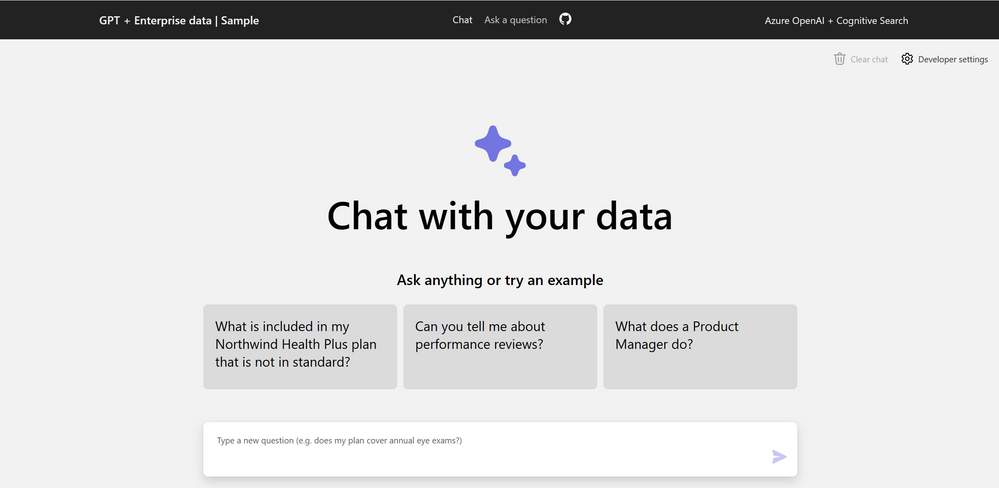
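Managing the flow of a conversation usually includes keeping the message array within the model’s context window as it grows. A minimal sketch, assuming the first message is the system message and estimating tokens at roughly four characters each; `trim_history` and `estimate_tokens` are hypothetical helpers, and a real tokenizer such as tiktoken gives accurate counts.

```python
def estimate_tokens(text):
    # Rough assumption: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Keep the system message plus the most recent messages that fit in max_tokens."""
    system, history = messages[0], messages[1:]
    budget = max_tokens - estimate_tokens(system["content"])
    kept = []
    # Walk backwards so the newest messages are kept first.
    for message in reversed(history):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return [system] + list(reversed(kept))


conversation = [
    {"role": "system", "content": "You are an AI assistant."},
    {"role": "user", "content": "What is few shot learning?" * 10},
    {"role": "assistant", "content": "It means learning from a few examples."},
    {"role": "user", "content": "Give me an example."},
]
trimmed = trim_history(conversation, max_tokens=30)
print([m["role"] for m in trimmed])
```

Dropping the oldest turns first is only one strategy; summarizing older turns into a shorter message is another common choice.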

Using ChatGPT and GPT-4 models with your data

For many scenarios, you may also need to infuse your own data into the prompts for ChatGPT and GPT-4. We’ve published an end-to-end example showing how you can combine Azure Cognitive Search and the chat models to build a curated chat experience with citation support and additional functionality. You can learn more about this pattern in the accompanying blog post, and you can find the code for the demo here: https://github.com/azure-samples/azure-search-openai-demo.
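The core of this pattern is placing retrieved content into the prompt and asking the model to cite it. The sketch below is a simplified illustration, not the demo’s actual code: it assumes the search step has already returned a hypothetical `sources` dictionary mapping a source name to its text, builds the message array, and pulls bracketed citations back out of an answer.

```python
import re


def build_grounded_messages(question, sources):
    """Build chat messages that ask the model to answer only from the given sources."""
    source_text = "\n".join(f"{name}: {text}" for name, text in sources.items())
    system_message = (
        "Answer the question using only the sources below. "
        "Cite the source name in square brackets after each fact, for example [info1.txt]. "
        "If the sources don't contain the answer, say that you don't know.\n\n"
        "Sources:\n" + source_text
    )
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": question},
    ]


def extract_citations(answer):
    """Return bracketed source names from an answer, without duplicates, in first-seen order."""
    return list(dict.fromkeys(re.findall(r"\[([^\]]+)\]", answer)))


# Hypothetical retrieved data standing in for search results.
messages = build_grounded_messages(
    "Does my plan cover eye exams?",
    {"benefits.txt": "The standard plan covers one eye exam per year."},
)
print(messages[0]["content"])
print(extract_citations("Yes, one exam per year is covered [benefits.txt]."))
```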