This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

It has been a breakthrough year for AI technology. Large Language Models (LLMs) such as ChatGPT and Bing Chat, trained on large amounts of public data, have demonstrated an impressive array of skills, from writing poems to generating computer programs, despite not being designed to solve any specific task.

However, even though some users might already feel comfortable sharing personal information such as their social media profiles and medical history with chatbots and asking for recommendations, it is important to remember that these LLMs are still in relatively early phases of development and are generally not recommended for complex advisory tasks such as medical diagnosis, financial risk assessment, or business analysis. Models that can perform these tasks reliably will require innovation both in model architecture (e.g., the ability to train on multimodal data such as images, audio, and video) and in the way specialized, high-quality training data is sourced.

The data that could be used to train the next generation of models already exists, but it is both private (by policy or by law) and scattered across many independent entities: medical practices and hospitals, banks and financial service providers, logistics companies, consulting firms… A handful of the largest of these players may have enough data to create their own models, but startups at the cutting edge of AI innovation do not have access to these datasets.

Confidential Computing

Confidential computing is a foundational technology that can unlock access to sensitive datasets while meeting the privacy and compliance concerns of data providers and the public at large. With confidential computing, data providers can authorize the use of their datasets for specific tasks (verified by attestation), such as training or fine-tuning an agreed-upon model, while keeping the data secret. End users can protect their privacy by checking that inference services do not collect their data for unauthorized purposes. Model providers can verify that the operators of inference services that serve their model cannot extract its internal architecture and weights.

Confidential computing can enable multiple organizations to pool together their datasets to train models with much better accuracy and lower bias compared to the same model trained on a single organization’s data. AI startups can partner with market leaders to train models. In short, confidential computing democratizes AI by leveling the playing field of access to data.

Microsoft has been at the forefront of building an ecosystem of confidential computing technologies and making confidential computing hardware available to customers through Azure. Using Azure Confidential VMs and Confidential Containers based on the latest CPUs from Intel and AMD, organizations are now able to deploy security-sensitive workloads in the cloud and replace the implicit trust in Azure with verifiable security guarantees.

For AI workloads, the confidential computing ecosystem has been missing a key ingredient – the ability to securely offload computationally intensive tasks such as training and inferencing to GPUs. Over the last three years, Microsoft and NVIDIA have collaborated extensively to address this gap, starting with last year’s preview of Confidential GPU VMs based on Ampere Protected Memory Technology.

Azure Confidential Computing with NVIDIA H100 Tensor Core GPUs

With confidential computing as a built-in feature in the new NVIDIA Hopper architecture, the NVIDIA H100 Tensor Core GPU combines the power of accelerated computing and the security of confidential computing for state-of-the-art AI workloads. NVIDIA has unveiled these capabilities in two sessions at GTC this week. The first, “Hopper Confidential Computing: How it Works under the Hood,” covers in detail the new hardware security features, the driver architecture that protects data transfers and kernel launches, and how these new capabilities can be integrated with Azure Confidential VMs. The second, “The Developer’s View to Secure an Application and Data on H100,” explains how to run privacy-preserving applications and the new best practices to follow when developing CC-enabled CUDA applications.

Azure Confidential Computing with NVIDIA H100 marks a significant milestone for confidential computing and privacy-preserving machine learning. In combination with existing confidential computing technologies, it lays the foundations of a secure computing fabric that can unlock the true potential of private data and power the next generation of AI models.

Security Capabilities

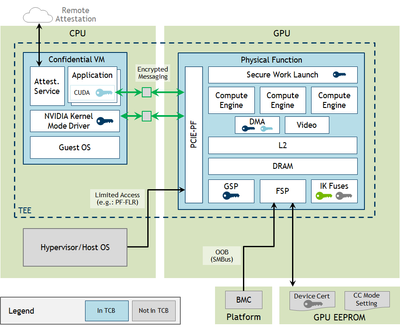

At its core, confidential computing relies on two new hardware capabilities: hardware isolation of the workload in a trusted execution environment (TEE) that protects both its confidentiality (e.g., via hardware memory encryption) and integrity (e.g., by controlling access to the TEE’s memory pages); and remote attestation, which allows the hardware to sign measurements of the code and configuration of a TEE using a unique device key endorsed by the hardware manufacturer.
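The attestation half of this description can be illustrated with a minimal Python sketch. It is not the protocol used by any real TEE: actual hardware signs reports with an asymmetric device key whose certificate chains back to the manufacturer, whereas this sketch substitutes an HMAC over a shared secret so that it runs with only the standard library. The function names (`measure`, `make_report`, `verify_report`) and the report fields are hypothetical.

```python
import hashlib
import hmac
import os


def measure(code: bytes, config: bytes) -> str:
    """Hash the TEE's code and configuration into a single measurement."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(code).digest())
    h.update(hashlib.sha256(config).digest())
    return h.hexdigest()


def make_report(device_key: bytes, measurement: str, nonce: bytes) -> dict:
    """Device side: bind the measurement to the verifier's nonce and sign it.

    HMAC is a stand-in for the asymmetric signature real hardware would use.
    """
    payload = measurement.encode() + nonce
    signature = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return {"measurement": measurement, "nonce": nonce.hex(), "signature": signature}


def verify_report(device_key: bytes, report: dict, expected: str, nonce: bytes) -> bool:
    """Verifier side: check the signature, the nonce, and the measurement."""
    payload = report["measurement"].encode() + bytes.fromhex(report["nonce"])
    recomputed = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    good_signature = hmac.compare_digest(recomputed, report["signature"])
    fresh = report["nonce"] == nonce.hex()
    return good_signature and fresh and report["measurement"] == expected


# Example: the verifier knows which code and configuration it expects.
device_key = os.urandom(32)  # stand-in for the key provisioned at manufacturing
nonce = os.urandom(16)       # chosen by the verifier to prevent replay
expected = measure(b"inference-server-v1", b"debug=off")
report = make_report(device_key, expected, nonce)
print(verify_report(device_key, report, expected, nonce))
```

The nonce ties each report to one verification request, so a report captured earlier cannot be replayed after the TEE's code or configuration has changed.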

To bring this technology to the high-performance computing market, Azure confidential computing has chosen the NVIDIA H100 GPU for its unique combination of isolation and attestation security features, which can protect data during its entire lifecycle thanks to its new confidential computing mode. In this mode, most of the GPU memory is configured as a Compute Protected Region (CPR) and protected by hardware firewalls against access from the CPU and other GPUs. This region is accessible only to the computing and DMA engines of the GPU. To enable remote attestation, each H100 GPU is provisioned with a unique device key during manufacturing. Two new micro-controllers, known as the FSP and GSP, form a trust chain that is responsible for measured boot, for enabling and disabling confidential mode, and for generating attestation reports that capture measurements of all security-critical state of the GPU, including measurements of firmware and configuration registers.

Scalability and Programmability

In addition to isolation and remote attestation, Confidential Computing with H100 GPUs also supports a set of capabilities that enable the GPU TEE to connect with a CPU TEE and to extend across multiple GPUs; these capabilities are critical for building and serving large models. An H100 GPU in confidential mode can be attached to a CPU TEE (such as a Confidential VM or a Confidential Container). Enabling this attachment requires support for direct device attachment into a hardware-isolated VM in Hyper-V and in the Linux vPCIe driver for Hyper-V. Using this mechanism, multiple GPUs (with NVIDIA NVLink technology enabled) can be attached to the same CPU TEE.

The GPU device driver hosted in the CPU TEE attests each of these devices before establishing a secure channel between the driver and the GSP on each GPU. The driver uses this secure channel for all subsequent communication with the device, including the commands to transfer data and to execute CUDA kernels, thus enabling a workload to fully utilize the computing power of multiple GPUs.

Scenarios

Model Confidentiality

Consider a company that wants to monetize its latest medical diagnosis model. If it gives the model to practices and hospitals to use locally, there is a risk that the model will be shared without permission or leaked to competitors. On the other hand, if the model is deployed as an inference service, the risk shifts to the practices and hospitals: the protected health information (PHI) they send to the inference service could be stolen or misused without consent.

Confidential computing can address both risks: it protects the model while it is in use and guarantees the privacy of the inference data. The decryption key of the model can be released only to a TEE running a known public image of the inference server (e.g., the public NVIDIA Triton Inference Server container on Docker Hub). The practices or hospitals can use attestation as evidence to their patients and privacy regulators that PHI remains confidential.
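The key release step can be sketched as a small policy check, shown here in Python. This is an illustrative assumption rather than how any particular key management service works: the claim names (`tee_enabled`, `debug_enabled`, `image_digest`), the allow-list, and the placeholder image string are all hypothetical, and the sketch assumes the attestation report's signature has already been verified before the claims reach this function.

```python
import hashlib
import secrets
from typing import Optional

# Hypothetical allow-list: digests of inference server images the model owner trusts.
# The byte string below is a placeholder, not a real container image digest.
TRUSTED_IMAGE_DIGESTS = {
    hashlib.sha256(b"triton-inference-server:example").hexdigest(),
}


def release_model_key(claims: dict, model_key: bytes) -> Optional[bytes]:
    """Return the model decryption key only if the attested claims satisfy the policy.

    `claims` is assumed to come from an attestation report whose signature
    has already been verified; this function applies the release policy only.
    """
    if not claims.get("tee_enabled", False):
        return None  # workload is not running inside a TEE
    if claims.get("debug_enabled", True):
        return None  # a debuggable TEE could leak the key
    if claims.get("image_digest") not in TRUSTED_IMAGE_DIGESTS:
        return None  # unknown or modified inference server image
    return model_key


# Example: one request from a trusted image, one from an unknown image.
model_key = secrets.token_bytes(32)
good_claims = {
    "tee_enabled": True,
    "debug_enabled": False,
    "image_digest": hashlib.sha256(b"triton-inference-server:example").hexdigest(),
}
print(release_model_key(good_claims, model_key) == model_key)
print(release_model_key({**good_claims, "image_digest": "0" * 64}, model_key))
```

Missing claims are treated as failures (a missing `debug_enabled` defaults to `True`), so an incomplete report never results in a key release.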

Inference/Prompt Confidentiality

With the massive popularity of conversational models like ChatGPT, many users have been tempted to use AI for increasingly sensitive tasks: writing emails to colleagues and family, asking about their symptoms when they feel unwell, asking for gift suggestions based on the interests and personality of a person, among many others. Because the conversation feels so lifelike and personal, offering private details feels more natural than in search engine queries. However, ChatGPT is clear in its FAQ that all conversations are collected and that they can be reviewed by OpenAI employees and used to train further models. In short, chatbots have the potential to be a major privacy risk.

With the combination of CPU TEEs and Confidential Computing in NVIDIA H100 GPUs, it is possible to build chatbots such that users retain control over their inference requests and prompts remain confidential even to the organizations deploying the model and operating the service.

Fine-Tuning with Private Data

As previously mentioned, the ability to train models with private data is a critical feature enabled by confidential computing. However, training models from scratch is difficult and often starts with a supervised learning phase that requires a lot of annotated data. It is often much easier to start from a general-purpose model trained on public data and fine-tune it with reinforcement learning on more limited private datasets, possibly with domain experts rating the model outputs on synthetic inputs.

Multi-party Training

The ability for mutually distrusting entities (such as companies competing for the same market) to come together and pool their data to train models is one of the most exciting new capabilities enabled by confidential computing on GPUs. The value of this scenario has been recognized for a long time and led to the development of an entire branch of cryptography called secure multi-party computation (MPC). However, due to the large overhead both in terms of computation for each party and the volume of data that must be exchanged during execution, real-world MPC applications are limited to relatively simple tasks (see this survey for some examples).

Federated learning was created as a partial solution to the multi-party training problem. It assumes that all parties trust a central server to maintain the model’s current parameters. All participants locally compute gradient updates based on the current parameters of the models, which are aggregated by the central server to update the parameters and start a new iteration. Although the aggregator does not see each participant’s data, the gradient updates it receives reveal a lot of information.
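The iteration described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production federated learning framework: the model has a single parameter `w`, the loss is mean squared error, the aggregator takes an unweighted average of the gradients, and all function names are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pairs for a one-parameter model y = w * x


def local_gradient(w: float, data: List[Sample]) -> float:
    """Participant side: gradient of mean squared error on local data only."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def aggregate(gradients: List[float]) -> float:
    """Server side: average the gradient updates received from participants."""
    return sum(gradients) / len(gradients)


def federated_round(w: float, datasets: List[List[Sample]], lr: float) -> float:
    """One iteration: broadcast w, collect local gradients, update w."""
    gradients = [local_gradient(w, d) for d in datasets]
    return w - lr * aggregate(gradients)


# Two participants whose private data both follow y = 3x.
datasets = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]]
w = 0.0
for _ in range(200):
    w = federated_round(w, datasets, lr=0.05)
print(round(w, 3))
```

The aggregator never receives the `(x, y)` samples, but it does receive every participant's gradient. For the second participant, who holds a single sample, that gradient is a direct function of the sample, which is one small illustration of how much a gradient update can reveal.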

Confidential computing with GPUs offers a better solution to multi-party training, as no single entity is trusted with the model parameters and the gradient updates. Instead, participants trust a TEE to correctly execute the code (measured by remote attestation) they have agreed to use – the computation itself can happen anywhere, including on a public cloud.

Use cases that require federated learning (e.g., for legal reasons, if data must stay in a particular jurisdiction) can also be hardened with confidential computing. For example, trust in the central aggregator can be reduced by running the aggregation server in a CPU TEE. Similarly, trust in participants can be reduced by running each participant’s local training in confidential GPU VMs, ensuring the integrity of the computation. By ensuring that each participant commits to their training data, TEEs can improve transparency and accountability, and act as a deterrent against attacks such as data poisoning, model poisoning, and the use of biased data.
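The text does not say how a participant would commit to training data. One common approach, assumed here purely for illustration, is a salted hash commitment: the participant publishes a digest before training, and the code inside the TEE later checks that the data it is given matches that digest. The function names below are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import List, Tuple


def _digest(records: List[bytes], salt: bytes) -> str:
    """Hash the salt followed by the hash of each record, in order."""
    h = hashlib.sha256(salt)
    for record in records:
        # Hashing each record first keeps record boundaries unambiguous.
        h.update(hashlib.sha256(record).digest())
    return h.hexdigest()


def commit(records: List[bytes]) -> Tuple[str, bytes]:
    """Participant side: return (commitment, salt). Publish the commitment, keep the salt."""
    salt = secrets.token_bytes(16)
    return _digest(records, salt), salt


def verify(commitment: str, records: List[bytes], salt: bytes) -> bool:
    """TEE side: check that the data supplied for training matches the commitment."""
    return hmac.compare_digest(commitment, _digest(records, salt))


# Example: swapping in a different record after committing is detected.
records = [b"patient-001,diagnosis-a", b"patient-002,diagnosis-b"]
commitment, salt = commit(records)
print(verify(commitment, records, salt))
print(verify(commitment, [records[0], b"poisoned-record"], salt))
```

The random salt prevents others from confirming guesses about small datasets from the published commitment alone, while any later change to the records changes the digest.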

Conclusion

Confidential computing is emerging as an important guardrail in the Responsible AI toolbox. We look forward to many exciting announcements that will unlock the potential of private data and AI, and we invite interested customers to sign up for the preview of confidential GPUs.

Further reading

- Sign up to the Azure limited preview of Confidential GPU VMs on A100

- Powering the next generation of trustworthy AI in a confidential cloud using NVIDIA GPUs

- Hopper Confidential Computing: How it Works under the Hood

- Azure previews powerful and scalable virtual machine series to accelerate generative AI

- What Is Confidential Computing? NVIDIA Blog