This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Full credit to:

Samuel Partee, Principal Applied AI Engineer, Redis

Kyle Teegarden, Senior Product Manager, Azure Cache for Redis

Introduction

Imagine that you are a vehicle maintenance technician and a classic convertible just rolled into your shop. You’re about to tighten a few bolts in the engine when you realize you have no idea what the torque specification is. In the past, you had to spend hours digging up the right manual and finding the right page. But today, you have access to a semantic answer engine that is loaded with maintenance manuals for every car produced in the last fifty years. A simple query rapidly pulls up the correct page in the relevant manual and gives you the answer—36 ft. lbs. of torque.

This scenario is no longer science fiction. With the rapid advances in large language models and artificial intelligence led by organizations like OpenAI, opportunities to transform how we work are virtually unlimited. This post will focus on one of the most exciting areas in machine learning for enterprises: using embeddings APIs to analyze content and vector similarity search (VSS) to find the most closely related and relevant content. From allowing retail customers to find new products through a visual similarity search, to providing workers an intelligent guide to answer their health insurance questions, to enabling more efficient documentation search for software applications, semantic answer engines can use AI to solve problems today.

Vector Embeddings and Search

Vector embeddings are numerical representations of data that can be used for a variety of applications. They are highly flexible and can represent different types of data, including text, audio, images, and videos. Vector embeddings have many practical applications, including reverse image search, recommendation systems, and, most recently, generative AI. While vector embeddings are usually smaller than the data from which they were created, they are packed with information. This density is made possible by AI models like GPT, which map input data to points in a numerical space sometimes called a "latent space". One can think of a vector embedding as the opinion of a model about some input data. Because they are compact yet high-dimensional, vector embeddings are well suited to comparing unstructured data.

Many computer vision models can detect the presence of a plane in the sky or classify cat versus dog. However, using such models directly to discern the differences among 1 million images of cats is computationally impractical. Instead, the "knowledge" of these models can first be extracted into vector embeddings. With the vector representation, simple and computationally inexpensive geometry can be used to calculate the similarity, or dissimilarity, of any image to all the images in the dataset. This process is known as vector similarity search.

A brute-force process for vector similarity search can be described as follows:

1. The dataset is transformed into a set of vector embeddings using an appropriate algorithm.

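2. The vectors are placed into a search index. (A flat index supports the exhaustive comparison described here; an index like HNSW replaces it with a faster approximate search.)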

3. A query vector is generated to represent the user's search query.

4. A distance metric, like cosine similarity, is used to calculate the distance between the query vector and each vector in the dataset.

5. The top n vectors are returned as the search results.
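
The steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than production code: the three-dimensional vectors, their labels, and the function names are made up for the example, and a real embedding model would produce vectors with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def brute_force_search(query, dataset, top_n=2):
    """Score every stored vector against the query and return the top_n best."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in dataset.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [(key, score) for score, key in scored[:top_n]]

# Toy "embeddings" (hypothetical values chosen for illustration only).
dataset = {
    "tabby cat": [0.9, 0.1, 0.0],
    "siamese cat": [0.8, 0.2, 0.1],
    "airplane": [0.0, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]  # stands in for the embedding of the user's query

results = brute_force_search(query, dataset)
for key, score in results:
    print(f"{key}: {score:.3f}")
```

Because every stored vector is compared with the query, the cost grows linearly with the size of the dataset, which is what a search index is meant to avoid.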

For a simple introduction to vector similarity search, including code snippets, see this article.

Vector Databases

Organizations use vector databases to deploy this capability in production services and applications. Vector databases typically offer the same CRUD operations you would expect from any database, but they also have specialized structures, called search indices, dedicated to organizing the vector embeddings described above. A search index organizes vectors so that searching through them costs less computation than the brute-force approach described above.

An example of this is the Hierarchical Navigable Small World (HNSW) algorithm. An HNSW index is a fully graph-based, incremental structure for approximate K-nearest-neighbor search (K-ANNS). Its search cost scales roughly logarithmically with the number of vectors, rather than linearly as in the brute-force approach.

Redis uses the HNSW algorithm for secondary indexing of the contents within Redis using the RediSearch module. Multiple distance metrics, such as inner product, Euclidean distance, and the cosine similarity mentioned previously, can be used for the similarity calculation. With this functionality, and the traditional search capabilities of RediSearch, developers can use powerful vector embedding representations to create intelligent, real-time applications.
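
To make this concrete, the sketch below assembles the raw RediSearch commands for creating an HNSW vector index and for running a K-nearest-neighbor query against it. The index name `docs`, the key prefix `doc:`, the field name `embedding`, and the helper function names are assumptions made for illustration and do not come from the original post. The code only builds the argument lists, so it runs without a Redis server.

```python
import struct

def hnsw_index_args(index="docs", prefix="doc:", field="embedding",
                    dim=1536, metric="COSINE"):
    """Build FT.CREATE arguments for an HNSW vector field over Redis hashes."""
    if metric not in ("L2", "IP", "COSINE"):  # Euclidean, inner product, cosine
        raise ValueError("metric must be L2, IP, or COSINE")
    vector_attrs = ["TYPE", "FLOAT32", "DIM", str(dim), "DISTANCE_METRIC", metric]
    return ["FT.CREATE", index, "ON", "HASH", "PREFIX", "1", prefix,
            "SCHEMA", field, "VECTOR", "HNSW", str(len(vector_attrs)), *vector_attrs]

def knn_query_args(query_vector, k=3, index="docs", field="embedding"):
    """Build FT.SEARCH arguments that return the k nearest stored vectors."""
    # Vectors are passed to Redis as raw little-endian float32 bytes.
    blob = struct.pack(f"<{len(query_vector)}f", *query_vector)
    return ["FT.SEARCH", index, f"*=>[KNN {k} @{field} $vec AS score]",
            "PARAMS", "2", "vec", blob, "SORTBY", "score", "DIALECT", "2"]

# Hypothetical 4-dimensional example; text-embedding-ada-002 vectors have 1536 dimensions.
create_args = hnsw_index_args(dim=4)
search_args = knn_query_args([0.1, 0.2, 0.3, 0.4])
print(" ".join(create_args))
```

With a client library such as redis-py, lists like these could be sent with `client.execute_command(*create_args)`. In practice, higher-level helpers usually generate these commands for you.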

Vector Databases and Large Language Models

Generative models like ChatGPT have revolutionized product development by allowing interfaces to be simplified to a Google search bar-like input of natural language. However, fine-tuning these models can be expensive and slow, which makes fine-tuning ill-suited to problem domains with constantly changing information.

Vector databases such as Azure Cache for Redis Enterprise can act as an external knowledge base for generative models and can be updated in real time. Compared to fine-tuning, this approach is much faster and cheaper, and allows for a continually up-to-date external body of knowledge for the model to draw upon.

One way this is used is to create a question-and-answer system, like this example, that can provide continually updated information without the need for re-training or fine-tuning. For example, a product that answers questions about internal company documentation for new employees can use a vector database to retrieve context for prompts, allowing for point-in-time correctness of generated output. Instructing the model to provide no answer when the vector search returns only results below a certain confidence threshold can help prevent model "hallucinations."
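
The thresholding idea can be sketched as a small prompt-building step that sits between the vector search and the language model. The function name, the `(text, score)` shape of the search hits, the prompt wording, and the 0.8 cutoff are all assumptions for illustration. A suitable threshold depends on the embedding model and distance metric in use.

```python
def build_prompt(question, hits, min_score=0.8):
    """Return a grounded prompt, or None when no retrieved chunk is similar enough.

    `hits` is a list of (text, similarity_score) pairs from the vector search,
    where a higher score means a closer match (an assumption of this sketch).
    """
    relevant = [text for text, score in hits if score >= min_score]
    if not relevant:
        # Nothing trustworthy was retrieved: the caller should answer
        # "I don't know" instead of sending a prompt to the model.
        return None
    context = "\n---\n".join(relevant)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

# Hypothetical search results for illustration.
hits = [
    ("New employees enroll in health insurance within 30 days.", 0.91),
    ("The cafeteria opens at 8 am.", 0.42),
]
prompt = build_prompt("When do I enroll in health insurance?", hits)
no_prompt = build_prompt("What is the dress code?", [("The cafeteria opens at 8 am.", 0.35)])
```

Only the first hit clears the threshold in the first call, so the cafeteria text never reaches the model. The second call returns `None`, which signals that the application should decline to answer.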

Build your own AI-powered app on Azure

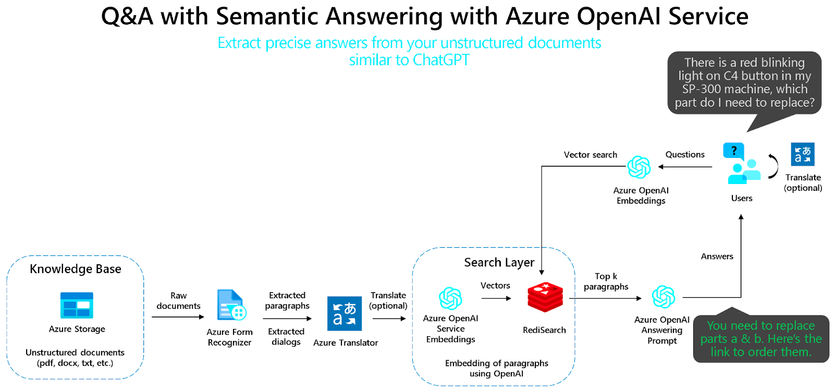

With all the hype about large language models, it’s important to actually build out a real working example. Fortunately, Azure offers all the components needed to develop an AI-powered application, from proof-of-concept to production. In this example, we will build a semantic answer engine where you can upload documents and then query them using natural language. The core of our application will be focused on two key elements:

- Azure OpenAI Service to generate embeddings, process text queries, and provide natural language responses.

- Azure Cache for Redis Enterprise to store the vector embeddings and compute vector similarity with high performance and low latency.

Deploying a model: Azure OpenAI Service

Azure OpenAI Service provides access to OpenAI’s powerful language models. A full list of supported models is available, but we’ll be focused mainly on the embeddings models. Specifically, we’ll be using text-embedding-ada-002 (Version 2), which is the latest embedding model at the time of writing. We’ll also use the text-davinci-003 model for providing natural language responses. Deploying these two models is straightforward:

- If you haven’t already, apply to use Azure OpenAI Service. This is required to gain access to the service due to high demand.

- Provision a new Azure OpenAI Service instance in Azure. Instructions are here, with guidance for using either the Azure portal or CLI. Note that some models are only available in certain regions. Consult this table for guidance on regional availability.

- Navigate to the Model deployments blade and select the Create button to bring up the Create model deployment window. Use the drop-down menus to select the model and enter a model deployment name. We need to create two deployments: one using text-embedding-ada-002 (Version 2) and one using text-davinci-003.
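
Once the embedding deployment exists, it can be called over REST. The sketch below builds such a request using only the Python standard library. The environment variable names, the deployment name `my-embedding-deployment`, the placeholder endpoint, and the `2023-05-15` API version are assumptions for illustration, so substitute the values from your own Azure OpenAI Service instance.

```python
import json
import os
import urllib.request

def build_embedding_request(text, endpoint, deployment, api_key,
                            api_version="2023-05-15"):
    """Build (but do not send) a POST request to an embeddings deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/embeddings?api-version={api_version}")
    body = json.dumps({"input": text}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")

def embed(text, **settings):
    """Send the request and return the embedding as a list of floats."""
    with urllib.request.urlopen(build_embedding_request(text, **settings)) as response:
        return json.load(response)["data"][0]["embedding"]

# Placeholder values; the variable names below are hypothetical.
request = build_embedding_request(
    "What is the torque specification?",
    endpoint=os.environ.get("AZURE_OPENAI_ENDPOINT",
                            "https://example-resource.openai.azure.com"),
    deployment="my-embedding-deployment",
    api_key=os.environ.get("AZURE_OPENAI_API_KEY", "placeholder-key"),
)
print(request.full_url)
```

Calling `embed(...)` with real settings performs the network call. It is left out of the example run so that the sketch works without credentials.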

Deploying a Vector Database: Azure Cache for Redis Enterprise

Azure Cache for Redis is a fully managed Redis offering on Azure. In addition to supporting the highly popular core Redis functionality, Azure Cache for Redis takes care of provisioning, hardware management, scaling, patching, monitoring, automatic failover, and many other functions to make development easier. Azure Cache for Redis offers native support for both open-source Redis and Redis Enterprise deployment models. In this example, we’ll be using the Enterprise tier of Azure Cache for Redis, which is built on Redis Enterprise. This allows us to take advantage of RediSearch (including vector similarity search features), plus the performance and reliability benefits from using Enterprise Redis.

Instructions on how to create an Azure Cache for Redis Enterprise instance are available in the Azure documentation. For this example, we will change the default settings to set up the cache for use as a vector database:

- Follow the instructions to start creating an Enterprise tier cache in the same region as your Azure OpenAI Service instance.

- On the Advanced page, select the Modules drop-down and select RediSearch. This will create your cache with the RediSearch module installed. You can also install the other modules if you’d like.

- In the Zone redundancy section, select Zone redundant (recommended). This will give your cache even greater availability.

- In the Non-TLS access only section select Enable.

- For Clustering Policy, select Enterprise. RediSearch is only supported using the Enterprise cluster policy.

- Select Review + create and finish creating your cache instance.

Combining Azure OpenAI and Redis With LlamaIndex

We will explore a Jupyter Notebook that combines the power of Azure OpenAI endpoints and LlamaIndex to enable chat functionality with PDF documents stored as vectors inside an Azure Cache for Redis instance. LlamaIndex is a framework that makes it easy to develop applications that interact with a language model and external sources of data or computation. It provides clear and modular abstractions for the building blocks needed to build LLM applications. Specifically, we will leverage the LlamaIndex library to chunk, vectorize, and store a PDF document in Redis as vectors, along with associated text.

To read all of the code for this blog, visit: https://github.com/RedisVentures/LLM-Document-Chat

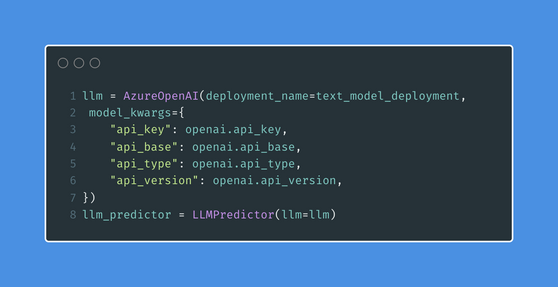

Step 1: The first step is to use the Azure endpoints for the OpenAI models. These values can be set as environment variables in the ``.env`` file. They can then be read into the AzureOpenAI abstraction provided by LlamaIndex; the full code is in the repository linked above.

Step 2: To chunk up our PDF, create vectors and store the results in Redis, we use the ``RedisVectorStore`` abstraction. The address values for Redis can also be set in the ``.env`` file.
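
To give a feel for what "chunking" means here, the following sketch splits text into overlapping word-based chunks and pairs each chunk with a Redis key. It is an illustration of the general technique only and does not reproduce LlamaIndex's own chunking logic. The function names, chunk sizes, and the `doc:` key format are assumptions.

```python
def chunk_text(text, chunk_size=200, overlap=40):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def to_records(chunks, doc_id="manual"):
    """Pair each chunk with the (hypothetical) Redis key it could be stored under."""
    return [{"key": f"doc:{doc_id}:{i}", "text": chunk}
            for i, chunk in enumerate(chunks)]

# Tiny example so the overlap is easy to see.
sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_text(sample, chunk_size=4, overlap=1)
records = to_records(chunks)
for record in records:
    print(record["key"], "->", record["text"])
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side. Each record would then be embedded and stored alongside its vector.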

To search for specific information within the document, we use a query engine interface from LlamaIndex. This engine acts as a tool that allows us to interact with the content stored in the Redis database. Its purpose is to find relevant information based on the queries we provide. Underlying this interface is a Redis client performing queries on the Redis index created to store the text and vectors for the PDF document.

Once the query engine is ready, we can start searching by calling the query_engine.query() method and providing the query we want to ask. This triggers a search process where LlamaIndex uses the stored vectors and the capabilities of Azure OpenAI's language models to locate the most relevant information within the document.

For example, let's say we want to know the towing capacity of the Chevy Colorado. We can pass that question to the query engine as a natural language query to find the answer.

You can read about examples of this on the Redis Blog, and find code examples on the RedisVentures GitHub.