This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Azure Stream Analytics announces a slew of enhancements at Build 2023: New competitive pricing model, Virtual Network integration, native support for Kafka source & destination, integration with Event Hub Schema Registry, GA announcements and more!

Today we are delighted to share a range of exciting updates that will greatly enhance the productivity, cost-effectiveness, and security of processing streaming data. We are introducing a new competitive pricing model that offers discounts to customers based on their increasing usage. Additionally, we are pleased to announce the integration of Virtual Network and native support for Kafka as a source and destination for Azure Stream Analytics jobs!

Below is the complete list of announcements for Build 2023. The product is continuously updated so you always get the latest version and can access all these new features automatically.

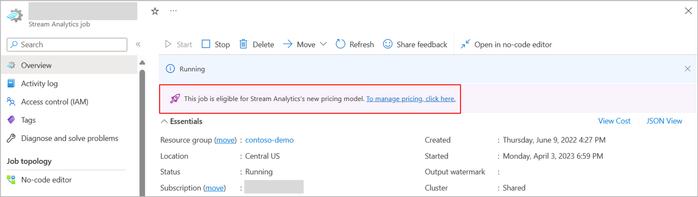

Up to 80% price reduction in Azure Stream Analytics

Customers are enjoying excellent performance, high availability, low-code/no-code experiences, and rich development tools. To make streaming analytics with ASA an even easier win for our customers, we are happy to announce a new competitive pricing model which offers discounts of up to 80%, with no changes to ASA’s full suite of capabilities. The new pricing model offers graduated pricing where eligible customers receive discounts as they increase their usage. ASA’s new transparent and competitive pricing model supports the team’s mission of democratizing stream processing for all. The new pricing model will take effect on July 1st, 2023.

Virtual Network Integration support

Stream Analytics jobs in the Standard (multi-tenant) environment now have support for Virtual Network Integration. With just a few clicks, you can secure access, take advantage of private endpoints and service endpoints, and enjoy complete network isolation by deploying dedicated instances of Azure Stream Analytics within a virtual network.

More details are available in this blog: https://aka.ms/VNETblog

Kafka input & output (Private Preview)

Customers can now directly ingest data from Kafka clusters and output to Kafka clusters in their Azure Stream Analytics (ASA) jobs. This, along with the Virtual Network integration available in the Standard (multi-tenant) environment, simplifies the process for customers to connect their Kafka clusters with their ASA jobs. The Kafka adapters are managed by Microsoft’s Azure Stream Analytics team, so customers can meet business compliance standards without managing extra infrastructure. The Kafka adapters are backward compatible and support Kafka versions starting from version 0.10 with the latest client release. If you are interested in the private preview, please fill in this form (https://forms.office.com/r/EFxzJDR5xJ).

More details are available in this blog: https://aka.ms/asakafka

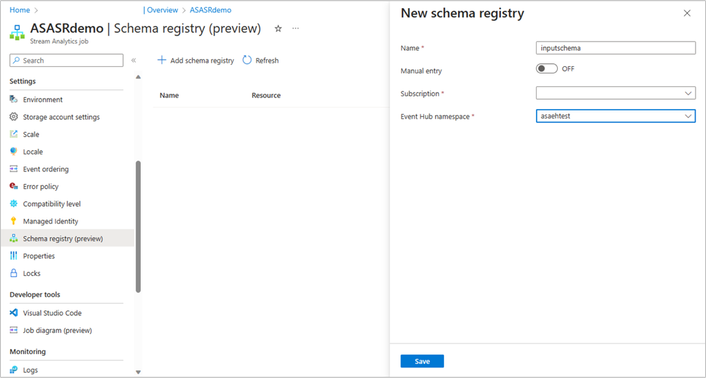

Event Hub Schema Registry integration (Public Preview)

Through the integration of Event Hub Schema Registry, Azure Stream Analytics can now fetch schema information from the Schema Registry and deserialize Avro-format data from Event Hub inputs. This integration allows customers to offload schema metadata to the Schema Registry, which reduces per-message overhead and enables efficient schema validation to ensure data integrity. You can try out this feature in all regions by May 27th.

More details are available in this doc: https://aka.ms/ASAschemaregistry
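
To make the per-message overhead point concrete, here is a minimal sketch comparing a message that carries its full schema with one that carries only a schema ID. It is an illustration under stated assumptions, not the Schema Registry API: the in-memory `SCHEMA_REGISTRY` dictionary, the `sensor-v1` ID, the envelope layout, and all function names are hypothetical, and JSON stands in for Avro so the example needs only the standard library.

```python
import json

# Hypothetical in-memory stand-in for a schema registry: schema ID -> schema.
SCHEMA_REGISTRY = {
    "sensor-v1": {
        "type": "record",
        "name": "SensorReading",
        "fields": [
            {"name": "deviceId", "type": "string"},
            {"name": "temperature", "type": "double"},
        ],
    }
}


def encode_with_embedded_schema(record, schema_id):
    """Ship the full schema inside every message."""
    envelope = {"schema": SCHEMA_REGISTRY[schema_id], "payload": record}
    return json.dumps(envelope).encode("utf-8")


def encode_with_schema_id(record, schema_id):
    """Ship only a short schema ID; the reader looks the schema up."""
    envelope = {"schemaId": schema_id, "payload": record}
    return json.dumps(envelope).encode("utf-8")


def decode_with_registry(message):
    """Fetch the schema by ID and check that the payload has exactly its fields."""
    envelope = json.loads(message.decode("utf-8"))
    schema = SCHEMA_REGISTRY[envelope["schemaId"]]
    expected = {field["name"] for field in schema["fields"]}
    payload = envelope["payload"]
    if set(payload) != expected:
        raise ValueError(f"payload fields {sorted(payload)} do not match schema")
    return payload


reading = {"deviceId": "dev-42", "temperature": 21.5}
embedded = encode_with_embedded_schema(reading, "sensor-v1")
by_id = encode_with_schema_id(reading, "sensor-v1")

# The ID-only message is smaller because the schema travels once, via the registry.
print(len(embedded), len(by_id))
print(decode_with_registry(by_id))
```

The field-name check is only a toy form of validation; a real Avro deserializer would also enforce types and encoding.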

No-code experience on Azure Stream Analytics portal (Public Preview)

The no-code editor offers a SaaS-based user experience that enables you to develop Stream Analytics jobs effortlessly, using drag-and-drop functionality, without having to write any code. With just a few clicks, you can create jobs for diverse scenarios in minutes. Get ready to experience it on the Azure Stream Analytics portal this June!

Custom container names for Blob storage output (Public Preview)

Dynamic blob containers offer customers the flexibility to customize container names in Blob storage, providing enhanced organization and alignment with various customer scenarios and preferences. This lets users partition their data into different blob containers based on data characteristics.
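
The idea of partitioning by data characteristics can be sketched in a few lines of Python. This is only an illustration of the routing concept, not how the service is configured: the `partition_by_container` helper, the `region` field, and the sample events are all hypothetical.

```python
from collections import defaultdict


def partition_by_container(events, field):
    """Group events by the value of `field`, used here as the container name."""
    containers = defaultdict(list)
    for event in events:
        # Blob container names must be lowercase, so normalize the value.
        container_name = str(event[field]).lower()
        containers[container_name].append(event)
    return dict(containers)


events = [
    {"region": "EastUS", "deviceId": "a1", "temperature": 20.1},
    {"region": "WestEU", "deviceId": "b7", "temperature": 18.4},
    {"region": "EastUS", "deviceId": "a2", "temperature": 22.9},
]

for name, batch in partition_by_container(events, "region").items():
    print(name, len(batch))
```

Each distinct value of the chosen field ends up in its own group, which mirrors one container per data characteristic.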

More details are available in this doc: Blob storage and Azure Data Lake Gen2 output from Azure Stream Analytics | Microsoft Learn

Custom Autoscale (General Availability)

Autoscale allows you to have the right number of resources running to handle the load on your job. It adds resources to handle increases in load and saves money by removing resources that are sitting idle. How Autoscale behaves is determined by the customer: you set the minimum and maximum, and configure the scaling behavior using custom rules. The custom Autoscale capability is highly customizable and enables you to set specific trigger rules based on what is most relevant to you.
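
As a rough mental model of how a minimum, a maximum, and custom trigger rules interact, consider the sketch below. It is a simplified simulation, not the Autoscale implementation: the `ScaleRule` class, the `next_capacity` function, the `su_utilization_pct` metric name, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    """Hypothetical trigger rule: change capacity when a metric crosses a threshold."""
    metric: str
    threshold: float
    direction: str  # "above" or "below"
    change: int     # units to add (positive) or remove (negative)


def next_capacity(current, metrics, rules, minimum, maximum):
    """Apply the first matching rule, then clamp to the [minimum, maximum] range."""
    target = current
    for rule in rules:
        value = metrics[rule.metric]
        if rule.direction == "above":
            crossed = value > rule.threshold
        else:
            crossed = value < rule.threshold
        if crossed:
            target = current + rule.change
            break
    return max(minimum, min(maximum, target))


rules = [
    ScaleRule(metric="su_utilization_pct", threshold=80, direction="above", change=3),
    ScaleRule(metric="su_utilization_pct", threshold=20, direction="below", change=-3),
]

# High load: scale out from 6 to 9 units.
print(next_capacity(6, {"su_utilization_pct": 92}, rules, minimum=3, maximum=12))
# Idle: scale in from 6 to 3 units.
print(next_capacity(6, {"su_utilization_pct": 10}, rules, minimum=3, maximum=12))
# Already at the maximum: the rule fires but the result is clamped to 12.
print(next_capacity(12, {"su_utilization_pct": 95}, rules, minimum=3, maximum=12))
```

The clamp at the end is what the minimum and maximum settings contribute: rules decide the direction of change, while the bounds cap how far it can go.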

More details are available in this doc: https://learn.microsoft.com/en-us/azure/stream-analytics/stream-analytics-autoscale

End-to-end exactly once processing with Event Hubs and ADLS Gen2 output (General Availability)

Stream Analytics supports end-to-end exactly once semantics when reading any streaming input and writing to Azure Data Lake Storage Gen2 and Event Hubs. It guarantees no data loss and no duplicates in the output. This greatly simplifies your streaming pipeline because you no longer have to implement, monitor, and troubleshoot deduplication logic.
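
For context, the following sketch shows the kind of deduplication step a downstream consumer typically has to maintain when a pipeline only guarantees at-least-once delivery. It is a generic illustration, not part of Stream Analytics: the `deduplicate` function and the `eventId` field are hypothetical.

```python
def deduplicate(events, key="eventId"):
    """Keep the first occurrence of each event, identified by `key`."""
    seen = set()
    unique = []
    for event in events:
        if event[key] in seen:
            continue  # a retry delivered this event again; drop it
        seen.add(event[key])
        unique.append(event)
    return unique


delivered = [
    {"eventId": 1, "value": "a"},
    {"eventId": 2, "value": "b"},
    {"eventId": 2, "value": "b"},  # duplicate caused by a retry
    {"eventId": 3, "value": "c"},
]

print([event["eventId"] for event in deduplicate(delivered)])  # [1, 2, 3]
```

Even this small version needs a unique key on every event and unbounded memory for the `seen` set, which hints at why removing the need for it simplifies a pipeline.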

More details and limitations are available in these docs:

https://learn.microsoft.com/azure/stream-analytics/blob-storage-azure-data-lake-gen2-output

Event Hubs output from Azure Stream Analytics | Microsoft Learn

Enhanced troubleshooting, monitoring and diagnosis of issues

Job simulation on Azure Stream Analytics

To ensure your Stream Analytics job operates at peak performance, it's important to leverage parallelism in your query. The Job Simulation feature in the ASA portal and the VSCode extension lets you simulate the job running topology, which can be very useful for fine-tuning the number of streaming units. It also provides editing suggestions to enhance your query and unlock your job's full performance potential.

More details are available in this doc & blog: Optimize query’s performance using job diagram simulator in VS Code, https://aka.ms/blogjobsimulation

Job simulation on Azure Stream Analytics portal (Public Preview)

Job simulation on ASA VSCode extension (General Availability)

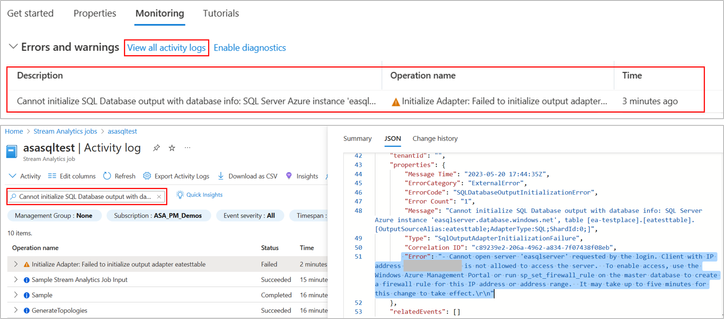

Top 5 errors on Azure Stream Analytics portal overview

Azure Stream Analytics has introduced a convenient way to monitor errors in your Stream Analytics jobs. In the Overview section of Azure Stream Analytics jobs, customers now have visibility into the top 5 recent errors occurring in their jobs. To explore more details about a specific error, customers can navigate to the Activity Logs and search for the corresponding error. Alternatively, customers can configure diagnostic logs to obtain comprehensive error information for further analysis and troubleshooting.

Stream Analytics offers two types of logs:

- Activity logs (always on), which give insights into operations performed on jobs.

- Resource logs (configurable in Diagnostic Settings), which provide richer insights into everything that happens with a job.

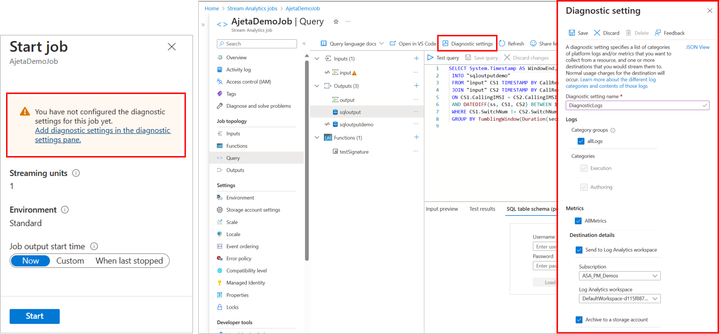

Multiple entry points for setting up diagnostic logs

Failures can be caused by an unexpected query result, by connectivity to devices, or by an unexpected service outage. The resource logs in Stream Analytics can help you identify the cause of issues when they occur and reduce recovery time. It is highly recommended to enable resource logs for all jobs, as this will greatly help with debugging and monitoring. We have therefore introduced multiple entry points for setting up logs, both while authoring a query and when starting a job.

More details about logs are available in this doc: Troubleshoot Azure Stream Analytics using resource logs | Microsoft Learn

Job Diagram Playback in Azure Stream Analytics Portal

Gain valuable insights into the performance of your Stream Analytics job by replaying the job diagram and observing how key metrics change over time. This feature allows you to identify bottlenecks and anomalies that may affect the overall job performance.

Query Validation with Specific Input Partitions

The Stream Analytics VSCode extension lets you validate the behavior of your Stream Analytics job against specific Event Hubs input partitions. By running your job locally and targeting these partitions, you can verify the accuracy of the results and fine-tune your query logic for optimal performance.

Seamless CI/CD with Bicep Templates in VSCode

By utilizing Bicep templates, you can simplify your CI/CD pipeline for Stream Analytics jobs. The ASA extension for VSCode allows you to generate Bicep templates with a single click. You can then edit the templates in the Bicep language to manage and deploy your Azure resources, making the deployment process more streamlined. Learn more about Bicep here: https://learn.microsoft.com/en-us/azure/azure-resource-manager/bicep/overview?tabs=bicep

The Azure Stream Analytics team is highly committed to listening to your feedback. We welcome you to join the conversation and make your voice heard by submitting ideas and feedback. If you have any questions or run into any issues accessing any of these new improvements, you can also reach out to us at askasa@microsoft.com.