This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Azure Machine Learning (Azure ML) is now a ready platform for users of Snowflake, the cloud-based data warehouse that is increasingly becoming the go-to choice for organizations to store their data. Data scientists at organizations that have adopted Snowflake as their data warehouse can explore Azure ML capabilities without relying on third-party libraries or engaging data engineering teams. With a native integration between Snowflake and Azure Machine Learning, they can import their data from Snowflake into Azure ML with a single command and kick-start their machine learning projects.

We are thrilled to announce the public preview of the Azure Machine Learning (Azure ML) data import CLI and SDK, which are designed to bring data from repositories outside the Azure platform into Azure ML for training. Supported sources include databases such as Snowflake and cloud storage services such as AWS S3.

This blog post outlines the advantages of the integration and the steps Snowflake users can follow to get started with Azure Machine Learning without any external dependencies.

Advantages of Snowflake and Azure Machine Learning Integration

- Enhanced Collaboration: This integration empowers data scientists to directly import data from Snowflake, eliminating the need for constant communication with data engineering teams.

- Time Efficiency: With no need for third-party libraries or additional data pipeline development, data scientists can save time and focus on developing their machine learning models.

- Simplified Workflow: Leveraging native connectivity between Snowflake and Azure Machine Learning results in a more streamlined and user-friendly workflow.

- Flexibility: Using schedules or on-demand imports, data scientists can decide when and what data is imported. With certain configurations, data expiration can also be managed, giving them full control over their datasets.

- Traceability: Each import, whether scheduled or not, creates a unique version of the dataset, which is in turn used in training jobs. This gives data scientists the traceability they need for retraining scenarios and model audits.

How to get started?

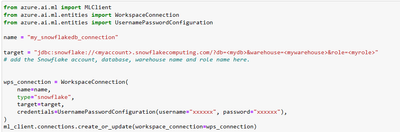

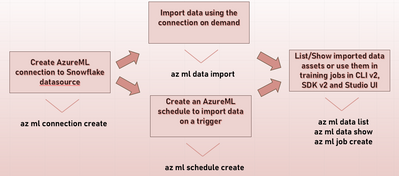

Everything begins with a connection. This is where the endpoint details of the Snowflake instance, including the server, database, warehouse, and role, are entered as the target, along with valid credentials to access the data. Typically, an admin creates the connection.
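As a rough illustration of what goes into a connection target, the sketch below assembles one string from the server (account), database, warehouse, and role. The JDBC-style format and the helper name `build_snowflake_target` are assumptions made for illustration and are not confirmed by this post; check the Azure ML documentation for the exact target format. Credentials are deliberately left out of the string, since they are supplied separately on the connection.

```python
from urllib.parse import urlencode


def build_snowflake_target(account: str, database: str, warehouse: str, role: str) -> str:
    """Assemble a JDBC-style Snowflake target string (format is an assumption)."""
    # urlencode keeps the insertion order of the dict and escapes unsafe characters.
    params = urlencode({"db": database, "warehouse": warehouse, "role": role})
    return f"jdbc:snowflake://{account}.snowflakecomputing.com/?{params}"


# Placeholder values; replace them with the details of your own Snowflake instance.
target = build_snowflake_target("myaccount", "mydb", "mywarehouse", "myrole")
print(target)
```

Keeping the target free of secrets means the same string can be shared with the admin who creates the connection.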

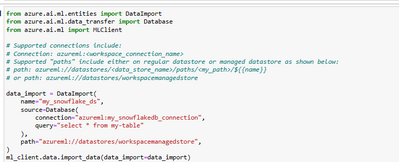

A data scientist who knows the query that pulls the required training data can easily use an existing connection. In a single step, they can import the data and register it as an Azure ML data asset that can be readily referenced in training jobs.
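To make the pieces of an import concrete, here is a minimal sketch that gathers an asset name, an existing connection, a query, and a destination path into one definition. The `SnowflakeImport` class is hypothetical, and the field names in `to_spec` (`type`, `source`, `connection`, and the `azureml:` prefix) are assumptions modeled on how Azure ML import definitions are commonly written; verify them against the official schema before use. Only the managed datastore path comes from this post.

```python
from dataclasses import dataclass

# Managed (HOBO) datastore path quoted in this post.
MANAGED_DATASTORE = "azureml://datastores/workspacemanagedstore"


@dataclass
class SnowflakeImport:
    """Hypothetical container for the pieces of one import definition."""
    name: str          # name of the data asset to register
    connection: str    # name of an existing workspace connection
    query: str         # query that pulls the training data
    path: str = MANAGED_DATASTORE

    def to_spec(self) -> dict:
        """Return a dict mirroring an import definition (field names assumed)."""
        if not self.query.strip():
            raise ValueError("an import needs a non-empty query")
        return {
            "type": "mltable",
            "name": self.name,
            "source": {
                "type": "database",
                "connection": f"azureml:{self.connection}",
                "query": self.query,
            },
            "path": self.path,
        }


# Placeholder names for illustration only.
spec = SnowflakeImport(
    name="my_snowflake_asset",
    connection="my_snowflake_connection",
    query="select * from my_table",
).to_spec()
print(spec)
```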

If the scenario calls for importing data on a schedule, one can use the familiar cron or recurrence patterns to define the import frequency.
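The two scheduling styles can be sketched as small trigger definitions. The helper names `cron_trigger` and `recurrence_trigger`, the dict keys, and the list of accepted frequencies are assumptions for illustration, not the confirmed Azure ML schedule schema; the checks only catch obviously malformed input.

```python
# Frequencies accepted by this sketch (an assumed set, not an official list).
VALID_FREQUENCIES = {"minute", "hour", "day", "week", "month"}


def cron_trigger(expression: str) -> dict:
    """Describe a cron-based trigger; expects the standard five cron fields."""
    if len(expression.split()) != 5:
        raise ValueError("a cron expression needs five space-separated fields")
    return {"type": "cron", "expression": expression}


def recurrence_trigger(frequency: str, interval: int) -> dict:
    """Describe a recurrence-based trigger, e.g. every 2 days."""
    if frequency not in VALID_FREQUENCIES:
        raise ValueError(f"unsupported frequency: {frequency}")
    if interval < 1:
        raise ValueError("interval must be at least 1")
    return {"type": "recurrence", "frequency": frequency, "interval": interval}


nightly = cron_trigger("0 2 * * *")             # every day at 02:00
every_other_day = recurrence_trigger("day", 2)  # once every two days
print(nightly, every_other_day)
```

Cron suits imports pinned to a clock time, while a recurrence pattern is easier to read when all that matters is the interval between imports.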

We are also excited to introduce the public preview of lifecycle management on the Azure ML managed datastore (a “Hosted On Behalf Of”, or HOBO, datastore). This offering from Azure ML is exclusively for data import scenarios and is available through the CLI and SDK. Choosing the HOBO datastore as the destination for imported data enables lifecycle management, or as we call it, “auto delete settings”, on the imported data assets. Every data asset imported into the Azure ML managed datastore gets a policy that automatically deletes it if no job has used it for 30 days. All one has to do is set “azureml://datastores/workspacemanagedstore” as the path when defining the import, and Azure ML handles the rest.

Once the data is imported, one can use CLI or SDK commands to update the auto delete settings: lengthen or shorten the duration, or change the condition to be based on created time instead of last used time.
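The difference between the two conditions is easiest to see with dates. The sketch below models a policy that counts days either from the last use or from creation and checks whether an asset is due for deletion. The class and function names are hypothetical and this is not how the service implements the policy; falling back to the creation time for a never-used asset is also an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AutoDeletePolicy:
    """Hypothetical model of an auto delete setting."""
    basis: str = "last_used"  # "last_used" or "created"
    days: int = 30            # default mirrors the 30-day policy described above


@dataclass
class ImportedAsset:
    name: str
    created: datetime
    last_used: Optional[datetime] = None  # None if no job has used the asset yet


def is_due_for_deletion(asset: ImportedAsset, policy: AutoDeletePolicy, now: datetime) -> bool:
    """Return True once the policy window has elapsed since the reference time."""
    if policy.basis == "created":
        reference = asset.created
    elif policy.basis == "last_used":
        # Assumption: an asset that was never used is measured from its creation.
        reference = asset.last_used or asset.created
    else:
        raise ValueError(f"unknown basis: {policy.basis}")
    return now - reference > timedelta(days=policy.days)


now = datetime(2023, 6, 1)
asset = ImportedAsset("sales", created=datetime(2023, 4, 1), last_used=datetime(2023, 5, 20))

print(is_due_for_deletion(asset, AutoDeletePolicy("last_used", 30), now))  # used 12 days ago
print(is_due_for_deletion(asset, AutoDeletePolicy("created", 30), now))    # created 61 days ago
```

In this example the same asset is kept under the last-used condition and removed under the created-time condition, which is why switching the condition matters for cost and compliance.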

Quick recap –

Customers with data in Snowflake can now use the power of Azure ML for training directly from our platform. They can import data on demand or on a schedule, and can set “auto-delete” policies to manage their imported data in the Azure ML managed datastore for cost and compliance purposes.

Try it for yourself –

To get started with Azure Machine Learning data import, please visit the Azure ML documentation and GitHub repo, where you can find detailed instructions to set up connections for external sources in an Azure ML workspace, and to train or deploy models with a variety of Azure ML examples.

Learn More –

To stay updated on Azure Machine Learning announcements, watch our breakout sessions from Microsoft Build.

- Build and maintain your company Copilot with Azure Machine Learning and GPT-4

- Practical deep-dive into machine learning techniques and MLOps

- Building and using AI models responsibly