This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Microsoft’s virtualization stack, which powers the Microsoft Azure Cloud, is made up of the Microsoft hypervisor and the Azure Host OS. Security is foundational for the Azure Cloud, and Microsoft is committed to the highest levels of trust, transparency, and regulatory compliance (see Azure Security and Hypervisor Security to learn more). Confidential VMs are a new security offering that allows customers to protect their most sensitive data in use and during computation in the Azure Cloud.

In this blog we’ll describe the Confidential VM model and share how Microsoft built the Confidential VM capabilities by leveraging confidential hardware platforms (we refer to the hardware platform as the combination of the hardware and the architecture-specific firmware/software supplied by the hardware vendor). We will give an overview of our goals and design approach, then explain how we enabled Confidential VMs to protect their memory, to use secure emulated devices such as a TPM, to protect their execution state and firmware, and to verify their environment through remote attestation.

What is a Confidential VM?

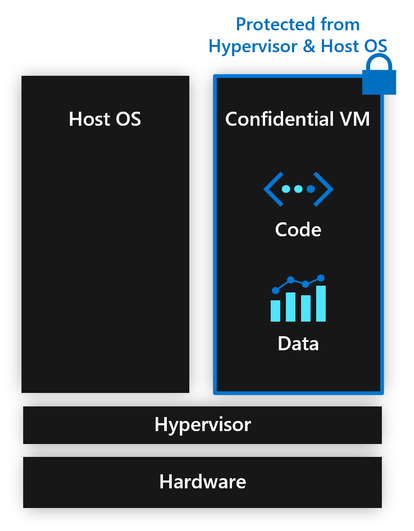

The Confidential Computing Consortium defines confidential computing as “the protection of data in use by performing computation in a hardware-based, attested Trusted Execution Environment (TEE)”, with three primary attributes for what constitutes a TEE: data integrity, data confidentiality, and code integrity 1. A Confidential VM is a VM executed inside a TEE, “whereby code and data within the entire VM image is protected from the hypervisor and the host OS” 1. As crazy as this sounds (a VM that runs protected from the underlying software that makes its very existence possible), a growing community is coming together to build the technologies that make it possible.

For confidentiality, a Confidential VM requires CPU state protection and private memory to hold contents that cannot be seen in clear text by the virtualization stack. To achieve this, the hardware platform protects the VM’s CPU state and encrypts its private memory with a key unique to that VM. The platform further ensures that the VM’s encryption key remains a secret by storing it in a special register which is inaccessible to the virtualization stack. Finally, the platform ensures that VM private memory is never in clear text outside of the CPU complex, preventing certain physical attacks such as memory bus snooping and cold-boot attacks.

For integrity, a Confidential VM requires integrity protection to ensure its memory can only be modified by that VM. To achieve this, the hardware platform both protects the contents of the VM’s memory against software-based integrity attacks and verifies address translation. The latter serves to ensure that the address space layout (memory view) of the VM can only be changed with the cooperation and agreement of the VM.

An overview of our approach to Confidential VMs

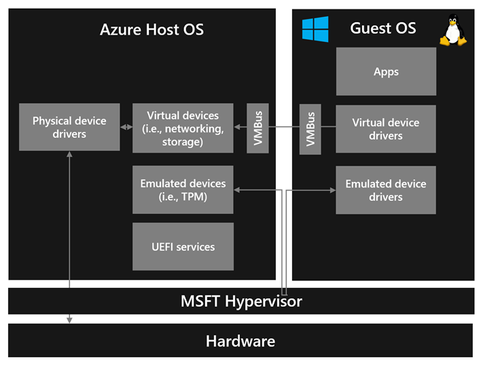

As a type 1 hypervisor, Microsoft’s hypervisor runs directly on the hardware and all operating systems, including the host OS, run on top of it. The hypervisor virtualizes resources for guests and controls capabilities that manage memory translations. The host OS provides functionality for VM virtualization including memory management (i.e., providing guest VMs interfaces for accessing memory), and device virtualization (i.e., providing virtual devices to guest VMs) to run and manage guest VMs.

Because they take care of virtualizing and assigning resources to guest VMs, virtualization stacks have until recently assumed full access to guest VM state. At Microsoft, we evolved our virtualization stack to break those assumptions so that it can support running Confidential VMs. We set a boundary to protect the guest VM from our virtualization stack, and we leverage different hardware platforms to enforce this boundary and help guarantee the Confidential VM’s attributes. Azure Confidential VMs today leverage the following hardware architectures: AMD SEV-SNP (generally available) and Intel TDX (in preview).

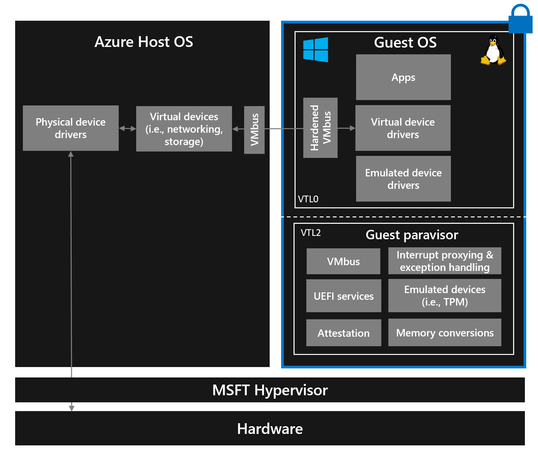

We wanted to enable customers to lift and shift workloads with little or no effort, so one of our design goals was to support running mostly unmodified guest operating systems inside Confidential VMs. Operating systems were not designed with confidentiality in mind, so we created the guest paravisor to bridge between the classic OS architecture and the need for confidential computing. The guest paravisor implements the TEE enlightenments on behalf of the guest OS so the guest OS can run mostly unmodified, even across hardware architectures. This can be viewed as the “TEE Shim” 1. You can think of the guest paravisor as a firmware layer that acts like a nested hypervisor. A guest OS that is fully enlightened (modified) to run as a Confidential guest can run without a guest paravisor (this is also supported in Azure today but out of scope for this blog).

Virtualization-based Security (VBS) lets a VM have multiple layers of software at different privilege levels. We decided to extend VBS to allow us to run the guest paravisor in a more privileged mode than the guest OS, in a hardware platform-agnostic manner, using the multiple confidential guest privilege levels offered by the hardware platform. Since the guest paravisor and guest OS run at different privilege levels, the guest OS and UEFI don’t have access to secrets held in guest paravisor memory. This allows the paravisor to provide a Confidential VM with privilege-separated virtual hardware features, including Secure Boot and its own dedicated TPM 2.0. We’ll cover the benefits of these features in the attestation section.

On the guest OS side, we evolved the device drivers (and other components) to enable both Windows and Linux guest operating systems to run inside Confidential VMs on Azure. For the Linux guest OS, we collaborated with the Linux kernel community and with Linux distros such as Ubuntu and SUSE. A Confidential VM turns the threat model for a VM upside-down: a core pillar of any virtualization stack is protecting the host from the guest, but with Confidential VMs there is a focus on protecting the guest from the host as well. This means that all guest-host interfaces must be hardened. This was one of the design principles for the paravisor, as it allowed us to move logic from the host into the guest, simplifying the guest-host interfaces so that we could better harden them. We therefore analyzed and hardened these interfaces, including the guest device drivers themselves, to enforce a defensive model that examines and validates parameters in the messages that come from the host (e.g., ring buffer messages).
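The defensive model described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the header layout, the `KNOWN_TYPES` set, the `MAX_PAYLOAD` limit, and the `parse_host_message` helper are hypothetical and are not the actual VMbus message format or driver code. The idea shown is simply that the guest snapshots the host-written bytes into private memory first, then validates every field before using it.

```python
import struct

# Hypothetical message header: type (u16), flags (u16), payload length (u32).
HEADER = struct.Struct("<HHI")
KNOWN_TYPES = {1, 2, 3}   # assumed set of message types the driver understands
MAX_PAYLOAD = 4096        # assumed upper bound on a single payload


class HostMessageError(ValueError):
    """Raised when a message written by the host fails validation."""


def parse_host_message(shared_bytes):
    # Copy out of shared memory first, so the host cannot change the
    # message between validation and use.
    snapshot = bytes(shared_bytes)
    if len(snapshot) < HEADER.size:
        raise HostMessageError("truncated header")
    msg_type, flags, length = HEADER.unpack_from(snapshot, 0)
    if msg_type not in KNOWN_TYPES:
        raise HostMessageError("unknown message type")
    if length > MAX_PAYLOAD or HEADER.size + length > len(snapshot):
        raise HostMessageError("payload length out of bounds")
    payload = snapshot[HEADER.size:HEADER.size + length]
    return msg_type, flags, payload
```

A caller would treat `HostMessageError` as a signal to drop the message rather than trying to recover partial data from it.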

Memory Protections

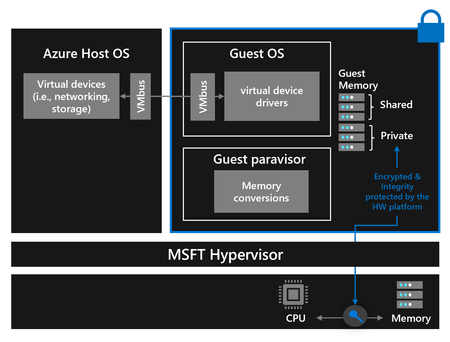

Two types of memory exist for Confidential VMs: private memory, where computation is done by default, and shared memory, which is used to communicate with the virtualization stack for any purpose (e.g., device I/O). Any page of memory can be either private or shared, and we call this its page visibility. A Confidential VM should be configured appropriately to make sure that data that will go into shared memory is protected (via TLS, BitLocker, dm-crypt, etc.).

All accesses use private pages by default, so when a guest wants to use a shared page, it must explicitly do this by managing page visibility. We evolved all the necessary components (including the virtual device drivers in the guest that communicate with the virtual devices on the host via VMbus channels) to enable them to use our mechanism to manage page visibility. This mechanism essentially has the guest maintain a pool of shared memory. When a guest wants to send data to the host, for example to send a packet via networking, it allocates a bounce buffer from that pool and then copies that data from its private memory into the bounce buffer. The I/O operation is initiated against the bounce buffer, and the virtual device on the host can read the data.
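As a rough illustration of the bounce-buffer flow just described, here is a minimal Python sketch. The `SharedPool` class, the `send_via_bounce_buffer` helper, and the `submit_io` callback are hypothetical names invented for this example, and a `bytearray` stands in for pages that are visible to the host; the real mechanism lives in the guest kernel and drivers.

```python
PAGE_SIZE = 4096


class SharedPool:
    """Hypothetical pool of guest pages that are already host-visible."""

    def __init__(self, num_pages):
        self._memory = bytearray(num_pages * PAGE_SIZE)
        self._free = list(range(num_pages))

    def alloc(self):
        if not self._free:
            raise MemoryError("shared pool exhausted")
        return self._free.pop()

    def write(self, page, data):
        start = page * PAGE_SIZE
        self._memory[start:start + len(data)] = data

    def read(self, page, length):
        start = page * PAGE_SIZE
        return bytes(self._memory[start:start + length])

    def free(self, page):
        # Scrub the page so stale data does not linger in shared memory.
        self.write(page, bytes(PAGE_SIZE))
        self._free.append(page)


def send_via_bounce_buffer(pool, private_data, submit_io):
    """Copy private data into a shared page, then start the I/O against it."""
    if len(private_data) > PAGE_SIZE:
        raise ValueError("data does not fit in one bounce buffer")
    page = pool.alloc()
    try:
        pool.write(page, private_data)            # private -> shared copy
        return submit_io(page, len(private_data))  # host reads the shared page
    finally:
        pool.free(page)
```

In this sketch only the copy placed in the bounce buffer is ever exposed to the host; the original buffer stays in private memory.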

The size of the pool of shared memory is not fixed; our memory conversion model is dynamic so that the guest can adapt to the needs of the workload. The memory used by the guest can be converted between private and shared as needed to support all the IO flows. Guest operating systems can choose to make use of our dynamic memory conversion model. Confidential computing hardware prevents the hypervisor or anything other than the code inside the CVM from making pages shared. Converting memory is therefore always initiated by the guest, and it kicks off both guest actions and host actions so that our virtualization stack can coordinate with hardware platforms to grant or deny the host access to the page.
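The guest-initiated conversion rule can be modeled with a small Python sketch. This is a toy model under stated assumptions: the `PageVisibilityTable` class and the `requester` string are hypothetical, and in a real Confidential VM the rule is enforced by the hardware platform rather than by a software check like this one.

```python
from enum import Enum


class Visibility(Enum):
    PRIVATE = "private"
    SHARED = "shared"


class PageVisibilityTable:
    """Toy model: every page starts private; only the guest may convert it."""

    def __init__(self, num_pages):
        self._visibility = [Visibility.PRIVATE] * num_pages

    def convert(self, page, target, requester):
        # Stand-in for the hardware rule: conversions come from the guest.
        if requester != "guest":
            raise PermissionError("only the guest may change page visibility")
        self._visibility[page] = target

    def host_can_access(self, page):
        return self._visibility[page] is Visibility.SHARED
```

For example, `table.convert(3, Visibility.SHARED, requester="guest")` grants the host access to page 3, while the same call with `requester="host"` raises `PermissionError` and leaves the page private.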

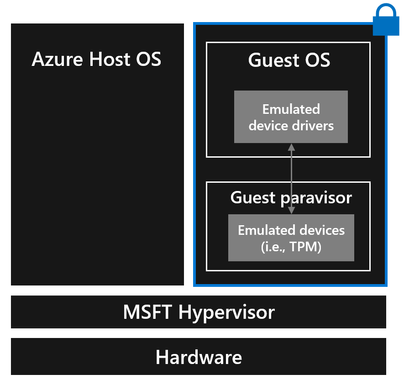

Protecting Emulated Devices

For compatibility, the Azure Host OS provides emulated devices to closely mirror hardware, and our hypervisor reads and modifies a VM’s CPU state to emulate the exact behavior of the device. This allows a guest to use an unmodified device driver to interact with that emulated device. Since a Confidential VM’s CPU state is protected from our virtualization stack, it cannot use the emulated devices on the host OS anymore.

As part of our goal to enable customers to run a mostly unmodified guest OS inside a Confidential VM, we didn’t want to eliminate emulated devices. Therefore, we decided to evolve our virtualization stack to support device emulation operating inside the guest instead of the host OS, so we moved emulated devices (TPM, RTC, serial, etc.) to the guest paravisor (running in the guest but isolated from the guest OS).

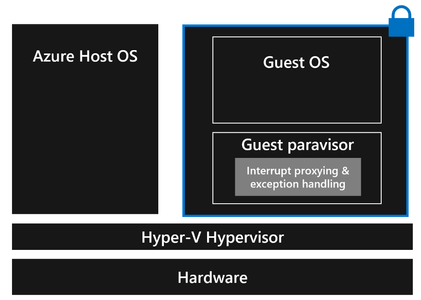

Protecting Runtime State

We believe a Confidential VM requires assurances about the integrity of its execution state, so we took steps to provide this protection to Confidential VM workloads. To support this, the hardware platform provides mechanisms to protect a Confidential VM from being vulnerable to unexpected interrupts or exceptions during its execution.

Normally, our hypervisor emulates the interrupt controller (APIC) to generate emulated interrupts for guests. To help a guest OS running inside a Confidential VM handle these interrupts defensively, the guest paravisor does interrupt proxying, validating interrupts coming from the hypervisor. This way, when the hypervisor injects an interrupt into the guest VM, the guest paravisor ensures that the interrupt is valid before re-injecting it into the guest OS. Additionally, with Confidential VMs there is a new exception type that needs to be handled by the guest VM instead of the virtualization stack. This exception is generated only by the hardware and is hardware platform specific. The paravisor can handle this exception on behalf of the guest OS.
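Interrupt proxying can be pictured with the following Python sketch. The validation policy shown is an assumption chosen for illustration (the blog does not spell out the paravisor's actual checks), and the `InterruptProxy` class and its method names are hypothetical. The one hard fact it relies on is that x86 reserves vectors 0 to 31 for processor exceptions.

```python
# x86 reserves vectors 0-31 for processor exceptions.
EXCEPTION_VECTORS = range(0, 32)


class InterruptProxy:
    """Toy paravisor-side filter for interrupts injected by the hypervisor."""

    def __init__(self, allowed_vectors):
        # Assumed policy: only vectors the guest OS has registered are valid.
        self.allowed = set(allowed_vectors)
        self.delivered = []

    def on_host_interrupt(self, vector):
        # Reject exception vectors and anything the guest does not expect.
        if vector in EXCEPTION_VECTORS or vector not in self.allowed:
            return False
        self.delivered.append(vector)  # stands in for re-injecting into the guest OS
        return True
```

With `InterruptProxy({48, 49})`, a host-injected vector 48 is passed on to the guest OS, while vector 14 (the page-fault exception) or an unregistered vector is dropped.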

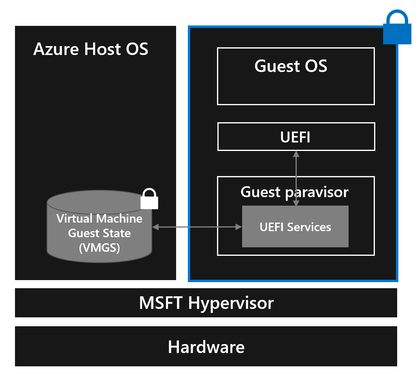

Protecting Firmware

Normally, a guest VM relies on the host OS to store and provide its firmware state and attributes (UEFI variable store) and to implement authenticated UEFI variables to ensure that secure UEFI variables are isolated from the guest OS and cannot be modified without detection. A Confidential VM requires a way to do all this without trusting the host.

We evolved guest UEFI firmware to get trusted UEFI attributes from a new Virtual Machine Guest State (VMGS) file packaged as a VHD instead of from the host. We encrypt this VMGS.VHD file to give a Confidential VM’s firmware access to persistent storage that is inaccessible to the host. The host only interacts with the encrypted VHD (the end-to-end process for its encryption is out of scope for this blog).

To provide a Confidential VM with authenticated UEFI variables that are secure from the host as well as the guest OS, authenticated UEFI variables are managed by the guest paravisor: when a Confidential VM uses UEFI runtime services to write a variable, the guest paravisor processes the authenticated variable writes and persists that data in the VMGS file. Our design allows a Confidential VM to use the VMGS file to persistently store and access VM guest state and guest secrets such as UEFI state and TPM state if desired by the customer. Lastly, we also hardened guest firmware to validate UEFI state.

Remote Attestation

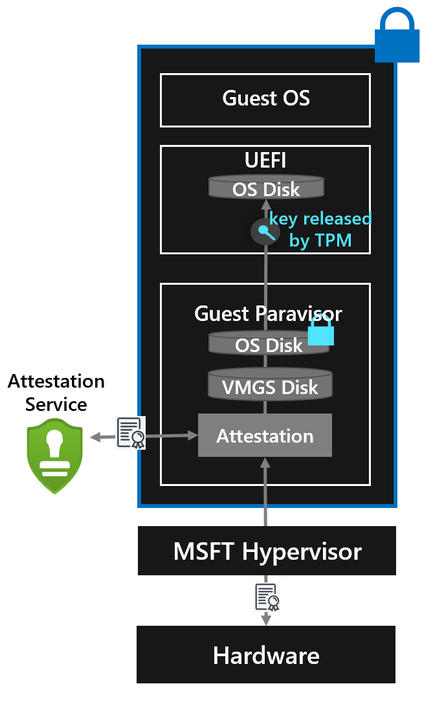

According to the industry definition of confidential computing, any TEE deployment “should provide a mechanism to allow validation of an assertion that it is running in a TEE instance” through “the validation of a hardware signed attestation report of the measurements of the TCB” 1. An attestation report is composed of hardware and software claims about a system, and these claims can be validated by any attestation service. Attestation for a Confidential VM “conceptually authenticates the VM and/or the virtual firmware used to launch the VM” 1.

A Confidential VM cannot solely rely on the host to guarantee that it was launched on a confidential computing capable platform and with the right virtual firmware, so a remote attestation service must be responsible for attesting to its launch. Therefore, a Confidential VM on Azure always validates that it was launched with secure, unmodified firmware and on a confidential computing platform via remote attestation with a hardware root of trust. In addition to this, it validates its guest boot configuration thanks to Secure Boot and TPM capabilities.

Once the partition for a Confidential VM on Azure is created and the VM is started, the hardware seals the partition from modification by the host, and a measurement about the launch context of the guest is provided by the hardware platform. The guest paravisor boots first and performs attestation on behalf of the guest OS, requesting a signed attestation report from the hardware platform and sending this report to an attestation verification service. Any failures in this attestation verification process will result in the VM not booting.
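The launch-time flow above can be sketched in a few lines of Python. This is a heavily simplified model under loud assumptions: real platforms sign reports with hardware-rooted asymmetric keys, whereas an HMAC with a shared `hw_key` stands in for that signature here, and `make_report`, `verify_report`, and `boot_confidential_vm` are hypothetical names, not a real attestation API.

```python
import hashlib
import hmac


def make_report(hw_key, launch_measurement):
    """Stand-in for the hardware platform producing a signed report."""
    signature = hmac.new(hw_key, launch_measurement, hashlib.sha256).digest()
    return {"measurement": launch_measurement, "signature": signature}


def verify_report(report, hw_key, expected_measurement):
    """Stand-in for the attestation verification service."""
    expected_sig = hmac.new(hw_key, report["measurement"], hashlib.sha256).digest()
    signature_ok = hmac.compare_digest(expected_sig, report["signature"])
    measurement_ok = hmac.compare_digest(report["measurement"], expected_measurement)
    return signature_ok and measurement_ok


def boot_confidential_vm(report, hw_key, expected_measurement):
    # Any verification failure stops the boot, as described above.
    if not verify_report(report, hw_key, expected_measurement):
        raise RuntimeError("attestation failed; VM will not boot")
    return "transfer control to UEFI"
```

The sketch checks two things: that the report really came from the platform (signature), and that the launch measurement matches what the verifier expects (known-good firmware).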

The guest paravisor then transfers control to UEFI. During this phase, Secure Boot verifies the startup components, checking their signatures before they are loaded. In addition, thanks to TPM measured boot, UEFI accumulates the measurements of these startup components into the PCRs of the TPM as they are loaded. If the OS disk is encrypted, the TPM will only release the key to decrypt it if the VM’s firmware code and configuration, original boot sequence, and boot components are unaltered.
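To make the measured-boot step concrete, here is a minimal Python sketch of how a TPM PCR accumulates measurements: each extend replaces the PCR with the hash of the old PCR value concatenated with the new measurement. The `measure_boot` helper and the component byte strings are illustrative assumptions; a real TPM performs the extend internally and records more context in an event log.

```python
import hashlib


def extend(pcr, measurement):
    """TPM-style extend: new PCR = SHA-256(old PCR || measurement)."""
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot(components):
    """Fold the digests of the boot components, in load order, into one PCR."""
    pcr = bytes(32)  # a SHA-256 PCR starts out as 32 zero bytes
    for blob in components:
        pcr = extend(pcr, hashlib.sha256(blob).digest())
    return pcr
```

Because each value depends on everything extended before it, changing any component or the order in which components load yields a different final PCR value, which is why a key sealed to the expected value is not released when the boot chain has been altered.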

Call to Action

In this blog we described how in collaboration with our industry partners, we evolved our entire virtualization stack to empower customers to lift and shift their most sensitive Windows and Linux workloads into Confidential VMs. We also gave a deep technical overview of how we protect our customers’ workloads in these Confidential VMs. This is an innovative new area, and we want to share that journey with our customers who also want to move into this new Confidential Computing world. As you use Confidential VMs on Azure, we would love to hear about your usage experiences or any other feedback, especially as you think of other scenarios (in the enterprise or cloud).

This blog was written in collaboration with the OS Platform team.

References:

1 Common Terminology for Confidential Computing, December 2022, Confidential Computing Consortium.