This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Introduction

In this blog, you will learn how to leverage different Large Language Models available on Azure Machine Learning and provided by Hugging Face, Azure ML, OpenAI, and Meta. Also, you will learn how to integrate them into your Web Application or Power App.

For the sake of simplicity, we won't go through the detailed steps, as they are already covered in the blog Deploying a Large Language Model (GPT-2) on Azure Using Power Automate: Step-by-Step Guide (microsoft.com). Instead, we will give an overview of what needs to be done to use other state-of-the-art Large Language Models available on Azure.

Summary of the learning milestones:

Milestone 1: Understanding Machine Learning Models

Milestone 2: Understanding the Model Deployment

Milestone 3: Understanding the Integration with Web Applications

Milestone 4: Understanding the Integration with Power Platform

Milestone 1: Understanding Machine Learning Models

Machine Learning Models are like a black box that takes in data as input, performs its magic, and gives us back the answer we are looking for. But does it take in any kind of data? The short answer is no. The long answer is that each machine learning model expects a specific input shape, and the data we send has to be formatted to match it.

So, we need to prepare the data before sending it to any machine learning model, and that applies to large language models too.

As for the output (the answer we are looking for), it also comes back in a specific format, which we have to render (reshape) to display it in a readable way.

Now, the question is: how do I know the accepted format for the inputs and outputs of my model?

Fortunately, Azure Machine Learning provides us with that information along with each model's specifications.

Let's take three models as examples: GPT-2, provided by OpenAI; Llama 2, provided by Meta; and Dolly v1, a popular open-source LLM provided by Databricks.

If you open any of the three models' pages and scroll down a little, you will find the information you need. (Learn how to create an Azure Machine Learning Workspace in detailed steps from here)

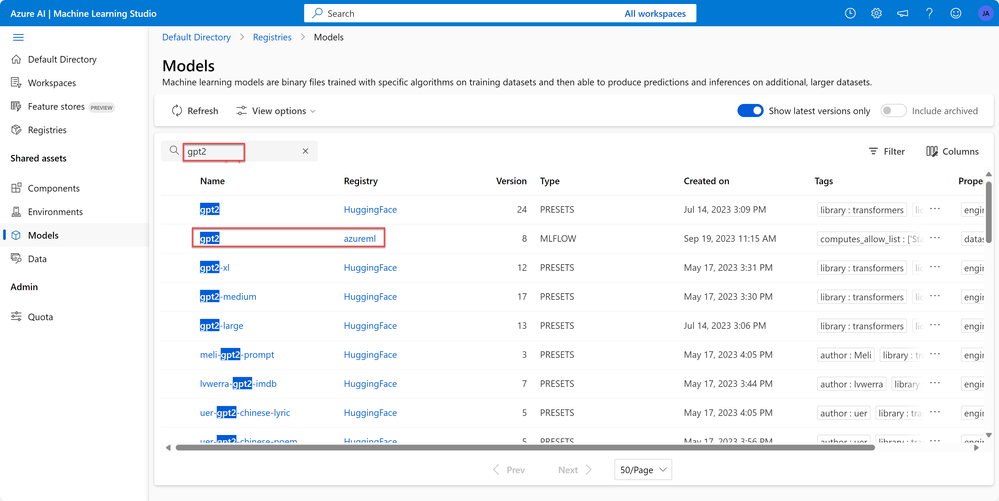

For GPT-2:

Type gpt2 in the search bar at the top of the models page, then select the gpt2 model provided by the azureml registry from the options that appear.

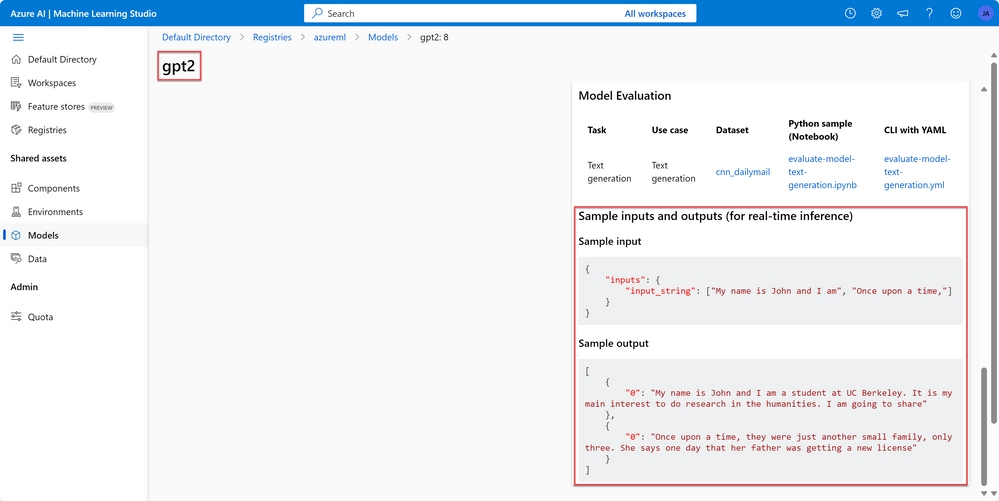

Here you can find the input shape and output shape from the given sample. (Direct link: here)

For Llama 2:

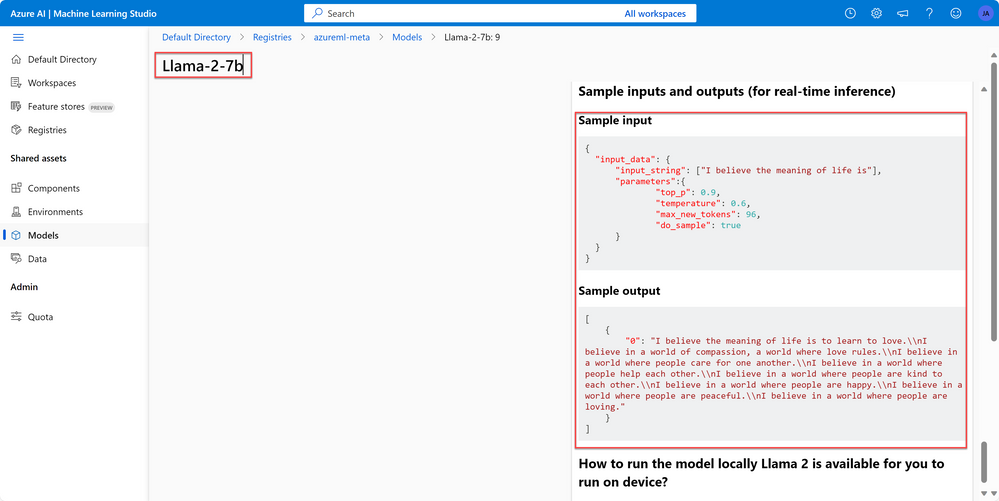

Type llama-2 in the search bar at the top of the models page, then select the Llama-2-7b model provided by the azureml-meta registry from the options that appear.

Here you can find the input shape and output shape from the given sample. (Direct link: here)

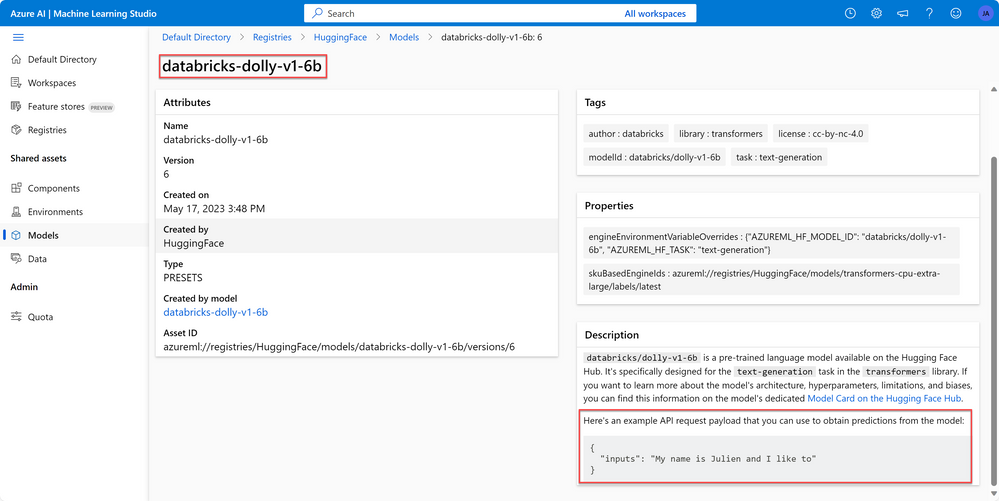

For Dolly v1:

Type dolly-v1 in the search bar at the top of the models page, then select the databricks-dolly-v1-6b model provided by the HuggingFace registry from the options that appear.

Here you can find only the input shape, but figuring out the output shape is an easy task. (Direct link: here)

You may ask, is that all? Not quite. You still need to understand machine learning model deployment and how you can use it.
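To make the idea of an input shape concrete, here is a minimal sketch that wraps the same raw text in a different request body for each of the three models. The key names used here (inputs, input_data, input_string) are assumptions based on the samples referenced in this post, and the helper build_payload and the model labels are hypothetical names. Always confirm the exact shape on the model's own page before relying on it.

```python
import json


def build_payload(model: str, text: str) -> dict:
    """Wrap raw user text in the request body shape assumed for each model.

    The shapes below are assumptions; check the sample on each model's page.
    """
    if model == "gpt2":
        return {"inputs": {"input_string": [text]}}
    if model == "llama-2-7b":
        return {"input_data": {"input_string": [text]}}
    if model == "dolly-v1":
        return {"inputs": text}
    raise ValueError(f"unknown model: {model}")


# Same text, three different shapes.
for name in ("gpt2", "llama-2-7b", "dolly-v1"):
    print(name, json.dumps(build_payload(name, "Once upon a time")))
```

The point is that the text itself never changes, only the JSON wrapper around it does.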

Milestone 2: Understanding the Model Deployment

Deploying a model to an online endpoint hosts it on a web server that returns predictions over HTTP. Online endpoints are used for real-time inference in synchronous, low-latency requests. (Learn more: here)

You get two things from the deployment: an Endpoint, which is a link that you can use to call your model (make HTTP requests), and an API Key, which is used to make authenticated requests to the endpoint. Think of the API key as a password that gives you access to your account.
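As a rough illustration of how the endpoint and the API key work together, the sketch below builds (but does not send) an authenticated HTTP request using only the Python standard library. The URL and key are placeholders, build_request is a hypothetical helper name, and the Bearer header format is an assumption that you should compare with the ready-made code Azure gives you for your deployment.

```python
import json
import urllib.request


def build_request(endpoint_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Create a POST request that carries the API key in the Authorization header."""
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",  # assumed header format
    }
    return urllib.request.Request(endpoint_url, data=body, headers=headers, method="POST")


# Placeholder values: replace them with the ones from your own deployment.
req = build_request(
    "https://example.invalid/score",
    "<your-api-key>",
    {"inputs": {"input_string": ["Hello"]}},
)
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
```

Nothing is sent over the network here; the request object only shows where each of the two values ends up.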

Now, let's deploy the three models with the quick deployment button that is visible on the top of each model's page. (Learn how to do this in detailed steps from here)

Note: some models require GPU machines, which might not be available to everyone. If one is not available to you, you can request quota and wait for it to be granted.

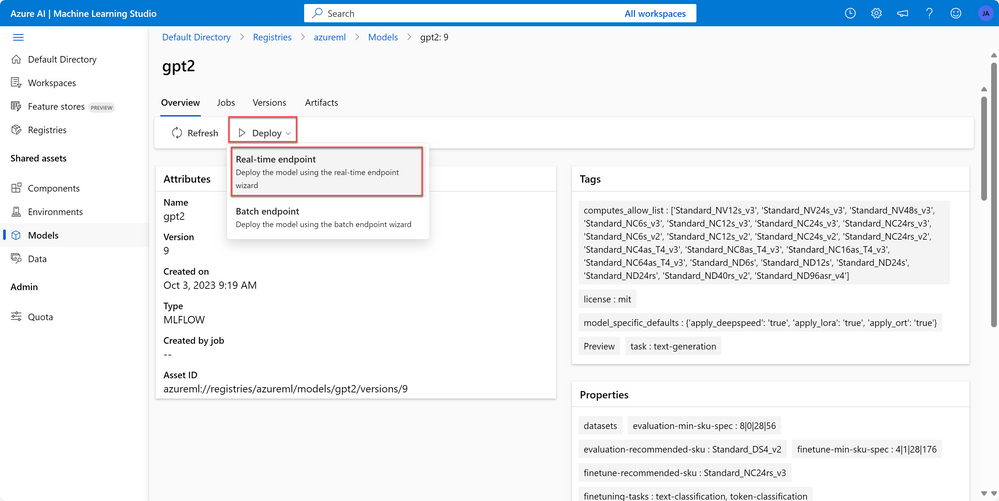

For GPT-2:

Select Deploy, then Real-time endpoint. Select your Subscription and your Workspace, then select Proceed to workspace.

Perform the following tasks:

- Select the Virtual machine you'd like to use.

- Enter an Instance count. (For high availability, Microsoft recommends you set it to at least 3)

- Enter an Endpoint name. Any unique name works.

- Enter a Deployment name.

- Select Deploy.

Note: selecting a larger Virtual machine incurs more charges, and so does increasing the number of instances. Both may improve the speed and/or availability of your model, but at a higher cost.

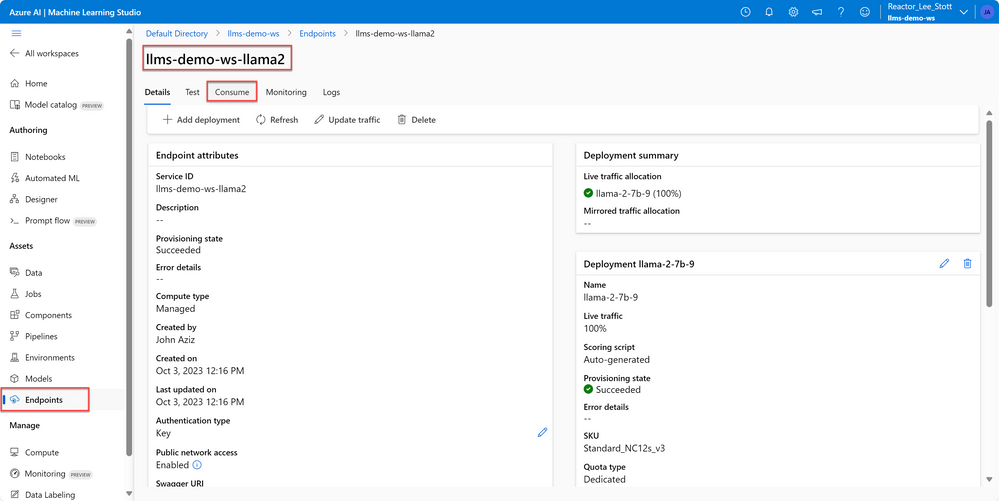

For Llama 2:

Perform the same steps as the previous model.

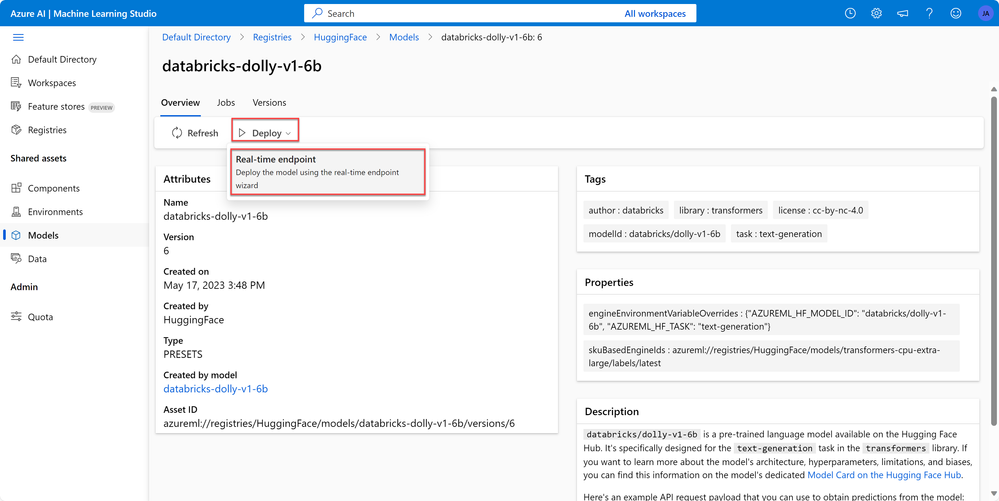

For Dolly v1:

Perform the same steps as the previous model.

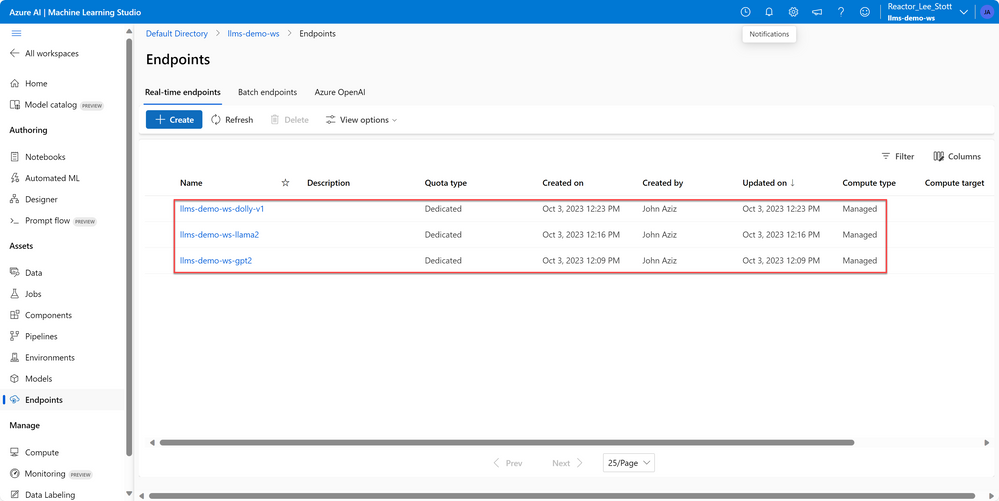

Now, you have successfully deployed three large language models ready for you to use in your different applications, but how? That's what you will learn in the next part!

Milestone 3: Understanding the Integration with Web Applications

In the previous milestone, you learned that deploying your model gives you the ability to call it with HTTP requests. Most programming languages have built-in libraries to help you make requests and handle responses, but what if you don't know any programming language? Azure Machine Learning Studio gives you ready-made code for each deployed model. You can run it with only a few small adjustments, without any searching on the internet.

If you open any model's deployment page from the endpoints tab you'll find a tab called Consume.

In the Consume tab, you can find the REST endpoint and API Key I referred to earlier which are really important and must be kept safe. (Don't share them with anyone as they will have access to your deployment)
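One common way to keep these two values out of your source code is to read them from environment variables. The sketch below assumes two hypothetical variable names, AZUREML_ENDPOINT_URL and AZUREML_API_KEY; they are not defined by Azure, so pick whatever names suit your setup.

```python
import os


def load_credentials(env=os.environ):
    """Read the endpoint URL and API key from environment variables.

    The variable names are hypothetical; choose your own.
    """
    endpoint = env.get("AZUREML_ENDPOINT_URL")
    api_key = env.get("AZUREML_API_KEY")
    if not endpoint or not api_key:
        raise RuntimeError("Set AZUREML_ENDPOINT_URL and AZUREML_API_KEY before running.")
    return endpoint, api_key


# Demo with an in-memory mapping instead of the real environment.
demo_env = {
    "AZUREML_ENDPOINT_URL": "https://example.invalid/score",
    "AZUREML_API_KEY": "<your-api-key>",
}
endpoint, api_key = load_credentials(demo_env)
print(endpoint)
```

In a real script you would call load_credentials() with no arguments, so the values never appear in a file you might share.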

If you scroll down you can also find the ready-made code that I mentioned earlier.

Let's give the Python code a test!

On your local machine do the following:

- Open your favorite editor, for example VS Code.

- Create a new file and give it the .py extension. For example main.py.

- Copy and paste the code into it.

You just have to make the following three little adjustments:

- Add your API key as it won't be added automatically for you.

- Remember that the input has to be in a specific shape, which you already know where to find, so modify it like the screenshot below.

- Using the input_string alone generated very poor sentences, so I used the optional parameters to improve the generated text. (Feel free to experiment by adding or removing parameters)

Now, hit the run button and voilà, it works!
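For reference, the adjusted script roughly follows the pattern sketched below. This is not the exact code Azure generates; the constants are placeholders, call_endpoint is a hypothetical name, and the payload and response shapes are assumptions for GPT-2. The fake_urlopen function only exists so that the sketch runs offline; drop the urlopen argument to call a real endpoint.

```python
import io
import json
import urllib.error
import urllib.request

API_KEY = "<your-api-key>"  # adjustment 1: paste the key from the Consume tab
ENDPOINT_URL = "https://example.invalid/score"  # placeholder endpoint


def call_endpoint(payload, urlopen=urllib.request.urlopen):
    """Send the payload to the endpoint and return the parsed JSON response."""
    request = urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
    )
    try:
        with urlopen(request) as response:
            return json.loads(response.read())
    except urllib.error.HTTPError as error:
        raise RuntimeError(f"Request failed with status code {error.code}") from error


def fake_urlopen(request):
    """Offline stand-in that returns a canned response in the assumed shape."""
    return io.BytesIO(b'[{"0": "Once upon a time there was a model."}]')


# Adjustment 2: the input follows the shape assumed for GPT-2.
payload = {"inputs": {"input_string": ["Once upon a time"]}}
result = call_endpoint(payload, urlopen=fake_urlopen)
print(result[0]["0"])
```

Any optional parameters (adjustment 3) belong in the payload, in the position shown by the sample on your model's page.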

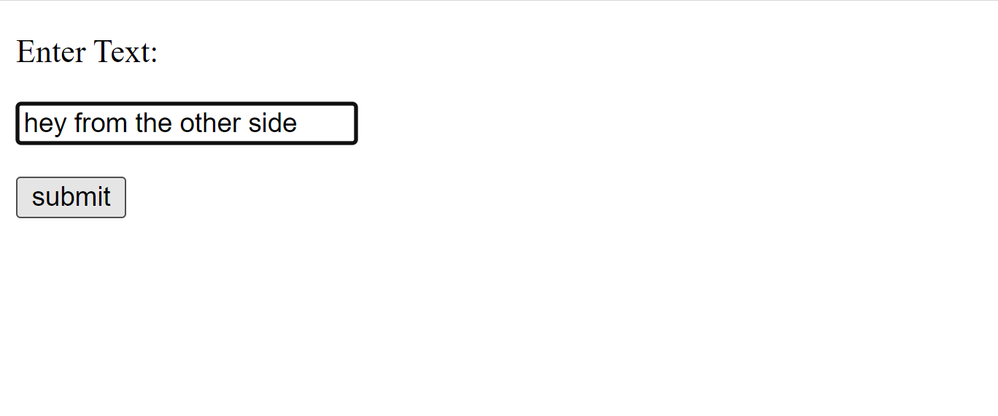

You may be asking now: where is the website? Well, creating a website is fairly easy, as you only have to:

- Create a form inside an HTML page that takes text input and sends it to the backend.

- In our backend:

  - Get the input_string from the form.

  - Format it in the shape that our model accepts.

  - Run the code that you got from Azure to make a request.

  - Receive the response and format it to get the text only.

  - Return the response as a string to the front end.

- Render it on the front end.
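The steps above can be sketched with nothing but the Python standard library. This is not the app.py from the linked repository, which may use a different framework; it is a minimal WSGI sketch in which make_app, fake_model, and the form markup are hypothetical, and the request and response shapes are the ones assumed for GPT-2.

```python
import html
import io
from urllib.parse import parse_qs

FORM_PAGE = """<form method="post">
  <input name="input_string" type="text">
  <button type="submit">Submit</button>
</form>"""


def make_app(call_model):
    """Build a WSGI app that forwards the form text to call_model."""

    def app(environ, start_response):
        page = FORM_PAGE
        if environ["REQUEST_METHOD"] == "POST":
            # Get the input_string from the form.
            size = int(environ.get("CONTENT_LENGTH") or 0)
            form = parse_qs(environ["wsgi.input"].read(size).decode("utf-8"))
            text = form.get("input_string", [""])[0]
            # Format it in the shape assumed for the model, then make the request.
            response = call_model({"inputs": {"input_string": [text]}})
            # Keep only the generated text and escape it before rendering.
            page += "<p>" + html.escape(response[0]["0"]) + "</p>"
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [page.encode("utf-8")]

    return app


def fake_model(payload):
    """Offline stand-in for the real request to the endpoint."""
    return [{"0": payload["inputs"]["input_string"][0] + " ... generated text"}]


# Simulate one form submission without starting a server.
body = b"input_string=Hello+world"
environ = {
    "REQUEST_METHOD": "POST",
    "CONTENT_LENGTH": str(len(body)),
    "wsgi.input": io.BytesIO(body),
}
page = make_app(fake_model)(environ, lambda status, headers: None)[0].decode("utf-8")
print(page)
```

To try it in a browser, you could pass the app to wsgiref.simple_server.make_server and replace fake_model with a function that calls your endpoint.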

This is the part that you will build your website around.

Check out the full website code here, in the app.py file inside the Web App folder.

You can copy and paste it but make sure to perform the adjustments mentioned above.

This is the landing page of the website. It is kept very simple so that you can build your own user interface around it.

When we enter any text and select submit it gives us back the generated text.

Milestone 4: Understanding the Integration with Power Platform

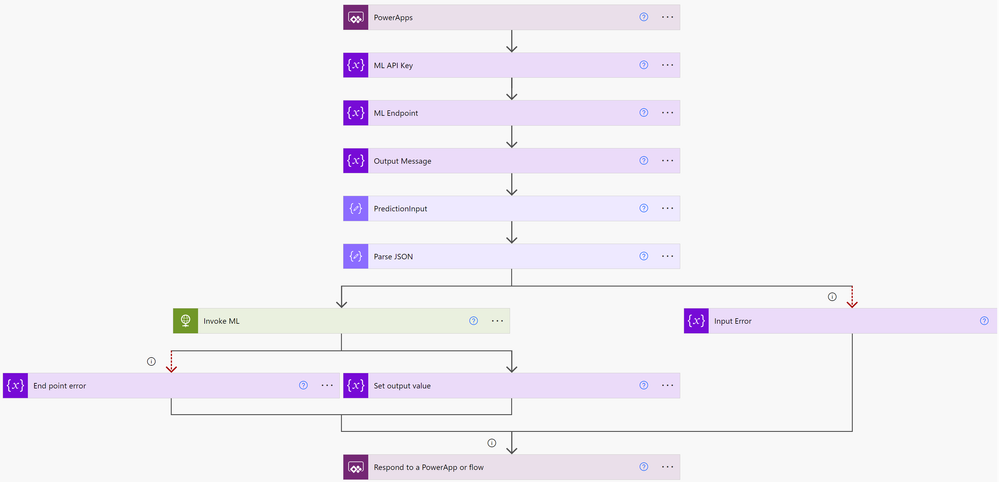

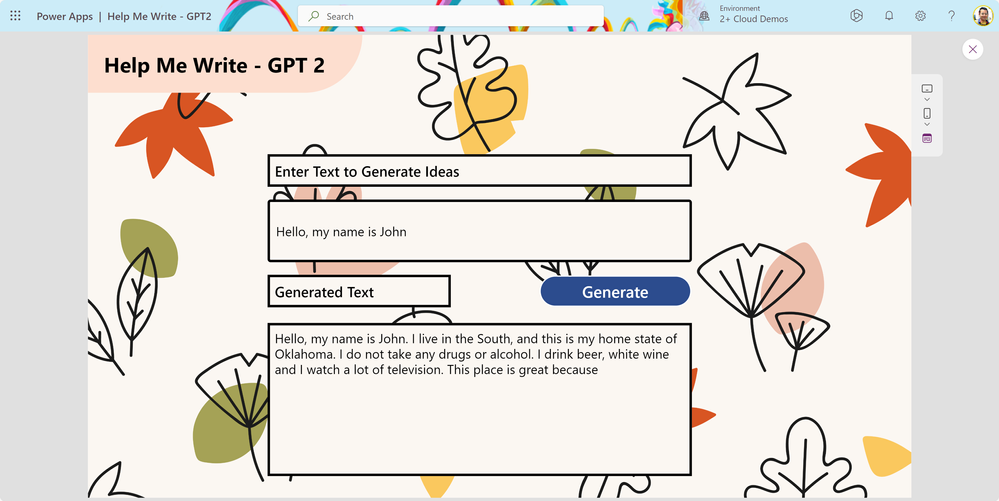

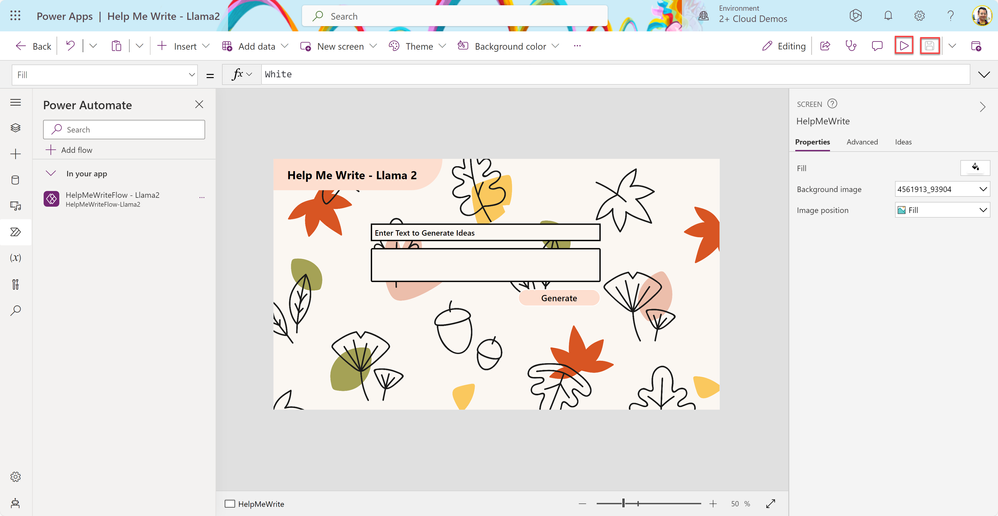

This solution has two parts: Power Automate, which does all the work, and Power Apps, which takes input from the user and sends it to Power Automate. No formatting or text manipulation is done in Power Apps. (If you want to follow along, import the solution by following the steps found here.)

To understand this more here is the code that calls Power Automate from Power Apps.

This code runs when you select the button Generate and does the following:

- Take the text from the TextInput1 text box and format it as a JSON object with the key input_string and the text itself as the value.

- Send this object to Power Automate by calling the flow's Run function.

- Store the response of the flow in the _result variable using the Set function.

Now, let's get to Power Automate where everything happens.

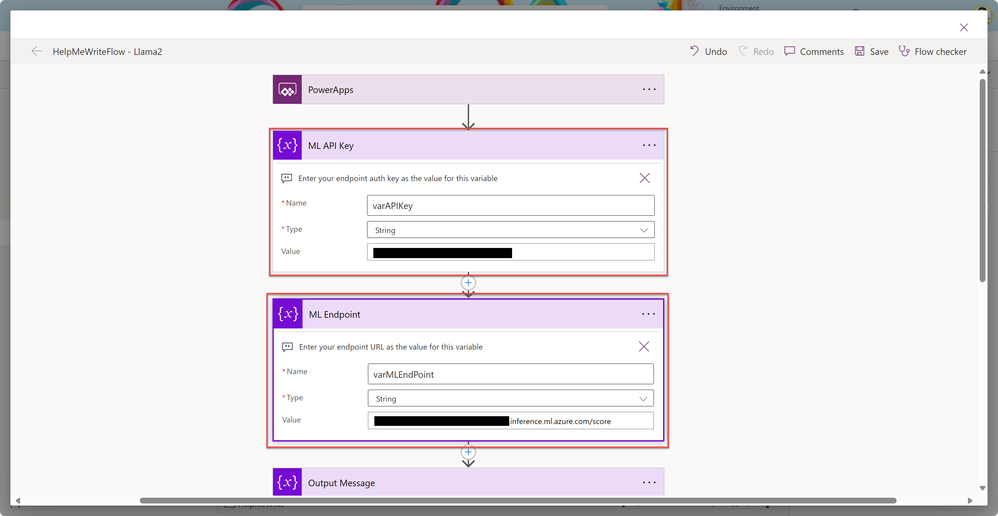

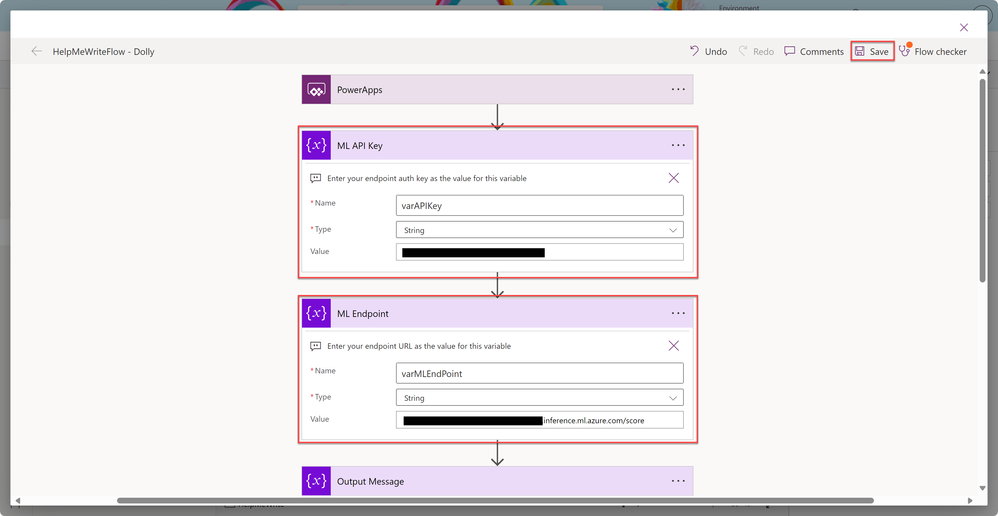

Power Automate does the following:

- Get triggered and Receive input from Power Apps.

- Initialize an ML API Key Variable.

- Initialize an ML Endpoint Variable.

- Initialize an Output Message Variable.

- Perform a Data Operation to get the Prediction Input.

- Perform a Data Operation to Parse the JSON using the right format.

- Check if there is an error in the input.

- Make an HTTP request using the previously initialized parameters and needed body shape.

- Check if there is an error in making the request and save it as output.

- Render the response and save it as an output message.

- Respond with the error or message to Power Apps.
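If you think better in code, the flow's logic can be approximated by the Python sketch below. It is only an interpretation of the steps listed above, not an export of the actual flow. The function run_flow, the http_post callable, and the error messages are all hypothetical, and the body and response shapes are the ones assumed for GPT-2.

```python
import json


def run_flow(trigger_body, api_key, endpoint, http_post):
    """Mirror the flow: parse input, call the model, render or report an error."""
    # Get the prediction input and parse the JSON sent by Power Apps.
    try:
        prediction_input = json.loads(trigger_body)["input_string"]
    except (ValueError, KeyError, TypeError):
        return {"error": "The input could not be parsed."}
    # Check if there is an error in the input.
    if not isinstance(prediction_input, str) or not prediction_input.strip():
        return {"error": "The input text is empty."}
    # Make the HTTP request with the body shape the model expects.
    body = {"inputs": {"input_string": [prediction_input]}}
    status, response = http_post(endpoint, api_key, body)
    # Check if there is an error in making the request.
    if status != 200:
        return {"error": f"The request failed with status {status}."}
    # Render the response and respond with the message.
    return {"message": response[0]["0"]}


def fake_post(endpoint, api_key, body):
    """Offline stand-in for the HTTP action."""
    return 200, [{"0": body["inputs"]["input_string"][0] + " and so on"}]


print(run_flow('{"input_string": "Dear team"}', "<key>", "<endpoint>", fake_post))
print(run_flow("not json", "<key>", "<endpoint>", fake_post))
```

The two places you would touch when switching models are the body passed to http_post and the expression that reads the response.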

So, you must modify steps 2 and 3 (the API key and REST endpoint variables), as these values are different for each model and change each time you create a new deployment. Also, you need to check steps 8 and 10 (the HTTP request and the response rendering) to make sure that the input shape and output shape are as expected.

For GPT-2:

You don't have to modify anything other than steps 2 and 3 as this App and Cloud Flow were initially created to work with GPT-2.

Let's add our Endpoint and API key!

Save and run the application to test it out.

Congratulations! You have successfully integrated the GPT-2 Large Language Model into your Power Platform.

If you just want to try this out, you can find the full solution on john0isaac/AzureML-PowerAppSolution (github.com). Download the zip file located inside the HelpMeWrite GPT-2 Solution folder and import it by following the steps found here.

For Llama 2:

You have to check steps 8 and 10 in addition to modifying steps 2 and 3 in Power Automate Cloud Flow.

Let's add our Endpoint and API key!

Modify the HTTP request to match our input shape by doing the following:

- Change the word inputs to input_data.

- Add the optional parameters to enhance the response.

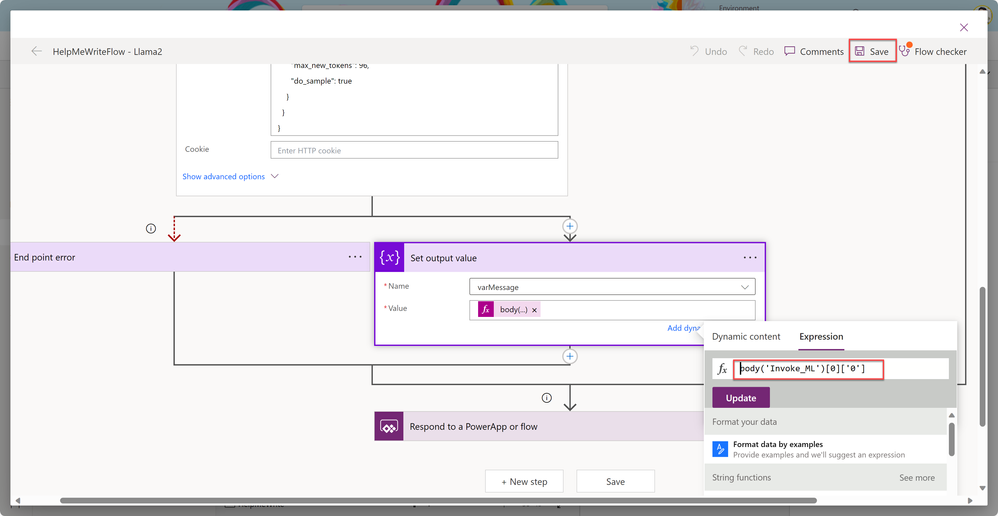
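Expressed in Python, the two changes to the request body look roughly like the sketch below. The helper to_llama2_body is a hypothetical name, the placement of parameters inside input_data is an assumption, and the parameter names and values are illustrative only. Check the sample on the Llama 2 model page for the options it actually supports.

```python
import copy
from typing import Optional


def to_llama2_body(gpt2_body: dict, parameters: Optional[dict] = None) -> dict:
    """Rename the top-level key and attach optional generation parameters."""
    input_data = copy.deepcopy(gpt2_body["inputs"])
    if parameters:
        # Assumed placement: parameters sit next to input_string.
        input_data["parameters"] = dict(parameters)
    return {"input_data": input_data}


gpt2_body = {"inputs": {"input_string": ["Hello"]}}
# Illustrative values only.
llama_body = to_llama2_body(gpt2_body, {"temperature": 0.6, "max_new_tokens": 96})
print(llama_body)
```

The original body is left untouched, so the same flow input can still be used for GPT-2.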

You don't need to change step 10 as this is the correct shape for the output. Save and close the flow.

Save and run the application to test it out.

Congratulations! You have successfully integrated the Llama 2 Large Language Model into your Power Platform.

If you just want to try this out, you can find the full solution on john0isaac/AzureML-PowerAppSolution (github.com). Download the zip file located inside the HelpMeWrite Llama2 Solution folder and import it by following the steps found here.

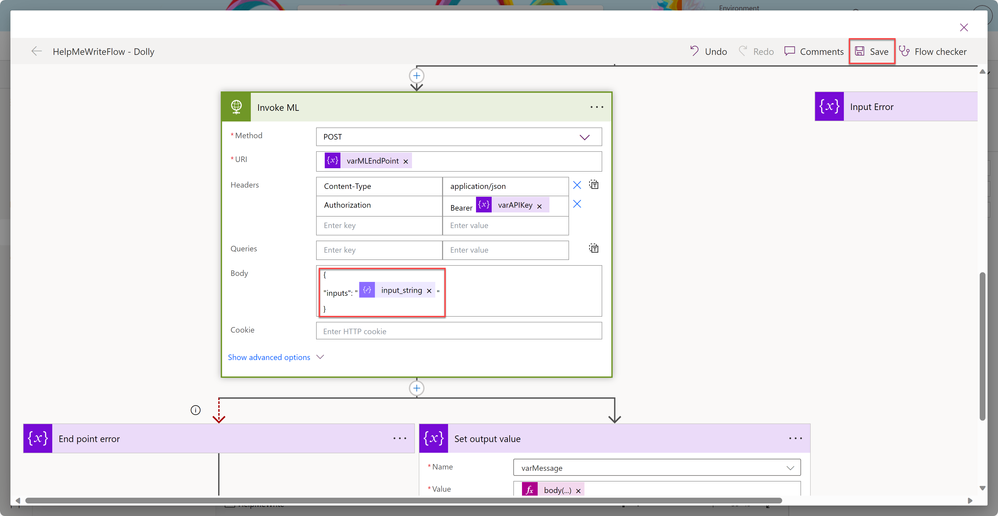

For Dolly v1:

You have to check steps 8 and 10 in addition to modifying steps 2 and 3 in Power Automate Cloud Flow.

Let's add our Endpoint and API key!

Modify the HTTP request to match your input shape by changing the format of the JSON request.

You also need to change step 10, as the existing expression does not match this model's output shape. Replace the key '0' with 'generated_text'.

I found this out by testing the model with the ready-made Python code and taking note of the returned JSON response.
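The difference between the two output shapes can be handled in one place, as in the sketch below. It assumes the response is a JSON list whose first item holds the text under either '0' or 'generated_text'; extract_generated_text is a hypothetical helper, so adapt it to whatever your own test call returns.

```python
def extract_generated_text(response):
    """Return the generated text from either assumed response shape."""
    first = response[0]
    for key in ("generated_text", "0"):
        if key in first:
            return first[key]
    raise KeyError(f"no known text key in response item: {sorted(first)}")


# Shape assumed for GPT-2 and Llama 2.
print(extract_generated_text([{"0": "text from GPT-2 or Llama 2"}]))
# Shape assumed for Dolly v1.
print(extract_generated_text([{"generated_text": "text from Dolly v1"}]))
```

If a new model raises the KeyError, print the raw response once and add its key to the tuple.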

Select update after changing the text then Save and close.

Save and run the application to test it out.

Congratulations! You have successfully integrated the Dolly v1 Large Language Model into your Power Platform.

If you just want to try this out, you can find the full solution on john0isaac/AzureML-PowerAppSolution (github.com). Download the zip file located inside the HelpMeWrite Dolly Solution folder and import it by following the steps found here.

Clean Up

To avoid incurring additional charges on your account, do the following:

- Delete the three deployments from the endpoints tab on Azure Machine Learning Studio.

- Delete the Azure Machine Learning Workspace.

- Delete the Power Apps App.

- Delete the Power Automate Cloud Flow.

Conclusion

Congratulations, you have learned and applied all the concepts behind how to use different large language models on Azure and integrate them into your Web Application or Power App.

This gives you the ability to explore other large language models available on Microsoft Azure, or any other type of machine learning model. You now know that the code to call the model is provided for you, and you can work out the input and output shapes yourself.

Found this useful? Share it with others and follow me to get updates on:

- Twitter (twitter.com/john00isaac)

- LinkedIn (linkedin.com/in/john0isaac)

You can learn more at:

- Fundamentals of Generative AI - Training | Microsoft Learn

- Explore the Azure Machine Learning workspace - Training | Microsoft Learn

- Deploy and consume models with Azure Machine Learning - Training | Microsoft Learn

- Introduction to solutions for Microsoft Power Platform - Training | Microsoft Learn

- Manage solutions in Power Apps and Power Automate - Training | Microsoft Learn

Join us live on November the 2nd, 2023 to learn more about Empowering Tech Entrepreneurs: Harnessing a LLM in Power Platform

Register for this event!

2 November, 2023 | 3:30 PM - 4:30 PM GMT

Learn about the new capabilities of Azure Machine Learning Registry that enable you to deploy Large Language Models like GPT and many more, and integrate them with Power Platform, with John Aziz, a Gold Microsoft Learn Student Ambassador from Egypt, and Lee Stott, Principal Cloud Advocate Manager.

In this session, you'll learn about Large Language Models and the steps you need to perform to deploy them to Azure Machine Learning, discover how to set up an Azure Machine Learning Workspace, deploy the machine learning model, learn about Power Apps and Power Automate, and integrate the deployed model with Power Apps. Follow along as we provide clear instructions, and make the process accessible to both beginners and experienced tech enthusiasts.

By the end, you'll have a fully functional application capable of generating text and creative writing ideas. Connect with John and Lee on Twitter and LinkedIn to explore more Microsoft tools and cool tech solutions.

Do you want to become an entrepreneur and harness the power of AI in building your startup?

Join Microsoft Founders Hub for a chance to earn up to $2,500 of OpenAI credits and $1,000 of Azure credits to build your startup!

Feel free to share your comments and/or inquiries in the comment section below.

See you in future demos!