This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Context

Conversational interaction with data in enterprise applications is becoming increasingly common. Developers now frequently use the RAG (Retrieval Augmented Generation) pattern for this purpose. This pattern combines OpenAI models with vector storage technologies such as Cognitive Search, allowing users to converse in natural language with their enterprise data, regardless of its format. An example of this can be seen in a GitHub sample.

The codeful way today

Building such applications typically involves assembling a few key building blocks: a dynamic ingestion pipeline, and a chat interface that communicates with vector databases and Large Language Models (LLMs). Various components can be combined to perform the data ingestion and to provide a backend for the chat interface. This backend accepts prompts and generates responses during interactions. However, as with most solutions available today, managing and controlling all of these elements in code can be quite challenging.

Codeless approach via Logic Apps

The focus here is on how Logic Apps simplify backend management. Logic Apps offer pre-built connectors as building blocks, which streamlines the backend. This lets you concentrate on sourcing the data and on ensuring that, when a prompt is received, the search returns the most current information. As an example, let's take the sample built on Azure OpenAI + Cognitive Services.

We're excited to introduce new service provider connectors for Azure OpenAI and AI Search, designed to help developers create applications that ingest data and facilitate simple chat conversations.

To understand this better, let's break down the backend logic into two key workflows:

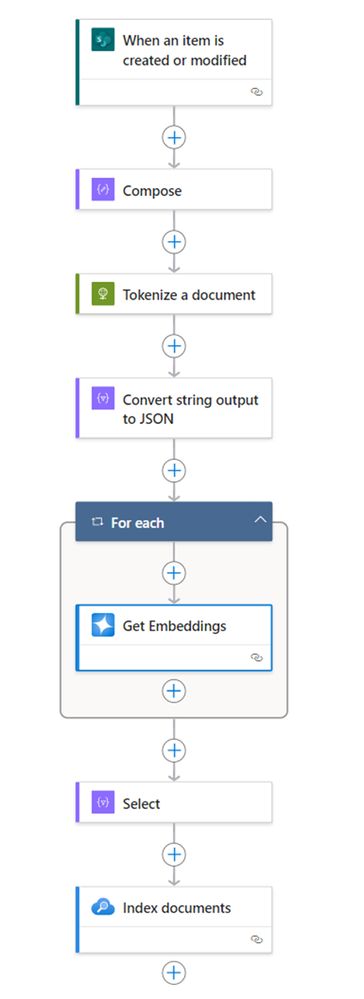

Ingestion Workflow:

Developers can set up triggers to retrieve PDF files, either on a recurrence or in response to specific events, such as the arrival of a new file in a chosen storage system like SharePoint or OneDrive. Here's a simplified view of what that ingestion workflow may look like:

- Data Acquisition: Retrieve data from any third-party storage system.

- Data Tokenization: In this scenario, tokenizing a PDF document.

- Embeddings Generation: Utilize Azure OpenAI to create embeddings.

- Document Indexing: Index the document using AI Search.
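
The four steps above can be sketched in a few lines of Python. This is only an illustration of the data flow, not how the Logic Apps connectors are implemented: in a workflow each step would be a connector action. The names `chunk_text`, `fake_embed`, and `build_index_documents` are hypothetical, word-window splitting stands in for real tokenization, and `fake_embed` is a deterministic placeholder for an Azure OpenAI embeddings call (its vectors carry no semantic meaning).

```python
import hashlib
from typing import Callable, Dict, List


def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping word windows (a simple stand-in for tokenization)."""
    if max_words <= overlap:
        raise ValueError("max_words must be greater than overlap")
    words = text.split()
    step = max_words - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this window has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


def fake_embed(text: str, dims: int = 8) -> List[float]:
    """Hypothetical placeholder for an embeddings call: deterministic, not semantic."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:dims]]


def build_index_documents(
    doc_id: str,
    text: str,
    embed: Callable[[str], List[float]] = fake_embed,
    max_words: int = 200,
    overlap: int = 20,
) -> List[Dict[str, object]]:
    """Produce one index-ready record per chunk: id, content, and embedding."""
    return [
        {"id": f"{doc_id}-{i}", "content": chunk, "embedding": embed(chunk)}
        for i, chunk in enumerate(chunk_text(text, max_words, overlap))
    ]
```

Calling `build_index_documents("handbook", extracted_pdf_text)` returns a list of records that could then be sent to a search index. Passing a real embeddings function as `embed` is the only change needed to move beyond the placeholder.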

By implementing this pattern with any data source, developers can save considerable time and effort when building ingestion pipelines. This approach not only reduces the amount of code to write but also gives your workflows built-in authentication, monitoring, and deployment processes. Essentially, it brings all the advantages that Logic Apps (Standard) offers today.

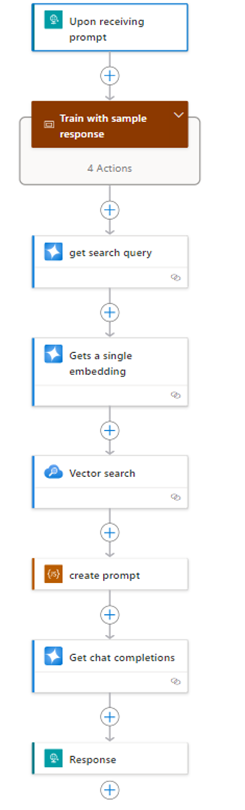

Chat Workflow:

As data continues to be ingested into vector databases, it should be easily searchable so that when a user asks a question, the Logic Apps backend can process the prompt and generate a reliable response.

Here is what the chat workflow may look like:

- Prompt capture: Capturing JSON via HTTP request trigger

- Model training: Adapting the model to sample responses, for instance by supplying example exchanges alongside the prompt (modeled on GitHub example)

- Query generation: Crafting search queries for vector database

- Embedding conversion: Transforming queries into vector embeddings

- Vector search operation: Executing searches in the preferred database

- Prompt creation and chat completion: Use straightforward JavaScript to build prompts and call the chat completion API, grounding the responses in the retrieved content so that chat conversations stay reliable.
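
To make the last two steps more concrete, here is a minimal sketch of a vector search followed by prompt creation. It is written in Python purely for illustration (the workflow itself would use connector actions and an inline JavaScript step), and everything in it is an assumption rather than the connectors' behavior: `cosine_similarity`, `top_k`, and `build_messages` are hypothetical helpers, the index is an in-memory list of records with `id`, `content`, and `embedding` fields, and the search is a brute-force cosine ranking rather than a call to a vector database. The output of `build_messages` follows the role/content message list shape used by chat completion APIs.

```python
import math
from typing import Dict, List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float], index: List[Dict], k: int = 3) -> List[Dict]:
    """Brute-force vector search: rank every record by similarity to the query."""
    ranked = sorted(
        index,
        key=lambda doc: cosine_similarity(query_vec, doc["embedding"]),
        reverse=True,
    )
    return ranked[:k]


def build_messages(
    question: str,
    sources: List[Dict],
    few_shot: Optional[List[Dict[str, str]]] = None,
) -> List[Dict[str, str]]:
    """Assemble a chat message list that grounds the answer in the retrieved sources."""
    source_lines = "\n".join(f"[{doc['id']}] {doc['content']}" for doc in sources)
    system = (
        "Answer using only the sources below. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{source_lines}"
    )
    messages = [{"role": "system", "content": system}]
    messages.extend(few_shot or [])  # optional sample exchanges
    messages.append({"role": "user", "content": question})
    return messages
```

In use, the query embedding would be passed to `top_k`, and the returned records to `build_messages`; the resulting list is what would be submitted for chat completion. Restricting the system message to the retrieved sources is one common way to keep answers tied to the ingested data.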

Every step in the process, from tokenizing and generating embeddings to vector searching, runs in a stateless workflow, which helps keep performance swift, and together these steps allow the AI to draw the relevant insights and information from your data files.

It goes without saying that in today's fast-paced tech environment, conserving time and development resources in creating OpenAI applications is crucial. That is why we are thrilled to share this capability, streamlining the process for developers to build dynamic ingestion or chat workflows with just a few essential building blocks.

Try it out

The new in-app connectors are currently in Private Preview. Feel free to reach out to try this experience. We are planning a Public Preview for January 2024.