This post has been republished via RSS; it originally appeared on Microsoft Tech Community - Latest Blogs.

Introduction

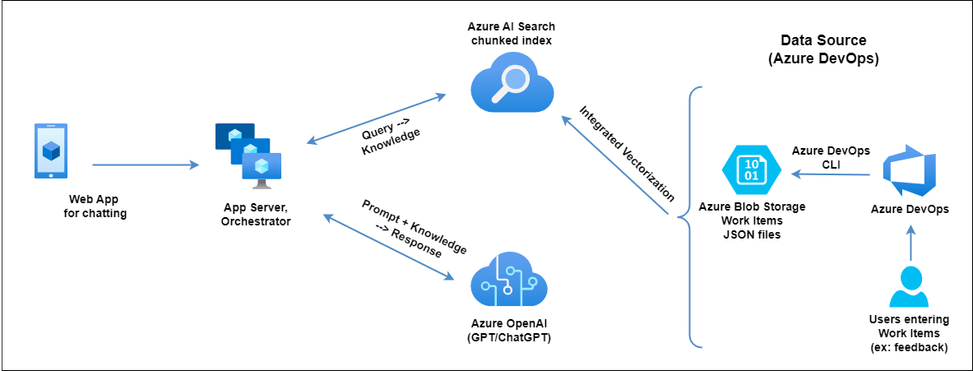

In software development, being able to quickly find and interpret Azure DevOps (ADO) work items is crucial. Our solution offers a ChatGPT-like experience over your ADO work items. Using the Retrieval Augmented Generation (RAG) pattern together with Azure AI Search and Azure OpenAI, we've built an integration that makes querying ADO work items faster and more efficient.

Solution Overview

This solution builds on top of the Azure Search OpenAI Demo.

The solution uses the Azure DevOps CLI to periodically export ADO work items as JSON and store them in Azure Blob Storage. Next, an Azure AI Search indexer runs on a schedule to chunk the JSON data and create vector embeddings for the index, using Azure AI Search's Integrated Vectorization feature. When users ask about their work items through the chat app, the orchestrator queries the Azure AI Search index using hybrid search (keyword search plus vector search over an embedding of the user query) with the semantic ranker to retrieve relevant knowledge (work items). The orchestrator then sends that knowledge, along with the user query, to Azure OpenAI. Finally, Azure OpenAI builds a response based on the knowledge provided, and that response is served to the user.

Part 1: Exporting Work Items to Azure Blob Storage

The journey begins with the Azure DevOps CLI, which exports work items such as User Stories and Product Backlog Items (or custom work item types such as Product Feedback) to JSON files. An Azure Function runs periodically, exporting any new or modified work items as JSON files and securely storing them in Azure Blob Storage. This keeps the data current and accurate.
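The "new or modified" part can be handled by remembering when the last export ran and filtering on `System.ChangedDate`. The Bash sketch below illustrates that idea rather than the Azure Function itself; the state file `.last-export` and all function names are hypothetical. WIQL compares dates at day precision by default, so the date literal has no time part, and a run may re-export items already exported earlier the same day, which is harmless if each export overwrites the item's file.

```bash
#!/usr/bin/env bash
# Sketch: incremental export, querying only work items changed since the last run.
# STATE_FILE and all function names are hypothetical.

STATE_FILE=${STATE_FILE:-.last-export}

# Print the start date of the last successful run, or an early default on the first run.
read_last_export() {
  if [[ -s "$STATE_FILE" ]]; then
    cat "$STATE_FILE"
  else
    echo "2000-01-01"
  fi
}

# Persist the date (YYYY-MM-DD) on which the just-finished run started.
record_export() {
  printf '%s\n' "$1" > "$STATE_FILE"
}

# Build WIQL for items in the given project changed on or after the given date.
# No time part: WIQL compares dates at day precision by default.
build_changed_since_wiql() {
  local project=$1 since=$2
  printf "SELECT [System.Id], [System.Title], [System.State] FROM WorkItems WHERE [System.TeamProject] = '%s' AND [System.ChangedDate] >= '%s' ORDER BY [System.ChangedDate]" \
    "$project" "$since"
}
```

A run could capture `started=$(date -u +%Y-%m-%d)` first, then call `az boards query --wiql "$(build_changed_since_wiql MyProject "$(read_last_export)")"` and, on success, `record_export "$started"`. Recording the start date rather than the end date means an item changed while the export was running is picked up again next time.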

Setting Up the Azure DevOps CLI and Exporting Work Items

The first step in our solution involves setting up the Azure DevOps CLI and exporting work items. This process is streamlined with a Bash script, which automates the retrieval of work items as JSON files. These JSON files are then stored securely in Azure Blob storage.
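Setting up the CLI mostly means installing the azure-devops extension and, optionally, storing a default organization and project so later commands can omit them. A minimal sketch, where `setup_ado_cli` is a hypothetical helper and the organization URL and project are placeholders. For unattended runs such as a pipeline, the extension can read a personal access token from the `AZURE_DEVOPS_EXT_PAT` environment variable; otherwise it uses your `az login` session.

```bash
#!/usr/bin/env bash
# Sketch: one-time Azure DevOps CLI setup. The helper name is hypothetical;
# the organization URL and project passed in are placeholders.

setup_ado_cli() {
  local org_url=$1 project=$2

  # The `az boards` commands ship in the azure-devops extension.
  az extension add --name azure-devops

  # Store defaults so later commands can omit --org and --project.
  az devops configure --defaults organization="$org_url" project="$project"

  # For non-interactive runs, the extension reads a PAT from AZURE_DEVOPS_EXT_PAT.
  if [[ -z "${AZURE_DEVOPS_EXT_PAT:-}" ]]; then
    echo "AZURE_DEVOPS_EXT_PAT is not set; relying on the 'az login' session" >&2
  fi
}
```

For example, `setup_ado_cli https://dev.azure.com/contoso Fabrikam` (both values are placeholders).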

Embedding and Utilizing Queries in the Script

To automate the export, we use queries. Queries in Azure DevOps fetch specific sets of work items based on criteria such as type, state, or custom fields. These Azure Boards queries can either be saved in Azure Boards or be embedded in the script as Work Item Query Language (WIQL) queries. We then use the Azure DevOps CLI to run the query and export the data to JSON files.
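Embedding the query in the script can be as simple as keeping the WIQL in a variable and passing it to `az boards query --wiql` (a saved query is run with `--id` instead). This sketch assumes the azure-devops extension is installed and you are signed in; the work item types, the selected fields, and the `run_wiql` helper name are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: keep the WIQL in the script and run it with `az boards query --wiql`.
# Work item types, selected fields, and the helper name are illustrative.

WIQL=$(cat <<'EOF'
SELECT [System.Id], [System.Title], [System.State], [System.WorkItemType]
FROM WorkItems
WHERE [System.TeamProject] = @project
  AND [System.WorkItemType] IN ('User Story', 'Product Backlog Item')
ORDER BY [System.ChangedDate] DESC
EOF
)

# Run the embedded query against an organization URL and project name.
run_wiql() {
  local org=$1 project=$2
  az boards query --org "$org" --project "$project" --wiql "$WIQL" --output json
}
```

Keeping the WIQL in the script means the export is versioned together with the code, while a saved query can be edited in Azure Boards without touching the script.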

- Use managed queries to list work items - Azure Boards | Microsoft Learn

- View or run a query in Azure Boards and Azure DevOps - Azure Boards | Microsoft Learn

The CLI returns at most 1000 rows per query, so if you have a lot of data, you can call the REST API directly or split the export into multiple queries that each return fewer rows, for example one per year or per quarter.
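Splitting the export by date is one way to keep each query under the limit. The sketch below generates per-quarter date ranges and a WIQL query for each; the helper names are hypothetical, and filtering on `System.CreatedDate` is an assumption (any date field that partitions your items works).

```bash
#!/usr/bin/env bash
# Sketch: split one large export into per-quarter WIQL queries.
# Helper names are hypothetical; System.CreatedDate is one possible partition field.

# Print "start end" date pairs (end exclusive) for the four quarters of a year.
quarter_ranges() {
  local year=$1 q start_month end_month end_year
  for q in 1 2 3 4; do
    start_month=$(( (q - 1) * 3 + 1 ))
    end_month=$(( start_month + 3 ))
    end_year=$year
    if (( end_month > 12 )); then
      # Q4 ends on January 1 of the following year.
      end_month=1
      end_year=$(( year + 1 ))
    fi
    printf '%d-%02d-01 %d-%02d-01\n' "$year" "$start_month" "$end_year" "$end_month"
  done
}

# Build WIQL returning items created in [start, end).
quarter_wiql() {
  local start=$1 end=$2
  printf "SELECT [System.Id] FROM WorkItems WHERE [System.TeamProject] = @project AND [System.CreatedDate] >= '%s' AND [System.CreatedDate] < '%s'" \
    "$start" "$end"
}
```

Each range can then be run in turn, for example `while read -r start end; do az boards query --wiql "$(quarter_wiql "$start" "$end")"; done < <(quarter_ranges 2023)`. If a single quarter still exceeds 1000 items, the same approach works per month.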

Selecting Different Fields in Queries

Finally, we select and export only the fields we care about. Query results are often more verbose than needed, and the extra detail becomes noise when you explore your data.

In the following item returned by a query, there is a lot of extra data we can clean up, such as the nested CreatedBy identity object and the Id that appears twice.

```json
{
  "fields": {
    "System.CreatedBy": {
      "_links": {
        "avatar": {
          "href": "[Anonymized Avatar URL]"
        }
      },
      "descriptor": "[Anonymized Descriptor]",
      "displayName": "[Anonymized Name]",
      "id": "[Anonymized ID]",
      "imageUrl": "[Anonymized Image URL]",
      "uniqueName": "[Anonymized Email]",
      "url": "[Anonymized URL]"
    },
    "System.Id": 12484
  },
  "id": 12484
}
```

The Azure CLI can filter, rename, and reshape JSON results through its --query argument, which supports JMESPath, a query language for JSON.

By using a JMESPath query, we can simplify the result objects so that each record is easier to index and its intent is clearer.

```bash
$ recordFormat='[].{Id:fields."System.Id", CreatedBy:fields."System.CreatedBy".displayName}'
$ az boards query --org "$org" --id "$queryId" --query "$recordFormat"
```

```json
[
  {
    "CreatedBy": "[Anonymized Person]",
    "Id": 12484
  }
]
```

This gives us more concise, targeted results that reduce a lot of duplication and noise.
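The same technique extends to the fields that matter most for chatting with your data. The projection below is an example, not a required schema: the field reference names are standard Azure DevOps fields, but `az boards query` returns the columns the query selects, so add the fields to your saved query (or WIQL `SELECT`) first. `query_items` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: a wider JMESPath projection for indexing. The field list is an example;
# the saved query (or WIQL SELECT) must include these fields for them to appear.

recordFormat='[].{
  Id: fields."System.Id",
  Type: fields."System.WorkItemType",
  Title: fields."System.Title",
  State: fields."System.State",
  AssignedTo: fields."System.AssignedTo".displayName,
  Tags: fields."System.Tags",
  Description: fields."System.Description",
  ChangedDate: fields."System.ChangedDate"
}'

query_items() {
  local org=$1 query_id=$2
  # Quote the expression so the shell passes it to --query as a single argument.
  az boards query --org "$org" --id "$query_id" --query "$recordFormat" --output json
}
```

Keep in mind that `System.Description` is stored as HTML, so the exported text includes markup unless you strip it.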

The Script: Automated Script for Exporting Work Items

The automation script plays a crucial role in the export process. A sample version of the script is linked below. It can be run manually, or put in a pipeline and run on a schedule. The result is a folder of JSON files, one per work item. From there you can upload the files to Azure Blob Storage manually, automate the upload in the script, or add those steps to a pipeline. In the future we can show how to make this a continuous process instead of a snapshot export.
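Here is a minimal sketch of the "one file per work item" step followed by the upload, assuming a saved query ID, an existing storage account and container, and a signed-in identity with the Storage Blob Data Contributor role (required for `--auth-mode login`). The function names, output folder, and projection are hypothetical, and the linked script may do this differently.

```bash
#!/usr/bin/env bash
# Sketch: write one JSON file per work item, then upload the folder to Blob Storage.
# Function names, the projection, and the folder layout are hypothetical.

# Per-item projection (a single object, so no leading [] list projection).
itemFormat='{Id: id, Type: fields."System.WorkItemType", Title: fields."System.Title", State: fields."System.State", Description: fields."System.Description"}'

export_work_items() {
  local org=$1 query_id=$2 out_dir=$3
  mkdir -p "$out_dir"
  # List the IDs returned by the saved query, one per line.
  az boards query --org "$org" --id "$query_id" --query '[].id' --output tsv |
    while read -r id; do
      # Fetch the item, keep only the projected fields, and write <id>.json.
      az boards work-item show --org "$org" --id "$id" --query "$itemFormat" --output json \
        > "$out_dir/$id.json"
    done
}

upload_work_items() {
  local account=$1 container=$2 out_dir=$3
  # --auth-mode login uses your Entra ID sign-in instead of an account key;
  # --overwrite replaces blobs for items that changed since the last upload.
  az storage blob upload-batch --account-name "$account" --destination "$container" \
    --source "$out_dir" --auth-mode login --overwrite
}
```

Fetching each item separately costs one call per work item, but it makes the output layout (one file per item) trivial and lets the per-item projection pick any field.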

Here is a complete script to use as an example: azure-devops-scripts/scripts/export-query-to-json.sh at main · codebytes/azure-devops-scripts (github.com)

Part 2: Indexing with Azure AI Search

Next, we use an Azure AI Search indexer to index the ADO work item data from Blob Storage, taking advantage of the Integrated Vectorization feature. This process, which can be scheduled to suit your needs, chunks the data with the Text Split skill and then converts the text into vectors with the Azure OpenAI Embedding skill. Get started with integrated vectorization using the Import and vectorize data wizard in the Azure portal.
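To make the two steps concrete, the sketch below prints a skillset of the same general shape the wizard sets up: a Text Split skill that chunks each document's content into pages, followed by an Azure OpenAI Embedding skill that turns every chunk into a vector. The skillset name, resource URI, deployment name, chunk sizes, model name, and API version are placeholders or assumptions, and the index projections the wizard also generates (which map each chunk to its own search document) are omitted, so treat this as an illustration rather than a drop-in definition.

```bash
#!/usr/bin/env bash
# Sketch: a chunk-then-embed skillset similar in shape to what the wizard creates.
# Skillset name, chunk sizes, model name, and API version are assumptions;
# index projections (one search document per chunk) are omitted.

skillset_json() {
  local aoai_uri=$1 deployment=$2
  cat <<EOF
{
  "name": "ado-workitems-skillset",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
      "context": "/document",
      "textSplitMode": "pages",
      "maximumPageLength": 2000,
      "pageOverlapLength": 500,
      "inputs": [ { "name": "text", "source": "/document/content" } ],
      "outputs": [ { "name": "textItems", "targetName": "pages" } ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "context": "/document/pages/*",
      "resourceUri": "$aoai_uri",
      "deploymentId": "$deployment",
      "modelName": "text-embedding-ada-002",
      "inputs": [ { "name": "text", "source": "/document/pages/*" } ],
      "outputs": [ { "name": "embedding", "targetName": "vector" } ]
    }
  ]
}
EOF
}

# Create or update the skillset through the Search REST API (admin key assumed).
put_skillset() {
  local service=$1 api_key=$2 aoai_uri=$3 deployment=$4
  skillset_json "$aoai_uri" "$deployment" | curl -sS -X PUT \
    "https://$service.search.windows.net/skillsets/ado-workitems-skillset?api-version=2024-07-01" \
    -H "Content-Type: application/json" -H "api-key: $api_key" --data-binary @-
}
```

The embedding skill's context of `/document/pages/*` is what makes it run once per chunk produced by the split skill.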

Once the Azure AI Search service is provisioned on the Standard pricing tier, click the "Import and vectorize data" button as shown below and follow the wizard to create the index and an indexer that periodically indexes the data.
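The schedule you choose in the wizard is stored on the indexer as `schedule.interval`, an ISO 8601 duration such as `PT1H` (the minimum is five minutes). If you would rather re-index right after an export than wait for the next scheduled run, the Search REST API can run the indexer on demand. The service name, indexer name, admin API key, and API version below are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: trigger an indexer run and check its status with the Search REST API.
# Service name, indexer name, admin API key, and API version are assumptions.

SEARCH_API_VERSION=2024-07-01

# Ask the service to run the indexer now (the service replies 202 Accepted).
run_indexer() {
  local service=$1 indexer=$2 api_key=$3
  curl -sS -X POST \
    "https://$service.search.windows.net/indexers/$indexer/run?api-version=$SEARCH_API_VERSION" \
    -H "api-key: $api_key" -H "Content-Length: 0"
}

# Print the indexer status JSON; lastResult.status reports values such as inProgress or success.
indexer_status() {
  local service=$1 indexer=$2 api_key=$3
  curl -sS \
    "https://$service.search.windows.net/indexers/$indexer/status?api-version=$SEARCH_API_VERSION" \
    -H "api-key: $api_key"
}
```

Calling `run_indexer` as the last step of the export pipeline keeps the index close to the exported data without shortening the schedule.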

Part 3: Chatting with Azure OpenAI Models using your own data

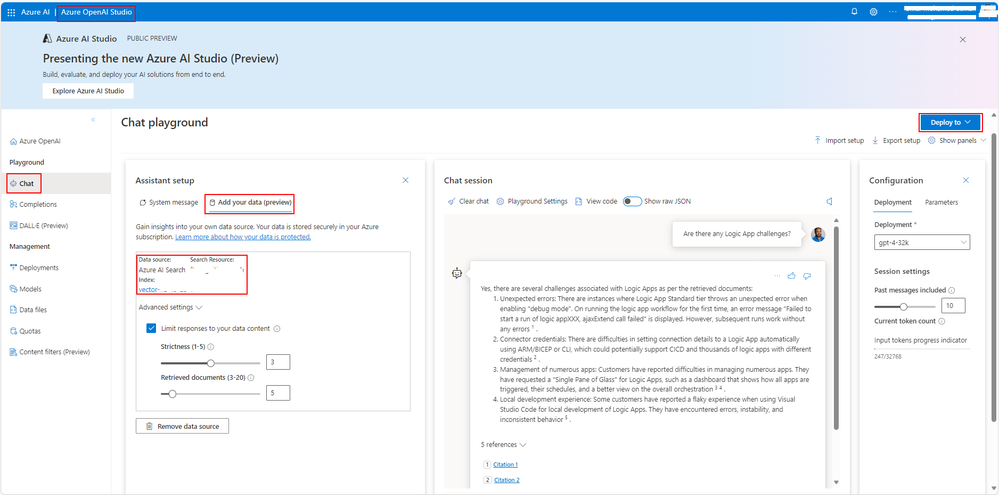

Finally, the crux of our solution lies in the Chat with Azure OpenAI models feature. Within Azure OpenAI Studio, we connect our Azure AI Search index and enable vector search using Azure OpenAI's text-embedding-ada-002 model. Selecting the Hybrid + semantic search configuration gives the best result quality, because it combines vector and keyword search and then re-ranks the results with the semantic ranker. Follow this Quickstart to Chat with Azure OpenAI models using your own data.
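The playground issues these searches for you, but it can help to see what a hybrid + semantic query looks like against the index itself. This sketch assumes the index has a vectorizer (which integrated vectorization configures), so the service can vectorize the query text via `"kind": "text"`; the vector field name `vector`, the semantic configuration name, and the API version are assumptions to check against your index definition.

```bash
#!/usr/bin/env bash
# Sketch: a hybrid (keyword + vector) query with semantic ranking against the index.
# Vector field name, semantic configuration name, and API version are assumptions.

# Minimal JSON escaping for backslashes and double quotes only
# (assumes a single-line question without control characters).
json_escape() {
  local s=$1 bs='\' dq='"'
  s=${s//"$bs"/"$bs$bs"}
  s=${s//"$dq"/"$bs$dq"}
  printf '%s' "$s"
}

# Print the request body: the same text drives the keyword search and, through the
# index's vectorizer, the vector query; the semantic ranker re-ranks the merged results.
build_hybrid_query() {
  local question semantic_config=$2 vector_field=${3:-vector}
  question=$(json_escape "$1")
  printf '{"search": "%s", "vectorQueries": [{"kind": "text", "text": "%s", "fields": "%s", "k": 50}], "queryType": "semantic", "semanticConfiguration": "%s", "top": 5}\n' \
    "$question" "$question" "$vector_field" "$semantic_config"
}

# POST a request body read from stdin to the index's search endpoint.
search_index() {
  local service=$1 index=$2 api_key=$3
  curl -sS -X POST \
    "https://$service.search.windows.net/indexes/$index/docs/search?api-version=2024-07-01" \
    -H "Content-Type: application/json" -H "api-key: $api_key" --data-binary @-
}
```

For example: `build_hybrid_query "Which user stories are blocked?" my-semantic-config | search_index my-search my-index "$SEARCH_API_KEY"`, where every name is a placeholder.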

Querying and Deployment

After integrating our data sources, the Chat playground within Azure OpenAI Studio becomes the testing ground. Here, you can ask questions about your work items and get answers through conversational AI. Once you're satisfied with the results in the studio, deploying the chat experience as a standalone web application takes a single click.

From your Azure OpenAI resource's "Model deployments" page in the Azure portal, open Azure OpenAI Studio (or Azure AI Studio). In the Chat playground, select "Add your data". Select "Azure AI Search" as the data source, then choose the Azure AI Search service and index you provisioned in Part 2. Check the "Add vector search to this search resource" box and select the Ada embedding model from the drop-down; if it isn't deployed yet, go back and deploy it first. On the "Data management" screen, select "Hybrid + semantic" as the search type.
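The same configuration can also be called programmatically through the Azure OpenAI "On Your Data" chat completions API, which takes the search index as a data source. A minimal sketch, assuming API version `2024-02-01`, an Azure OpenAI resource whose system-assigned managed identity can read the search index, and placeholder deployment, index, and semantic configuration names; `query_type` `vector_semantic_hybrid` corresponds to the "Hybrid + semantic" option.

```bash
#!/usr/bin/env bash
# Sketch: Azure OpenAI "On Your Data" chat completion grounded in the work item index.
# Names, API version, and managed-identity authentication are assumptions.

# Minimal JSON escaping for backslashes and double quotes only.
json_escape() {
  local s=$1 bs='\' dq='"'
  s=${s//"$bs"/"$bs$bs"}
  s=${s//"$dq"/"$bs$dq"}
  printf '%s' "$s"
}

# Print a request that asks one question against an Azure AI Search data source.
build_chat_request() {
  local question search_endpoint=$2 index=$3 embedding_deployment=$4 semantic_config=$5
  question=$(json_escape "$1")
  cat <<EOF
{
  "messages": [ { "role": "user", "content": "$question" } ],
  "data_sources": [
    {
      "type": "azure_search",
      "parameters": {
        "endpoint": "$search_endpoint",
        "index_name": "$index",
        "authentication": { "type": "system_assigned_managed_identity" },
        "query_type": "vector_semantic_hybrid",
        "semantic_configuration": "$semantic_config",
        "embedding_dependency": { "type": "deployment_name", "deployment_name": "$embedding_deployment" }
      }
    }
  ]
}
EOF
}

# POST a request body read from stdin to a chat model deployment.
ask_work_items() {
  local aoai_endpoint=$1 chat_deployment=$2 api_key=$3
  curl -sS -X POST \
    "$aoai_endpoint/openai/deployments/$chat_deployment/chat/completions?api-version=2024-02-01" \
    -H "Content-Type: application/json" -H "api-key: $api_key" --data-binary @-
}
```

For example, pipe `build_chat_request "What is blocking the checkout feature?" https://my-search.search.windows.net my-index my-ada-deployment my-semantic-config` into `ask_work_items https://my-aoai.openai.azure.com my-chat-deployment "$AOAI_API_KEY"`; all names are placeholders.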

Now you can start chatting with your work items as shown below. Once you're satisfied with the results, click the "Deploy to" button and choose "A new web app".

Authentication

By default, user authentication is mandatory via App Service Easy Auth, with the option to limit access to specific users or Entra ID groups, ensuring both security and flexibility.

Conclusion

Our solution not only streamlines access to work items within Azure DevOps but also enhances the user experience with intelligent and intuitive AI-driven interactions. By marrying Azure's powerful AI Search with conversational models, we've unlocked a new realm of productivity for developers and project managers alike.