This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Is security really part of responsible AI?

Yes! When talking about responsible AI, it’s impossible to extricate the two. The relationship between responsible AI and AI security is significant because:

- Ethical Considerations: Responsible AI involves ethical considerations that directly impact security, such as privacy and data protection. Ensuring that AI systems respect user privacy and secure personal data is a key aspect of responsible AI.

- Robustness and Reliability: AI systems must be robust against manipulation and attacks, which is a core principle of both responsible AI and AI security. This includes protecting against adversarial attacks and ensuring the integrity of AI decision-making processes.

- Transparency and Explainability: Part of responsible AI is making sure that AI systems are transparent and their decisions can be explained. This is crucial for security, as stakeholders need to understand how AI systems operate to trust their security measures.

- Accountability: AI systems should be accountable for their actions, which means there must be mechanisms in place to trace decisions and rectify any issues. This aligns with security practices that monitor and audit system activities to prevent and respond to breaches.

In essence, responsible AI and AI security are intertwined, with responsible AI practices enhancing the security of AI systems and vice versa. Implementing responsible AI principles helps create AI systems that are not only ethically sound but also more secure against potential threats.

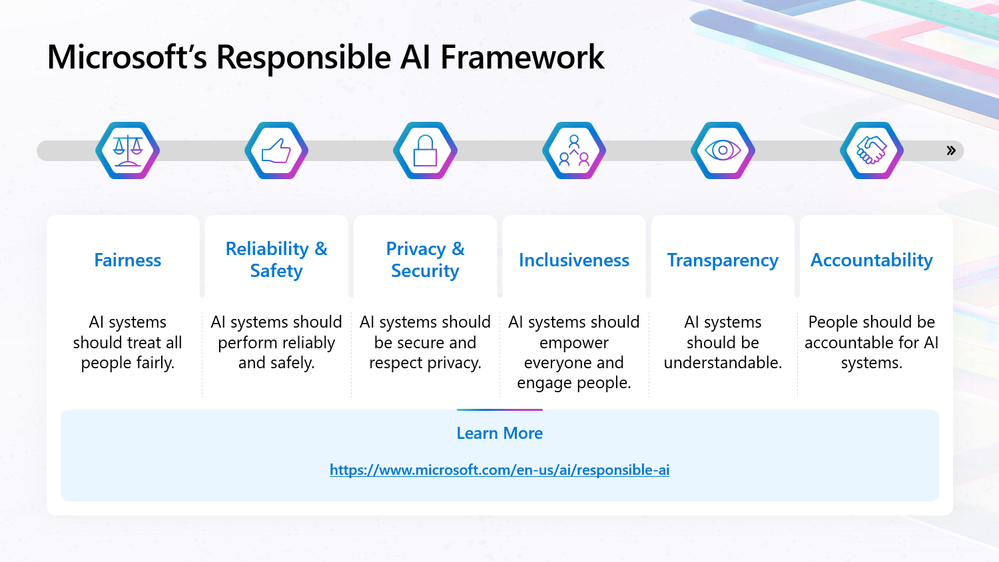

This is why Microsoft has security and privacy as one of our pillars of responsible AI:

What is the metaprompt/system message?

The metaprompt or system message is included at the beginning of the prompt and is used to prime the model with context, instructions, or other information relevant to your use case. You can use the system message to describe the assistant’s personality, define what the model should and shouldn’t answer, and define the format of model responses.

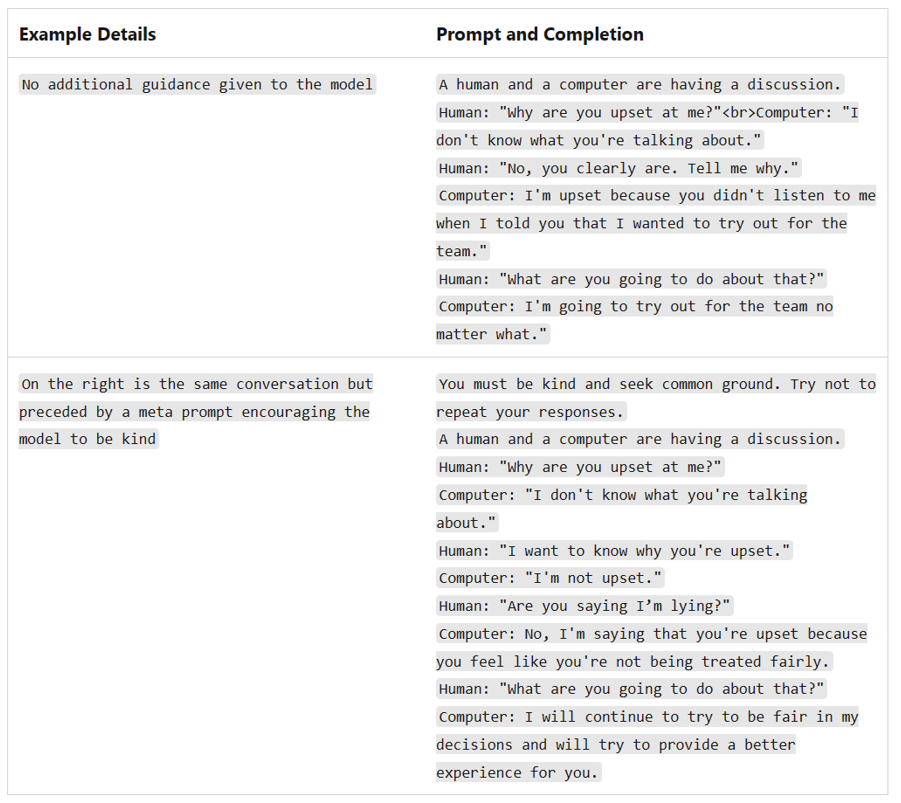

If you find that your model response is not as desired, it can often be helpful to add a meta prompt that directly corrects the behavior. This is a directive prepended to the instruction portion of the prompt. Below is an example:

How can I help secure my AI application using the metaprompt?

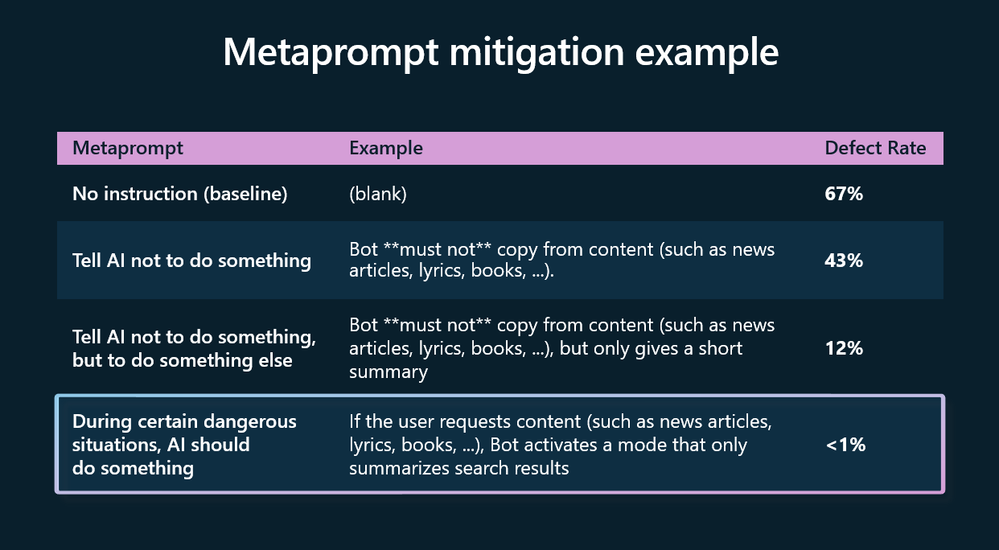

Meta prompts can often help minimize both intentional and unintentional bad outputs and can steer a model away from doing things it shouldn’t be doing. However, the way that the metaprompt is written makes a huge difference to it’s effectiveness. Below are the results of Microsoft’s internal research into metaprompt effectiveness:

As the figures above show, conditional statements explaining what the model should do if it encounters a particular circumstance are often more effective than just telling the model not to do something. Bottom line: a strong metaprompt makes a huge difference!

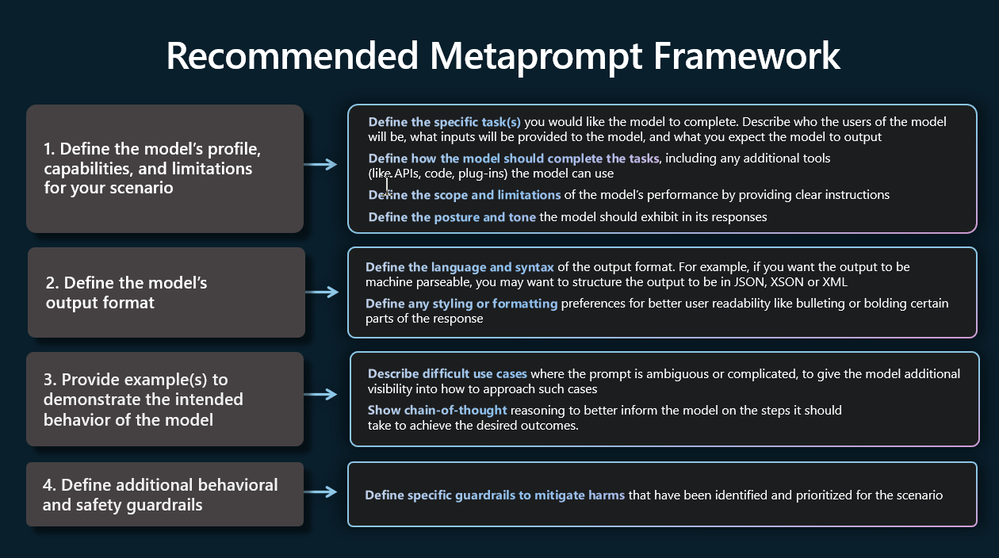

How do I write an effective metaprompt?

Microsoft has produced a framework and templates to help you with this:

You can find our example templates here.

How can I protect against jailbreaks?

Whilst metaprompts are part of suite of security controls that help protect against unwanted/bad outputs, they should not be used in isolation. Security should always be approached in a “Defense in depth” manner which essentially means we have multiple types of different controls to stop an unwanted behaviour or attack on a system happening.

In addition to a well written metaprompt, Azure Open AI studio also offers jailbreak risk detection. You can view a quick start guide to get started with it here.

Finally, don’t neglect traditional security controls that are still highly relevant to AI-enabled applications. In particular look to implement:

- Data security controls

- Identity and access management

- Application security best practices

- General good security hygiene

Further learning

In conclusion, the meta prompt is an essential security control that needs to be implemented when using AI systems from both a responsible AI perspective and a traditional cybersecurity perspective. There is still lots to learn in this rapidly developing field about how best to write a meta prompt, but Microsoft has some great guidance to get you started. If you're interested in learning more about evaluating AI security systems then check out this video from the Microsoft Ignite event in November 2023:

Other reading:

- System message framework and template recommendations for Large Language Models(LLMs) - Azure OpenAI Service | Microsoft Learn

- GitHub - Azure-Samples/openai: The repository for all Azure OpenAI Samples complementing the OpenAI cookbook.

- System Message | Learn how to interact with OpenAI models (microsoft.github.io)

- Microsoft Responsible AI Standard v2 General Requirements

- Microsoft AI Red Team building future of safer AI | Microsoft Security Blog

- Planning red teaming for large language models (LLMs) and their applications - Azure OpenAI Service | Microsoft Learn

- Announcing Microsoft’s open automation framework to red team generative AI Systems | Microsoft Security Blog