This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The Kubernetes AI toolchain operator (Kaito) is a Kubernetes operator that simplifies the experience of running OSS AI models like Falcon and Llama 2 on your Azure Kubernetes Service (AKS) cluster. You can deploy Kaito on your AKS cluster as a managed add-on. Kaito uses Karpenter to automatically provision the necessary GPU nodes based on a specification provided in the Workspace custom resource definition (CRD), and it sets up the inference server as an endpoint for your AI models. This add-on reduces onboarding time and allows you to focus on AI model usage and development rather than infrastructure setup.

In this project, I will show you how to:

- Deploy the Kubernetes AI Toolchain Operator (Kaito) and a Workspace on Azure Kubernetes Service (AKS) using Terraform.

- Utilize Kaito to create an AKS-hosted inference environment for the Falcon 7B Instruct model.

- Develop a chat application using Python and Chainlit that interacts with the inference endpoint exposed by the AKS-hosted model.

By following this guide, you will be able to easily set up and use the powerful capabilities of Kaito, Python, and Chainlit to enhance your AI model deployment and create dynamic chat applications. For more information on Kaito, see the following resources:

- Kubernetes AI Toolchain Operator (Kaito)

- Deploy an AI model on Azure Kubernetes Service (AKS) with the AI toolchain operator

- Intelligent Apps on AKS Ep02: Bring Your Own AI Models to Intelligent Apps on AKS with Kaito

- Open Source Models on AKS with Kaito

The companion code for this article can be found in this GitHub repository.

NOTE

You can find the `architecture.vsdx` file used for the diagram under the `visio` folder.

- An active Azure subscription. If you don't have one, create a free Azure account before you begin.

- Visual Studio Code installed on one of the supported platforms along with the HashiCorp Terraform extension.

- Azure CLI version 2.59.0 or later installed. To install or upgrade, see Install Azure CLI.

- The `aks-preview` Azure CLI extension version 2.0.0b8 or later installed.

- Terraform v1.7.5 or later.

- The deployment must be started by a user who has sufficient permissions to assign roles, such as a `User Access Administrator` or `Owner`.

- Your Azure account also needs `Microsoft.Resources/deployments/write` permissions at the subscription level.

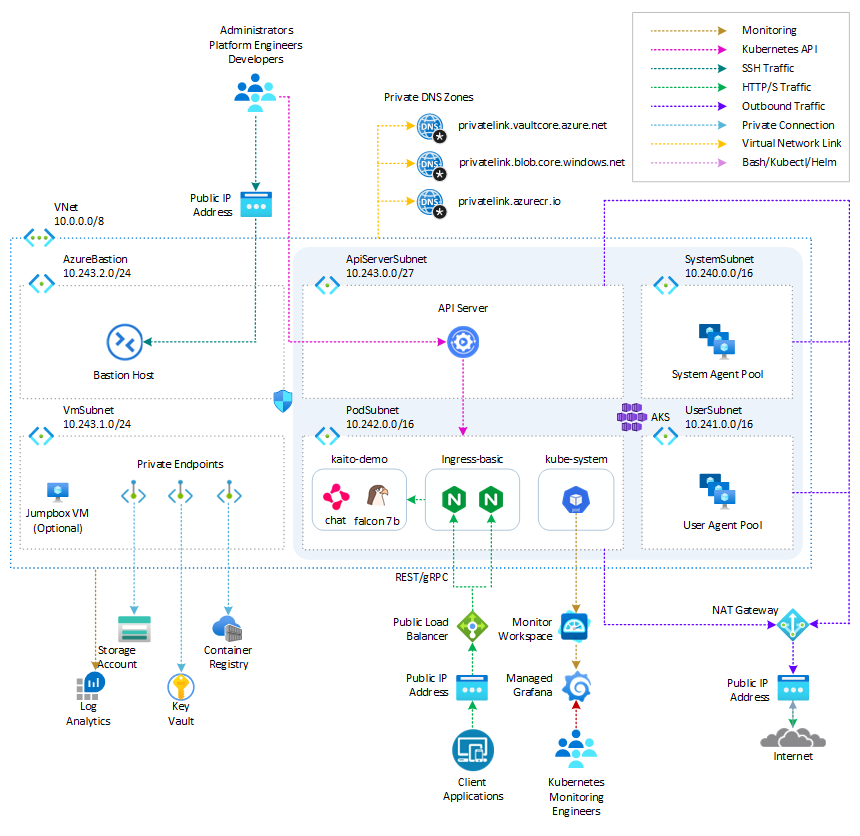

The following diagram shows the architecture and network topology deployed by the sample:

This project provides a set of Terraform modules to deploy the following resources:

- Azure Kubernetes Service: A public or private Azure Kubernetes Service (AKS) cluster composed of:

  - A `system` node pool in a dedicated subnet. The default node pool hosts only critical system pods and services. The worker nodes have a node taint that prevents application pods from being scheduled on this node pool.

  - A `user` node pool hosting user workloads and artifacts in a dedicated subnet.

- User-assigned Managed Identity: a user-assigned managed identity used by the AKS cluster to create additional resources like load balancers and managed disks in Azure.

- Azure Virtual Machine: Terraform modules can optionally create a jump-box virtual machine to manage the private AKS cluster.

- Azure Bastion Host: a separate Azure Bastion is deployed in the AKS cluster virtual network to provide SSH connectivity to both agent nodes and virtual machines.

- Azure NAT Gateway: a bring-your-own (BYO) Azure NAT Gateway to manage outbound connections initiated by AKS-hosted workloads. The NAT Gateway is associated with the `SystemSubnet`, `UserSubnet`, and `PodSubnet` subnets. The `outboundType` property of the cluster is set to `userAssignedNatGateway` to specify that a BYO NAT Gateway is used for outbound connections. NOTE: you can update the `outboundType` after cluster creation, and this will deploy or remove resources as required to put the cluster into the new egress configuration. For more information, see Updating outboundType after cluster creation.

- Azure Storage Account: this storage account is used to store the boot diagnostics logs of the jump-box virtual machine. Boot Diagnostics is a debugging feature that allows you to view console output and screenshots to diagnose virtual machine status.

- Azure Container Registry: an Azure Container Registry (ACR) to build, store, and manage container images and artifacts in a private registry for all container deployments.

- Azure Key Vault: an Azure Key Vault used to store secrets, certificates, and keys that can be mounted as files by pods using Azure Key Vault Provider for Secrets Store CSI Driver. For more information, see Use the Azure Key Vault Provider for Secrets Store CSI Driver in an AKS cluster and Provide an identity to access the Azure Key Vault Provider for Secrets Store CSI Driver.

- Azure Private Endpoints: an Azure Private Endpoint is created for each of the following resources:

- Azure Container Registry

- Azure Key Vault

- Azure Storage Account

- API Server when deploying a private AKS cluster.

- Azure Private DNS Zones: an Azure Private DNS Zone is created for each of the following resources:

- Azure Container Registry

- Azure Key Vault

- Azure Storage Account

- API Server when deploying a private AKS cluster.

- Azure Network Security Group: subnets hosting virtual machines and Azure Bastion Hosts are protected by Azure Network Security Groups that are used to filter inbound and outbound traffic.

- Azure Log Analytics Workspace: a centralized Azure Log Analytics workspace is used to collect the diagnostics logs and metrics from all the Azure resources:

- Azure Kubernetes Service cluster

- Azure Key Vault

- Azure Network Security Group

- Azure Container Registry

- Azure Storage Account

- Azure jump-box virtual machine

- Azure Monitor workspace: An Azure Monitor workspace is a unique environment for data collected by Azure Monitor. Each workspace has its own data repository, configuration, and permissions. Log Analytics workspaces contain logs and metrics data from multiple Azure resources, whereas Azure Monitor workspaces currently contain only metrics related to Prometheus. Azure Monitor managed service for Prometheus allows you to collect and analyze metrics at scale using a Prometheus-compatible monitoring solution based on the open-source Prometheus project. This fully managed service allows you to use the Prometheus query language (PromQL) to analyze and alert on the performance of monitored infrastructure and workloads without having to operate the underlying infrastructure. The primary method for visualizing Prometheus metrics is Azure Managed Grafana. You can connect your Azure Monitor workspace to an Azure Managed Grafana instance to visualize Prometheus metrics using a set of built-in and custom Grafana dashboards.

- Azure Managed Grafana: an Azure Managed Grafana instance used to visualize the Prometheus metrics generated by the Azure Kubernetes Service (AKS) cluster deployed by the Terraform modules. Azure Managed Grafana is a fully managed service for analytics and monitoring solutions. It's supported by Grafana Enterprise, which provides extensible data visualizations. This managed service allows you to quickly and easily deploy Grafana dashboards with built-in high availability and control access with Azure security.

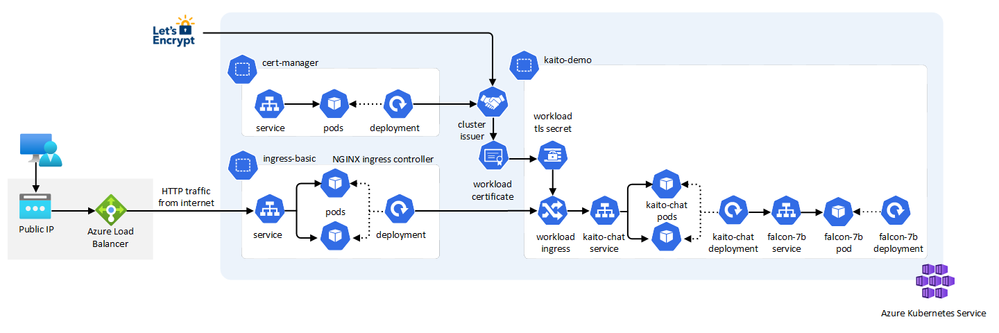

- NGINX Ingress Controller: this sample compares the managed and unmanaged NGINX Ingress Controller. While the managed version is installed using the Application routing add-on, the unmanaged version is deployed using the Helm Terraform Provider. You can use the Helm provider to deploy software packages in Kubernetes. The provider needs to be configured with the proper credentials before it can be used.

- Cert-Manager: the `cert-manager` package and Let's Encrypt certificate authority are used to issue a TLS/SSL certificate to the chat applications.

- Prometheus: the AKS cluster is configured to collect metrics to the Azure Monitor workspace and Azure Managed Grafana. In addition, the kube-prometheus-stack Helm chart is used to install Prometheus and Grafana on the AKS cluster.

- Kaito Workspace: a Kaito workspace is used to provision a GPU node and deploy the Falcon 7B Instruct model.

- Workload namespace and service account: the Kubectl Terraform Provider and Kubernetes Terraform Provider are used to create the namespace and service account used by the chat applications.

- Azure Monitor ConfigMaps for Azure Monitor managed service for Prometheus and the `cert-manager` Cluster Issuer are deployed using the Kubectl Terraform Provider and Kubernetes Terraform Provider.

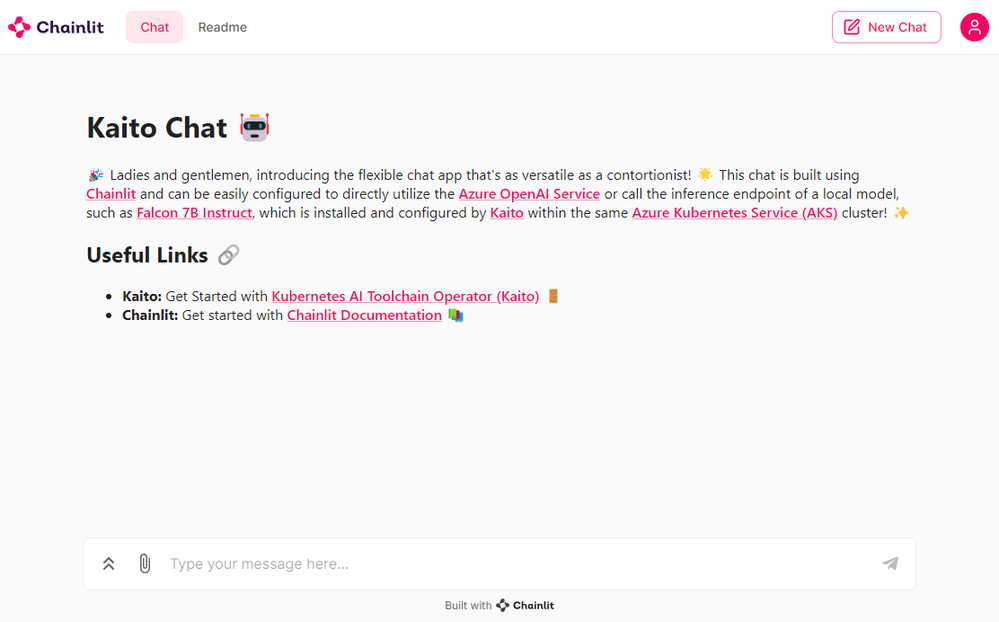

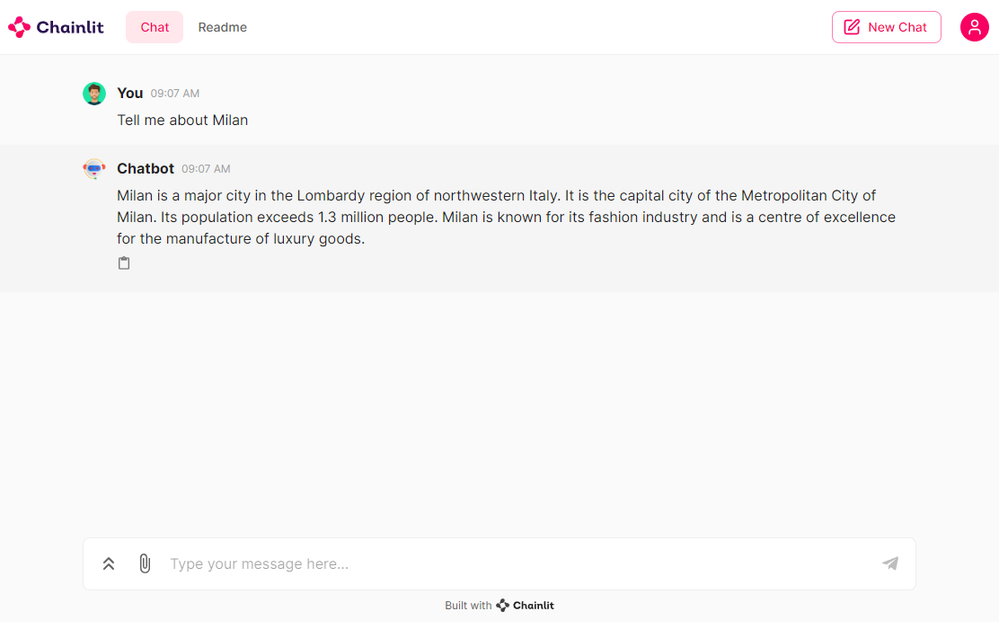

The architecture of the kaito-chat application can be seen in the image below. The application calls the inference endpoint created by the Kaito workspace for the Falcon-7B-Instruct model.

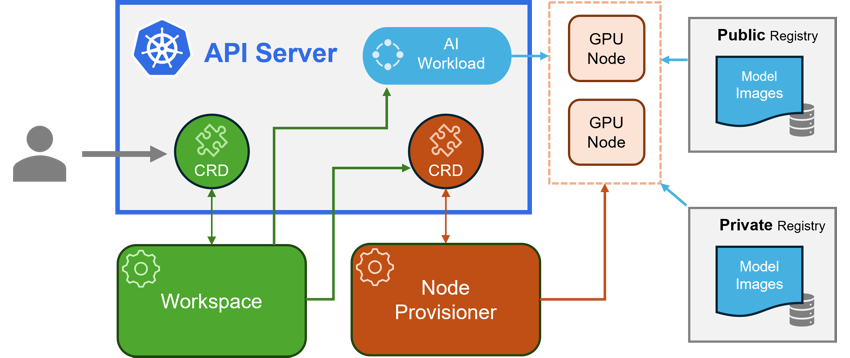

The Kubernetes AI toolchain operator (Kaito) is a managed add-on for AKS that simplifies the experience of running OSS AI models on your AKS clusters. The AI toolchain operator automatically provisions the necessary GPU nodes and sets up the associated inference server as an endpoint server to your AI models. Using this add-on reduces your onboarding time and enables you to focus on AI model usage and development rather than infrastructure setup.

- Container Image Management: Kaito allows you to manage large language models using container images. It provides an HTTP server to perform inference calls using the model library.

- GPU Hardware Configuration: Kaito eliminates the need for manual tuning of deployment parameters to fit GPU hardware. It provides preset configurations that are automatically applied based on the model requirements.

- Auto-provisioning of GPU Nodes: Kaito automatically provisions GPU nodes based on the requirements of your models. This ensures that your AI inference workloads have the necessary resources to run efficiently.

- Integration with Microsoft Container Registry: If the license allows, Kaito can host large language model images in the public Microsoft Container Registry (MCR). This simplifies the process of accessing and deploying the models.

Kaito follows the classic Kubernetes Custom Resource Definition (CRD)/controller design pattern. The user manages a workspace custom resource that describes the GPU requirements and the inference specification. Kaito controllers automate the deployment by reconciling the workspace custom resource.

The major components of Kaito include:

- Workspace Controller: This controller reconciles the workspace custom resource, creates machine custom resources to trigger node auto-provisioning, and creates the inference workload (deployment or statefulset) based on the model preset configurations.

- Node Provisioner Controller: This controller, named gpu-provisioner in the Kaito Helm chart, interacts with the workspace controller using the machine CRD from Karpenter. It integrates with Azure Kubernetes Service (AKS) APIs to add new GPU nodes to the AKS cluster. Note that the gpu-provisioner is an open-source component maintained in the Kaito repository and can be replaced by other controllers supporting Karpenter-core APIs.
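The reconciliation flow described above can be illustrated with a small Python sketch. It is not Kaito's controller code (Kaito's controllers are written in Go against the Kubernetes API); the `WorkspaceSpec`, `ObservedState`, and `reconcile` names are hypothetical, and the sketch only computes a plan: request a machine for each missing GPU node, then create the inference workload once enough GPU capacity is ready.

```python
from dataclasses import dataclass


@dataclass
class WorkspaceSpec:
    """Simplified stand-in for a Workspace custom resource (hypothetical fields)."""
    name: str
    instance_type: str
    node_count: int
    preset: str


@dataclass
class ObservedState:
    """What a controller currently observes in the cluster (hypothetical)."""
    ready_gpu_nodes: int = 0
    inference_workload_exists: bool = False


def reconcile(spec: WorkspaceSpec, observed: ObservedState) -> list[str]:
    """Return the actions needed to move the cluster toward the desired spec."""
    actions: list[str] = []
    # Node auto-provisioning: one machine request per missing GPU node.
    for i in range(observed.ready_gpu_nodes, spec.node_count):
        actions.append(f"create machine {spec.name}-{i} ({spec.instance_type})")
    # Deploy the model only after all requested GPU nodes are ready.
    capacity_ready = observed.ready_gpu_nodes >= spec.node_count
    if capacity_ready and not observed.inference_workload_exists:
        actions.append(f"create inference workload for preset {spec.preset}")
    return actions


spec = WorkspaceSpec("workspace-falcon-7b-instruct", "Standard_NC12s_v3", 1, "falcon-7b-instruct")
print(reconcile(spec, ObservedState()))
# ['create machine workspace-falcon-7b-instruct-0 (Standard_NC12s_v3)']
print(reconcile(spec, ObservedState(ready_gpu_nodes=1)))
# ['create inference workload for preset falcon-7b-instruct']
```

Because `reconcile` compares desired and observed state on every call instead of reacting to one-off events, running it again after each change converges on the desired state and returns an empty plan once everything exists.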

Using Kaito greatly simplifies the workflow of onboarding large AI inference models into Kubernetes, allowing you to focus on AI model usage and development without the hassle of infrastructure setup.

Running open-source LLMs with Kaito offers several significant benefits, including:

- Automated GPU node provisioning and configuration: Kaito will automatically provision and configure GPU nodes for you. This can help reduce the operational burden of managing GPU nodes, configuring them for Kubernetes, and tuning model deployment parameters to fit GPU profiles.

- Reduced cost: Kaito can help you save money by splitting inference across lower-end GPU nodes, which may also be more readily available and cost less than high-end GPU nodes.

- Support for popular open-source LLMs: Kaito offers preset configurations for popular open-source LLMs. This can help you deploy and manage open-source LLMs on AKS and integrate them with your intelligent applications.

- Fine-grained control: You keep full control over data security and privacy, gain transparency into model development and configuration, and can fine-tune the model to fit your specific use case.

- Network and data security: You can ensure these models are ring-fenced within your organization's network and/or ensure the data never leaves the Kubernetes cluster.

At the time of this writing, Kaito supports the following models.

Meta released Llama 2, a family of pretrained and fine-tuned LLMs, along with Llama 2-Chat, a version of Llama 2 optimized for dialogue. The models scale up to 70 billion parameters. According to Meta, extensive testing on safety- and helpfulness-focused benchmarks showed that Llama 2-Chat models outperform existing open-source chat models in most cases, and human evaluations suggest they are on par with several closed-source models. Meta also took several steps to improve the safety of these models, including annotating data specifically for safety, conducting red-teaming exercises, fine-tuning with an emphasis on safety issues, and iteratively and continuously reviewing the models. Llama 2 has been released in variants with 7 billion, 13 billion, and 70 billion parameters, and Llama 2-Chat is available at the same parameter scales. For more information, see the following resources:

Researchers from the Technology Innovation Institute in Abu Dhabi introduced the Falcon series, which includes models with 7 billion, 40 billion, and 180 billion parameters. These causal decoder-only models were trained on a high-quality, diverse corpus obtained mostly from web data. Falcon-180B, the largest model in the series, was trained on more than 3.5 trillion text tokens, which the authors describe as the largest openly documented pretraining run.

According to the researchers, Falcon-180B significantly outperforms models such as PaLM and Chinchilla and improves on concurrently developed models such as LLaMA 2 and Inflection-1. Falcon-180B achieves performance close to PaLM-2-Large, which is noteworthy given its lower pretraining and inference costs. The authors position Falcon-180B alongside GPT-4 and PaLM-2-Large among the leading language models. For more information, see the following resources:

- The Falcon Series of Open Language Models

- Falcon-40B-Instruct

- Falcon-180B

- Falcon-7B

- Falcon-7B-Instruct

Mistral 7B v0.1 is a 7-billion-parameter language model designed for both efficiency and performance. According to its authors, Mistral 7B outperforms Llama 2 13B on all evaluated benchmarks and outperforms Llama 1 34B in reasoning, mathematics, and code generation.

Mistral 7B uses grouped-query attention (GQA) to accelerate inference and sliding window attention (SWA) to handle sequences of varying length with reduced computational overhead. A fine-tuned version, Mistral 7B Instruct, is optimized for instruction-following tasks. For more information, see the following resources:

Microsoft introduced Phi-2, a Transformer model with 2.7 billion parameters. It was trained using a combination of data sources similar to those used for Phi-1.5, augmented with a new data source consisting of synthetic NLP texts and filtered websites considered instructional and safe. On benchmarks measuring logical reasoning, language comprehension, and common sense, Phi-2 performed at a nearly state-of-the-art level among models with fewer than 13 billion parameters. For more information, see the following resources:

Chainlit is an open-source Python package that is specifically designed to create user interfaces (UIs) for AI applications. It simplifies the process of building interactive chats and interfaces, making developing AI-powered applications faster and more efficient. While Streamlit is a general-purpose UI library, Chainlit is purpose-built for AI applications and seamlessly integrates with other AI technologies such as LangChain, LlamaIndex, and LangFlow.

With Chainlit, developers can easily create intuitive UIs for their AI models, including ChatGPT-like applications. It provides a user-friendly interface for users to interact with AI models, enabling conversational experiences and information retrieval. Chainlit also offers unique features, such as the ability to display the Chain of Thought, which allows users to explore the reasoning process directly within the UI. This feature enhances transparency and enables users to understand how the AI arrives at its responses or recommendations.

For more information, see the following resources:

As stated in the documentation, enabling the Kubernetes AI toolchain operator add-on in AKS creates a managed identity named ai-toolchain-operator-<aks-cluster-name>. This managed identity is utilized by the GPU provisioner controller to provision GPU node pools within the managed AKS cluster via Karpenter. To ensure proper functionality, manual configuration of the necessary permissions is required. Follow the steps outlined in the following sections to successfully install Kaito through the AKS add-on.

- Register the `AIToolchainOperatorPreview` feature flag using the `az feature register` command. It takes a few minutes for the registration to complete.

  `az feature register --namespace "Microsoft.ContainerService" --name "AIToolchainOperatorPreview"`

- Verify the registration using the `az feature show` command.

  `az feature show --namespace "Microsoft.ContainerService" --name "AIToolchainOperatorPreview"`

- Create an Azure resource group using the `az group create` command.

  `az group create --name ${AZURE_RESOURCE_GROUP} --location $AZURE_LOCATION`

- Create an AKS cluster with the AI toolchain operator add-on enabled using the `az aks create` command with the `--enable-ai-toolchain-operator` and `--enable-oidc-issuer` flags. Enabling the AI toolchain operator requires the OIDC issuer to be enabled.

  `az aks create --location $AZURE_LOCATION --resource-group $AZURE_RESOURCE_GROUP --name ${CLUSTER_NAME} --enable-oidc-issuer --enable-ai-toolchain-operator`

- On an existing AKS cluster, you can enable the AI toolchain operator add-on using the `az aks update` command as follows:

  `az aks update --name ${CLUSTER_NAME} --resource-group ${AZURE_RESOURCE_GROUP} --enable-oidc-issuer --enable-ai-toolchain-operator`

- Configure `kubectl` to connect to your cluster using the `az aks get-credentials` command.

  `az aks get-credentials --resource-group $AZURE_RESOURCE_GROUP --name $CLUSTER_NAME`

- Export environment variables for the MC resource group, the principal ID of the Kaito identity, and the Kaito identity name using the following commands:

  `export MC_RESOURCE_GROUP=$(az aks show --resource-group $AZURE_RESOURCE_GROUP --name $CLUSTER_NAME --query nodeResourceGroup -o tsv)`

  `export PRINCIPAL_ID=$(az identity show --name "ai-toolchain-operator-$CLUSTER_NAME" --resource-group $MC_RESOURCE_GROUP --query 'principalId' -o tsv)`

  `export KAITO_IDENTITY_NAME="ai-toolchain-operator-${CLUSTER_NAME,,}"`

- Get the AKS OIDC issuer URL and export it as an environment variable:

  `export AKS_OIDC_ISSUER=$(az aks show --resource-group "${AZURE_RESOURCE_GROUP}" --name "${CLUSTER_NAME}" --query "oidcIssuerProfile.issuerUrl" -o tsv)`

- Create a new role assignment for the Kaito managed identity using the `az role assignment create` command. The Kaito user-assigned managed identity needs the `Contributor` role on the resource group containing the AKS cluster.

  `az role assignment create --role "Contributor" --assignee $PRINCIPAL_ID --scope "/subscriptions/$AZURE_SUBSCRIPTION_ID/resourcegroups/$AZURE_RESOURCE_GROUP"`

- Create a federated identity credential between the Kaito managed identity and the service account used by the Kaito controllers using the `az identity federated-credential create` command. Kubernetes service account names are lowercase, so the subject must reference `kaito-gpu-provisioner`.

  `az identity federated-credential create --name "kaito-federated-identity" --identity-name "${KAITO_IDENTITY_NAME}" -g "${MC_RESOURCE_GROUP}" --issuer "${AKS_OIDC_ISSUER}" --subject system:serviceaccount:"kube-system:kaito-gpu-provisioner" --audience api://AzureADTokenExchange`

- Verify that the deployment is running using the `kubectl get` command:

  `kubectl get deployment -n kube-system | grep kaito`

- Deploy the Falcon 7B-instruct model from the Kaito model repository using the `kubectl apply` command.

  `kubectl apply -f https://raw.githubusercontent.com/Azure/kaito/main/examples/kaito_workspace_falcon_7b-instruct.yaml`

- Track the live resource changes in your workspace using the `kubectl get` command.

  `kubectl get workspace workspace-falcon-7b-instruct -w`

- Check your service and get the service IP address of the inference endpoint using the `kubectl get svc` command.

  `export SERVICE_IP=$(kubectl get svc workspace-falcon-7b-instruct -o jsonpath='{.spec.clusterIP}')`

- Run the Falcon 7B-instruct model with a sample input of your choice using the following `curl` command, which uses the `SERVICE_IP` variable exported in the previous step:

  `kubectl run -it --rm --restart=Never curl --image=curlimages/curl -- curl -X POST http://$SERVICE_IP/chat -H "accept: application/json" -H "Content-Type: application/json" -d "{\"prompt\":\"Tell me about Tuscany and its cities.\", \"return_full_text\": false, \"generate_kwargs\": {\"max_length\":4096}}"`

NOTE

As you track the live resource changes in your workspace, the machine readiness can take up to 10 minutes, and workspace readiness up to 20 minutes.
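The `curl` call in the last step can also be issued from Python. The following sketch uses only the standard library (`urllib.request`) rather than the `requests` package used by the sample chat app, and it mirrors the request body of the `curl` example. The function names are hypothetical. Because `SERVICE_IP` is a cluster IP, the request only succeeds when it is sent from inside the cluster, for example from a pod.

```python
import json
import urllib.request


def build_chat_request(service_ip: str, prompt: str, max_length: int = 4096) -> urllib.request.Request:
    """Build a POST request for the workspace /chat endpoint.

    Mirrors the curl example: prompt and return_full_text at the top level,
    generation settings under generate_kwargs.
    """
    body = {
        "prompt": prompt,
        "return_full_text": False,
        "generate_kwargs": {"max_length": max_length},
    }
    return urllib.request.Request(
        url=f"http://{service_ip}/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 300.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `send_chat_request(build_chat_request(os.environ["SERVICE_IP"], "Tell me about Tuscany and its cities."))`, run from a pod in the cluster, sends the same request as the `curl` command. The generous default timeout reflects that long generations on a single GPU node can take a while.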

At the time of this writing, the azurerm_kubernetes_cluster resource in the AzureRM Terraform provider for Azure does not have a property to enable the add-on and install the Kubernetes AI toolchain operator (Kaito) on your AKS cluster. However, you can use the AzAPI Provider to deploy Kaito on your AKS cluster. The AzAPI provider is a thin layer on top of the Azure ARM REST APIs. It complements the AzureRM provider by enabling the management of Azure resources that are not yet or may never be supported in the AzureRM provider, such as private/public preview services and features. The following resources replicate the actions performed by the Azure CLI commands mentioned in the previous section.

Here is a description of the code above:

- `azurerm_resource_group.node_resource_group`: Retrieves the properties of the node resource group of the current AKS cluster.

- `azapi_update_resource.enable_Kaito`: Enables the Kaito add-on. This operation installs the Kaito operator on the AKS cluster and creates the related user-assigned managed identity in the node resource group.

- `azurerm_user_assigned_identity.Kaito_identity`: Retrieves the properties of the Kaito user-assigned managed identity located in the node resource group.

- `azurerm_federated_identity_credential.Kaito_federated_identity_credential`: Creates the federated identity credential between the Kaito managed identity and the service account used by the Kaito controllers in the `kube-system` namespace, particularly the `kaito-gpu-provisioner` controller.

- `azurerm_role_assignment.Kaito_identity_contributor_assignment`: Assigns the `Contributor` role to the Kaito managed identity with the AKS resource group as the scope.
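The federated credential and the role assignment hinge on two strings: the OIDC subject of the Kaito service account and the ARM scope of the resource group. The following Python sketch builds both. The helper names are hypothetical and not part of the companion repository. It also rejects names Kubernetes would not accept (namespaces are DNS-1123 labels, service account names are DNS-1123 subdomains), which is why the subject must use the lowercase `kaito-gpu-provisioner`.

```python
import re

# DNS-1123 label: lowercase alphanumerics and '-', starting and ending alphanumeric.
_LABEL = r"[a-z0-9]([-a-z0-9]*[a-z0-9])?"
_DNS1123_LABEL = re.compile(_LABEL)
_DNS1123_SUBDOMAIN = re.compile(rf"{_LABEL}(\.{_LABEL})*")


def service_account_subject(namespace: str, service_account: str) -> str:
    """Return the subject that a federated identity credential must match."""
    # Namespaces are DNS-1123 labels (at most 63 characters).
    if len(namespace) > 63 or not _DNS1123_LABEL.fullmatch(namespace):
        raise ValueError(f"invalid namespace: {namespace!r}")
    # Service account names are DNS-1123 subdomains (at most 253 characters).
    if len(service_account) > 253 or not _DNS1123_SUBDOMAIN.fullmatch(service_account):
        raise ValueError(f"invalid service account name: {service_account!r}")
    return f"system:serviceaccount:{namespace}:{service_account}"


def resource_group_scope(subscription_id: str, resource_group: str) -> str:
    """Return the ARM scope of a resource group for a role assignment."""
    return f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"


print(service_account_subject("kube-system", "kaito-gpu-provisioner"))
# system:serviceaccount:kube-system:kaito-gpu-provisioner
```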

To create the Kaito workspace, you can utilize the kubectl_manifest resource from the Kubectl Provider in the following manner.
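As a hedged illustration of what such a `kubectl_manifest` resource submits, the following Python sketch builds a Workspace manifest. It assumes the `kaito.sh/v1alpha1` API version and the field layout of the Falcon 7B Instruct example manifest in the Kaito repository at the time of writing. Check the CRD schema installed on your cluster before relying on it. The `workspace_manifest` helper is hypothetical.

```python
import json


def workspace_manifest(name: str, instance_type: str, preset: str, app_label: str) -> dict:
    """Return a Workspace manifest modeled on the falcon-7b-instruct example (assumed layout)."""
    return {
        "apiVersion": "kaito.sh/v1alpha1",
        "kind": "Workspace",
        "metadata": {"name": name},
        "resource": {
            # GPU VM size that the node provisioner requests.
            "instanceType": instance_type,
            "labelSelector": {"matchLabels": {"apps": app_label}},
        },
        # Preset model served by the inference workload.
        "inference": {"preset": {"name": preset}},
    }


manifest = workspace_manifest(
    name="workspace-falcon-7b-instruct",
    instance_type="Standard_NC12s_v3",
    preset="falcon-7b-instruct",
    app_label="falcon-7b-instruct",
)
# JSON is a subset of YAML, so this text can serve as a YAML manifest body.
print(json.dumps(manifest, indent=2))
```

Generating the manifest from a few parameters keeps values such as `instance_type` in one place, which mirrors how the Terraform variable of the same name feeds the workspace.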

To access the OpenAPI schema of the Workspace custom resource definition, execute the following command:

Running this command will provide you with the OpenAPI schema for the Workspace custom resource definition in JSON format:
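Once you have the schema JSON, a short script can list every field path it defines, which helps when writing or reviewing Workspace manifests. This Python sketch assumes the JSON has the CRD's `openAPIV3Schema` object at its root (the object stored under `spec.versions[].schema` in a CustomResourceDefinition). The `sample` fragment is hand-written and abridged, not the real Kaito schema.

```python
import json


def property_paths(schema: dict, prefix: str = "") -> list[str]:
    """Recursively list dotted property paths defined by an OpenAPI v3 schema."""
    paths: list[str] = []
    for name, subschema in sorted(schema.get("properties", {}).items()):
        path = f"{prefix}.{name}" if prefix else name
        paths.append(path)
        paths.extend(property_paths(subschema, path))
    # Array schemas describe their elements under "items"; mark them with [].
    if "items" in schema:
        paths.extend(property_paths(schema["items"], f"{prefix}[]"))
    return paths


# Hand-written, abridged fragment; not the real Kaito Workspace schema.
sample = json.loads("""
{"openAPIV3Schema": {"type": "object", "properties": {
  "inference": {"type": "object", "properties": {
    "preset": {"type": "object", "properties": {"name": {"type": "string"}}}}},
  "resource": {"type": "object", "properties": {
    "instanceType": {"type": "string"}, "count": {"type": "integer"}}}
}}}
""")
for path in property_paths(sample["openAPIV3Schema"]):
    print(path)
```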

Kaito creates a Kubernetes service with the same name as the workspace, in the same namespace. This service exposes an inference endpoint that AI applications can use to call the API exposed by the AKS-hosted model. Here is an example of an inference endpoint for a Falcon model from the Kaito documentation:

Here are the parameters you can use in a call:

- `prompt`: The initial text provided by the user, from which the model continues generating text.

- `return_full_text`: If `False`, only the generated text is returned; otherwise, the full text is returned.

- `clean_up_tokenization_spaces`: `True`/`False`; determines whether to remove potential extra spaces in the text output.

- `prefix`: Prefix added to the prompt.

- `handle_long_generation`: Provides strategies to address generations beyond the model's maximum length capacity.

- `max_length`: The maximum total number of tokens in the generated text.

- `min_length`: The minimum total number of tokens that should be generated.

- `do_sample`: If `True`, sampling methods are used for text generation, which can introduce randomness and variation.

- `early_stopping`: If `True`, generation stops early when certain conditions are met, for example, when a sufficient number of candidates have been found in beam search.

- `num_beams`: The number of beams to use in beam search. More beams can lead to better results but are more computationally expensive.

- `num_beam_groups`: Divides the beams into groups to promote diversity in the generated results.

- `diversity_penalty`: Penalizes the score of tokens that make the current generation too similar to other groups, encouraging diverse outputs.

- `temperature`: Controls the randomness of the output by scaling the logits before sampling.

- `top_k`: Restricts sampling to the k most likely next tokens.

- `top_p`: Uses nucleus sampling to restrict the sampling pool to tokens comprising the top p probability mass.

- `typical_p`: Adjusts the probability distribution to favor tokens that are "typically" likely, given the context.

- `repetition_penalty`: Penalizes tokens that have been generated previously, aiming to reduce repetition.

- `length_penalty`: Modifies scores based on sequence length to encourage shorter or longer outputs.

- `no_repeat_ngram_size`: Prevents any n-gram of this size from being generated more than once.

- `encoder_no_repeat_ngram_size`: Similar to `no_repeat_ngram_size`, but applies to the encoder part of encoder-decoder models.

- `bad_words_ids`: A list of token IDs that should not be generated.

- `num_return_sequences`: The number of different sequences to generate.

- `output_scores`: Whether to output the prediction scores.

- `return_dict_in_generate`: If `True`, the method returns a dictionary containing additional information.

- `pad_token_id`: The token ID used for padding sequences to the same length.

- `eos_token_id`: The token ID that signifies the end of a sequence.

- `forced_bos_token_id`: The token ID that is forcibly used as the beginning-of-sequence token.

- `forced_eos_token_id`: The token ID that is forcibly used as the end-of-sequence token when `max_length` is reached.

- `remove_invalid_values`: If `True`, filters out invalid values like NaNs or infs from model outputs to prevent crashes.
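The `curl` example earlier in this article places `prompt` and `return_full_text` at the top level of the request body and `max_length` under `generate_kwargs`. Assuming the remaining options follow the same split (prompt-level options at the top level, generation settings under `generate_kwargs`), the following Python sketch assembles a request body and rejects unknown option names, which catches typos before the request reaches the model. The helper and set names are hypothetical.

```python
import json

# Options that sit next to "prompt" at the top level of the body (assumed layout).
TOP_LEVEL_OPTIONS = {
    "return_full_text", "clean_up_tokenization_spaces", "prefix", "handle_long_generation",
}

# Generation settings nested under "generate_kwargs" (assumed layout).
GENERATE_OPTIONS = {
    "max_length", "min_length", "do_sample", "early_stopping", "num_beams",
    "num_beam_groups", "diversity_penalty", "temperature", "top_k", "top_p",
    "typical_p", "repetition_penalty", "length_penalty", "no_repeat_ngram_size",
    "encoder_no_repeat_ngram_size", "bad_words_ids", "num_return_sequences",
    "output_scores", "return_dict_in_generate", "pad_token_id", "eos_token_id",
    "forced_bos_token_id", "forced_eos_token_id", "remove_invalid_values",
}


def build_payload(prompt: str, **options) -> dict:
    """Split keyword options into top-level fields and generate_kwargs."""
    payload: dict = {"prompt": prompt}
    generate_kwargs: dict = {}
    for key, value in options.items():
        if key in TOP_LEVEL_OPTIONS:
            payload[key] = value
        elif key in GENERATE_OPTIONS:
            generate_kwargs[key] = value
        else:
            raise ValueError(f"unknown option: {key}")
    if generate_kwargs:
        payload["generate_kwargs"] = generate_kwargs
    return payload


body = build_payload(
    "Tell me about Tuscany and its cities.",
    return_full_text=False,
    max_length=512,
    do_sample=True,
    temperature=0.7,
    top_p=0.9,
)
print(json.dumps(body))
```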

Before deploying the Terraform modules in the project, specify a value for the following variables in the terraform.tfvars variable definitions file.

This is the description of the parameters:

- `name_prefix`: Specifies a prefix for all the Azure resources.

- `location`: Specifies the region (e.g., westeurope) in which to deploy the Azure resources.

- `domain`: Specifies the domain part (e.g., subdomain.domain) of the hostname of the ingress object used to expose the chatbot via the NGINX Ingress Controller.

- `kubernetes_version`: Specifies the Kubernetes version installed on the AKS cluster.

- `network_plugin`: Specifies the network plugin of the AKS cluster.

- `network_plugin_mode`: Specifies the network plugin mode used for building the Kubernetes network. The only possible value is `overlay`.

- `network_policy`: Specifies the network policy of the AKS cluster. Currently supported values are `calico`, `azure`, and `cilium`.

- `system_node_pool_vm_size`: Specifies the virtual machine size of the system-mode node pool.

- `user_node_pool_vm_size`: Specifies the virtual machine size of the user-mode node pool.

- `ssh_public_key`: Specifies the SSH public key used for the AKS nodes and the jumpbox virtual machine.

- `vm_enabled`: A boolean value that specifies whether to deploy a jumpbox virtual machine in the same virtual network as the AKS cluster.

- `admin_group_object_ids`: When deploying an AKS cluster with Microsoft Entra ID and Azure RBAC integration, this array parameter contains the list of Microsoft Entra ID group object IDs that will have the admin role of the cluster.

- `web_app_routing_enabled`: Specifies whether the application routing add-on is enabled. When enabled, this add-on installs a managed instance of the NGINX Ingress Controller on the AKS cluster.

- `dns_zone_name`: Specifies the name of the Azure Public DNS zone used by the application routing add-on.

- `dns_zone_resource_group_name`: Specifies the resource group name of the Azure Public DNS zone used by the application routing add-on.

- `namespace`: Specifies the namespace of the workload application.

- `service_account_name`: Specifies the name of the service account of the workload application.

- `grafana_admin_user_object_id`: Specifies the object ID of the Azure Managed Grafana administrator user account.

- `vnet_integration_enabled`: Specifies whether API Server VNet Integration is enabled.

- `openai_enabled`: Specifies whether to deploy Azure OpenAI Service. This sample does not require the deployment of Azure OpenAI Service.

- `Kaito_enabled`: Specifies whether to deploy the Kubernetes AI Toolchain Operator (Kaito).

- `instance_type`: Specifies the GPU node SKU (e.g., `Standard_NC12s_v3`) to use in the Kaito workspace.
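Because a bad value in `terraform.tfvars` only surfaces when Terraform runs, you may want to check the values first. The following Python sketch (a hypothetical helper, not part of the companion repository) enforces the two constraints stated above for `network_plugin_mode` and `network_policy`, then renders the values as HCL assignments. Strings and lists are encoded with `json.dumps`, whose output is valid HCL as long as the values don't contain `${` or `%{` template sequences. All sample values are placeholders.

```python
import json

ALLOWED_NETWORK_POLICIES = {"calico", "azure", "cilium"}


def render_tfvars(values: dict) -> str:
    """Validate documented constraints, then render `key = value` HCL lines."""
    mode = values.get("network_plugin_mode")
    if mode is not None and mode != "overlay":
        raise ValueError("network_plugin_mode must be 'overlay'")
    policy = values.get("network_policy")
    if policy is not None and policy not in ALLOWED_NETWORK_POLICIES:
        raise ValueError(f"unsupported network_policy: {policy!r}")

    lines = []
    for key, value in values.items():
        if isinstance(value, bool):
            # Check bool before numbers: bool is a subclass of int in Python.
            rendered = "true" if value else "false"
        elif isinstance(value, (int, float)):
            rendered = str(value)
        else:
            # Strings and lists of strings: JSON syntax is also valid HCL here.
            rendered = json.dumps(value)
        lines.append(f"{key} = {rendered}")
    return "\n".join(lines) + "\n"


print(render_tfvars({
    "name_prefix": "kaito",
    "location": "westeurope",
    "network_plugin": "azure",
    "network_plugin_mode": "overlay",
    "network_policy": "azure",
    "vm_enabled": True,
    "instance_type": "Standard_NC12s_v3",
}))
```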

NOTE

We suggest reading sensitive configuration data such as passwords or SSH keys from a pre-existing Azure Key Vault resource. For more information, see Referencing Azure Key Vault secrets in Terraform. Before proceeding, also make sure to run the `register-preview-features.sh` Bash script in the `terraform` folder to register any preview feature used by the AKS cluster.

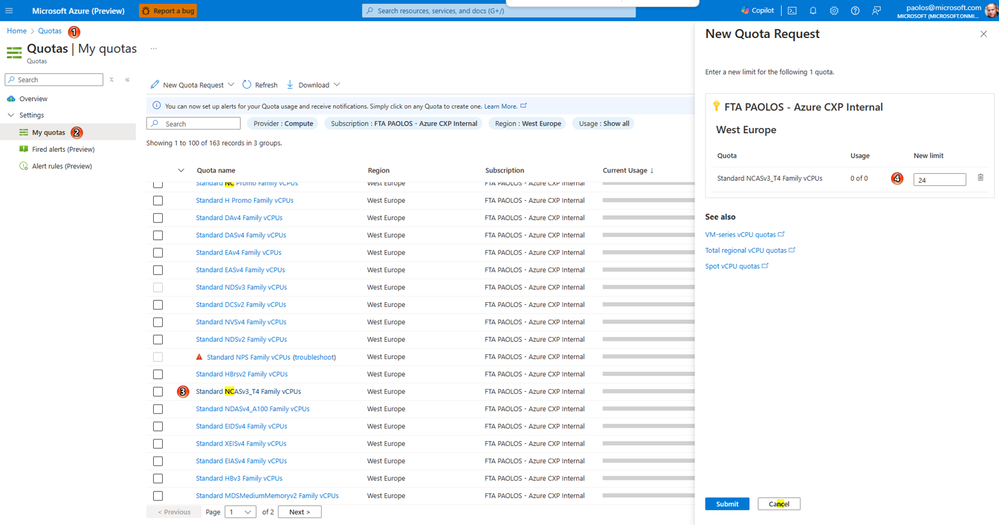

Before installing the Terraform module, make sure you have enough vCPU quota in the selected region for the GPU VM family specified in the `instance_type` parameter. If you don't have enough quota, follow the instructions described in Increase VM-family vCPU quotas. The steps for requesting a quota increase vary based on whether the quota is adjustable or non-adjustable.

- Adjustable quotas: Quotas for which you can request a quota increase fall into this category. Each subscription has a default quota value for each VM family and region. You can request an increase for an adjustable quota from the Azure portal My quotas page, providing an amount or usage percentage for a given VM family in a specified region and submitting it directly. This is the quickest way to increase quotas.

- Non-adjustable quotas: These are quotas which have a hard limit, usually determined by the scope of the subscription. To make changes, you must submit a support request, and the Azure support team will help provide solutions.
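Before creating the workspace, you can compare the quota for the target VM family against what the GPU nodes will consume. The Python sketch below parses JSON shaped like the output of `az vm list-usage --location <region> -o json`, assuming each entry carries `currentValue`, `limit`, and a `name.value` family identifier. The sample data is made up. `Standard_NC12s_v3` has 12 vCPUs and belongs to the NCSv3 family, which is reported as `standardNCSv3Family` (verify the exact family name in your own output). The helper names are hypothetical.

```python
import json


def vcpu_headroom(usage_json: str, family: str) -> int:
    """Return limit minus current usage for a VM family."""
    for entry in json.loads(usage_json):
        if entry["name"]["value"] == family:
            # int() also handles values the CLI may emit as strings.
            return int(entry["limit"]) - int(entry["currentValue"])
    raise KeyError(f"family {family!r} not found in usage data")


def can_provision(usage_json: str, family: str, vcpus_per_node: int, nodes: int = 1) -> bool:
    """True when the family quota leaves room for the requested GPU nodes."""
    return vcpu_headroom(usage_json, family) >= vcpus_per_node * nodes


# Hand-written sample shaped like the CLI output (values are made up).
sample = json.dumps([
    {"currentValue": 0, "limit": 24,
     "name": {"value": "standardNCSv3Family", "localizedValue": "Standard NCSv3 Family vCPUs"}},
    {"currentValue": 8, "limit": 10,
     "name": {"value": "standardDSv3Family", "localizedValue": "Standard DSv3 Family vCPUs"}},
])
print(can_provision(sample, "standardNCSv3Family", vcpus_per_node=12))
```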

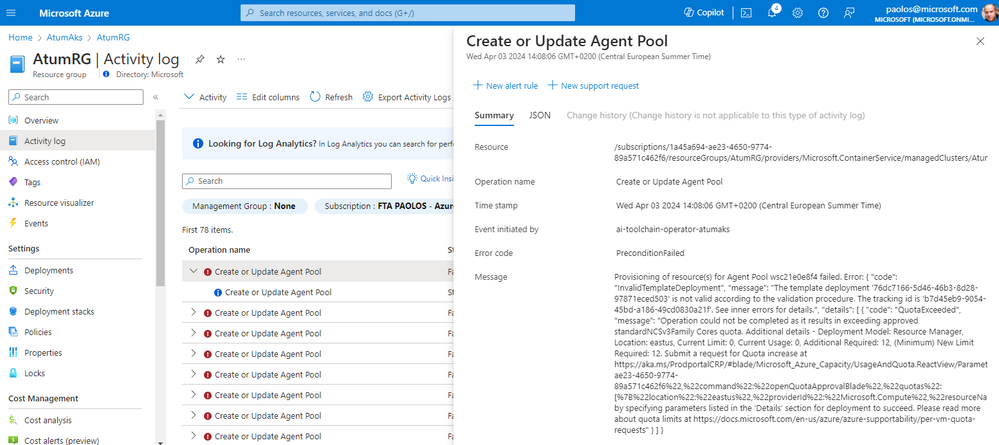

If you don't have enough vCPU quota for the selected instance type, the Kaito workspace creation will fail. You can check the error description using the Azure Monitor Activity Log, as shown in the following figure:

To read the logs of the Kaito GPU provisioner pod in the kube-system namespace, you can use the following command.

If you exceed the quota for the selected instance type, you might see an error message like the following:

The project provides the code of a chat application using Python and Chainlit that interacts with the inference endpoint exposed by the AKS-hosted model.

As an alternative, the chat application can be configured to call the REST API of an Azure OpenAI Service. For more information about how to configure the chat application with Azure OpenAI Service, see the following articles:

- Create an Azure OpenAI, LangChain, ChromaDB, and Chainlit chat app in AKS using Terraform (Azure Samples)(My GitHub)(Tech Community)

- Deploy an OpenAI, LangChain, ChromaDB, and Chainlit chat app in Azure Container Apps using Terraform (Azure Samples)(My GitHub)(Tech Community)

This is the code of the sample application.

Here's a brief explanation of each variable and related environment variable:

- temperature: A float value representing the temperature for the Create chat completion method of the OpenAI API. It is fetched from the environment variables with a default value of 0.9.

- top_p: A float value representing the top_p parameter, which uses nucleus sampling to restrict the sampling pool to the tokens comprising the top p probability mass.

- top_k: A float value representing the top_k parameter, which restricts sampling to the k most likely next tokens.

- api_base: The base URL for the OpenAI API.

- api_key: The API key for the OpenAI API. The value of this variable can be null when using a user-assigned managed identity to acquire a security token to access Azure OpenAI.

- api_type: A string representing the type of the OpenAI API.

- api_version: A string representing the version of the OpenAI API.

- engine: The engine used for OpenAI API calls.

- model: The model used for OpenAI API calls.

- system_content: The content of the system message used for OpenAI API calls.

- max_retries: The maximum number of retries for OpenAI API calls.

- timeout: The timeout in seconds.

- debug: When debug is equal to true, t, or 1, the logger writes the chat completion answers.

- useLocalLLM: When set to true, the chat application calls the inference endpoint of the local model.

- aiEndpoint: The URL of the inference endpoint.
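The following Python sketch shows one way to load these settings from environment variables. Only the 0.9 default for temperature and the true/t/1 convention for debug come from the description above; the environment variable names and the other defaults are hypothetical, and parsing useLocalLLM with the same convention as debug is an assumption.

```python
import os

# Values treated as "true", following the convention described for debug.
TRUE_VALUES = ("true", "t", "1")


def env_bool(name: str, default: str = "false") -> bool:
    """Return True when the variable is 'true', 't', or '1' (any case)."""
    return os.getenv(name, default).strip().lower() in TRUE_VALUES


def load_settings() -> dict:
    """Read the chat app settings from (hypothetically named) env vars."""
    return {
        # 0.9 is the default temperature described in the article.
        "temperature": float(os.getenv("TEMPERATURE", "0.9")),
        # The remaining defaults are illustrative assumptions.
        "top_p": float(os.getenv("TOP_P", "1.0")),
        "system_content": os.getenv("SYSTEM_CONTENT", "You are a helpful assistant."),
        "max_retries": int(os.getenv("MAX_RETRIES", "5")),
        "timeout": float(os.getenv("TIMEOUT", "30")),
        # None when unset, e.g. when a managed identity is used instead of a key.
        "api_key": os.getenv("AZURE_OPENAI_KEY"),
        "debug": env_bool("DEBUG"),
        "useLocalLLM": env_bool("USE_LOCAL_LLM"),
        "aiEndpoint": os.getenv("AI_ENDPOINT", ""),
    }


if __name__ == "__main__":
    print(load_settings())
```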

The application calls the inference endpoint using the requests.request method when the useLocalLLM environment variable is set to true. You can run the application locally using the following command. The -w flag enables auto-reload, so the application reloads whenever you make changes to its code.
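A minimal sketch of such a call follows. It assumes the Kaito inference service accepts a JSON body with a prompt field and an optional generate_kwargs object, so verify the field names against the endpoint exposed by your workspace. The sample application uses requests.request; this sketch uses the standard library's urllib.request instead so it runs without third-party packages, and build_payload and call_local_llm are hypothetical helper names.

```python
import json
import urllib.request


def build_payload(system_content: str, user_message: str,
                  temperature: float, top_p: float,
                  max_length: int = 1024) -> dict:
    """Build the request body (field names are assumptions, see above)."""
    return {
        "prompt": f"{system_content} {user_message}",
        "generate_kwargs": {
            "max_length": max_length,
            "do_sample": True,
            "temperature": temperature,
            "top_p": top_p,
        },
    }


def call_local_llm(ai_endpoint: str, payload: dict, timeout: float = 30.0) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        ai_endpoint,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    payload = build_payload("You are a helpful assistant.", "What is AKS?",
                            temperature=0.9, top_p=1.0)
    # Hypothetical local URL, e.g. after port-forwarding the service to 8080.
    print(call_local_llm("http://localhost:8080/chat", payload))
```

The sketch returns the raw JSON response rather than extracting a specific field, because the response schema depends on the inference server.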

NOTE

To debug your application locally, you have two options for exposing the AKS-hosted inference endpoint service: you can either use the kubectl port-forward command or use an ingress controller to expose the endpoint publicly.
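If you choose kubectl port-forward, a small Python helper like the following can start the tunnel and derive the local URL to use as aiEndpoint. The service name workspace-falcon-7b-instruct, the remote port 80, the default namespace, and the /chat path are assumptions; check the actual values with kubectl get svc.

```python
import subprocess


def port_forward_args(service: str, local_port: int,
                      remote_port: int = 80, namespace: str = "default") -> list:
    """Build the argv for `kubectl port-forward svc/<service> L:R -n <ns>`."""
    return [
        "kubectl", "port-forward", f"svc/{service}",
        f"{local_port}:{remote_port}", "-n", namespace,
    ]


def local_endpoint(local_port: int, path: str = "/chat") -> str:
    """URL of the forwarded inference endpoint on the local machine."""
    return f"http://localhost:{local_port}{path}"


def start_port_forward(service: str, local_port: int,
                       remote_port: int = 80, namespace: str = "default"):
    """Start kubectl port-forward in the background; call .terminate() to stop."""
    return subprocess.Popen(
        port_forward_args(service, local_port, remote_port, namespace)
    )


if __name__ == "__main__":
    proc = start_port_forward("workspace-falcon-7b-instruct", 8080)  # hypothetical
    print("Set aiEndpoint to", local_endpoint(8080))
    try:
        proc.wait()
    except KeyboardInterrupt:
        proc.terminate()
```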

You can use the src/01-build-docker-images.sh Bash script to build the Docker container image for each container app.

Before running any script in the src folder, make sure to customize the values of the variables in the 00-variables.sh file located in the same folder. This file is sourced by all the scripts and contains the following variables. The Dockerfile under the src folder is parametric and can be used to build the container images for both chat applications.

You can use the src/02-run-docker-container.sh Bash script to test the container for the chat application.

You can use the src/03-push-docker-image.sh Bash script to push the Docker container image for the chat application to Azure Container Registry (ACR).
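The build, test, and push scripts are written in Bash, but the sequence of commands they wrap can be sketched in Python as follows. This is an illustration under assumptions, not a translation of the actual scripts: the registry name, image name, tag, and Dockerfile path are hypothetical parameters standing in for the values in 00-variables.sh, and the sketch covers only building, tagging, logging in to ACR, and pushing. By default it prints the commands instead of running them.

```python
import shlex
import subprocess


def image_reference(acr_name: str, image_name: str, tag: str = "v1") -> str:
    """Fully qualified image name; ACR login servers are <name>.azurecr.io in lowercase."""
    return f"{acr_name.lower()}.azurecr.io/{image_name}:{tag}"


def build_and_push_commands(acr_name: str, image_name: str, tag: str = "v1",
                            dockerfile: str = "Dockerfile",
                            context: str = ".") -> list:
    """Return the docker/az commands to build, tag, and push one image."""
    local_image = f"{image_name}:{tag}"
    remote_image = image_reference(acr_name, image_name, tag)
    return [
        ["docker", "build", "-t", local_image, "-f", dockerfile, context],
        ["docker", "tag", local_image, remote_image],
        ["az", "acr", "login", "--name", acr_name],
        ["docker", "push", remote_image],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    for command in commands:
        if dry_run:
            print(shlex.join(command))
        else:
            subprocess.run(command, check=True)


if __name__ == "__main__":
    # Hypothetical registry and image names.
    run(build_and_push_commands("myacr", "chat", "v1"))
```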

You can use the 08-deploy-chat.sh script to deploy the application to AKS.

You can locate the YAML manifests for deploying the chat application in the companion sample under the scripts folder.
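For reference, the following Python sketch generates a minimal Kubernetes Deployment for the chat application as JSON, which kubectl apply accepts just like YAML. It is not the manifest shipped in the scripts folder: the deployment name, the container port 8000 (Chainlit's default), the USE_LOCAL_LLM and AI_ENDPOINT variable names, the image reference, and the in-cluster endpoint URL are hypothetical.

```python
import json


def chat_deployment(name: str, image: str, ai_endpoint: str,
                    replicas: int = 1, port: int = 8000) -> dict:
    """Return a minimal apps/v1 Deployment for the chat app as a dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Hypothetical variable names read by the chat app.
                        "env": [
                            {"name": "USE_LOCAL_LLM", "value": "true"},
                            {"name": "AI_ENDPOINT", "value": ai_endpoint},
                        ],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = chat_deployment(
        name="chat",
        image="myacr.azurecr.io/chat:v1",
        ai_endpoint="http://workspace-falcon-7b-instruct.default.svc.cluster.local/chat",
    )
    print(json.dumps(manifest, indent=2))
```

If you save the sketch as chat_manifest.py, you could run python chat_manifest.py > chat-deployment.json and then kubectl apply -f chat-deployment.json.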

In conclusion, while it is possible to manually create GPU-enabled agent nodes and deploy and tune open-source large language models (LLMs) like Falcon, Mistral, or Llama 2 on Azure Kubernetes Service (AKS), the Kubernetes AI toolchain operator (Kaito) automates these steps for you. Kaito simplifies running OSS AI models on your AKS clusters by automatically provisioning the necessary GPU nodes and setting up the inference server as an endpoint for your models. By using Kaito, you can reduce the time spent on infrastructure setup and focus on AI model usage and development. Additionally, Kaito has just been released, and new features are expected to follow, providing even more capabilities for managing and deploying AI models on AKS.