This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

In collaboration with Meta, today Microsoft is excited to introduce Meta Llama 3 models to Azure AI. The Meta Llama 3 pretrained and instruction fine-tuned models, including Meta-Llama-3-8B-Instruct and Meta-Llama-3-70B-Instruct, are the next generation of Meta Llama large language models (LLMs), available now in the Azure AI Model Catalog. Because the models were trained on a significant amount of pretraining data, developers building with Meta Llama 3 on Azure can expect significant boosts to overall performance, as reported by Meta. Microsoft is delighted to be a launch partner to Meta as enterprises, digital natives and developers build with Meta Llama 3 on Azure, supported with popular LLM developer tools like Azure AI prompt flow and LangChain.

What's New in Meta Llama 3?

Meta Llama 3 includes pre-trained and instruction fine-tuned language models designed to handle a wide spectrum of use cases. According to Meta, the Meta-Llama-3-8B, Meta-Llama-3-70B, Meta-Llama-3-8B-Instruct and Meta-Llama-3-70B-Instruct models show remarkable improvements on industry-standard benchmarks and add advanced functionality such as improved reasoning. Meta also reports significant enhancements to post-training procedures, leading to a substantial reduction in false refusal rates and improvements in the alignment and diversity of model responses. According to Meta, these adjustments have notably enhanced the capabilities of Llama 3. Meta states that Llama 3 retains its decoder-only transformer architecture but now includes a more efficient tokenizer that significantly boosts overall performance. Training, as described by Meta, combined data parallelization, model parallelization, and pipeline parallelization across two specially designed 24K GPU clusters, with plans for future expansions to accommodate up to 350K H100 GPUs.

Features of Meta Llama 3:

- Significantly increased training tokens (15T) allow Meta Llama 3 models to better comprehend language intricacies.

- Extended context window (8K) doubles the capacity of Llama 2, enabling the model to access more information from lengthy passages for informed decision-making.

- A new Tiktoken-based tokenizer is used with a vocabulary of 128K tokens, encoding more characters per token. Meta reports better performance on both English and multilingual benchmark tests, reinforcing its robust multilingual capabilities.

Read more about the new Llama 3 models here.

Use Cases:

- Meta-Llama-3-8B pre-trained and instruction fine-tuned models are recommended for scenarios with limited computational resources, offering faster training times and suitability for edge devices. They are appropriate for use cases like text summarization, classification, sentiment analysis, and translation.

- Meta-Llama-3-70B pre-trained and instruction fine-tuned models are geared towards content creation and conversational AI, providing deeper language understanding for more demanding tasks, like R&D and enterprise applications requiring nuanced text summarization, classification, language modeling, dialog systems, code generation and instruction following.

Expanding horizons: Meta Llama 3 now available on Azure AI Models as a Service

In November 2023, we launched Meta Llama 2 models on Azure AI, marking our inaugural foray into offering Models as a Service (MaaS). Amid growing demand for our expanding MaaS offerings, numerous Azure customers across enterprise, SMC and digital natives have rapidly developed, deployed and operationalized enterprise-grade generative AI applications across their organizations.

The launch of Llama 3 reflects our strong commitment to enabling the full spectrum of open source to proprietary models with the scale of Azure AI-optimized infrastructure and to empowering organizations in building the future with generative AI.

Azure AI provides a diverse array of advanced and user-friendly models, enabling customers to select the model that best fits their use case. Over the past six months, we've expanded our model catalog sevenfold through our partnerships with leading generative AI model providers and the release of Phi-2 from Microsoft Research. The Azure AI model catalog lets developers select from over 1,600 foundational models, including LLMs and SLMs from industry leaders like Meta, Cohere, Databricks, Deci AI, Hugging Face, Microsoft Research, Mistral AI, NVIDIA, OpenAI, and Stability AI. This extensive selection helps Azure customers find the most suitable model for their unique use case.

How can you benefit from using Meta Llama 3 on Azure AI Models as a Service?

Developers using Meta Llama 3 models can work seamlessly with tools in Azure AI Studio, such as Azure AI Content Safety, Azure AI Search, and prompt flow to enhance ethical and effective AI practices. Here are some main advantages that highlight the smooth integration and strong support system provided by Meta's Llama 3 with Azure, Azure AI and Models as a Service:

- Enhanced Security and Compliance: Azure places a strong emphasis on data privacy and security, adopting Microsoft's comprehensive security protocols to protect customer data. With Meta Llama 3 on Azure AI Studio, enterprises can operate confidently, knowing their data remains within the secure bounds of the Azure cloud, thereby enhancing privacy and operational efficiency.

- Content Safety Integration: Customers can integrate Meta Llama 3 models with content safety features available through Azure AI Content Safety, enabling additional responsible AI practices. This integration facilitates the development of safer AI applications, ensuring content generated or processed is monitored for compliance and ethical standards.

- Simplified Assessment of LLM flows: Azure AI's prompt flow supports evaluation flows, which help developers measure how well the outputs of LLMs match the given standards and goals by computing metrics. This feature is useful for workflows created with Llama 3; it enables a comprehensive assessment using metrics such as groundedness, which gauges the pertinence and accuracy of the model's responses based on the input sources when using a retrieval augmented generation (RAG) pattern.

- Client integration: You can use the API and key with various clients. Use the provided API in Large Language Model (LLM) tools such as prompt flow, OpenAI, LangChain, LiteLLM, CLI with curl and Python web requests. Deeper integrations and further capabilities are coming soon.

- Simplified Deployment and Inference: By deploying Meta models through MaaS with pay-as-you-go inference APIs, developers can take advantage of the power of Llama 3 without managing underlying infrastructure in their Azure environment. You can view the pricing on Azure Marketplace for Meta-Llama-3-8B-Instruct and Meta-Llama-3-70B-Instruct models based on input and output token consumption.

These features demonstrate Azure's commitment to offering an environment where organizations can harness the full potential of AI technologies like Llama 3 efficiently and responsibly, driving innovation while maintaining high standards of security and compliance.

Getting Started with Meta Llama 3 on MaaS

To get started with Azure AI Studio and deploy your first model, follow these clear steps:

- Familiarize Yourself: If you're new to Azure AI Studio, start by reviewing this documentation to understand the basics and set up your first project.

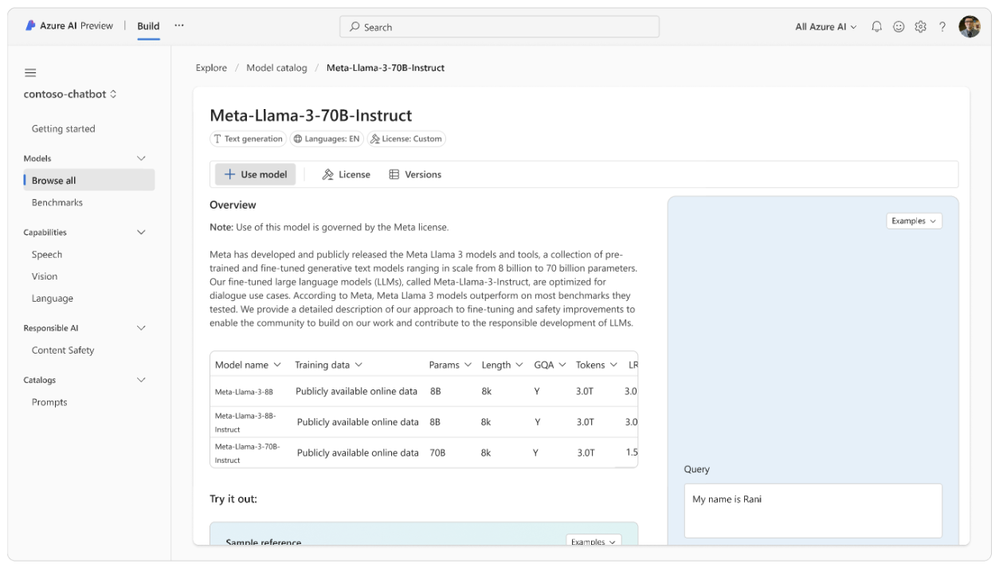

- Access the Model Catalog: Open the model catalog in AI Studio.

- Find the Model: Use the filter to select the Meta collection or click the “View models” button on the MaaS announcement card.

- Select the Model: Open the Meta-Llama-3-70B text model from the list.

- Deploy the Model: Click on ‘Deploy’ and choose the Pay-as-you-go (PAYG) deployment option.

- Subscribe and Access: Subscribe to the offer to gain access to the model (usage charges apply), then proceed to deploy it.

- Explore the Playground: After deployment, you will automatically be redirected to the Playground. Here, you can explore the model's capabilities.

- Customize Settings: Adjust the context or inference parameters to fine-tune the model's predictions to your needs.

- Access Programmatically: Click on the “View code” button to obtain the API, keys, and a code snippet. This enables you to access and integrate the model programmatically.

- Integrate with Tools: Use the provided API in Large Language Model (LLM) tools such as prompt flow, Semantic Kernel, LangChain, or any other tools that support REST API with key-based authentication for making inferences.
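The "Access Programmatically" and "Integrate with Tools" steps above come down to a REST call with key-based authentication. The following is a minimal sketch of what such a call might look like using only the Python standard library. The endpoint URL, the `/v1/chat/completions` route, the Bearer-token header, and the helper names `build_chat_request` and `send_chat_request` are all assumptions made for illustration; the authoritative values are the ones shown in the "View code" panel for your deployment.

```python
import json
import urllib.request

# Hypothetical placeholders: copy the real values from the "View code" panel.
ENDPOINT_URL = "https://<your-deployment>.<region>.inference.ai.azure.com"
API_KEY = "<your-api-key>"


def build_chat_request(endpoint_url, api_key, user_message,
                       max_tokens=256, temperature=0.7):
    """Build (but do not send) a chat completion request.

    Assumes an OpenAI-style /v1/chat/completions route and Bearer-token
    authentication; verify both against your deployment's code snippet.
    """
    payload = {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=endpoint_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the parsed JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With real values in place, `send_chat_request(build_chat_request(ENDPOINT_URL, API_KEY, "Summarize this paragraph: ..."))` would return the parsed response. Building the request separately from sending it makes it easy to inspect the headers and body before any usage charges apply.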

Looking Ahead

The introduction of these latest Meta Llama 3 models reinforces our mission to scale AI. As we continue to innovate and expand our model catalog, our commitment remains firm: to provide accessible, powerful, and efficient AI solutions that empower developers and organizations alike with the full spectrum of model options from open source to proprietary.

Build with Meta Llama 3 on Azure:

We invite you to explore and build with the new Meta Llama 3 models within the Azure AI model catalog and start integrating cutting-edge AI into your applications today. Stay tuned for more updates and developments.

FAQ

- What does it cost to use Meta Llama 3 models on Azure?

- You are billed based on the number of prompt and completion tokens. You can review the pricing on the Meta Llama 3 offer in the Marketplace offer details tab when deploying the model. You can also find the pricing on the Azure Marketplace:

- Do I need GPU capacity in my Azure subscription to use Llama 3 models?

- No, you do not need GPU capacity. Llama 3 models are offered as an API.

- Llama 3 is listed on the Azure Marketplace. Can I purchase and use Llama 3 directly from Azure Marketplace?

- Azure Marketplace enables the purchase and billing of Llama 3, but the purchase experience can only be accessed through the model catalog. Attempting to purchase Llama 3 models from the Marketplace will redirect you to Azure AI Studio.

- Given that Llama 3 is billed through the Azure Marketplace, does it retire my Azure consumption commitment (aka MACC)?

- Yes, Llama 3 is an “Azure benefit eligible” Marketplace offer, which indicates MACC eligibility. Learn more about MACC here: https://learn.microsoft.com/en-us/marketplace/azure-consumption-commitment-benefit

- Is my inference data shared with Meta?

- No, Microsoft does not share the content of any inference request or response data with Meta.

- Are there rate limits for the Meta models on Azure?

- Meta models come with limits of 200k tokens per minute and 1k requests per minute. Reach out to Azure customer support if this doesn’t suffice.

- Are Meta models region specific?

- Meta model API endpoints can be created in AI Studio projects or Azure Machine Learning workspaces in EastUS2. If you want to use Meta models in prompt flow in projects or workspaces in other regions, you can add the API and key as a connection to prompt flow manually. Essentially, you can use the API from any Azure region once you create it in EastUS2.

- Can I fine-tune Meta Llama 3 models?

- Not yet, stay tuned…
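For the rate limits mentioned in the FAQ (200k tokens per minute and 1k requests per minute), a client can throttle itself before sending requests rather than waiting for the service to reject them. The sketch below is one possible client-side approach. It assumes a rolling 60-second window and caller-supplied token estimates; the post does not say how the service measures its limits, and the class name `SlidingWindowLimiter` is hypothetical.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Track admitted requests and their tokens over a rolling window."""

    def __init__(self, max_requests=1_000, max_tokens=200_000,
                 window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.max_tokens = max_tokens
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._events = deque()  # (timestamp, tokens) per admitted request
        self._tokens_in_window = 0

    def _evict_expired(self, now):
        # Drop requests that have aged out of the window.
        while self._events and now - self._events[0][0] >= self.window_seconds:
            _, tokens = self._events.popleft()
            self._tokens_in_window -= tokens

    def try_acquire(self, tokens):
        """Return True and record the request if it fits both limits."""
        now = self.clock()
        self._evict_expired(now)
        if len(self._events) + 1 > self.max_requests:
            return False
        if self._tokens_in_window + tokens > self.max_tokens:
            return False
        self._events.append((now, tokens))
        self._tokens_in_window += tokens
        return True
```

A caller would estimate the prompt plus completion tokens for a request, call `try_acquire(estimate)`, and sleep briefly before retrying whenever it returns `False`.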