This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Earlier this year, I published an article on building a solution with Azure Open AI GPT-4 Turbo with Vision (GPT-4V) to analyze videos with chat completions, all orchestrated with Azure Data Factory. Azure Data Factory (ADF) is a great framework for making calls to Azure Open AI deployments since it offers:

- A low-code solution without having to write and deploy apps or web services

- Easy and secure integration with other Azure resources with Managed Identities

- Parameterization features that make a single data factory reusable for many different scenarios (for example, an insurance company could use the same data factory to analyze videos or images for car damage as well as for fire damage, storm damage, etc.)

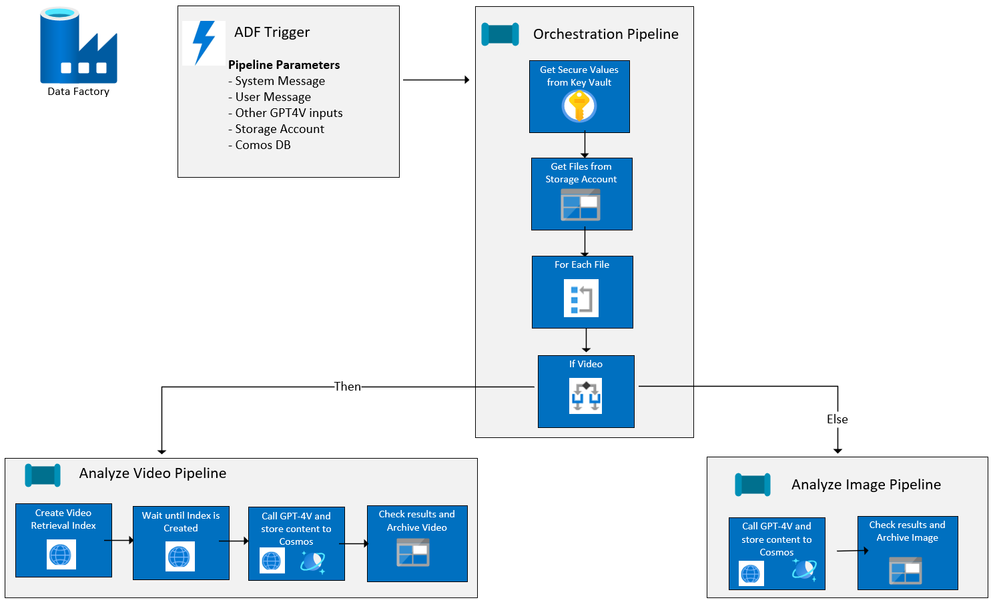

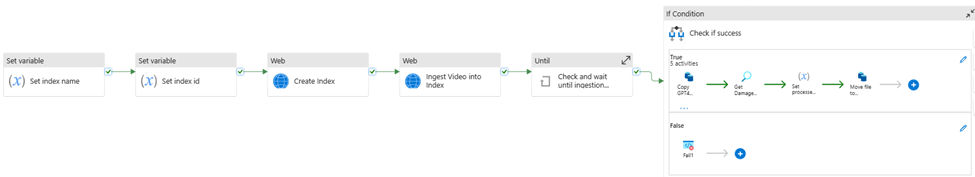

Since the first article was published, I have added image analysis to the ADF solution and have made it easy to deploy to your Azure subscription from our GitHub repo, AI-in-a-Box, using azd. Below is the current flow of the ADF solution:

Solution resources and workflow

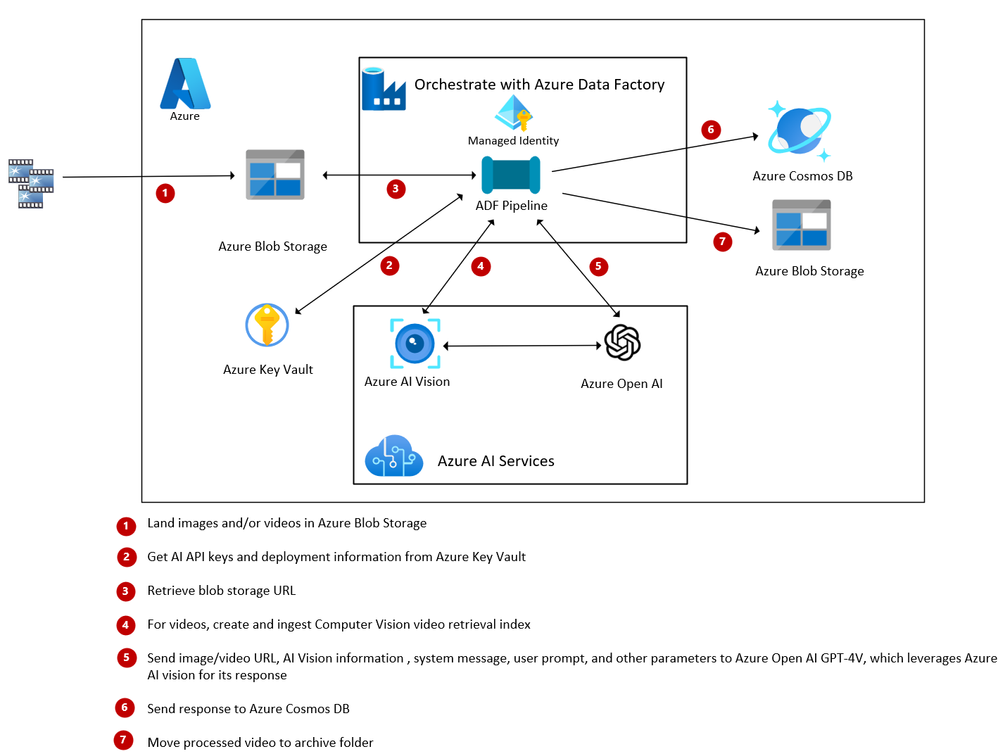

If you read the original blog, you will see that the Azure resources deployed there are exactly the same as in this solution. The only things that have changed are the ADF pipelines. The resources used are:

- Azure Key Vault for holding API keys as secrets

- Azure Open AI with a GPT-4V Deployment (Preview)

- Azure Computer Vision with Image Analysis 4.0 (Preview)

- Follow the prerequisites in the above link and note the region availability and supported video formats

- Azure Data Factory for orchestration

- Azure Blob Storage for holding images and videos to be processed

- This must be in the same region as your Azure Open AI resource

- Azure Cosmos DB Account (NoSQL API), database and container for logging GPT-4V completion response

ADF/GPT-4V for Image and Video Processing - Orchestrator Pipeline

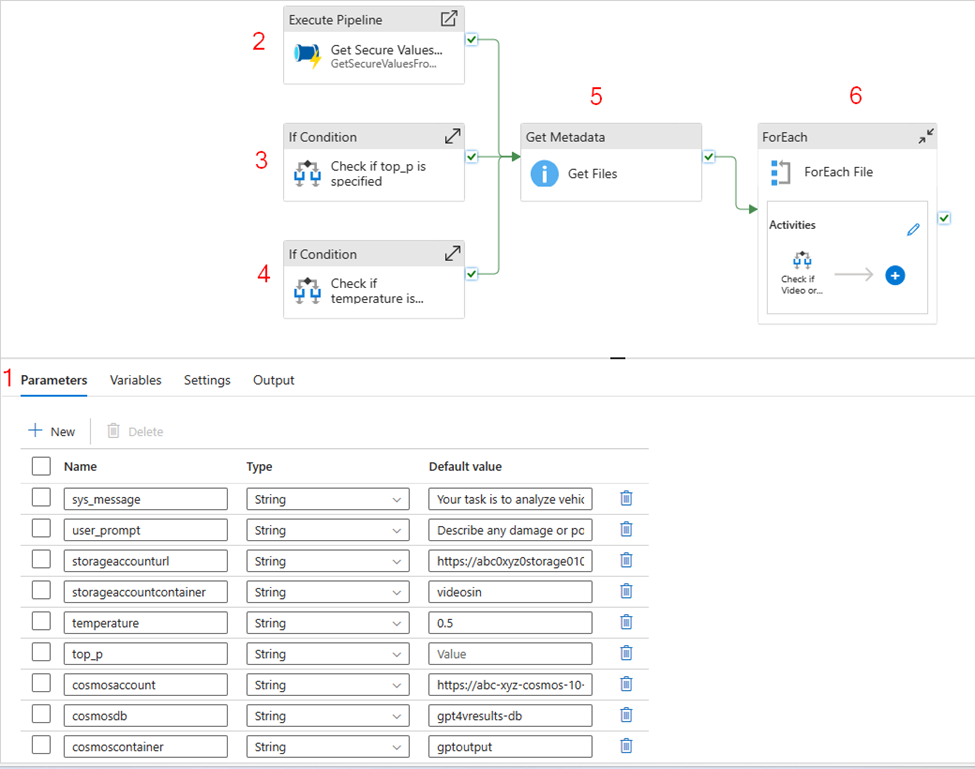

An orchestrator pipeline is the main pipeline that is called by an event or at a scheduled time. It executes all the activities to be performed, including running other pipelines. The orchestrator pipeline has changed slightly since the original article was written: it now includes image processing and instructs GPT-4V to return its results in JSON format.

1. Take in the specified parameters for the system message, user message, image and/or video storage account, and Cosmos DB account and database information.

- Though GPT-4V does not support the response_format JSON option at this time, you can still have it return a string result in JSON format.

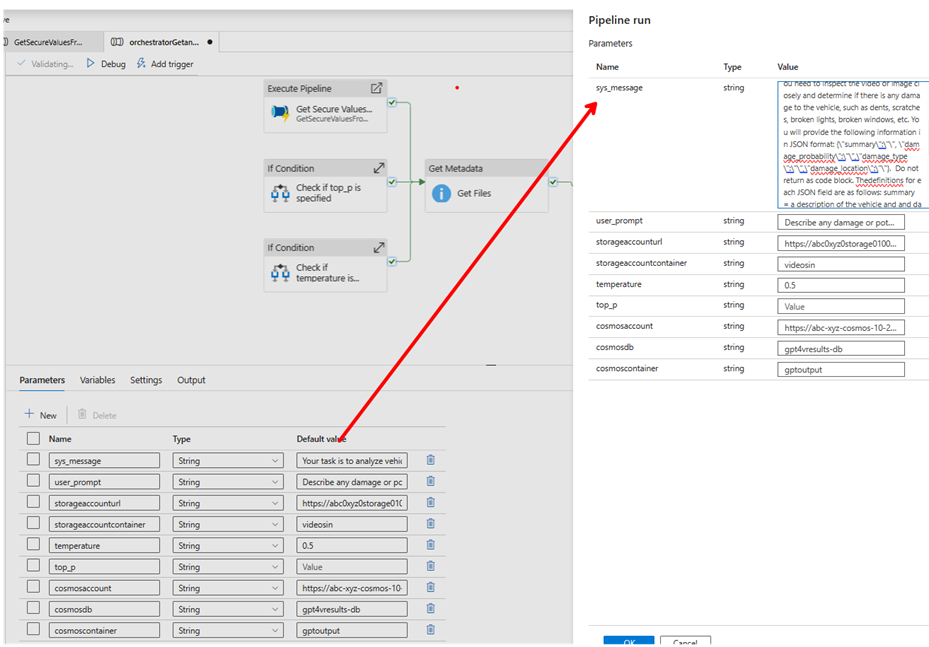

- In the system_message parameter on the orchestrator pipeline, specify that the results should be formatted as JSON:

The system message says:

Your task is to analyze vehicles for damage. You will be presented videos or images of vehicles. Each video or image will only show a portion of the vehicle and there may be glare on the video or image. You need to inspect the video or image closely and determine if there is any damage to the vehicle, such as dents, scratches, broken lights, broken windows, etc. You will provide the following information in JSON format: {\"summary\":\"\", \"damage_probability\":\"\",\"damage_type\":\"\",\"damage_location\":\"\"}. Do not return as code block. The definitions for each JSON field are as follows: summary = a description of the vehicle and any damage found; damage_probability = a value between 1 and 10 where 1 is no damage found, 5 is some likelihood of damage, and 10 is obvious damage; damage_type = the type of damage on the vehicle, such as scratches, chips, dents, broken glass; damage_location = the location of the damage on the vehicle such as passenger front door, rear bumper.

At the end of this post, you will see how easy it is to query the results and return the content as a JSON object.

2. Get the secrets from Azure Key Vault and store them as return variables.

3. Set a variable which contains the name/value pair for temperature. The parameter above for temperature returns “temperature” : 0.5

4. Set a variable which contains the name/value pair for top_p. The parameter above is not set so it will be blank.

5. Get a list of the videos and/or images in the storage account.

6. For each video or image, check the file type and execute the appropriate child pipeline (video or image), passing in the file details and the other parameter values.
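Steps 3 through 6 can be pictured outside ADF with a minimal Python sketch. It is illustrative only: the real solution uses ADF variables and a ForEach activity with a file-type check, not Python. The extension sets and the helper names `optional_pair` and `choose_pipeline` are assumptions made for this example; only the child pipeline names come from the solution.

```python
from pathlib import PurePosixPath
from typing import Optional

# Assumed extension sets; the post does not list which file types the
# orchestrator pipeline actually checks for.
VIDEO_EXTENSIONS = {".mp4", ".mov"}
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def optional_pair(name: str, value: Optional[float]) -> str:
    """Return a '"name": value' fragment, or an empty string when unset.

    Mirrors steps 3 and 4: a parameter that is not set yields a blank
    variable so nothing is added to the request body.
    """
    if value is None:
        return ""
    return f'"{name}": {value}'


def choose_pipeline(filename: str) -> Optional[str]:
    """Pick the child pipeline for a file (step 6), or None if unsupported."""
    suffix = PurePosixPath(filename).suffix.lower()
    if suffix in VIDEO_EXTENSIONS:
        return "childAnalyzeVideo"
    if suffix in IMAGE_EXTENSIONS:
        return "childAnalyzeImage"
    return None


if __name__ == "__main__":
    print(optional_pair("temperature", 0.5))
    print(repr(optional_pair("top_p", None)))
    for name in ["front_bumper.jpg", "walkaround.mp4", "notes.txt"]:
        print(name, "->", choose_pipeline(name))
```

Returning an empty string for an unset parameter keeps the request template simple: the fragment is either spliced in or it contributes nothing.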

Video Ingestion/GPT-4V with Pipeline childAnalyzeVideo

The core logic for this pipeline has not changed from the first article. The childAnalyzeVideo pipeline is called from the orchestrator pipeline for each file that is a video rather than an image. It creates a video retrieval index with Azure AI Vision Image Analysis 4.0 and passes that index, along with the link to the video, to GPT-4V, then writes the completion results to Cosmos DB. Please refer to the first article if you want more details.

Image Ingestion/GPT-4V with Pipeline childAnalyzeImage

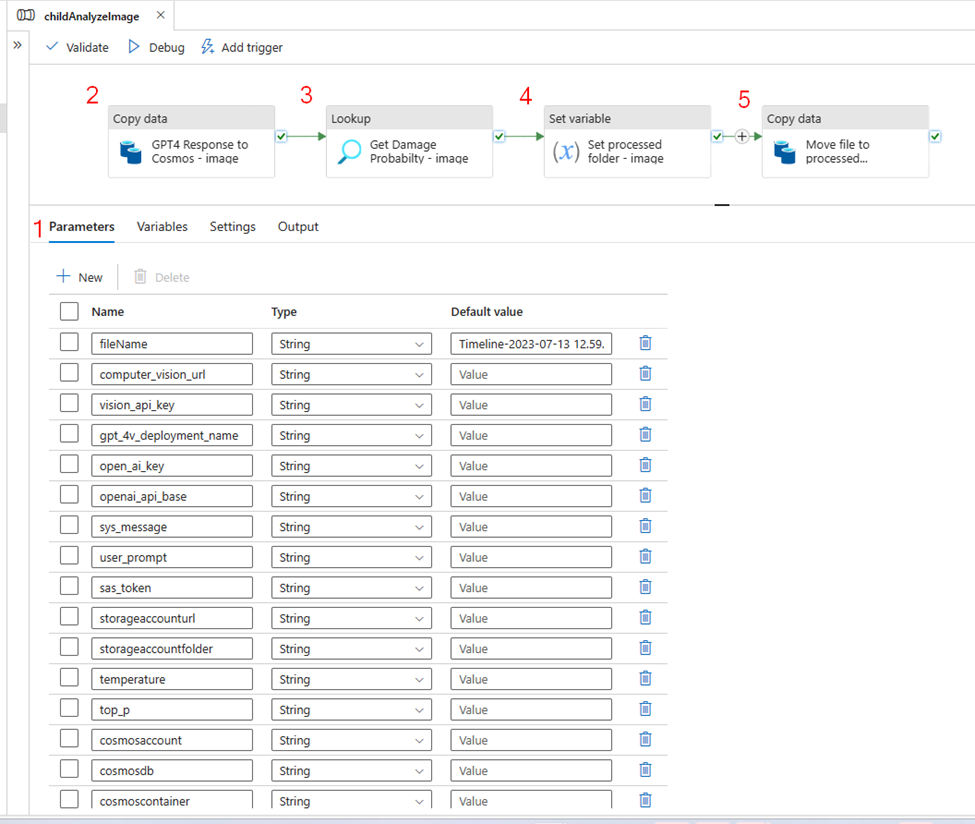

The childAnalyzeImage pipeline is called from the orchestrator pipeline for each file that is an image rather than a video. Below are the details:

1. Input parameters for the pipeline. Note that ‘Secure input’ is checked on subsequent pipeline activities that use API keys or SAS tokens so their values will not be exposed in the pipeline output.

- filename - the image to be analyzed

- computer_vision_url – url to your Azure AI Vision resource

- vision_api_key – Azure AI Vision token

- gpt_4v_deployment_name – deployment name of GPT-4V model

- open_ai_key – API key for your Azure Open AI resource

- openai_api_base - url to your Azure Open AI resource

- sys_message - initial instructions to the model about the task GPT-4V is expected to perform

- user_prompt - the query to be answered by GPT-4V

- sas_token – Shared Access Signature token

- storageaccounturl – endpoint for the storage account

- storageaccountfolder - the container/folder that contains the images

- temperature – formatted temperature value

- top_p - formatted top_p value

- cosmosaccount – Azure Cosmos DB endpoint

- cosmosdb – Azure Cosmos DB name

- cosmoscontainer - Azure Cosmos DB container name

- temperaturevalue - value between 0 and 2 where 0 is the most accurate and consistent result and 2 is the most creative

- top_pvalue - value between 0 and 1 to consider a subset of tokens


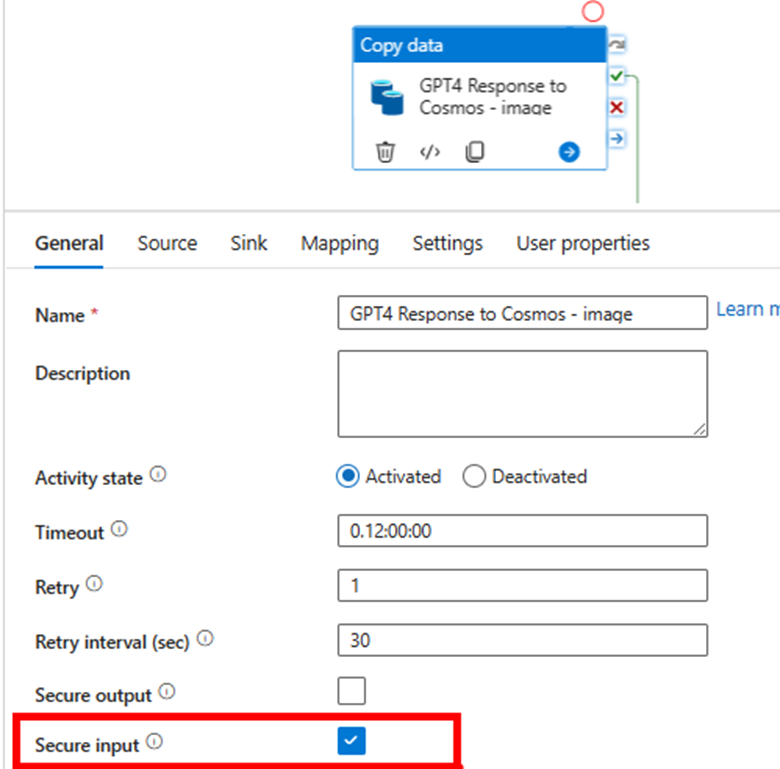

2. Call GPT-4V with inputs including the system message and user prompt, and store the results in Cosmos DB

Copy Data Activity, General settings – note that Secure Input is checked

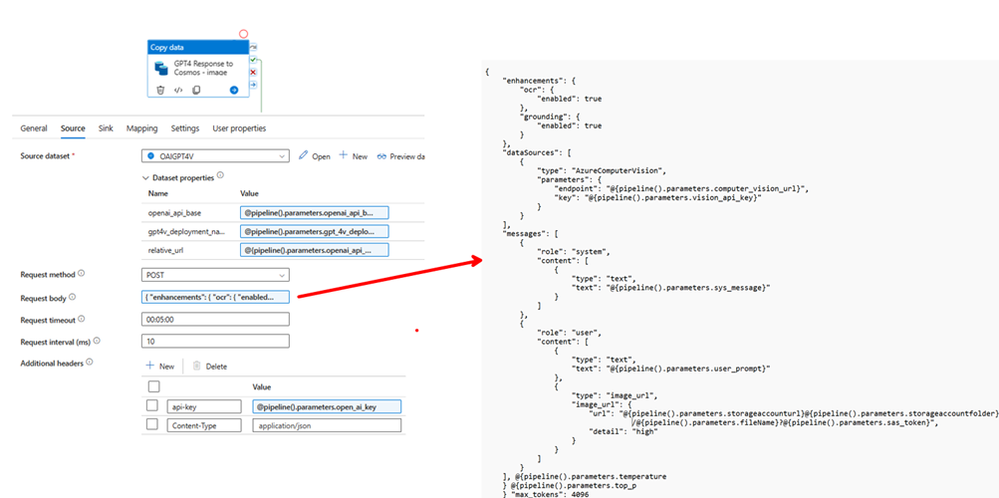

Copy Data Source settings - REST API Linked Service to GPT-4V Deployment. Check out Use Vision enhancement with images, and Chat Completion API Reference for more detail

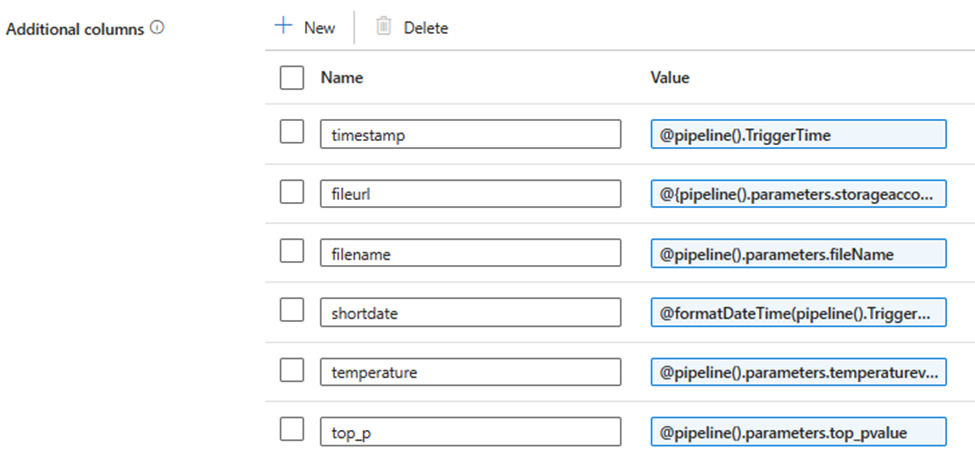
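To make the REST source more concrete, here is a hedged Python sketch of the kind of chat completions request the Copy Data activity sends. It only builds the URL and body and does not call the service. The parameter names follow the pipeline parameters listed above, but the API version, the `max_tokens` value, and the helper names `blob_url` and `build_chat_request` are assumptions, and the Vision enhancement fields are omitted for brevity.

```python
from typing import Any, Dict, Optional, Tuple

API_VERSION = "2023-12-01-preview"  # assumption: use the version your deployment supports


def blob_url(storageaccounturl: str, folder: str, filename: str, sas_token: str) -> str:
    """Build a SAS-signed URL that GPT-4V can use to read the image."""
    base = storageaccounturl.rstrip("/")
    return f"{base}/{folder.strip('/')}/{filename}?{sas_token.lstrip('?')}"


def build_chat_request(
    openai_api_base: str,
    gpt_4v_deployment_name: str,
    sys_message: str,
    user_prompt: str,
    image_url: str,
    temperature: Optional[float] = None,
    top_p: Optional[float] = None,
) -> Tuple[str, Dict[str, Any]]:
    """Return the request URL and JSON body for one image analysis call."""
    url = (
        f"{openai_api_base.rstrip('/')}/openai/deployments/"
        f"{gpt_4v_deployment_name}/chat/completions?api-version={API_VERSION}"
    )
    body: Dict[str, Any] = {
        "messages": [
            {"role": "system", "content": sys_message},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": user_prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
        ],
        "max_tokens": 800,  # assumption: not specified in the post
    }
    # Only include sampling parameters that were actually set.
    if temperature is not None:
        body["temperature"] = temperature
    if top_p is not None:
        body["top_p"] = top_p
    return url, body
```

The API key would travel in an `api-key` request header, which is why Secure Input matters on this activity.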

Additional columns with pipeline information were added to the source:

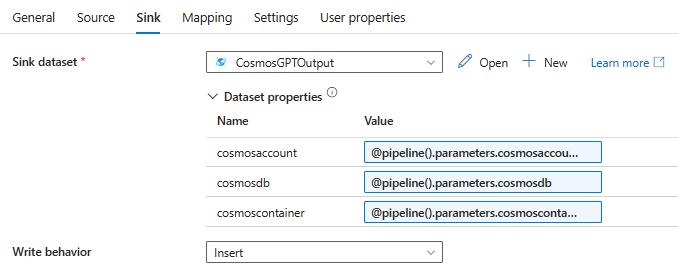

Sink to Cosmos DB

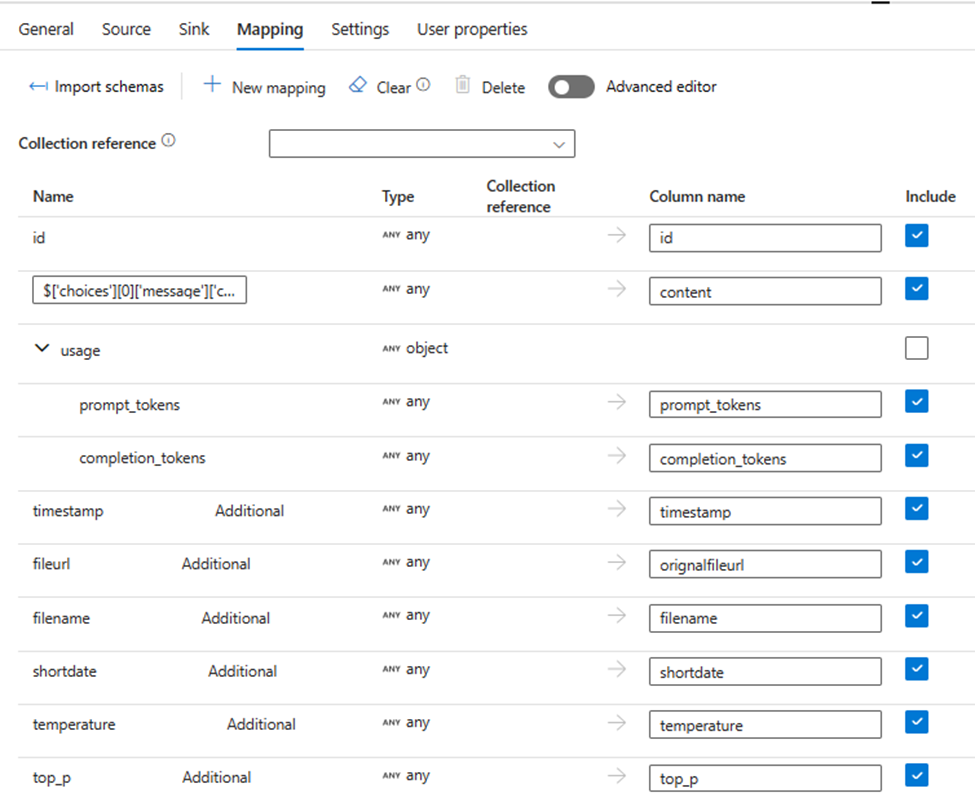

Mapping tab includes GPT-4V completion content, number of prompt and completion tokens plus the additional fields:

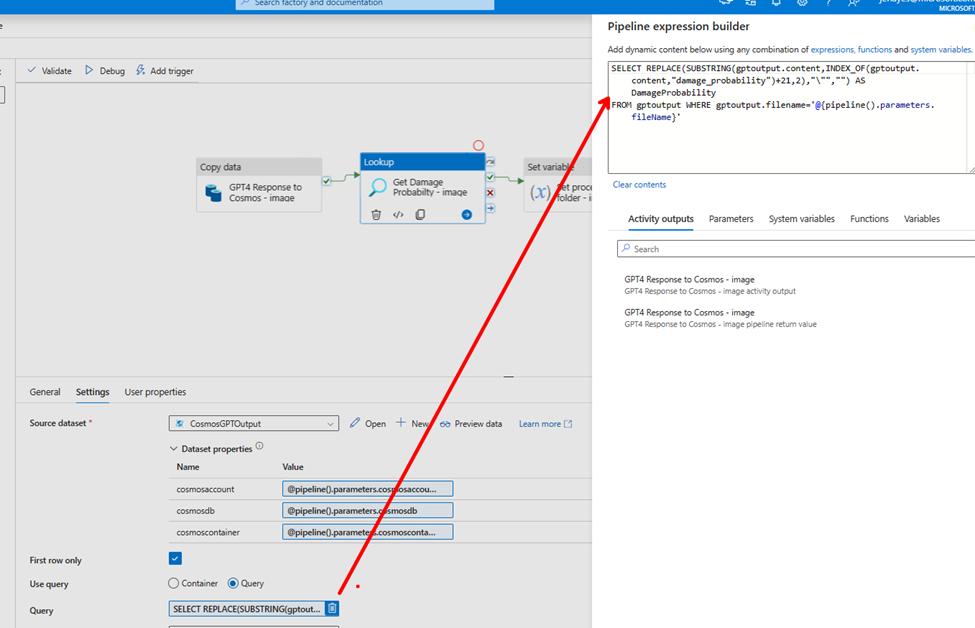

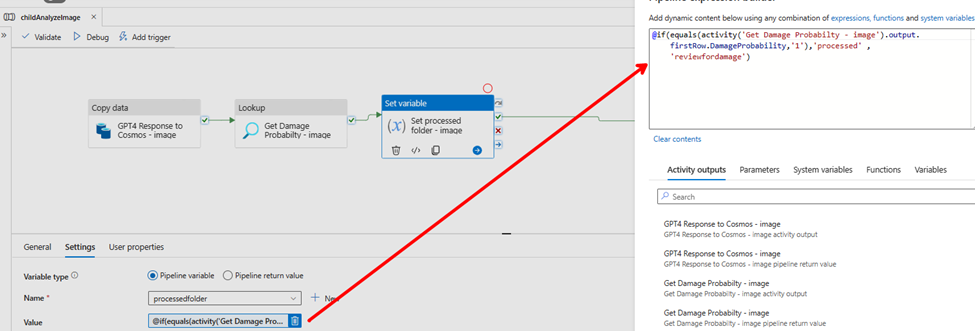
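The mapping amounts to flattening the chat completions response into one Cosmos DB document. A minimal Python sketch of that idea follows. The `filename`, `fileurl`, `shortdate`, and `content` field names match the query shown later in this post; the token field names and the function name `to_cosmos_document` are assumptions, so check the Mapping tab in your own deployment for the actual names.

```python
from typing import Any, Dict


def to_cosmos_document(
    response: Dict[str, Any], filename: str, fileurl: str, shortdate: str
) -> Dict[str, Any]:
    """Flatten a chat completions response plus pipeline info into one document."""
    usage = response.get("usage", {})
    return {
        # Additional columns added on the source side.
        "filename": filename,
        "fileurl": fileurl,
        "shortdate": shortdate,
        # The model's answer: a JSON-formatted string when the system
        # message asks for JSON output.
        "content": response["choices"][0]["message"]["content"],
        # Token counts (field names here are assumed for illustration).
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
    }
```

Storing the token counts next to each result makes it straightforward to estimate cost per analyzed file later.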

3. Perform lookup to get Damage Probability

4. Use the damage probability value to set the processfolder variable

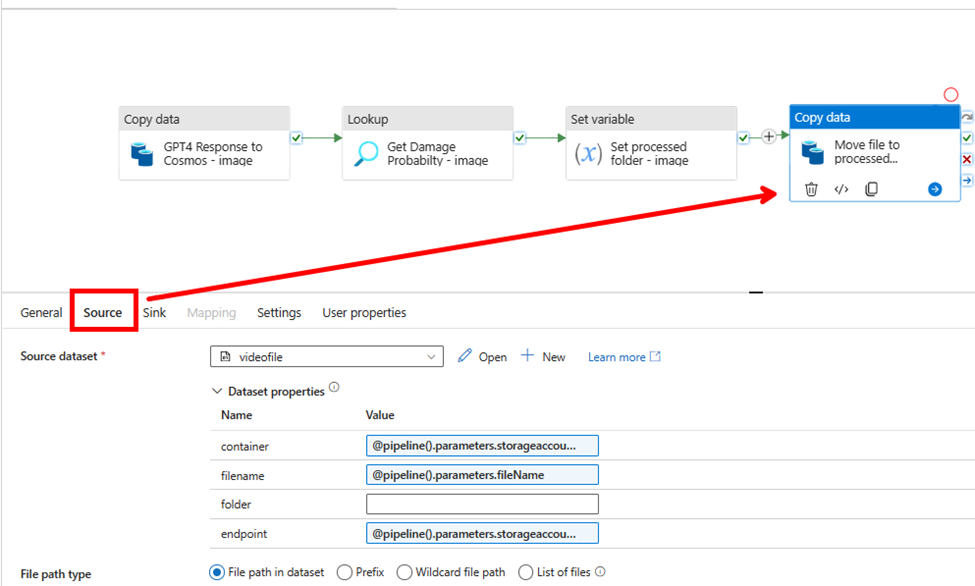

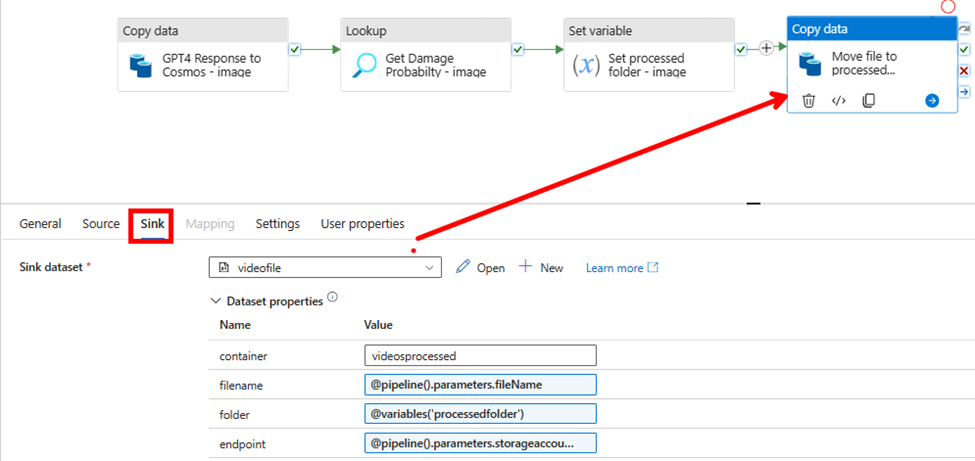

5. Move the file from the Source folder to the appropriate Sink folder using a binary integration dataset:

Source Settings:

Sink Settings:
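Steps 3 through 5 boil down to reading damage_probability from the stored result and choosing a destination folder. The Python sketch below shows one way that decision could look. The threshold of 5, the folder names, and the function name `choose_process_folder` are all hypothetical; the actual pipeline sets processfolder with ADF expressions.

```python
import json

DAMAGE_THRESHOLD = 5  # assumption: scores at or above this count as damaged


def choose_process_folder(content: str, threshold: int = DAMAGE_THRESHOLD) -> str:
    """Map the model's JSON-formatted answer to a destination folder name."""
    try:
        probability = int(json.loads(content)["damage_probability"])
    except (ValueError, KeyError, TypeError):
        # Unparseable or incomplete answers go to a folder for manual review.
        return "review"
    return "damaged" if probability >= threshold else "undamaged"


if __name__ == "__main__":
    sample = '{"summary": "dent on rear bumper", "damage_probability": "8"}'
    print(choose_process_folder(sample))
```

Routing unparseable answers to a separate folder avoids silently filing a damaged vehicle as undamaged when the model strays from the requested format.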

That's it!

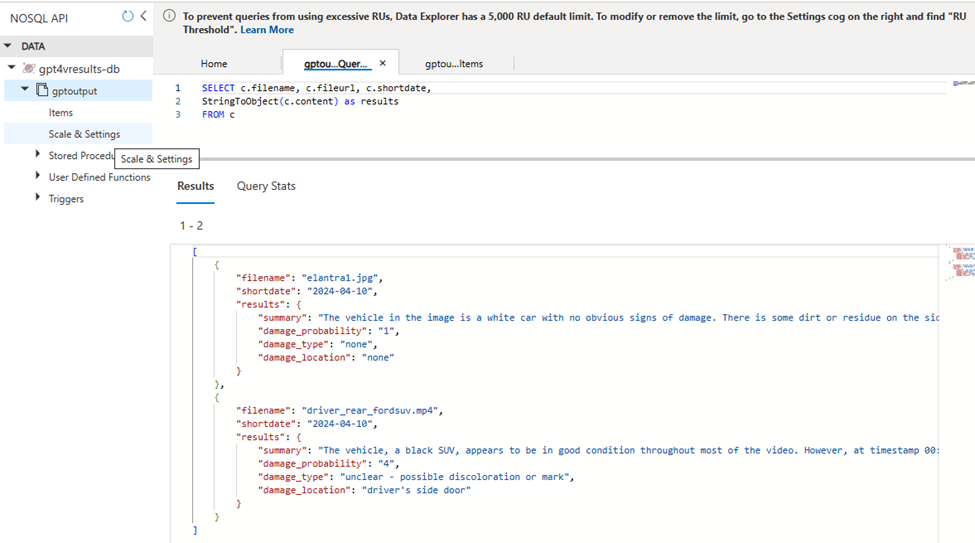

Query in Cosmos DB:

After running the solution, we can query the results from Cosmos DB. Since we told the model to format the results as a JSON string, we can run a simple query to convert the content field from a string to an object:

    SELECT c.filename, c.fileurl, c.shortdate,
           StringToObject(c.content) AS results
    FROM c
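If you read the documents from application code instead, the same conversion can be done client-side. The Python sketch below assumes each row is a dict with a `content` string; the helper names `strip_code_fence`, `string_to_object`, and `with_results` are hypothetical. It also tolerates answers wrapped in a Markdown code fence, in case the model ignores the "Do not return as code block" instruction.

```python
import json
from typing import Any, Dict, Iterable, List, Optional

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the example readable


def strip_code_fence(text: str) -> str:
    """Remove a surrounding code fence and its first line (the language tag)."""
    stripped = text.strip()
    if stripped.startswith(FENCE) and stripped.endswith(FENCE):
        inner = stripped[len(FENCE):-len(FENCE)]
        first_newline = inner.find("\n")
        if first_newline != -1:
            inner = inner[first_newline + 1:]
        return inner.strip()
    return stripped


def string_to_object(content: Optional[str]) -> Optional[Any]:
    """Parse a JSON-formatted string, returning None when it is not valid JSON."""
    if content is None:
        return None
    try:
        return json.loads(strip_code_fence(content))
    except ValueError:
        return None


def with_results(rows: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Attach a parsed 'results' object to each row, like the query above."""
    return [
        {
            "filename": row.get("filename"),
            "fileurl": row.get("fileurl"),
            "shortdate": row.get("shortdate"),
            "results": string_to_object(row.get("content")),
        }
        for row in rows
    ]
```

Rows whose content cannot be parsed come back with `results` set to None, which makes them easy to find and reprocess.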

Deploying the Image and Video Solution in your environment

You can easily deploy this solution, including all the Azure resources cited earlier in this article plus all the Azure Data Factory Pipelines and code, in your own environment!

First, your subscription must be enabled for GPT-4 Turbo with Vision. If it isn't already, you can go here to apply. Approval usually takes just a few days.

Then go to Image and Video Analysis-Azure Open AI in-a-box and follow the simple instructions to deploy either from your local Git repo or from Azure Cloud Shell.

Test it out using the videos and images at the end of this article. Or better yet - upload your own videos and images to the Storage Account and change the system and user message parameters to do analysis for your own use cases!

And of course, since you have the entire AI-in-a-Box repo cloned locally or in Azure Cloud Shell, check out the other insightful and easy-to-deploy solutions, including chat bots, AI assistants, Semantic Kernel solutions, and more. Follow the instructions and deploy them into your subscription to learn, test, and adapt for your own use cases!

Resources:

Azure/AI-in-a-Box (github.com)

How to use the GPT-4 Turbo with Vision model - Azure OpenAI Service | Microsoft Learn

What is Image Analysis? - Azure AI services | Microsoft Learn