This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Content authored by: Arpita Parmar

Introduction

If you've been delving into the potential of large language models (LLMs) for search and retrieval tasks, you've probably encountered Retrieval Augmented Generation (RAG) as a valuable technique. RAG enriches LLM-generated responses by integrating relevant contextual information, particularly when connected to private data sources. This integration empowers the model to deliver more accurate and contextually rich responses.

Challenges with RAG evaluation

Evaluating RAG poses several challenges and requires a multifaceted approach: both response quality and retrieval effectiveness must be assessed to ensure optimal performance.

Traditional evaluation metrics for RAG applications, while useful, have certain limitations that can impact their effectiveness in accurately assessing RAG performance. Some of these limitations include:

- Inability to fully capture user intent: Traditional evaluation metrics often focus on lexical and semantic aspects but may not fully capture the underlying user intent behind a query. This can result in a disconnect between the metrics used to evaluate RAG performance and the actual user experience.

- Reliance on ground truth: Many traditional evaluation metrics rely on the availability of a pre-defined ground truth to compare system-generated responses against. However, establishing ground truth can be challenging, particularly for complex queries or those with multiple valid answers. This can limit the applicability of these metrics in certain scenarios.

- Limited applicability across different query types: Traditional evaluation metrics may not be equally effective across different query types, such as fact-seeking, concept-seeking, or keyword queries. This can result in an incomplete or skewed assessment of RAG performance, particularly when dealing with diverse query types.

Overall, while traditional evaluation metrics offer valuable insights into RAG performance, they are not without their limitations. Incorporating user feedback into the evaluation process adds another layer of insight, bridging the gap between quantitative metrics and qualitative user experiences. Therefore, adopting a multifaceted approach that considers retrieval quality, relevance of response to retrieval, user intent, ground truth availability, query type diversity, and user feedback is essential for a comprehensive and accurate evaluation of RAG systems.

Improving RAG Application’s Retrieval with Azure AI Search

When evaluating RAG applications, it is crucial to accurately assess retrieval effectiveness and to tune retrieval relevance. Because the retrieved data is key to a successful implementation of the RAG pattern, integrating a capable retrieval system such as Azure AI Search can significantly enhance the quality of your results. Although Azure AI Search offers keyword (full-text), vector, and hybrid search capabilities, this post focuses on hybrid search. Hybrid search can be particularly beneficial in scenarios where retrieval performance is inconsistent or insufficient. By combining keyword and vector-based search techniques, hybrid search can improve the accuracy and completeness of the retrieved documents, which in turn can improve the relevance of the generated responses.

The hybrid search process in Azure AI Search involves the following steps:

- Keyword search: An initial keyword (full-text) search finds documents that contain the query terms and ranks them with the BM25 ranking algorithm.

- Vector search: In parallel, vector search maps the query to semantically similar documents by comparing dense vector representations (embeddings) stored in vector fields, using either the Hierarchical Navigable Small World (HNSW) algorithm or exhaustive k-nearest neighbors (KNN).

- Result merging: The results from the keyword and vector searches are merged into a single ranked list using the Reciprocal Rank Fusion (RRF) algorithm.
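To make the three steps concrete, here is a minimal, self-contained Python sketch of the idea on toy data. It is not Azure AI Search's implementation: BM25 uses the common Okapi defaults `k1=1.2` and `b=0.75`, the vector step uses exhaustive cosine kNN instead of HNSW, and the RRF constant `c=60` is the value commonly used in the RRF literature. All of these, along with the function names and the toy documents and 2-d "embeddings", are assumptions for illustration.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of every tokenized document for a tokenized query."""
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    df = Counter(term for d in docs for term in set(d))  # document frequency
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def keyword_search(query, docs, k):
    """Top-k document ids by BM25; documents with no matching term are dropped."""
    scores = bm25_scores(query, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [i for i in ranked if scores[i] > 0][:k]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def knn_search(query_vec, doc_vecs, k):
    """Exhaustive kNN: compare the query with every document vector."""
    sims = [cosine(query_vec, v) for v in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: sims[i], reverse=True)[:k]


def rrf(rankings, c=60):
    """Reciprocal Rank Fusion: each ranked list adds 1 / (c + rank) per document."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (c + rank)
    return [doc_id for doc_id, _ in fused.most_common()]


docs_text = [
    "reset your password from the account settings page",
    "change a forgotten login credential",
    "password policy requires twelve characters",
]
docs = [t.split() for t in docs_text]
doc_vecs = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7]]  # toy 2-d "embeddings"
query, query_vec = "reset password".split(), [0.8, 0.2]

keyword_rank = keyword_search(query, docs, k=3)      # [0, 2]: doc 1 shares no terms
vector_rank = knn_search(query_vec, doc_vecs, k=3)   # [1, 0, 2]
fused = rrf([keyword_rank, vector_rank])
print(fused)  # [0, 2, 1]
```

Document 1 shares no words with the query, so keyword search alone never returns it; the vector leg finds it, and RRF keeps it in the fused list. Because RRF only uses ranks, it needs no calibration between BM25 scores and cosine similarities.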

Enhancing Retrieval: Quality of retrieval & relevance tuning

When tuning for retrieval relevance and quality, there are several strategies to consider:

- Document processing: Experiment with chunk size and overlap to preserve context or continuity between chunks.

- Document understanding: Embeddings play a pivotal role in enabling pipelines to understand documents in relation to user queries. By transforming documents and queries into dense vector representations, embeddings facilitate the measurement of semantic similarity between them. Consider selecting an appropriate embedding model. For example, higher-dimensional embeddings can store more context information but may require more computational resources, while smaller-dimensional embeddings are more efficient but may sacrifice some context.

- Vector search configuration: Adjust the efConstruction parameter for HNSW to change the internal composition of the proximity graph, that is, the way the search algorithm organizes information internally. Think of this configuration like building a map: the parameter helps the algorithm decide how many landmarks to use and how far apart they should be, which affects how quickly and accurately it finds relevant information. Larger values generally build a higher-quality graph at the cost of longer indexing time.

- Query-time parameters: The number of results (k) determines how many search results are returned for each query. Increasing k returns more potential matches, which can be useful when you are trying to find the best answer among many possibilities, but it also passes more content, some of it less relevant, to the LLM.

- Enhancing hybrid search with semantic re-ranking: To further improve the quality of search results, you can add a semantic re-ranking step. This layer, also known as L2, takes a subset of the top results from the initial L1 retrieval (keyword, vector, or hybrid) and computes higher-quality relevance scores to reorder the result set. The L2 ranker can significantly improve the ranking of results already found by L1, which is critical for RAG applications because the best results need to be in the top positions. In Azure AI Search, this is done with a semantic ranker developed in partnership with Bing, which leverages vast amounts of data and machine learning expertise. The re-ranking step optimizes relevance by ensuring that the most relevant documents appear at the top of the list.
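The chunk size and overlap from the "Document processing" bullet can be illustrated with a minimal Python sketch. It splits on whitespace as a stand-in for a real tokenizer (an assumption; production pipelines usually count model tokens), and the function name `chunk_tokens` is hypothetical.

```python
def chunk_tokens(tokens, chunk_size, overlap):
    """Split a token list into fixed-size chunks that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap  # how far each new chunk starts after the previous one
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the text
    return chunks


text = "one two three four five six seven eight nine ten"
for chunk in chunk_tokens(text.split(), chunk_size=4, overlap=1):
    print(" ".join(chunk))
# one two three four
# four five six seven
# seven eight nine ten
```

The repeated boundary tokens ("four", "seven") are what preserve continuity between chunks; larger overlaps preserve more context but increase the number of chunks to embed and index.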

By unifying these retrieval techniques and configurations, hybrid search can handle queries more effectively than keyword or vector search alone. It excels at finding relevant documents even when users query with concepts, abbreviations, or phrasing different from the documents.
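The settings above come together in the index definition and the search request. The sketch below expresses both as Python dictionaries, based on our reading of the Azure AI Search REST API (2023-11-01 version); treat the property names as something to verify against the API version you target. The names `hnsw-config`, `vector-profile`, `contentVector`, and `my-semantic-config` are hypothetical, the numbers are example values rather than recommendations, and the semantic configuration would have to be defined on the index.

```python
import json

# Index-side fragment: HNSW settings live in the index's "vectorSearch" section.
vector_search_config = {
    "algorithms": [
        {
            "name": "hnsw-config",
            "kind": "hnsw",
            "hnswParameters": {
                "m": 4,                 # bi-directional links per node in the graph
                "efConstruction": 400,  # candidate list size while building the graph
                "efSearch": 500,        # candidate list size at query time
                "metric": "cosine",
            },
        }
    ],
    "profiles": [{"name": "vector-profile", "algorithm": "hnsw-config"}],
}

# Query-side body: keyword text plus a vector query (fused with RRF),
# then re-ranked by the semantic ranker.
query_embedding = [0.12, -0.03, 0.48]  # placeholder; a real embedding has the model's full dimension
search_request = {
    "search": "how do I reset my password",  # keyword (BM25) leg of the hybrid query
    "vectorQueries": [
        {
            "kind": "vector",
            "vector": query_embedding,
            "fields": "contentVector",
            "k": 50,  # nearest neighbors returned by the vector leg
        }
    ],
    "queryType": "semantic",                        # enable semantic (L2) re-ranking
    "semanticConfiguration": "my-semantic-config",
    "top": 10,                                      # results returned to the application
}

print(json.dumps(search_request, indent=2))
```

Note the split: efConstruction is fixed when the index is built, while k and the semantic re-ranking switch can be changed per query, which makes them cheaper to experiment with.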

A recent Microsoft study highlights that hybrid search with semantic re-ranking outperforms traditional vector search methods like dense and sparse passage retrieval across diverse question-answering tasks.

According to this study, key advantages of hybrid search with semantic re-ranking include:

- Higher answer recall: Returning higher-quality answers more often across varied question types.

- Broader query coverage: Handling abbreviations and rare terms that vector search struggles with.

- Increased precision: Merging results that combine keyword statistics with semantic relevance signals.

Now that we've covered retrieval tuning, let's turn our attention to evaluating generation and streamlining the RAG pipeline evaluation process. Azure AI prompt flow offers a comprehensive framework for streamlining RAG evaluation.

Azure AI prompt flow

Prompt flow streamlines RAG evaluation with a multifaceted approach: it efficiently compares prompt variations, integrates user feedback, and supports both traditional metrics and AI-assisted metrics that don't require ground truth data. This helps ensure tailored responses for diverse queries and simplifies retrieval and response evaluation, while providing comprehensive insights for improving RAG performance.

Both Azure AI Search and Azure AI prompt flow are available in Azure AI Studio, a unified platform for responsibly developing and deploying generative AI applications. The one-stop-shop platform enables developers to explore the latest APIs and models, access comprehensive tooling to support the generative AI development lifecycle, design applications responsibly, and deploy models, flows, and apps at scale with continuous monitoring.

With Azure AI Search, developers can connect models to their protected data for advanced fine-tuning and contextually relevant retrieval augmented generation. With Azure AI prompt flow, developers can orchestrate AI workflows with prompt orchestration, interactive visual flows, and code-first experiences to build sophisticated and customized enterprise chat applications.

Here is a video of how to build and deploy an enterprise chat application with Azure AI Studio.

Evaluating RAG applications in prompt flow revolves around three key aspects:

- Prompt variations: Prompt variation testing, informed by user feedback, ensures tailored responses for diverse queries, enhancing user intent understanding and addressing various query types effectively.

- Retrieval evaluation: This involves assessing the accuracy and relevance of the retrieved documents.

- Response evaluation: The focus is on measuring the appropriateness of the LLM-generated response when provided with the context.

The table below summarizes the evaluation metrics for RAG applications in prompt flow.

| Metric type | AI-assisted / ground-truth-based | Metric | Description |
|---|---|---|---|
| Generation | AI-assisted | Groundedness | Measures how well the model's generated answers align with information from the source data (user-defined context). |
| Generation | AI-assisted | Relevance | Measures the extent to which the model's generated responses are pertinent and directly related to the given questions. |
| Retrieval | AI-assisted | Retrieval Score | Measures the extent to which the retrieved documents are pertinent and directly related to the given questions. |
| Generation | Ground-truth-based | Accuracy, Precision, Recall, F1 score | Compares the RAG system's responses with a set of predefined, correct answers, for example by the ratio of words shared between the model's generation and the ground-truth answers. |
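The word-overlap idea in the last row can be written out directly. This is a minimal sketch of the common SQuAD-style token F1, not prompt flow's own evaluator, which may normalize text differently; the helper names `normalize` and `token_f1` are hypothetical.

```python
import re
from collections import Counter


def normalize(text):
    """Lowercase, replace punctuation with spaces, and split on whitespace."""
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def token_f1(prediction, ground_truth):
    """Return (precision, recall, F1) of shared words between answer and ground truth."""
    pred, gold = normalize(prediction), normalize(ground_truth)
    # Shared words counted with multiplicity (minimum count per word).
    shared = sum((Counter(pred) & Counter(gold)).values())
    if shared == 0:
        return 0.0, 0.0, 0.0
    precision = shared / len(pred)  # fraction of generated words found in the ground truth
    recall = shared / len(gold)     # fraction of ground-truth words found in the answer
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


p, r, f = token_f1("The capital of France is Paris.", "Paris is the capital")
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")  # precision=0.67 recall=1.00 f1=0.80
```

The example also shows the limitation discussed earlier: a correct answer phrased differently from the ground truth is penalized, which is one reason the AI-assisted metrics are useful.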

There are three AI-assisted metrics available in prompt flow that do not require ground truth. Traditional metrics based on ground truth are useful when testing RAG applications in development, but AI-assisted metrics offer enhanced capabilities for evaluating responses, especially when ground truth data is unavailable. These metrics provide valuable insights into the performance of the RAG application in real-world scenarios, enabling a more comprehensive assessment of user interactions and system behavior. The three metrics are:

- Groundedness: Groundedness ensures that the responses from the LLM align with the context provided and are verifiable against the available sources. It confirms factual accuracy and ensures that the conversation remains grounded when all responses meet this criterion.

- Relevance: Relevance measures the appropriateness of the generated answers to the user's query based on the retrieved documents. It assesses whether the response provides sufficient information to address the question and adjusts the score accordingly if the answer lacks relevance or contains unnecessary details.

- Retrieval Score: The retrieval score reflects the quality and relevance of the retrieved documents to the user's query. It breaks down the user query into intents, assesses the presence of relevant information in the retrieved documents, and calculates the fraction of intents with affirmative responses to determine relevance.
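The retrieval score's final step, the fraction of intents with affirmative answers, is simple arithmetic. In prompt flow an LLM both decomposes the query into intents and judges whether the documents cover each one; in this minimal sketch both are replaced by hypothetical stand-ins (a hand-written intent list and a naive `keyword_judge`), so it shows only the scoring logic, not prompt flow's implementation.

```python
from typing import Callable, List


def retrieval_score(intents: List[str], documents: List[str],
                    judge: Callable[[str, List[str]], bool]) -> float:
    """Fraction of query intents that the judge says the documents cover."""
    if not intents:
        return 0.0
    covered = sum(1 for intent in intents if judge(intent, documents))
    return covered / len(intents)


def keyword_judge(intent: str, documents: List[str]) -> bool:
    """Toy stand-in for an LLM judge: some document contains every intent word."""
    words = intent.lower().split()
    return any(all(w in doc.lower() for w in words) for doc in documents)


intents = ["refund policy", "refund deadline"]  # e.g. from "What is the refund policy and deadline?"
documents = [
    "Our refund policy allows returns of unused items.",
    "Shipping takes 3-5 days.",
]
print(retrieval_score(intents, documents, keyword_judge))  # 0.5
```

A score of 0.5 here says retrieval covered only half of what the user asked, regardless of how well the LLM later phrases its answer.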

Together, groundedness, relevance, and the retrieval score, along with prompt variant testing in prompt flow, provide insights into the performance of RAG applications. They enable refinement of RAG applications, addressing challenges such as information overload, incorrect responses, and insufficient retrieval, and help ensure more accurate responses throughout the end-to-end evaluation process.

Potential scenarios to evaluate RAG workflows

Now, let's explore three potential scenarios for evaluating RAG workflows and how prompt flow and Azure AI Search help in each of them.

Scenario 1: Successful Retrieval and Response

This scenario entails the seamless integration of relevant contextual information with accurate and appropriate responses generated by the RAG application: both the retrieval and the response are good.

In this scenario, all three metrics perform optimally. Groundedness ensures factual accuracy and verifiability, relevance ensures the appropriateness of the answer to the query, and the retrieval score reflects the quality and relevance of the retrieved documents.

Scenario 2: Inaccurate Response, Insufficient Retrieval

Here, the retrieval step does not return sufficiently relevant documents, and the response from the LLM is inaccurate as a result. Groundedness may suffer if the response cannot be verified against the provided sources, and relevance may be compromised if the response does not adequately address the user's query. A low retrieval score points to retrieval, rather than generation alone, as the source of the poor response.

To address this challenge, you can use Azure AI Search retrieval tuning to enhance the retrieval process and ensure that the most relevant and accurate documents are retrieved. By tuning the search parameters discussed in the section “Enhancing Retrieval: Quality of retrieval & relevance tuning,” you can significantly improve the retrieval score, increasing the likelihood of obtaining relevant documents for the given query.

Additionally, you can refine the LLM's prompt by incorporating a conditional statement within the prompt template, such as "if relevant content is unavailable and no conclusive solution is found, respond with 'unknown'." Leveraging prompt flow, which allows for the evaluation and comparison of different prompt variations, you can assess the merit of various prompts and select the most effective one for handling such situations. This approach ensures accuracy and honesty in the model's responses, acknowledging its limitations and avoiding the dissemination of inaccurate information.
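A minimal sketch of two prompt variants that differ only in that conditional fallback is shown below. The template wording is hypothetical, and `string.Template` is used only to keep the example stdlib-only; prompt flow's own prompt tools typically use Jinja2 templates instead.

```python
from string import Template

# Hypothetical variant without a fallback instruction.
VARIANT_0 = Template(
    "Answer the question using the context below.\n"
    "Context:\n$context\n\nQuestion: $question"
)
# Hypothetical variant that tells the model to say 'unknown' when context is missing.
VARIANT_1 = Template(
    "Answer the question using only the context below. If relevant content is "
    "unavailable and no conclusive solution is found, respond with 'unknown'.\n"
    "Context:\n$context\n\nQuestion: $question"
)


def build_prompt(template, question, chunks):
    """Fill a template with the retrieved chunks (or a marker when none came back)."""
    context = "\n---\n".join(chunks) if chunks else "(no documents retrieved)"
    return template.substitute(context=context, question=question)


print(build_prompt(VARIANT_1, "What is the refund deadline?", []))
```

Running both variants over the same test set and comparing groundedness and relevance shows whether the fallback instruction reduces unsupported answers without making the model refuse questions it could have answered.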

Scenario 3: Incorrect Response, Varied Retrieval Performance

In this scenario, the retrieval of relevant documents is followed by an inaccurate response from the LLM. Groundedness may be maintained if the responses remain verifiable against the provided sources. However, relevance is compromised as the response fails to address the user's query accurately. The retrieval score might indicate successful document retrieval, but the flawed response highlights the limitations of the LLM.

Evaluation in this scenario involves several key steps facilitated by Azure AI prompt flow and Azure AI Search:

- Acquiring Relevant Context: Embedding the user query to search a vector database for pertinent chunks is crucial. The success of retrieval relies on the semantic similarity of these chunks to the query and on their ability to provide relevant information for generating accurate responses (see section "Enhancing Retrieval: Quality of retrieval & relevance tuning").

- Optimizing Parameters: Adjusting parameters such as retrieval type (hybrid, vector, keyword), chunk size, and the k value is necessary to enhance RAG application performance (see section "Enhancing Retrieval: Quality of retrieval & relevance tuning").

- Prompt Variants: With prompt flow, developers can test and compare prompt variations to optimize response quality. By iterating on prompt templates and LLM selections, prompt flow enables rapid experimentation and refinement of prompts, ensuring that the retrieved content is used effectively to produce accurate responses (see section "How to evaluate RAG with Azure AI prompt flow").

- Refining Response Generation Strategies: Exploring different text extraction techniques and embedding models, alongside experimenting with chunking strategies, can further improve overall RAG performance (see section "Enhancing Retrieval: Quality of retrieval & relevance tuning").
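The parameter adjustments above amount to a search over configurations. The sketch below runs a simple grid sweep; the search space is hypothetical, and `evaluate` stands for whatever you plug in (for example, re-chunking and re-indexing, running the prompt flow evaluation on the test set, and returning the mean retrieval score). The `toy_evaluate` function uses made-up scoring purely so the sketch runs; its numbers are not measurements.

```python
from itertools import product

# Hypothetical search space; changing chunk size also requires re-chunking and re-indexing.
SEARCH_TYPES = ["keyword", "vector", "hybrid"]
CHUNK_SIZES = [256, 512, 1024]
K_VALUES = [3, 5, 10]


def sweep(evaluate):
    """Score every configuration with `evaluate` and return the best (score, config)."""
    best = None
    for search_type, chunk_size, k in product(SEARCH_TYPES, CHUNK_SIZES, K_VALUES):
        config = {"search_type": search_type, "chunk_size": chunk_size, "k": k}
        score = evaluate(config)
        if best is None or score > best[0]:  # strict ">" keeps the first of equal scores
            best = (score, config)
    return best


def toy_evaluate(config):
    """Made-up scoring so the sketch runs; NOT real measurements."""
    score = {"keyword": 0.0, "vector": 0.1, "hybrid": 0.2}[config["search_type"]]
    score += 0.1 if config["chunk_size"] == 512 else 0.0
    return score + 0.01 * min(config["k"], 5)


best_score, best_config = sweep(toy_evaluate)
print(best_config)
```

A full grid grows multiplicatively (27 runs here), so in practice it is common to fix the cheaper query-time settings last and sweep index-time settings such as chunk size sparingly.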

How to evaluate RAG with Azure AI prompt flow

In this section, let’s walk through the step-by-step process of testing RAG with prompt variants in prompt flow, using metrics such as groundedness, relevance, and retrieval score.

Prerequisite: Build RAG using Azure Machine Learning prompt flow.

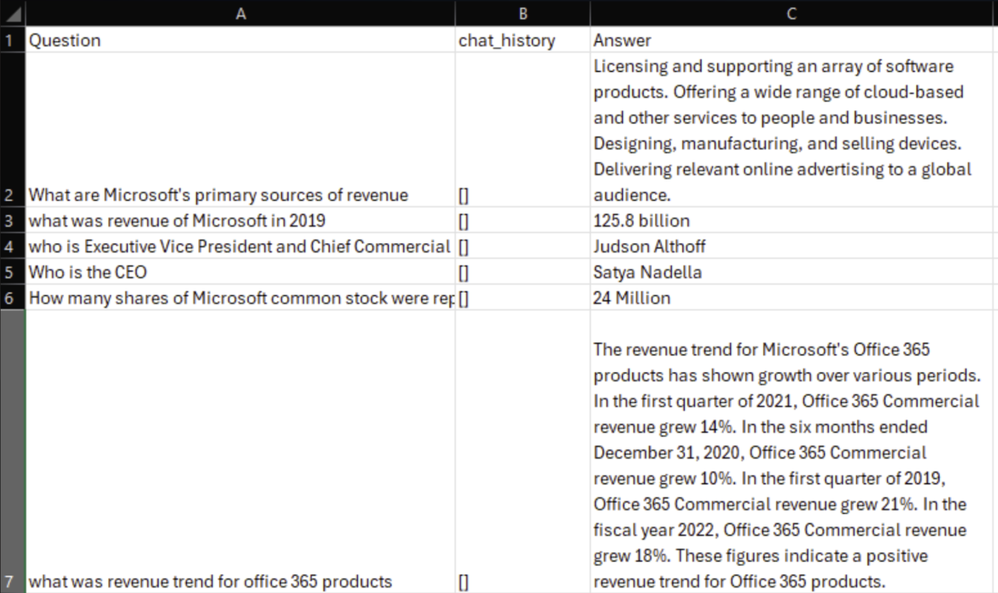

1. Prepare Test Data: Ideally, you should prepare a test dataset of 50-100 samples, but for this article we prepare a test dataset with just a few samples. Save it as a CSV file.

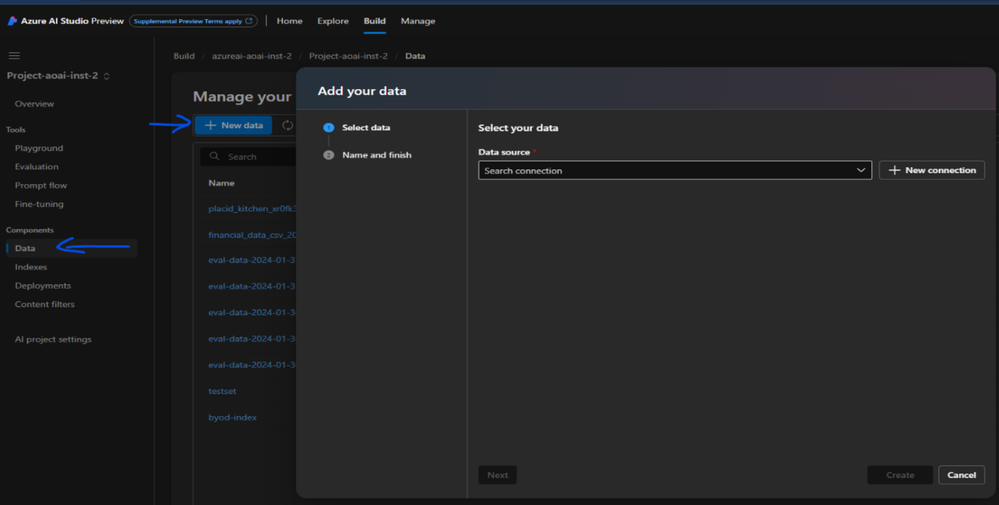

2. Add test data to Azure AI Studio: In your AI Studio project, under Components, select Data -> New data.

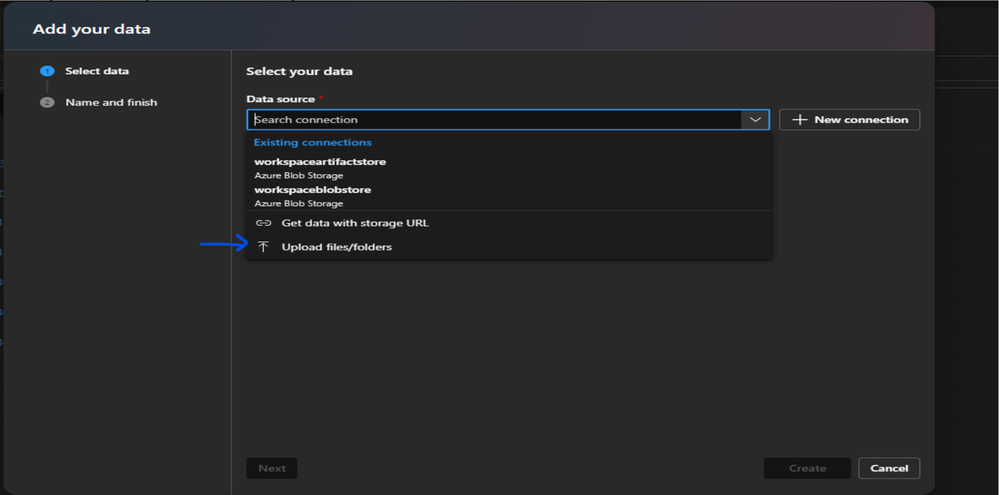

3. Select Upload files/folders and upload the test data from a local drive. Click Next, provide a name for your data location, and click Create.

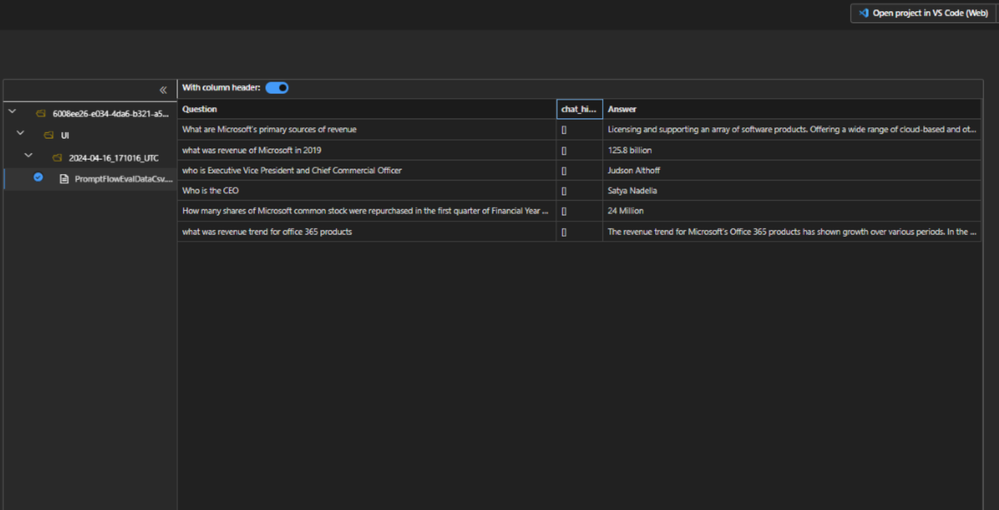

4. Once the test data is uploaded you can see its details.

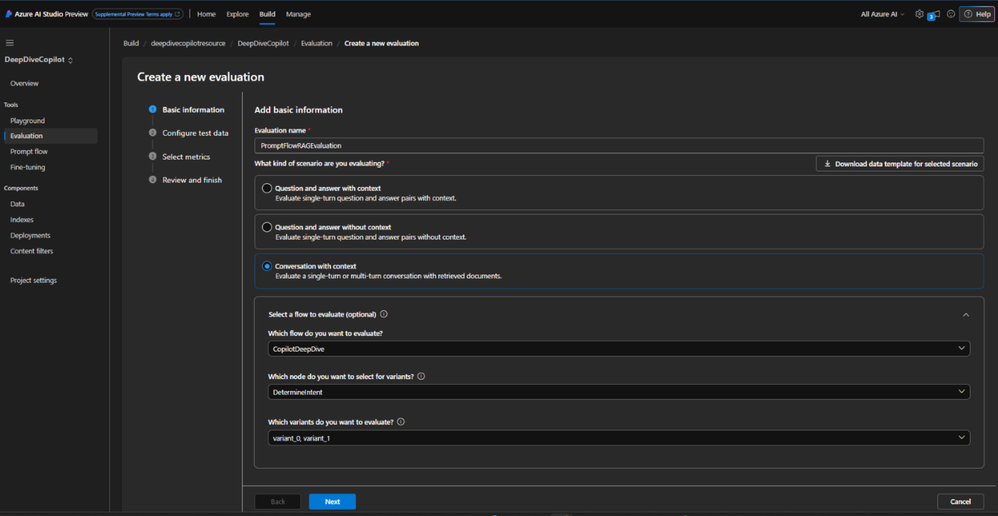

5. Evaluate the flow: Under Tools -> Evaluation, click on New evaluation. Choose Conversation with context and select a flow you want to evaluate. Here we are testing two variants of prompt: Variant_0 and Variant_1. Click on Next.

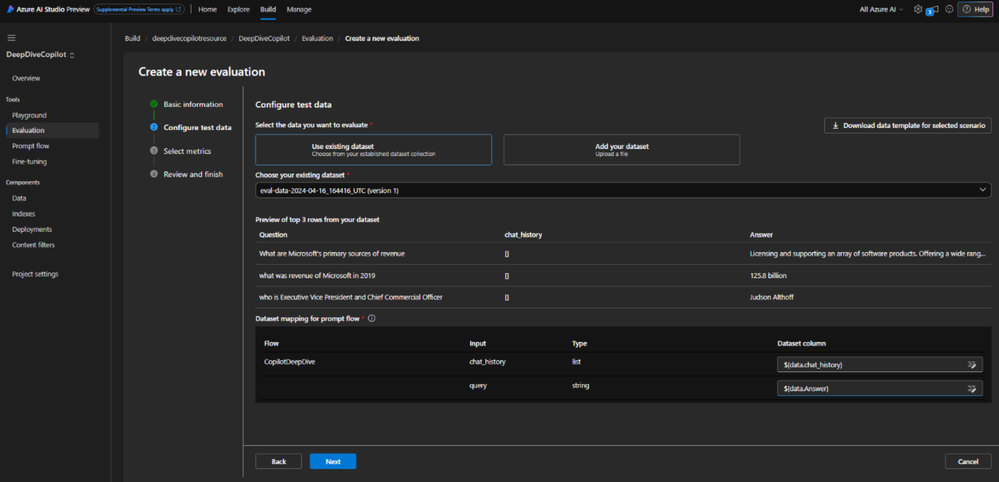

6. Configure the test data. Click on Next.

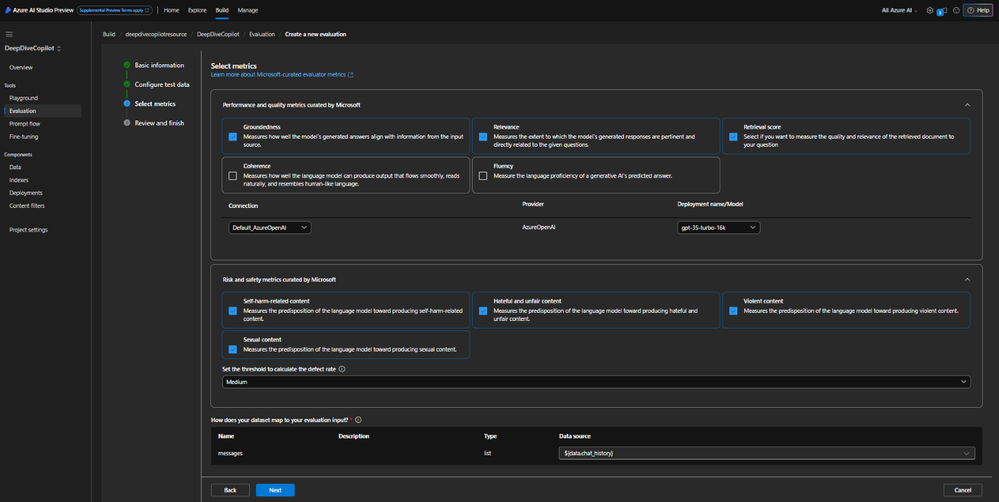

7. Under Select Metrics, the RAG metrics are automatically selected based on the scenario you have chosen (see the metrics table above for details). Choose your Azure OpenAI Service instance and model and click Next.

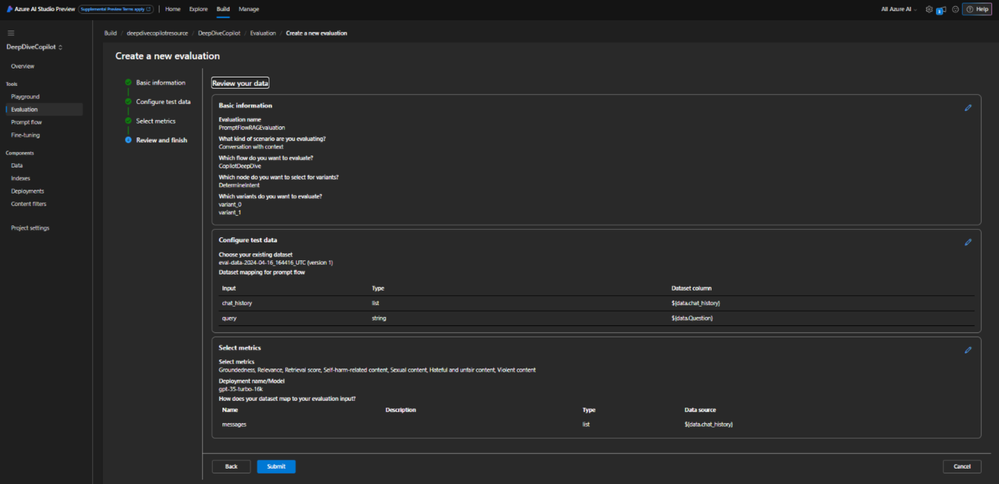

8. Review and finish. Click on Submit.

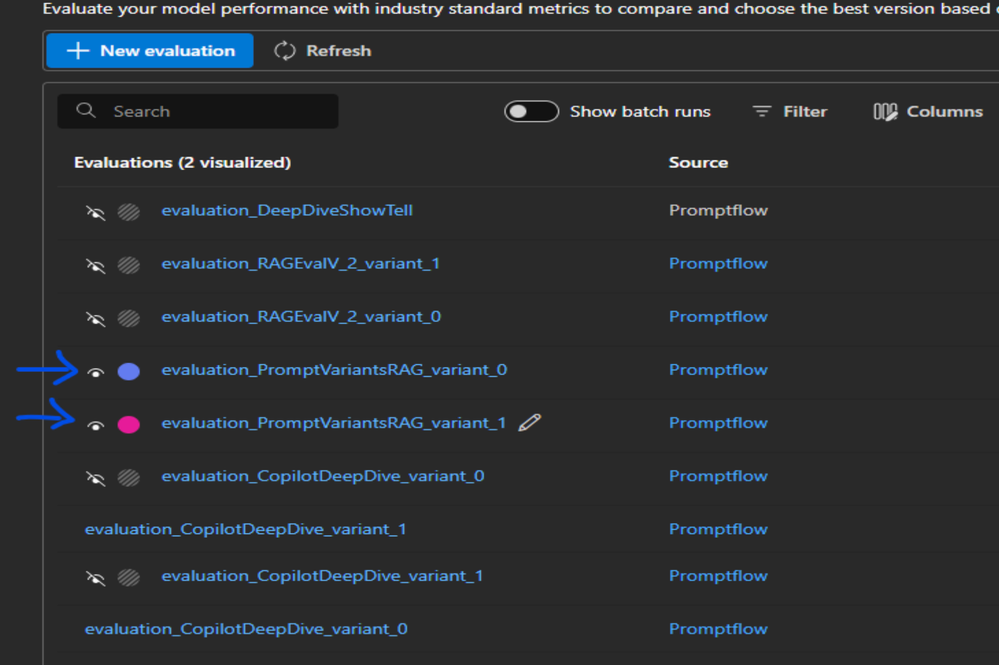

9. Once the evaluation is complete it will be displayed under Evaluations.

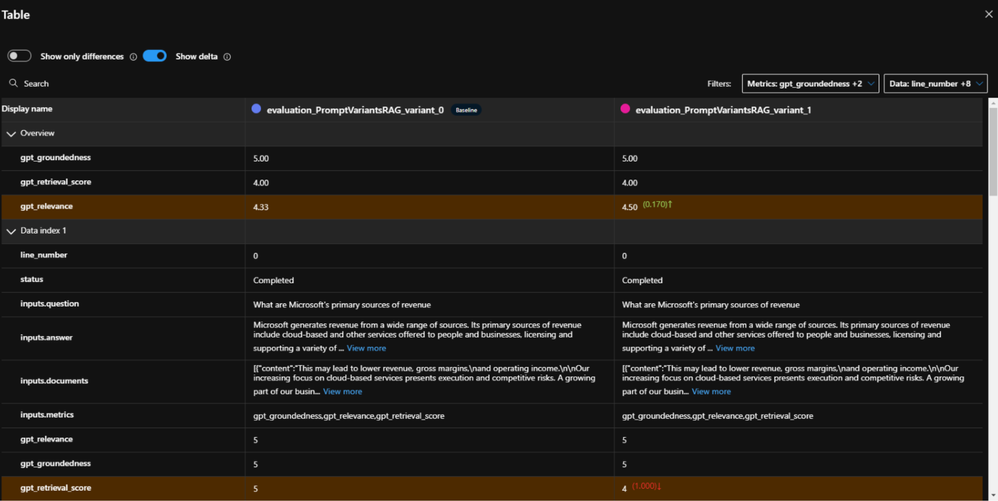

10. Check the results by clicking on the evaluation. You can compare the two variants of prompts by comparing their metrics to see which prompt variant is performing better.

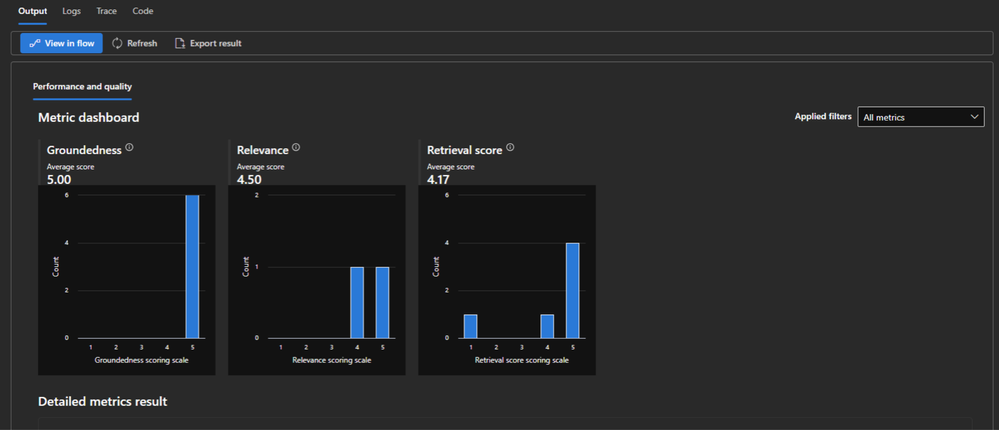

11. You can check the result of individual prompt variant evaluation metrics under the Output tab -> Metrics dashboard.

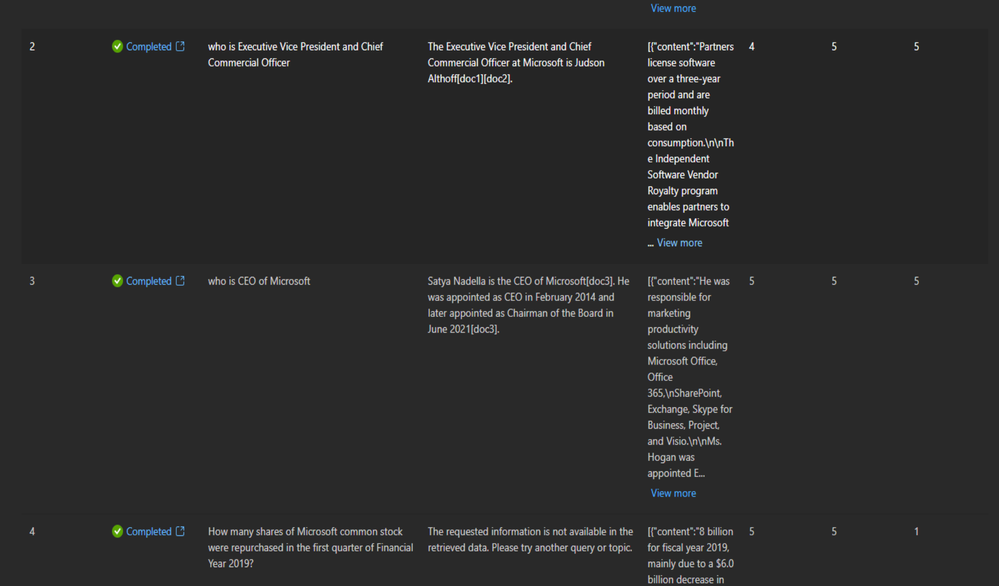

12. Under the Output tab, you can also see a detailed view of the metrics under Detailed metrics result.

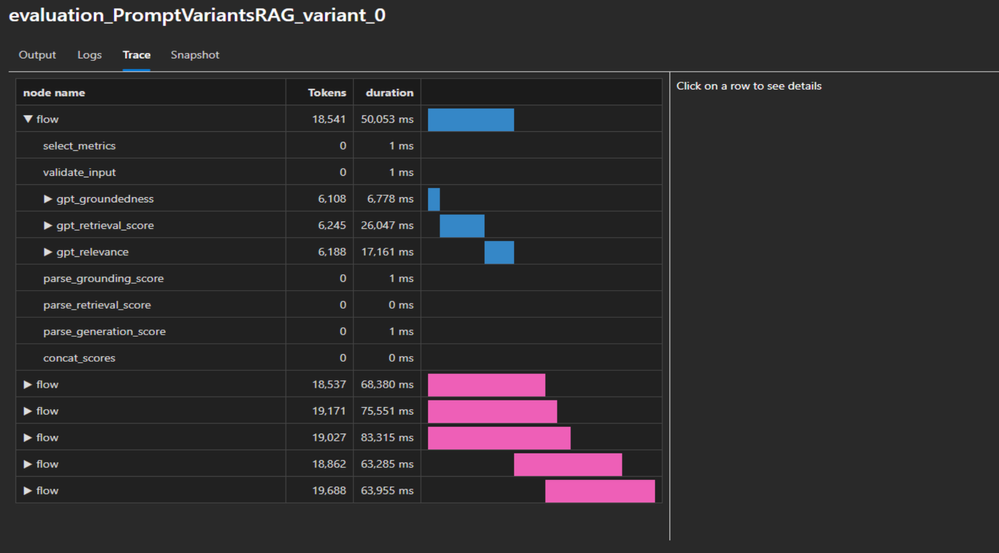

13. Under the Trace tab, you can trace how many tokens were generated and the duration for each test question.
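For step 1, the test file can also be generated with a short script, which makes it easier to grow toward the recommended 50-100 samples. The column names `question` and `ground_truth`, the file name, and the rows below are hypothetical; map your actual columns to the flow's inputs when you configure the test data in step 6. The ground-truth column is only needed for the ground-truth-based metrics.

```python
import csv

# Hypothetical rows; replace them with real questions from your domain.
rows = [
    {"question": "How do I reset my password?",
     "ground_truth": "Use the Forgot password link on the sign-in page."},
    {"question": "What is the refund deadline?",
     "ground_truth": "Refunds can be requested within 30 days of purchase."},
]

with open("rag_test_data.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.DictWriter(f, fieldnames=["question", "ground_truth"])
    writer.writeheader()
    writer.writerows(rows)

# Read the file back to confirm it round-trips before uploading it.
with open("rag_test_data.csv", newline="", encoding="utf-8") as f:
    loaded = list(csv.DictReader(f))
print(len(loaded), "rows:", [r["question"] for r in loaded])
```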

Conclusion:

The integration of Azure AI Search into the RAG pipeline can significantly improve retrieval scores. This enhancement ensures that retrieved documents are more aligned with user queries, thus enriching the foundation upon which responses are generated. Furthermore, by integrating Azure AI Search and Azure AI prompt flow in Azure AI Studio, developers can test and optimize response generation to improve groundedness and relevance. This approach not only elevates RAG application performance but also fosters more accurate, contextually relevant, and user-centric responses.