This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

GoEX: a safer way to build autonomous Agentic AI applications

The Gorilla Execution Engine, from a paper by the UC Berkeley researchers behind Gorilla LLM and RAFT, helps developers create safer and more private Agentic AI applications

By Cedric Vidal, Principal AI Advocate, Microsoft

“In the future every single interaction with the digital world will be mediated by AI”

Yann LeCun, Lex Fridman podcast episode 416 (@ 2:16:50).

In the rapidly advancing field of AI, Large Language Models (LLMs) are breaking new ground. Once primarily used for providing information within dialogue systems, these models are now stepping into a realm where they can actively engage with tools and execute actions on real-world applications and services with little to no human intervention. However, this evolution comes with significant risks. LLMs can exhibit unpredictable behavior, and allowing them to execute arbitrary code or API calls raises legitimate concerns. How can we trust these agents to operate safely and responsibly?

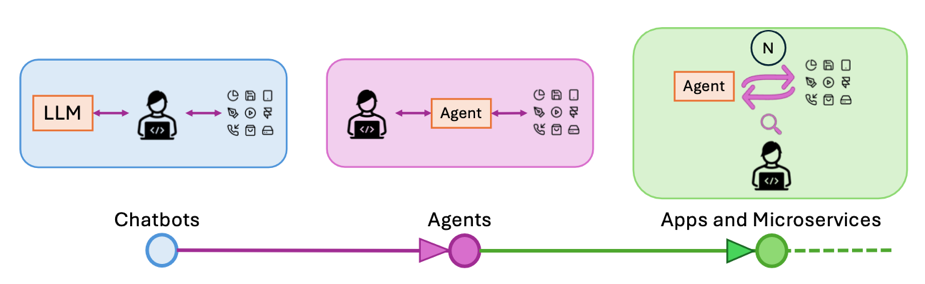

Figure 1 from the GoEX paper, illustrating the evolution of LLM apps from simple chatbots to conversational agents and finally autonomous agents

Enter GoEX (Gorilla Execution Engine), a project headed by researcher Shishir Patil from UC Berkeley. Patil's team has recently released a comprehensive paper titled “GOEX: Perspectives and Designs to Build a Runtime for Autonomous LLM Applications” which addresses these very concerns. The paper proposes a novel approach to building LLM agents capable of safely interacting with APIs, thus opening up a world of possibilities for autonomous applications.

The Challenge with LLMs

The problem with LLMs lies in their inherent unpredictability. They can generate a wide range of behaviors and misinterpret human requests.

A lot of progress has already been made to prevent the generation of harmful content and to prevent jailbreaks. Azure AI Content Safety is a great example of a practical, production-ready system you can use today to detect and filter violent, hateful, sexual and self-harm content.

That being said, when it comes to generating API calls, those filters don’t apply. Indeed, an intended and completely harmless API call in one context might be unintended and have catastrophic consequences in a different context if it doesn’t align with the intent of the user.

When an AI is asked, "Send a message to my team saying I'll be late for the meeting", it could very well misunderstand the context and, instead of sending a message saying the user will be late, send a calendar update that reschedules the entire team meeting to a later time. While this API call is harmless in itself, it does not match the user's intent: it results from a misunderstanding by the AI, and it will cause confusion and disrupt everyone's schedule. The blast radius here is somewhat limited, but in general the AI could not only wreak havoc on anything the user has granted it access to, it could also inadvertently leak any API keys it has been entrusted with.

In the context of agentic applications, we therefore need a different solution.

“Gorilla AI wreaking havoc in the workplace” generated by DALL-E 3

But first, let’s see how a rather harmless request by a user to send a tardiness message to their team could wreak havoc. The user asks the AI: “Send a message to my team telling them I will be an hour late to the meeting”.

Intended Human Order Python Code (sending a message to the team):

In the following script, the send_email function correctly sends an email to the team members saying that the user will be an hour late for the meeting.
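A minimal sketch of what such a script could look like. Only the `send_email` name comes from the text; the team addresses, the sender address and the in-memory `OUTBOX` that stands in for a real mail server are illustrative assumptions.

```python
from email.message import EmailMessage

# Hypothetical team addresses and an in-memory outbox standing in for a mail server.
TEAM = ["alice@example.com", "bob@example.com"]
OUTBOX: list[EmailMessage] = []


def send_email(recipients: list[str], subject: str, body: str) -> EmailMessage:
    """Build the message and 'send' it by appending it to the outbox."""
    msg = EmailMessage()
    msg["From"] = "user@example.com"
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    msg.set_content(body)
    OUTBOX.append(msg)  # a real script would pass msg to smtplib.SMTP.send_message
    return msg


sent = send_email(TEAM, "Running late", "I will be an hour late for the meeting.")
```

The action matches the request exactly: one message goes out and nothing else changes.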

Clumsy AI Interpretation Python Code (rescheduling the meeting):

The following Python code snippet appears to be sending a message to notify the team of the user's tardiness but instead clumsily reschedules the meeting due to a misinterpretation.

In this code snippet, the function send_message_to_team misleadingly suggests that it sends a message. However, within the function, there's an unintentional call to reschedule the meeting instead of sending the intended message. The comments and the print statement in the try block are misleading the reader into thinking the function is doing the right thing, but the actual executed code performs the unintended action.
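A sketch of how such a misleading function might look. Only the `send_message_to_team` name comes from the text; `send_message`, `reschedule_meeting` and the in-memory state are hypothetical stand-ins for real messaging and calendar APIs.

```python
# Hypothetical in-memory stand-ins for a messaging API and a calendar API.
sent_messages: list[str] = []
calendar = {"team-meeting": "10:00"}


def send_message(text: str) -> None:
    sent_messages.append(text)


def reschedule_meeting(meeting_id: str, new_time: str) -> None:
    calendar[meeting_id] = new_time


def send_message_to_team() -> None:
    """Notify the team that the user will be an hour late."""
    try:
        # Let the team know about the delay.
        reschedule_meeting("team-meeting", "11:00")  # the bug: moves the meeting for everyone
        print("Message sent to the team: I will be an hour late.")
    except Exception as exc:
        print(f"Could not notify the team: {exc}")


send_message_to_team()
print(sent_messages)  # nothing was actually sent
print(calendar)       # the meeting was moved instead
```

The name, docstring and log line all claim a message was sent, so a reviewer skimming the code could easily approve it.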

It's impractical to have humans validate each function call or piece of code AI generates. This raises a plethora of questions: How do we control the potential damage, or "blast radius," if an LLM executes an unwanted API call? How can we safely pass credentials to LLMs without compromising security?

The Current State of Affairs

As of now, the actions generated by LLMs, be it code or function calls, are verified by humans before execution. This method is fraught with challenges, not least because code comprehension is notoriously difficult, even for experienced developers. What’s more, as AI assistants become more prevalent, the volume of AI-generated actions will soon become too large to verify manually.

GoEX: A Proposed Solution

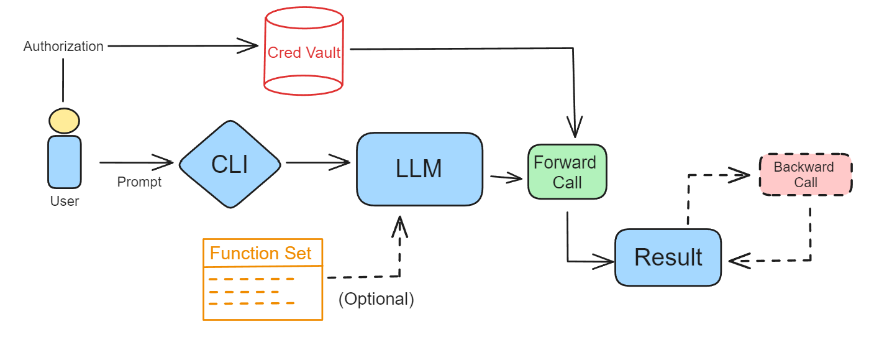

GoEX aims to unlock the full potential of LLM agents to interact with applications and services while minimizing human intervention. This engine is designed to handle the generation and execution of code, manage credentials for accessing APIs, hide those credentials from the LLM and, most importantly, ensure execution security. But how does GoEX achieve this level of security?

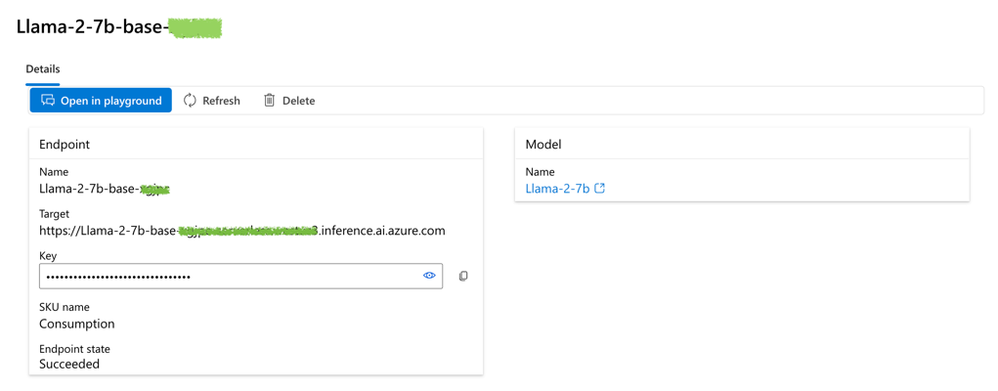

Running GoEX with Meta Llama 2 deployed on Azure Model as a Service

But before explaining how GoEX works, let’s run it. For this, let’s use Meta Llama 2 deployed on Azure AI Model as a Service / Pay As You Go. This is a fully managed deployment platform where you pay by the token, only for what you use. It is a very cost-efficient way to experiment, as you don’t pay for infrastructure you don’t use or forget to decommission.

Check out the project locally or open it in GitHub Codespaces (recommended).

Follow the GoEX installation procedure.

Deploy Llama 2 7b or bigger on Azure AI with Model as a Service / Pay As You Go using this procedure.

Go to the deployed model details page:

Llama 2 endpoint details page showing Target URL and Key token values

Edit the ./goex/.env file and add the following lines, replacing the values with the ones found on the endpoint details page from the previous step:

Note: The target URL needs to be suffixed with “/v1”. This is very important because GoEX relies on the OpenAI-compatible API.
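As a rough illustration of the “/v1” requirement, here is a small helper that normalizes a target URL. The variable names `OPENAI_BASE_URL` and `OPENAI_API_KEY` are the ones the official OpenAI Python client reads; that GoEX's .env file uses the same names is an assumption here, and the URL and key are placeholders.

```python
import os


def openai_compatible_base_url(target_url: str) -> str:
    """Return the endpoint URL with exactly one trailing '/v1' segment."""
    url = target_url.rstrip("/")
    return url if url.endswith("/v1") else url + "/v1"


# Placeholder values; copy the real ones from the endpoint details page.
target_url = "https://my-llama-endpoint.example.com"
os.environ["OPENAI_BASE_URL"] = openai_compatible_base_url(target_url)
os.environ["OPENAI_API_KEY"] = "<key from the endpoint details page>"
```

The helper is idempotent, so it is safe to apply whether or not the URL already ends in “/v1”.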

Now that you’re set up, you can go ahead and try the examples from the GoEX README.

Note: We used Llama 2 deployed on Model as a Service / Pay As You Go, but you can try any other model from the catalog, or deploy on your own infrastructure using a real-time inference endpoint with a GPU-enabled VM (here is the procedure).

Generating forward API calls

How does GoEX generate REST API calls? It uses an LLM with the following carefully crafted few-shot learning prompt (see source code):

Note how GoEX instructs the LLM to throw an exception if the action cannot be completed. This is how GoEX detects that something went wrong.
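To make the idea concrete, here is a simplified sketch of a few-shot prompt builder and of exception-based failure detection. It is not the actual GoEX prompt: the wording, the single example and the function names `build_forward_prompt` and `run_generated` are all made up for illustration.

```python
# Hypothetical example pair; GoEX's real prompt lives in its source code.
EXAMPLES = [
    (
        "Get the list of my repositories",
        'import requests\n'
        'resp = requests.get("https://api.example.com/repos")\n'
        'resp.raise_for_status()',
    ),
]


def build_forward_prompt(user_request: str) -> str:
    """Assemble instructions, worked examples and the new task into one prompt."""
    parts = [
        "You write Python code that performs the requested action with a REST API call.",
        "If the action cannot be completed, raise an exception explaining why.",
        "",
    ]
    for task, code in EXAMPLES:
        parts += [f"Task: {task}", "Code:", code, ""]
    parts += [f"Task: {user_request}", "Code:"]
    return "\n".join(parts)


def run_generated(code: str) -> tuple[bool, str]:
    """Run generated code and report failure through any raised exception.

    exec() on the host is for illustration only; generated code should be sandboxed.
    """
    try:
        exec(code, {})
        return True, ""
    except Exception as exc:
        return False, str(exc)


prompt = build_forward_prompt("Send 'I will be an hour late' to the team channel")
```

Because failure is signaled by an exception, the caller needs no model-specific parsing to know that the action did not go through.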

Framework for undo actions

Gorilla AI cleaning up the mess created (Generated by DALL-E 3, including typos)

The key lies in GoEX's ability to create reverse calls that can undo any unwanted effects of an action. By implementing this 'undo' feature, known as the Compensating Transaction pattern in the microservices literature, GoEX makes it possible to contain the blast radius of an undesirable action. This is complemented by post-facto validation, where the effects of the code generated by the LLM or of the invoked actions are assessed to determine whether they should be reversed. In their blog post, the UC Berkeley team shares a video demonstrating undo in action on a message sent through Slack. While this example is trivial, it shows the fundamental building blocks at work.

But where do undo operations come from? The approach chosen by GoEX is twofold. First, if the API has a known undo operation then GoEX will just use it. If it doesn’t, after having generated the forward call, GoEX will generate the undo operation for the given input prompt and generated forward call.
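This twofold lookup can be sketched as follows. The `KNOWN_UNDOS` registry, the string representation of calls and the `fake_llm` stand-in are hypothetical and only illustrate the control flow, not how GoEX stores or generates undo operations.

```python
from typing import Callable

# Hypothetical registry mapping a forward operation to its known undo operation.
KNOWN_UNDOS = {
    "POST /messages": "DELETE /messages/{id}",
    "POST /events": "DELETE /events/{id}",
}


def resolve_undo(
    user_prompt: str,
    forward_call: str,
    generate_undo: Callable[[str, str], str],
) -> tuple[str, str]:
    """Return (undo_call, origin), where origin is 'known' or 'generated'."""
    known = KNOWN_UNDOS.get(forward_call)
    if known is not None:
        return known, "known"
    # No known undo: ask the LLM, giving it both the prompt and the forward call.
    return generate_undo(user_prompt, forward_call), "generated"


def fake_llm(user_prompt: str, forward_call: str) -> str:
    """Stand-in for the LLM call; a real one would send a reverse-action prompt."""
    return f"UNDO({forward_call})"


undo_call, origin = resolve_undo("Update my profile", "PUT /profile", fake_llm)
```

Preferring a known undo over a generated one keeps the LLM out of the loop whenever the API already documents how to reverse an operation.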

It uses the following prompt (see source code):

Note how this reverse action prompt takes as input not only the original user prompt but also the forward call generated previously. This allows the LLM to learn in context from the user intent as well as what was used for the forward call to craft a call reversing its effect. Also, note how the prompt invites the LLM to bail cleanly with an explanation if it cannot come up with a reverse call.

One possible improvement is to learn in context not only from the forward call but also from its output. Indeed, the API backend might produce an outcome that cannot be deduced from the forward call alone. This would require obtaining that outcome, either from the output of the forward call if available, from a known API call that fetches it, or from a generated one, and then using it as input when generating the reverse API call.

Also, in addition to using known undo actions or generating them, when the underlying system supports atomicity, such as for transactional databases, GoEX will automatically leverage rollbacks.
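For a transactional store, the rollback idea can be illustrated with Python's built-in `sqlite3` module. The `meetings` table and the `run_with_rollback` helper are made up for this example and do not reflect GoEX's database integration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE meetings (id INTEGER PRIMARY KEY, start TEXT)")
conn.execute("INSERT INTO meetings VALUES (1, '10:00')")
conn.commit()


def run_with_rollback(conn: sqlite3.Connection, sql: str, params: tuple, keep: bool) -> None:
    """Run a statement, then commit it if keep is true or roll it back otherwise."""
    conn.execute(sql, params)  # sqlite3 opens a transaction implicitly for UPDATE
    if keep:
        conn.commit()
    else:
        conn.rollback()


# The unwanted reschedule is executed, judged undesirable, and rolled back.
run_with_rollback(conn, "UPDATE meetings SET start = ? WHERE id = ?", ("11:00", 1), keep=False)
start = conn.execute("SELECT start FROM meetings WHERE id = 1").fetchone()[0]
```

Here no reverse call has to be written or generated at all: the database itself restores the previous state.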

Deciding whether a forward call should be undone

How does GoEX make that decision? Currently, GoEX delegates that ultimate arbitration to the user. Delegating to the LLM is a bridge that has not yet been crossed. Indeed, the current implementation asks the user whether to confirm or undo the operation, displaying the undo operation and asking the user to judge the quality of the reverse operation.
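The confirm-or-undo flow described above might be sketched like this. The function name `execute_with_confirmation`, the injected `ask_user` callback and the toy `channel` list are assumptions for illustration, not GoEX's actual interface.

```python
from typing import Callable


def execute_with_confirmation(
    forward: Callable[[], None],
    undo: Callable[[], None],
    undo_description: str,
    ask_user: Callable[[str], bool],
) -> bool:
    """Run forward, then keep or revert it based on the user's answer."""
    forward()
    question = f"Keep this change? If not, this will run: {undo_description}"
    if ask_user(question):
        return True
    undo()
    return False


# Hypothetical state and actions for illustration.
channel: list[str] = []


def post() -> None:
    channel.append("I will be an hour late")


def unpost() -> None:
    channel.pop()


# Simulate a user who rejects the action, which triggers the undo.
kept = execute_with_confirmation(post, unpost, "delete the last message", lambda q: False)
```

In an interactive session, `ask_user` could wrap `input()`. Passing it in as a callback is also what would let an LLM-based judge replace the human later on.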

An interesting direction for future research is to explore how GoEX could delegate the undo decision-making process to an LLM instead of asking the user. This would require the LLM to evaluate the quality and correctness of the generated forward actions as well as the observed state of the system, and to compare them with the desired state of the system expressed in the initial user prompt.

Privacy through redaction of sensitive data

One of the challenges of using LLMs to generate and execute code is ensuring the security and privacy of the API secrets and credentials required to access various applications and services. GoEX addresses this by redacting sensitive data: before anything is handed to the LLM, real secrets are replaced with dummy but credible ones (called symbolic credentials in the paper), such as fake tokens, passwords, card numbers and social security numbers. The real values are then substituted back into the code generated by the LLM just before it is executed. One of the frameworks mentioned by the paper is Microsoft Presidio. This way, the LLM never has access to the actual secrets and credentials, and cannot leak or misuse them, which reduces the risk of breaches or attacks on agentic applications.
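Here is a deliberately simplified sketch of the redact-then-restore round trip. It uses plain string replacement rather than Presidio, and the `CredentialVault` class, the dummy token format and the sample token are invented for illustration.

```python
import secrets


class CredentialVault:
    """Maps dummy placeholder secrets to the real ones they stand for."""

    def __init__(self) -> None:
        self._real_by_dummy: dict[str, str] = {}

    def redact(self, text: str, real_secret: str) -> str:
        """Replace a real secret with a freshly generated, credible-looking dummy."""
        dummy = "sk-" + secrets.token_hex(16)
        self._real_by_dummy[dummy] = real_secret
        return text.replace(real_secret, dummy)

    def unredact(self, text: str) -> str:
        """Put the real secrets back, just before execution."""
        for dummy, real in self._real_by_dummy.items():
            text = text.replace(dummy, real)
        return text


vault = CredentialVault()
real_token = "xoxb-real-slack-token"  # made-up value
prompt = vault.redact(f"Use token {real_token} to post to Slack", real_token)

# The LLM only ever sees `prompt`; its output is faked here by reusing the dummy.
dummy = prompt.split()[2]
generated = f'headers = {{"Authorization": "Bearer {dummy}"}}'
runnable = vault.unredact(generated)
```

The real token appears only in `runnable`, which is never sent back to the model.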

Diagram from the GoEX blog post illustrating how calls are unredacted using credentials from a Vault after the LLM generation phase

Sandboxing generated calls

The generated code that calls the APIs is executed inside a Docker container (see source code). This is an improvement over executing the code directly on the user’s machine, as it prevents basic exploits. However, as mentioned in the paper, the Docker runtime can still be jailbroken, and additional sandboxing tools could be integrated into GoEX to make it safer.
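As an illustration of the general idea, the sketch below builds a `docker run` command for a throwaway container. The image, the resource limits and the function names are assumptions chosen for this example; this is not GoEX's actual invocation.

```python
import subprocess


def build_sandbox_command(code: str, image: str = "python:3.11-slim") -> list[str]:
    """Build a docker run command that executes code in a throwaway container."""
    return [
        "docker", "run",
        "--rm",              # delete the container when it exits
        "--memory", "256m",  # cap memory usage
        "--cpus", "1",       # cap CPU usage
        "--read-only",       # mount the root filesystem read-only
        image,
        "python", "-c", code,
    ]


def run_in_sandbox(code: str, timeout: int = 60) -> subprocess.CompletedProcess:
    """Run the code in the container; requires a local Docker installation."""
    return subprocess.run(
        build_sandbox_command(code), capture_output=True, text=True, timeout=timeout
    )


cmd = build_sandbox_command("print('hello from the sandbox')")
```

Network access is left enabled because the generated code has to reach the target APIs. That is one reason container isolation alone is not a complete defense.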

Mitigating Risks with GoEX for more reliable and safer agentic applications

GoEX actively addresses the Responsible AI principle of "Reliability and Safety" by incorporating an innovative 'undo' mechanism within its system. This key feature allows for the reversion of actions executed by the AI, which is crucial in maintaining operational safety and enhancing overall system reliability. It acknowledges the fallibility of autonomous agents and ensures there is a contingency in place to maintain user trust.

More privacy and security means wider adoption of agentic applications

Another important Responsible AI principle is "Privacy and Security". In that regard, GoEX adopts a stringent approach by architecting its systems to conceal sensitive information such as secrets and credentials from the LLM. By doing so, GoEX prevents the AI from inadvertently exposing or misusing private data, reinforcing its commitment to safeguarding user privacy and ensuring a secure AI-operating environment. This careful handling of confidential information underlines the project's dedication to upholding these essential facets of Responsible AI.

Conclusion

In conclusion, while the challenges of ensuring the reliability of LLM-generated code and the security of API interactions remain complex and ongoing, GoEX's approach is a notable advancement in addressing these issues. The project acknowledges that complete solutions are a work in progress, yet it sets a precedent for the level of diligence and foresight required to move closer to these ideals. By focusing on these critical areas, GoEX contributes valuable insights and methodologies that serve as stepping stones for the AI community, signaling a directional shift towards more trustworthy and secure AI agents.

Note: The features and methods described in this blog post and the paper are still under active development and research. They are not all currently implemented, available or ready for prime time in the GoEX GitHub repository.