This post has been republished via RSS; it originally appeared at: Core Infrastructure and Security Blog articles.

In the lush grass of my backyard, Raven, my miniature Schnauzer, finds her bliss. She lies there, soaking in the sun’s rays, much like the data we export from Defender for Endpoint into Azure Storage. It sits there, in the cloud’s protective realm, unassuming yet essential. Raven, ever alert for critters that might encroach on her territory, is like the data that lies in wait—seemingly still but always ready to serve our needs.

This blog will lead you through the steps of moving crucial data to a secure spot, just as Raven seeks out her sunny haven. We’ll explore the technical aspects of data exportation, ensuring it’s accessible for future use, similar to Raven’s watchful relaxation in her favorite sunny spot. Join me in learning how our digital guardians keep our valuable information safe, offering a sense of security as dependable as Raven’s attentive repose in her cherished sunny patch.

Introduction

Microsoft XDR is a comprehensive security solution that includes several component services:

- Microsoft Defender for Endpoint (MDE): Provides robust endpoint protection and response capabilities. It collects and analyzes security data from various sources, including device events, alerts, incidents, and vulnerabilities. The default retention period for this data is 30 days accessible via a query, and up to 180 days visible across the portal.

- Microsoft Defender for Identity: Protects against advanced targeted attacks by automatically analyzing, learning, and identifying normal and abnormal entity behavior. The data retention period for Microsoft Defender for Identity is 90 days for audit trail. However, Defender for Identity is gradually rolling out extended data retentions on identity details to more than 30 days.

- Microsoft Defender for Office 365: Protects your organization against malicious threats posed by email messages, links (URLs), and collaboration tools. By default, data across different features in Microsoft Defender for Office 365 is retained for a maximum of 30 days.

- Microsoft Defender for Cloud Apps: Provides visibility into your cloud apps and services, provides sophisticated analytics to identify and combat cyber threats, and enables you to control how your data travels. Defender for Cloud Apps retains data as follows: Activity log: 180 days, Discovery data: 90 days, Alerts: 180 days, Governance log: 120 days.

These components work together to provide a comprehensive view of data across your organization, allowing for robust threat detection, investigation, and response. However, as data ages out in Microsoft XDR, the ability to conduct long-term historical analysis can be lost.

The data ingested into the Log Analytics Workspace used by Microsoft Sentinel from Microsoft XDR includes all incidents and alerts, along with their alerts, entities, and other relevant information. This data also includes alerts from Microsoft XDR’s component services such as Microsoft Defender for Endpoint, Microsoft Defender for Identity, Microsoft Defender for Office 365, and Microsoft Defender for Cloud Apps. The connector also lets you stream advanced hunting events from all of the above Defender components into the Log Analytics Workspace. This allows you to copy those Defender components’ advanced hunting queries into Microsoft Sentinel, enrich Sentinel alerts with the Defender components’ raw event data to provide additional insights, and store the logs with increased retention in Log Analytics.

If you don’t use Sentinel or you would like to have the ability for further processing, reporting, or investigation, you may want to export the security data from MDE to an external storage or analytics platform. One such platform is Azure Data Explorer (ADX), a fast and scalable data exploration service that allows you to query and visualize the security data.

From a cost perspective, archiving data can lead to substantial savings. Storing large volumes of data in the Log Analytics Workspace used by Sentinel can be expensive, especially over the long term. By archiving aged data to ADX, you can move it to a more cost-effective storage solution while still retaining the ability to access and analyze it as needed. This approach allows you to balance between the need for immediate access to recent data in the Log Analytics Workspace, and the cost-effective, long-term storage of older data in ADX Cluster.

Once you ingest data into the Log Analytics Workspace used by Sentinel, you can retain that data for 90 days at no additional cost. You can also purchase interactive tier retention for over 90 days up to two full years. Therefore, depending on your specific needs and budget, Microsoft Sentinel could provide more flexibility in terms of data retention.

Therefore, archiving data from Microsoft XDR to ADX before it ages out is a strategic move that enhances your security posture and saves your organization money. It allows for in-depth, long-term analysis and helps optimize costs, making it a valuable practice for any organization committed to robust and cost-effective cybersecurity. Once data is archived to, Azure Data Explorer (ADX) can be used to interrogate that data. ADX is a fast, fully managed data analytics service for real-time analysis on large volumes of data streaming from applications, websites, IoT devices, and more. It can be used to run complex queries and deliver insights on both real-time and historical data, making it a powerful tool for analyzing the archived data.

In this document, you will be guided through the process of exporting data from XDR to an Azure Event Hub and then connecting the logs to ADX. This strategy is worth considering for organizations looking to maximize their cybersecurity investments.

Please ensure you have the following prerequisites to complete this task:

- An Azure subscription

- The various XDR solution subscription(s) and administrator role

Create an Event Hub Name Space

Before data can be configured to be sent from Defender for Endpoint an Event Hub needs to be set up.

- Sign-in to Azure, browse to the Event Hubs blade and select “+Create”.

- Enter a Resource Group

- Enter the Event Hub Name Space

- Select a Location

- Select a Pricing Tier

- Select Throughput Units

- Accept (Or adjust) the advanced settings

- Select the Networking settings

- Review the configuration and if all ok select “Create”

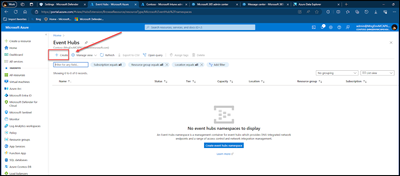

- Once completed it should look similar to below.

Configure Data Export Settings in Defender for Endpoint

To configure the data export settings in Defender for Endpoint. You can choose which data types you want to export and specify the destination where the data will be shipped to.

To configure the data export settings, follow these steps:

- Sign in to the Microsoft Defender Security Center portal at https://securitycenter.windows.com.

- Go to Settings > Microsoft Defender XDR > Streaming API.

- Select “+ Add”

- Enter the following:

- Name

- Select “Forward events to Event Hub.

- Paste the “Resource Id” from the Event-Hub Resource ID created earlier.

- From the Event Hub Name Space overview page, select JSON View and then copy the Resource ID

- Select the tables that should be sent to the Event Hub.

- Select “Submit”

The Streaming API definition should now appear.

Create an Azure Data Explorer (ADX) Cluster to Provide Access to the Security Logs

Connect to https://dataexplorer.azure.com (This is the ADX cluster portal).

An ADX cluster needs to be created so the data in transit has a storage location.

- Select the “My Cluster” blade.

- Click “Create cluster and database”.

- Enter the ADX Cluster information.

- Cluster display name.

- Database name

- Cluster location

- Agree to terms and conditions

- Click on “Create”

The new cluster should now be built.

Connect the previously created Event Hub to the ADX Cluster/Database.

- Click on “Get data” > “Event Hubs”

- Click on “Event Hubs”

- Click on “Add connection”

- Enter - Subscription

- Enter – Event hub namespace

- Event hub

- Consumer group

- Once completed it should look similar to below

Explore the Data in ADX Explorer

ADX Configuration

Lets take a look at the ADX Cluster and its configuration.

- On the upper left side of the blade select “My cluster” (1)

- Look at the bottom of the page (2)

- Click on Database (3)

The “Databases” defined for this cluster are now displayed.

If you click on the “Data connections” tab, it will display the connection to the Event Hub that was just completed

If you click on the name of the Event Hub, it will display the connection configuration.

Browse ADX Data

Click on the “Query” icon, in the upper left corner.

Schema Review

.show database ADXDatabase schema

You will notice there is only 1 column, and it is named “records”.

Data Dump

If you would like to expand the single “records” column you can parse and expand the data. For example, to get a summary of all “Category(s)”.

pbbergsXDR

| extend records = parse_json(records)

| mv-expand records

| extend category = tostring(records.category)

| summarize count() by category

Alert Dump

To take a look at ALL the “Alerts” the following is some example code.

The below script is designed to process and analyze data from a table named ‘pbbergsXDR’. The data in this table is from the XDR export and only has a single column named ‘records’ which contains JSON-formatted data. The script begins by parsing this JSON data into a more manageable format using the parse_json() function.

The mv-expand function is then used to transform each item in the ‘records’ column into a separate row. This is useful when the ‘records’ column contains arrays of data, as it allows each item in the array to be analyzed individually.

Next, the script extracts various fields from each record, such as ‘category’, ‘time’, ‘tenantId’, ‘operationName’, and several properties. These fields are converted to strings for easier processing and analysis. Notably, the ‘time’ field is converted to a datetime format, adjusted from UTC to Central Standard Time (CST), and then formatted as ‘MM-dd-yyyy hh:mm:ss tt’.

The script then filters the records to include only those where the ‘category’ is ‘AdvancedHunting-AlertInfo’.

Next, the script assigns a numeric value to each severity level using the case() function. This is done to facilitate sorting of the records based on severity level. The records are then sorted first by severity (from high to low) and then by time (from newest to oldest).

Finally, the script reorders the columns to have ‘time’, ‘Title’, ‘Category’, ‘Severity’, ‘ServiceSource’, ‘DetectionSource’, and ‘AttackTechniques’ at the beginning. It also removes the ‘SeverityOrder’, ‘records’, and ‘tenantId’ columns from the output.

The result of running this script is a table of data where each row represents a record from the ‘pbbergsXDR’ table that meets the specified criteria. The data is sorted by severity and time, and the columns are ordered in a specific way. This makes it easier to analyze the data and draw meaningful conclusions. For example, you can easily see which alerts are the most severe and when they occurred. You can also see the title, category, service source, detection source, and attack techniques for each alert, which can provide valuable insights into the nature of the alerts.

// Start with the 'pbbergsXDR' table

pbbergsXDR

// Parse the 'records' column as JSON

| extend records = parse_json(records)

// Expand the 'records' column into multiple rows

| mv-expand records

// Extract the 'category' field from each record and convert it to a string

| extend category = tostring(records.category)

// Extract the 'time' field from each record, convert it to a datetime, adjust it from UTC to CST, and format it as 'MM-dd-yyyy hh:mm:ss tt'

| extend ['time'] = format_datetime(datetime_add('hour', -6, todatetime(tostring(records['time']))), 'MM-dd-yyyy hh:mm:ss tt')

// Extract various fields from each record and convert them to strings

| extend tenantId = tostring(records.tenantId)

| extend operationName = tostring(records.operationName)

| extend properties = parse_json(records.properties)

| extend MachineGroup = tostring(properties.MachineGroup)

| extend Timestamp = tostring(properties.Timestamp)

| extend AlertId = tostring(properties.AlertId)

| extend Title = tostring(properties.Title)

| extend Category = tostring(properties.Category)

| extend Severity = tostring(properties.Severity)

| extend ServiceSource = tostring(properties.ServiceSource)

| extend DetectionSource = tostring(properties.DetectionSource)

| extend AttackTechniques = tostring(properties.AttackTechniques)

// Filter the records where 'category' is 'AdvancedHunting-AlertInfo'

| where category == "AdvancedHunting-AlertInfo"

// Assign a numeric value to each severity level for sorting purposes

| extend SeverityOrder = case(

Severity == "High", 1,

Severity == "Medium", 2,

Severity == "Low", 3,

Severity == "Information", 4,

5 // default value for unexpected severity levels

)

// Sort the records by severity (from high to low) and then by time (from newest to oldest)

| sort by SeverityOrder asc, ['time'] desc

// Reorder the columns to have 'time', 'Title', 'Category', 'Severity', 'ServiceSource', 'DetectionSource', and 'AttackTechniques' at the beginning

| project-reorder ['time'], Title, Category, Severity, ServiceSource,DetectionSource, AttackTechniques, *

// Remove the 'SeverityOrder', 'records', and 'tenantId' columns from the output

| project-away SeverityOrder, records, tenantId

Note: Special thanks to Seyed Amirhossein Nouraie, on help with this article.