This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

In our first blog post last month, we introduced the NLP Recipes repo. In this post, we would like to help new users navigate from cloning the repo to running their first Jupyter notebook and eventually adapting the code to their own dataset.

To get started, navigate to the Setup page within the repository. As recommended there, the easiest route is to spin up an Azure VM to run the sample code available in the repository. You can also select any virtual machine (VM) of your choice from the Azure portal. Once the VM has been set up successfully on the portal, connect to it using either RDP or SSH.

After logging in to the VM, open a command prompt and clone the entire repository onto the desktop, or any other location of your choice within the VM, using the following command:

git clone https://github.com/microsoft/nlp-recipes.git

Next, navigate to the folder and set up the dependencies. The commands below create the CPU environment:

cd nlp-recipes

python tools/generate_conda_file.py

conda env create -f nlp_cpu.yaml

A GPU environment can be created in the same way to run the Jupyter notebooks. List the available conda environments with the first command below, then activate the environment of your choice to run your notebooks.

conda env list

conda activate nlp_gpu

Next, to access the notebooks, first register the environment as a Jupyter kernel using this command:

python -m ipykernel install --user --name nlp_gpu --display-name "Python (nlp_gpu)"

There is an additional dependency on the utils-nlp folder, which needs to be installed from the repository root as follows:

pip install -e .
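
As a quick sanity check after the editable install, a short Python snippet can confirm that the package resolves from the active environment. This is a minimal sketch: it assumes the package is importable under the name `utils_nlp` (an assumption, since the text above only names the folder), and `is_importable` is a hypothetical helper, not part of the repository.

```python
import importlib.util


def is_importable(module_name: str) -> bool:
    """Return True if a top-level module can be found in the current environment."""
    return importlib.util.find_spec(module_name) is not None


# "utils_nlp" is the assumed import name of the repo's utility package.
if is_importable("utils_nlp"):
    print("utils_nlp is installed in this environment")
else:
    print("utils_nlp not found; re-run `pip install -e .` from the repo root")
```

Running this from a notebook cell also confirms that the selected kernel points at the environment where the package was installed.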

Now that setup is complete, start the Jupyter notebook server using the command: jupyter notebook

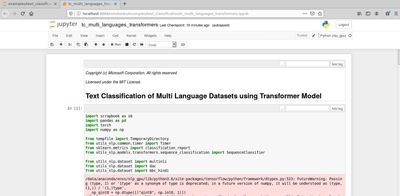

In this blog, we run through the Text classification notebook. Before you run the notebook for the first time, ensure that the kernel is set to nlp_gpu as shown below:

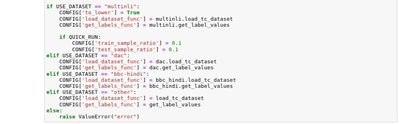

In the notebook, the user has the option of evaluating a pretrained Transformer model using the BERT architecture on three datasets. For illustration, the quick run option is selected for the BBC Hindi dataset.

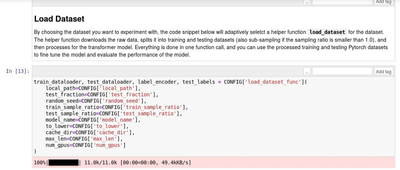

After the configuration setup, the BBC Hindi dataset is loaded:

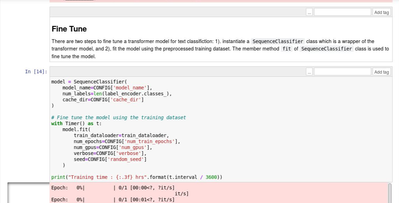

Then the transformer model can be fine-tuned and evaluated on the sample dataset.

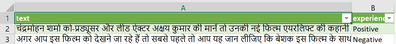

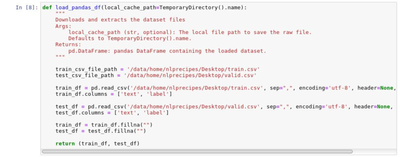

Upon successfully running this Jupyter notebook, the user can try the other examples provided in the repo. A natural next step after running the sample notebook is to source your own data and re-run the notebook. To do so, first download the dataset of your choice and save it locally on the VM. For example, suppose you have a train.csv and a test.csv, each with two columns: text and experience (Positive/Negative/Neutral).

The user would then need to edit some sections of the Jupyter notebook to source the new dataset. One option is to add a new “other” dataset choice and update the notebook's configuration to match.
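
One way to picture the configuration change is a dictionary keyed by dataset name, with a new entry for the custom data. The sketch below is illustrative only: `DATASET_OPTIONS`, `USE_DATASET`, and every key and path in it are hypothetical names, and the notebook's actual configuration cell may be laid out differently.

```python
from pathlib import Path

# Hypothetical layout: one entry per dataset the notebook can run on.
DATASET_OPTIONS = {
    "bbc-hindi": {"source": "built-in"},
    # New entry for the user's own data; the paths are placeholders.
    "other": {
        "source": "local",
        "train_file": Path("data") / "train.csv",
        "test_file": Path("data") / "test.csv",
        "text_col": "text",
        "label_col": "experience",
    },
}

# Switch the notebook to the custom dataset by changing a single name.
USE_DATASET = "other"

config = DATASET_OPTIONS[USE_DATASET]
print(f"Running on '{USE_DATASET}' with settings: {config}")
```

Keeping the dataset-specific settings in one place means the rest of the notebook only needs to read from `config`, whichever dataset is selected.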

Next, based on the format of the input train.csv/test.csv files, the user would need to edit the load_tc_dataset and get_label_values functions. The sample code for preparing the BBC Hindi dataset can be reused with minor edits.
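
As a rough illustration of what a loader for such files might do, the sketch below reads a two-column CSV and maps the string labels to integer ids. It uses only the standard library and hypothetical helper names (`load_csv_dataset`, `build_label_map`). It does not reproduce the signatures of the repository's load_tc_dataset or get_label_values, which should be checked in the repo before adapting them. The sample rows are made up so the block can run on its own.

```python
import csv
import tempfile
from pathlib import Path


def load_csv_dataset(path, text_col="text", label_col="experience"):
    """Read a CSV file and return parallel lists of texts and string labels."""
    texts, labels = [], []
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            texts.append(row[text_col])
            labels.append(row[label_col])
    return texts, labels


def build_label_map(labels):
    """Map each distinct label to an integer id, in sorted order."""
    return {label: idx for idx, label in enumerate(sorted(set(labels)))}


# Write a tiny made-up train.csv so the example is self-contained.
tmp_dir = Path(tempfile.mkdtemp())
train_path = tmp_dir / "train.csv"
with open(train_path, "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["text", "experience"])
    writer.writerow(["Great service", "Positive"])
    writer.writerow(["Long wait times", "Negative"])
    writer.writerow(["It was fine", "Neutral"])

train_texts, train_labels = load_csv_dataset(train_path)
label_map = build_label_map(train_labels)
train_label_ids = [label_map[label] for label in train_labels]

print(label_map)
print(train_label_ids)
```

The label map built from the training file should be reused when encoding the test file so that the integer ids stay consistent between the two.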

In this way, the code from the repository can be applied to additional datasets the user might have, or edited to suit their needs.