This post has been republished via RSS; it originally appeared at: Azure Data Explorer articles.

Apache Kafka is an open source streaming platform, and Azure Data Explorer is a big data analytical database, purpose-built for low latency and near real time analytics on all manner of logs, time series datasets, IoT telemetry and more. The Azure Data Explorer KafkaConnect sink connector makes ingestion from Kafka code free and configuration based, scalable and fault tolerant, and easy to deploy, manage and monitor. This article is paired with a hands-on lab, strives to demystify the integration, and is the first of a series focused on Kafka ingestion.

About Kafka

Apache Kafka is a distributed streaming platform with partitioning, replication, fault tolerance and scaling baked into the architecture and design. It offers the scalability of a distributed file system, supporting hundreds of MB/s of throughput and TBs of data per node in a Kafka cluster, and it runs equally well on commodity hardware on-premises and on cloud infrastructure. It offers the durability of a database and strict ordering of messages within a partition. There is a whole ecosystem of open source distributed computing technologies that integrate with Kafka. Kafka is based on the publish-subscribe model and is secure, mature, robust and enterprise ready. Over time it has become the de facto open source streaming platform and a common part of open source big data architectures involving real time processing.

Kafka on Azure

Azure has multiple Kafka offerings. HDInsight Kafka 4.0 (Kafka 2.1) is a Microsoft Azure first party managed Kafka PaaS. From an ISV perspective, there is Confluent Cloud, a managed Confluent Kafka PaaS, and Cloudera Data Platform, a managed offering from Cloudera. IaaS is an option as well with Confluent Enterprise Platform on Azure Kubernetes Service managed by the Confluent operator.

Kafka use cases and ecosystem of services

Kafka can be leveraged in modern application architectures, in IoT use cases, in log processing use cases, and in practically any real-time or near-real-time processing use case. Kafka was born as a distributed commit log, but has evolved to include an ecosystem of complementary services across open source, the Confluent community license and Confluent Enterprise. A few popular ones are Kafka Streams, Schema Registry, KafkaConnect and ksqlDB. Kafka Streams is an open source streaming client library for building real time applications on top of Kafka. Schema Registry provides centralized schema management and compatibility checks as the schemas of events published to Kafka topics evolve. ksqlDB is the streaming SQL engine for Kafka, which you can use to perform stream processing tasks using SQL parlance.

What about Kafka integration?

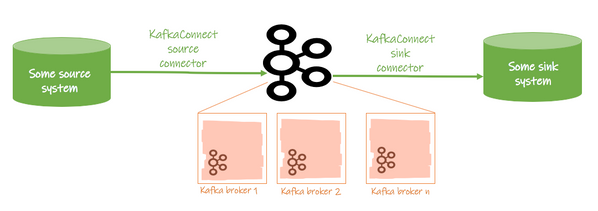

Kafka has a great offering in the integration space with KafkaConnect - a standardized, configuration based, scalable, fault tolerant framework to integrate from source systems to Kafka, and from Kafka to target systems.

As part of the connector development, you just have to plug the integration bits for the source or sink system into the framework code base. The framework abstracts out or simplifies offset management, task distribution, parallelization, scaling, retries and failure management, and further supports plugin-based, per-record transformations. Kafka has a number of KafkaConnect source and sink connectors, and it is preferable to leverage a KafkaConnect connector (code free, with configuration based deployment) if one is available, over writing custom producer or consumer code. You can find a listing of Confluent certified Kafka connectors at Confluent Hub.

Kafka and Azure Data Explorer - positioning

Wherever there is Kafka in the architecture landscape, especially in use cases involving IoT telemetry, time series, all manner of logs and near real time processing, Azure Data Explorer has a complementary place as a big data analytical database for low latency analytics.

Kafka ingestion support for Azure Data Explorer

As mentioned in the introduction, Azure Data Explorer has a KafkaConnect sink connector that can be leveraged for Kafka ingestion and is the focus of this blog post.

What’s involved besides Kafka and Azure Data Explorer for the integration?

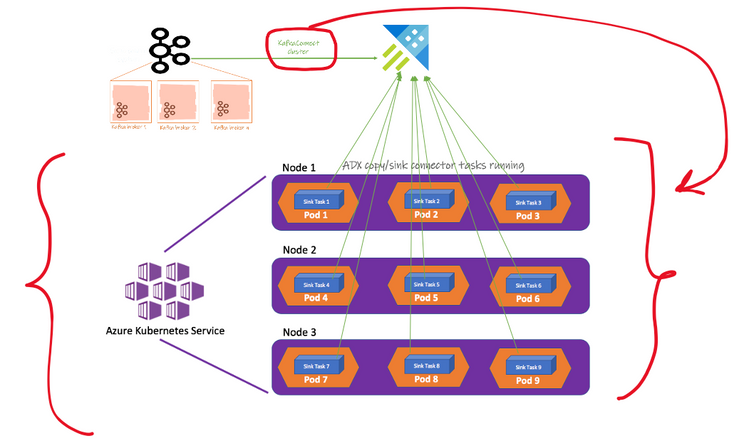

- Infrastructure to run the connectors: containers on Azure Kubernetes Service (PaaS), recommended, or virtual machines

- KafkaConnect Kusto sink connector jar: available in GitHub

- If you plan to deploy on Azure Kubernetes Service, the Confluent Helm chart for KafkaConnect compatible with your Apache Kafka version; if you plan to deploy on virtual machines, the Apache Kafka download, which includes the KafkaConnect binaries. For the rest of the blog, we will focus on a containerized deployment.

- Sink properties: configuration that details the connector class, number of connector tasks, connector name, Kafka topic to Azure Data Explorer table mapping and other configurations to override offsets to consume from, specify failure management, retries, dead letter queue and more.

Sample sink configuration
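A minimal sketch of such a sink configuration is shown here as a Python dictionary that serializes to the JSON payload KafkaConnect accepts. The topic, database, table, format and mapping names are the ones described in this section. Everything else is an assumption for illustration: the connector name, the task count, the placeholder URLs and credentials, and the exact property keys, which are given as best understood from the connector's documentation and may differ between connector versions.

```python
import json


def build_sink_config(ingest_url, query_url, tenant_id, app_id, app_secret):
    """Return a KafkaConnect connector definition for the Kusto sink (sketch)."""
    # One entry per Kafka topic: which database, table, data format and
    # table mapping the topic's messages should land in.
    topic_mapping = [
        {
            "topic": "crimes",
            "db": "crimes_db",
            "table": "crimes_curated",
            "format": "json",
            "mapping": "crimes_curated_mapping",
        }
    ]
    return {
        "name": "KustoSinkConnectorCrimes",  # hypothetical connector name
        "config": {
            # Property keys below are assumptions; verify them against the
            # documentation of the connector version you deploy.
            "connector.class": "com.microsoft.azure.kusto.kafka.connect.sink.KustoSinkConnector",
            "tasks.max": "2",  # illustrative value
            "topics": "crimes",
            # KafkaConnect property values are strings, so the mapping is
            # passed as a JSON-encoded string.
            "kusto.tables.topics.mapping": json.dumps(topic_mapping),
            "kusto.ingestion.url": ingest_url,
            "kusto.query.url": query_url,
            # Azure Active Directory service principal used to persist data.
            "aad.auth.authority": tenant_id,
            "aad.auth.appid": app_id,
            "aad.auth.appkey": app_secret,
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "value.converter": "org.apache.kafka.connect.storage.StringConverter",
        },
    }


if __name__ == "__main__":
    definition = build_sink_config(
        "https://ingest-<cluster>.<region>.kusto.windows.net",  # placeholder
        "https://<cluster>.<region>.kusto.windows.net",  # placeholder
        "<tenant-id>",
        "<application-id>",
        "<application-secret>",
    )
    print(json.dumps(definition, indent=2))
```

Keeping the topic-to-table mapping as a list of dictionaries and encoding it only at the end makes it easy to add further topics without hand-editing an escaped JSON string.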

In the sample above, the Kafka topic "crimes" is mapped to the Azure Data Explorer database "crimes_db" and table "crimes_curated", with Kafka messages in JSON and with the table mapping "crimes_curated_mapping". Here we are using an Azure Active Directory service principal as the identity that persists to the table, and the grants that allow it to do so need to be in place. Details of the full set of properties and their usage are documented here.

Step by step deployment of the connector on Azure Kubernetes Service (AKS):

1. Provision an AKS cluster

2. On your local machine, download the latest stable version of the KafkaConnect Kusto sink connector jar

3. On your local machine, download the Confluent Helm Chart (compatible with your Kafka version)

4. On your local machine, build a Docker image that extends the Confluent KafkaConnect image with the KafkaConnect Kusto sink jar in /usr/share/java, and publish it to Docker Hub

5. On your local machine, modify the values.yaml from #3 to use the image you published in #4, and update the bootstrap server list with your Kafka broker IPs or broker load balancer IP

6. Install KafkaConnect on the AKS cluster using the Helm chart updated in #5

7. Start the connector tasks using the KafkaConnect REST API - the request includes the Kusto sink properties that detail the Kafka topic to Azure Data Explorer table mapping, amongst other configuration.
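The last step above can be sketched with the Python standard library. KafkaConnect creates a connector when a JSON document with `name` and `config` fields is posted to its `/connectors` endpoint. The base URL (`http://localhost:8083`, the usual default REST port) and the abbreviated connector definition are assumptions for illustration; on AKS you would use whatever address the KafkaConnect service is exposed on, and the full set of sink properties.

```python
import json
import urllib.request


def build_create_request(connect_url, connector_definition):
    """Build (but do not send) the POST request that creates a connector."""
    body = json.dumps(connector_definition).encode("utf-8")
    return urllib.request.Request(
        url=connect_url.rstrip("/") + "/connectors",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def create_connector(connect_url, connector_definition):
    """Send the request and return the parsed JSON response from KafkaConnect."""
    request = build_create_request(connect_url, connector_definition)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Abbreviated, hypothetical definition; a real one carries the complete
    # Kusto sink properties (connector class, mapping, credentials and so on).
    definition = {
        "name": "KustoSinkConnectorCrimes",
        "config": {"topics": "crimes", "tasks.max": "2"},
    }
    request = build_create_request("http://localhost:8083", definition)
    print(request.get_method(), request.full_url)
    # create_connector("http://localhost:8083", definition)  # needs a live worker
```

Separating request construction from sending keeps the payload easy to inspect before anything is submitted to the cluster.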

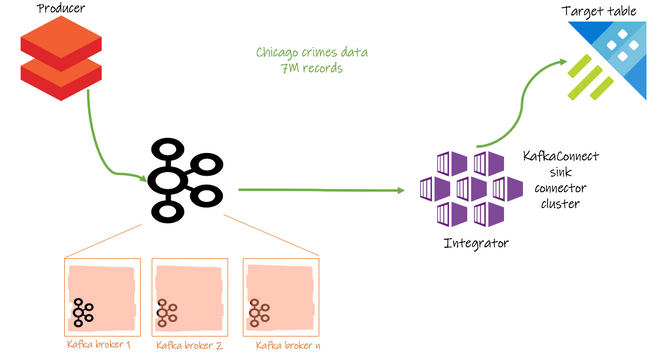

You should see the messages published to Kafka flow through to Azure Data Explorer. The diagram above is a pictorial representation. The hands-on lab referenced later in this article covers each of the steps above in detail.
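If messages do not show up, one quick check is the connector status endpoint of the KafkaConnect REST API, `GET /connectors/{name}/status`, which reports the state of the connector and of each task. The sketch below summarizes that response. The base URL and connector name are assumptions for illustration.

```python
import json
import urllib.request


def summarize_status(status):
    """Return (connector_state, ids of tasks that are not RUNNING)."""
    connector_state = status["connector"]["state"]
    unhealthy_tasks = [
        task["id"] for task in status.get("tasks", []) if task["state"] != "RUNNING"
    ]
    return connector_state, unhealthy_tasks


def fetch_status(connect_url, connector_name):
    """Fetch the status document for one connector from a KafkaConnect worker."""
    url = f"{connect_url.rstrip('/')}/connectors/{connector_name}/status"
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical address and connector name; requires a live worker.
    state, unhealthy = summarize_status(
        fetch_status("http://localhost:8083", "KustoSinkConnectorCrimes")
    )
    print(f"connector={state}, tasks needing attention={unhealthy}")
```

A connector can report RUNNING while individual tasks have failed, which is why the task list is checked separately.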

Hands-on lab for Kafka ingestion

A fully scripted, self-contained hands-on lab is available for both the Confluent Kafka and HDInsight Kafka flavors in the Azure Data Explorer HoL git repo. It includes instructions for provisioning and configuring the environments - Spark (producer), Kafka, connectors (Kubernetes) and Azure Data Explorer - giving you the full Azure experience.

In conclusion

With the KafkaConnect Kusto sink connector, we have a great integration story from Kafka to Azure Data Explorer. It's code free and configuration based, and it's scalable, fault tolerant, and easy to deploy, manage and monitor. The connector is *open source* and we welcome community contributions.

Resources

- Azure Data Explorer documentation

- Kusto Query Language

- Data Visualization with Azure Data Explorer

- Confluent documentation on KafkaConnect

- Confluent video on KafkaConnect

- Confluent connector hub

- Confluent webinar on developing KafkaConnect connectors