This post has been republished via RSS; it originally appeared at: Core Infrastructure and Security Blog articles.

Hello everyone, my name is Zoheb Shaikh and I’m a Solution Engineer with the Microsoft Solution for Mission Critical team (SfMC). Today I’ll share an interesting issue related to the Azure AD quota limit that we came across recently.

I had a customer who exhausted their AAD quota, which put them at a significant health risk.

Before I share more details, let’s look at what your organization’s AAD quota could be and why it even matters.

In simple terms, Azure AD has a defined quota on the number of directory objects (resources) that can be created and stored in AAD.

A maximum of 50,000 Azure AD resources can be created in a single tenant by users of the Free edition of Azure Active Directory by default. If you have at least one verified domain, the default Azure AD service quota for your organization is extended to 300,000 Azure AD resources. The Azure AD service quota for organizations created by self-service sign-up remains 50,000 Azure AD resources even after you perform an internal admin takeover and the organization is converted to a managed tenant with at least one verified domain. This service limit is unrelated to the pricing tier limit of 500,000 resources on the Azure AD pricing page. To go beyond the default quota, you must contact Microsoft Support.

For information about AAD quotas, see Service limits and restrictions - Azure Active Directory.

How to check your AAD Quota limit

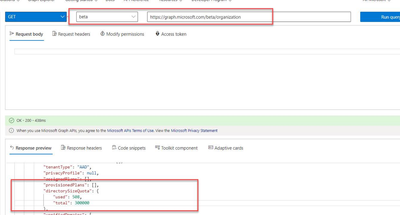

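Test this in Graph Explorer: https://developer.microsoft.com/en-us/graph/graph-explorer

Sign in to Graph Explorer with an account that has access to the directory.

Run the following beta query (GET): https://graph.microsoft.com/beta/organization

The `directorySizeQuota` property in the response shows the `used` and `total` object counts for your tenant.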
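If you prefer to check this from a script rather than Graph Explorer, the response can be read programmatically. The sketch below is a minimal Python example, assuming the beta `organization` response contains a `value` array whose first entry carries `directorySizeQuota.used` and `directorySizeQuota.total` (the same fields the runbook scripts later in this post read). Acquiring the bearer token is out of scope; the `fetch_organization` helper and the sample payload are hypothetical.

```python
import json
import urllib.request

ORG_URL = "https://graph.microsoft.com/beta/organization?$select=directorySizeQuota"


def fetch_organization(token: str) -> dict:
    """Call the beta organization endpoint (hypothetical helper; token acquisition not shown)."""
    request = urllib.request.Request(
        ORG_URL, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def directory_quota(payload: dict) -> tuple[int, int, float]:
    """Return (used, total, percent_used) from an organization response."""
    quota = payload["value"][0]["directorySizeQuota"]
    used, total = int(quota["used"]), int(quota["total"])
    return used, total, round(used / total * 100, 2)


# Hypothetical sample payload shaped like the Graph response
sample = {"value": [{"directorySizeQuota": {"used": 150000, "total": 300000}}]}
print(directory_quota(sample))  # (150000, 300000, 50.0)
```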

Now that you understand what the AAD quota is and how to view its details, let’s get back to the customer scenario to see how the quota affected them and what you can learn from it.

The customer was an organization of approximately 100k users, using multiple Microsoft cloud services such as Teams, Exchange Online (EXO), Azure IaaS and PaaS.

As part of their cloud modernization journey, they were doing a massive rollout of Intune across the organization after completing all the testing and a proof of concept (PoC).

Our proactive monitoring and CXP teams had informed the customer that their Azure AD object count was increasing at an unusual rate, but the customer never anticipated that it could exceed their AAD quota.

One fine morning I woke up to a call from our SfMC CritSit manager: my customer’s AAD quota was exhausted and their Azure AD Connect was unable to synchronize any new objects. As part of the reactive arm of SfMC, we got into a meeting with the customer and our Azure Rapid Response team to find the cause of the problem.

We decided on the following approach:

- Confirm the AAD quota exhaustion and identify which objects are consuming AAD resources.

- Remove stale objects from AAD.

- Reach out to the Product Group to ask for a quota increase for this specific customer.

How to check which objects are consuming the AAD quota

When I was engaged on this case, we did it the hard way (exporting all registered objects to Excel and analyzing them with PivotTables), but there is now an easier way, as described below:

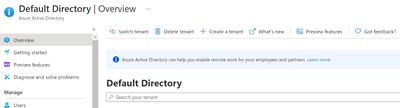

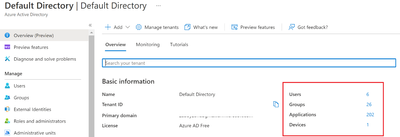

- Log in to the Azure AD admin center (https://aad.portal.azure.com).

- In Azure AD, click Preview features (currently in preview).

- This gives you a nice overview of the object status in AAD.

We created the table below to understand what exactly was going on in the environment. In it, we measured the total number of objects and how many had been created in the last few days.

| Object | Count | New count in last 24 hours | New count in last 1 week |
|---|---|---|---|
| Users | # | # | # |
| Groups | # | # | # |
| Devices | # | # | # |
| Contacts | # | # | # |
| Applications | # | # | # |
| Deleted Applications | # | # | # |
| Service Principals | # | # | # |
| Roles | # | # | # |
| Extension Properties | # | # | # |
| TOTAL | ## | ## | ## |
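The "hard way" mentioned earlier (export, then pivot) boils down to a simple aggregation. As a hypothetical sketch, assuming an export in which each row has an object type and a creation timestamp, the columns of the table above could be computed like this:

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def summarize(rows, now):
    """Count objects per type: total, created in the last 24 hours, created in the last week.

    rows: iterable of (object_type, created) pairs (hypothetical export format),
    where created is a timezone-aware datetime.
    """
    total, last_day, last_week = Counter(), Counter(), Counter()
    for object_type, created in rows:
        total[object_type] += 1
        age = now - created
        if age <= timedelta(days=1):
            last_day[object_type] += 1
        if age <= timedelta(weeks=1):
            last_week[object_type] += 1
    # Counter returns 0 for types with no recent objects
    return {t: (total[t], last_day[t], last_week[t]) for t in total}


now = datetime(2022, 1, 10, tzinfo=timezone.utc)
rows = [
    ("Devices", now - timedelta(hours=2)),
    ("Devices", now - timedelta(days=3)),
    ("Users", now - timedelta(days=400)),
]
print(summarize(rows, now))  # {'Devices': (2, 1, 2), 'Users': (1, 0, 0)}
```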

I’m not sharing the actual numbers here, but we saw that devices were consuming about 50% of the AAD object quota and had increased by a few thousand in the last 24 hours.

This showed us that thousands of devices were being registered every day, which had resulted in the AAD quota exhaustion.
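When objects grow at a roughly steady daily rate, the remaining headroom divided by the net daily growth gives a rough estimate of how long you have before the quota is exhausted. A minimal sketch, with purely hypothetical numbers since the customer’s actual counts are not shared:

```python
import math


def days_until_exhausted(used: int, total: int, daily_growth: int) -> float:
    """Rough days left before the quota is reached, assuming constant net daily growth."""
    if daily_growth <= 0:
        return math.inf  # not growing: no projected exhaustion
    return max(total - used, 0) / daily_growth


# Hypothetical: 450,000 of 500,000 objects used, growing by 5,000 objects a day
print(days_until_exhausted(450_000, 500_000, 5_000))  # 10.0
```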

Based on our analysis, to get out of this situation we recommended that the customer delete stale devices that had not been used for more than 1 year. This alone allowed us to delete approximately 50k objects.
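Selecting the stale devices is essentially a date filter on the last sign-in time. The sketch below assumes each exported device record has a `displayName` and an `approximateLastSignInDateTime` (the property Microsoft Graph exposes on device objects) as an ISO 8601 string; the sample records are hypothetical. It only lists candidates and does not delete anything.

```python
from datetime import datetime, timedelta, timezone


def stale_devices(devices, now, max_age_days=365):
    """Return names of devices whose last sign-in is older than max_age_days."""
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for device in devices:
        last = device.get("approximateLastSignInDateTime")
        if last is None:
            continue  # skipped here: no sign-in timestamp available
        # fromisoformat() on older Python versions does not accept a trailing "Z"
        signed_in = datetime.fromisoformat(last.replace("Z", "+00:00"))
        if signed_in < cutoff:
            stale.append(device["displayName"])
    return stale


now = datetime(2022, 1, 10, tzinfo=timezone.utc)
devices = [
    {"displayName": "LAPTOP-01", "approximateLastSignInDateTime": "2020-11-02T08:15:00Z"},
    {"displayName": "PHONE-07", "approximateLastSignInDateTime": "2021-12-30T17:40:00Z"},
]
print(stale_devices(devices, now))  # ['LAPTOP-01']
```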

Deleting these 50k objects got them out of the critical situation and gave them some breathing space: the problem would not recur for at least the next couple of weeks, while we figured out what exactly was going wrong.

Being part of the Microsoft Solution for Mission Critical team, we always go above and beyond to support our customers. The first step is always to quickly resolve the reactive issue, then identify the root cause, and finally, through our Proactive Delivery Methodology, make sure it does not happen again.

We followed the approach below to identify the root cause and ensure the issue will not happen again:

1. Configuring alerts for validation and quota exhaustion

   - Daily alerts for the Azure AD object count

   - Alerts when the AAD object quota limit is exhausted

2. A more detailed review of the root cause of the issue

3. Creating a baseline for the AAD objects needed in the organization

   - The baseline is based on the number of objects in the organization (users, computers, etc.)

   - What is the expected count?

4. Increasing the object quota based on the baseline, if needed

In the next sections we go through each of the above actions in more detail.

1. Configuring alerts for validation and quota exhaustion

Option #1: Using Azure Automation

We wanted to add alerts to ensure the customer is notified when they are nearing the limit. We achieved this using Azure Automation, with the help of my colleague Eddy Ng from Malaysia.

Below is the step-by-step process for setting up these alerts after creating an Azure Automation account:

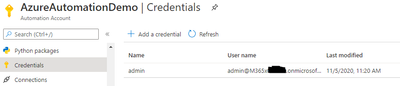

- Create the credential under Credentials. Click the + sign, add a credential and enter its name and account details. The credential must have sufficient rights to connect to Azure AD and must not be prompted for MFA. The name is important because the script references it.

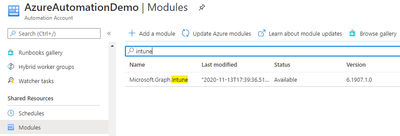

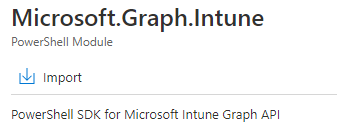

- Next, install the Microsoft.Graph.Intune module under Modules. Click Browse Gallery, search for Microsoft.Graph.Intune, click the result and select Import.

Going forward, we recommend using the Microsoft Graph PowerShell SDK instead.

- Go to Runbooks.

Create a new runbook and give it a name.

Runbook type: PowerShell

Paste the following code:

```powershell
# Get the admin credential from the Automation credential store. Change "admin" to your credential name
$credObject = Get-AutomationPSCredential -Name admin

# Initiate the connection to Microsoft Graph
$connection = Connect-MSGraph -PSCredential $credObject

# Set up the Graph API URL (single quotes keep $select literal)
$graphApiVersion = "beta"
$Resource = 'organization?$select=directorysizequota'
$uri = "https://graph.microsoft.com/$graphApiVersion/$($Resource)"

# Query the Graph API
$data = Invoke-MSGraphRequest -Url $uri

# Get the data and validate it against the threshold
# Change the number 50000 accordingly
$maxsize = 50000
if ([int]($data.value.directorysizequota.used) -gt $maxsize)
{
    Write-Output "Directory Size : $($data.value.directorysizequota.used) is greater than $maxsize limit"
    Write-Error "Directory Size : $($data.value.directorysizequota.used) is greater than $maxsize limit"
    # Raise a terminating error so the job is marked as Failed
    Write-Error " " -ErrorAction Stop
}
else
{
    Write-Output "Directory Size : $($data.value.directorysizequota.used)"
}
```

- Click Save and Publish

- Click Link to Schedule

- Populate the schedule accordingly. For example, run daily at 12pm UTC.

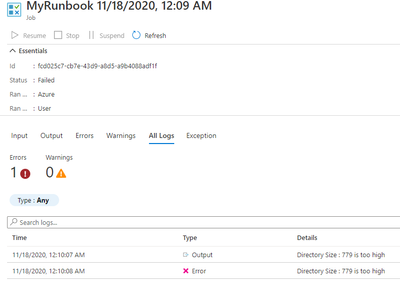

- Results from each run job can be found under Jobs.

- If above quota, the status will be failed as a result of the script -erroraction Stop.

- Set up alerts to take advantage of this by creating a new alert rule.

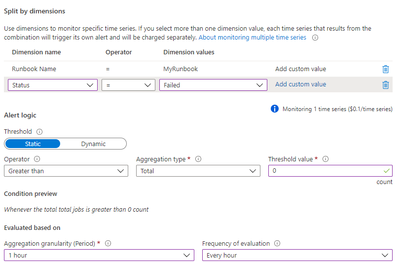

- Click Select condition and choose the signal name “Total Job”. Configure it as shown below, replacing “MyRunbook” with the name of your runbook. When finished, click Done.

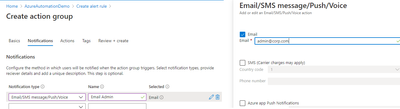

- Select an action group, or create a new one.

- Fill in the Basics tab as appropriate.

- Fill in the Notifications tab similar to the screenshot below.

- Click Review + create.

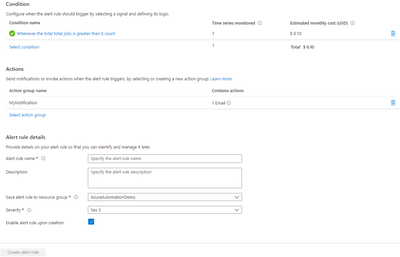

- Once done, scroll down and fill in the Alert rule details, such as Name, Description and Severity.

- Click Create alert rule.

Result: when the directory size breaches the limit, the admins receive an email alert.

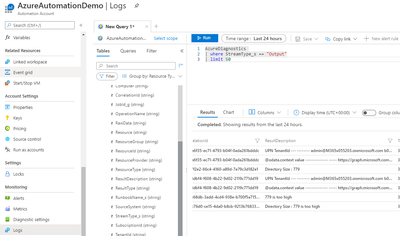

You can then open the runbook and select Jobs. Click All Logs to see the error output of each individual job run.

To review previous results in bulk, go to Logs and run the Kusto query below, then scroll right to the ResultDescription column. This assumes the Automation account sends its job streams to a Log Analytics workspace (through diagnostic settings) and that the schedule runs daily, so a limit of 50 covers roughly the past 50 days.

```kusto
AzureDiagnostics
| where StreamType_s == "Output"
| sort by TimeGenerated desc // limit alone returns arbitrary rows
| limit 50
```

Option #2: An alternative way to configure alerts for quota exhaustion

While I was writing this blog, my colleague Alin Stanciu from Romania suggested what is probably a better way to configure alerts for quota exhaustion.

Replace the script in the Azure Automation runbook with the following:

```powershell
# Get the credential from the Automation credential store. Change 'azalerts' to your credential name
$credObject = Get-AutomationPSCredential -Name 'azalerts'

# Initiate the connection to Microsoft Graph
$connection = Connect-MSGraph -PSCredential $credObject

# Set up the Graph API URL (single quotes keep $select literal)
$graphApiVersion = "beta"
$Resource = 'organization?$select=directorysizequota'
$uri = "https://graph.microsoft.com/$graphApiVersion/$($Resource)"

# Query the Graph API
$data = Invoke-MSGraphRequest -Url $uri

# Calculate the percentage of the quota in use and write it to the job output.
# The threshold check is done by the Log Analytics alert, so the runbook does not fail here.
$usedpercentage = (($data.value.directorysizequota.used / $data.value.directorysizequota.total) * 100)
Write-Output "Directory Size : $($data.value.directorysizequota.used) and percentage used is $($usedpercentage)"
```

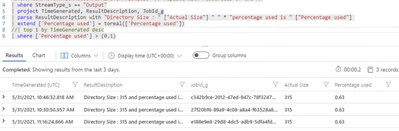

You can then use Azure Log Analytics to alert through Azure Monitor, using a query like this:

```kusto
AzureDiagnostics
| where Category == "JobStreams"
| where ResourceId =~ "" // replace with the resource ID of the Automation account (case-insensitive match)
| where StreamType_s == "Output"
| project TimeGenerated, ResultDescription, JobId_g
| parse ResultDescription with "Directory Size : " ["Actual Size"] " " * "percentage used is " ["Percentage used"]
| extend ['Percentage used'] = toreal(['Percentage used'])
| top 1 by TimeGenerated desc
| where ['Percentage used'] > (0.1) // alert threshold in percent; adjust as needed
```

This gives you an overview of the percentage of the quota used.
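The `parse` step in that query depends on the exact wording of the runbook’s `Write-Output` line. As a hypothetical sketch, the same extraction and threshold check look like this in Python (the 90 percent default threshold is only an example):

```python
import re

# Matches the line written by the Option #2 runbook
PATTERN = re.compile(
    r"Directory Size : (?P<used>\d+) and percentage used is (?P<pct>[\d.]+)"
)


def check_output(line: str, threshold: float = 90.0):
    """Return (used, percent, alert) parsed from one runbook output line."""
    match = PATTERN.search(line)
    if match is None:
        raise ValueError(f"unexpected runbook output: {line!r}")
    used, pct = int(match["used"]), float(match["pct"])
    return used, pct, pct > threshold


print(check_output("Directory Size : 450000 and percentage used is 90.5"))
# (450000, 90.5, True)
```

If the runbook’s message is ever reworded, both this sketch and the Kusto `parse` pattern need to change with it.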

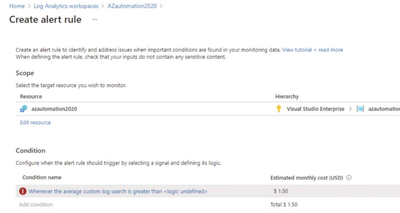

Alert configuration using Log Analytics can be done as shown in the screenshots below, where you define the threshold at which you want to be alerted.

2. A more detailed review of the root cause of the issue

In this step we need to identify why so many devices are being registered every day.

We exported the list of all registered devices in AAD to Excel and filtered it by registration type.

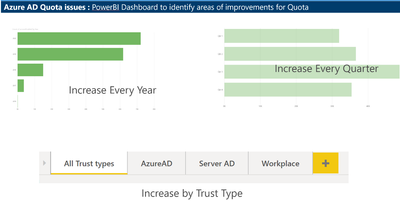

Thanks to Claudiu Dinisoara and Turgay Sahtiyan for helping create a nice Power BI dashboard based on this data, which helped us understand the root cause much better.

The dashboard showed the types of device registrations and their overall count across the years. We found a significant increase in AAD device registrations due to the Intune rollout across the organization.
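The dashboard essentially groups the exported devices by registration type and year. A minimal sketch of that aggregation, assuming an export with a `trustType` column (Microsoft Graph reports values such as `Workplace`, `AzureAd` and `ServerAd` for registered, Azure AD joined and hybrid joined devices) and a `registrationDateTime` column; the sample rows are hypothetical:

```python
from collections import Counter


def registrations_by_type_and_year(devices):
    """Count devices per (trustType, registration year)."""
    counts = Counter()
    for device in devices:
        year = int(device["registrationDateTime"][:4])  # ISO 8601 starts with YYYY
        counts[(device["trustType"], year)] += 1
    return counts


devices = [
    {"trustType": "Workplace", "registrationDateTime": "2021-06-01T09:00:00Z"},
    {"trustType": "Workplace", "registrationDateTime": "2021-07-15T11:30:00Z"},
    {"trustType": "AzureAd", "registrationDateTime": "2020-03-20T14:05:00Z"},
]
for (trust_type, year), count in sorted(registrations_by_type_and_year(devices).items()):
    print(trust_type, year, count)
# AzureAd 2020 1
# Workplace 2021 2
```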

We checked with the customer’s Intune support team, and they confirmed that this increase was expected.

3. Creating a baseline for the number of AAD objects needed

The trickiest part of this issue was coming up with a baseline number of AAD objects.

The customer had approximately 100k users, and we came up with the table below as the baseline.

Please note that these numbers were specific to this customer’s scenario and discussions, and they may differ for your organization.

| Object | Count | Why this number |
|---|---|---|
| Users | 105,000 | 100k production users, plus 5,000 for administration accounts, users pending deletion or guest users. |
| Groups | 60,000 | We felt 60k was high, but they used groups extensively for Intune and other policy management tasks. We recommended reducing this number in the future. |
| Devices | 200,000 | We assumed 2 registered devices per user (mobile and laptop) plus a few stale devices. |
| Contacts | 16,000 | These objects were already low, so we used the present values as the baseline. |
| Applications | 1,500 | These objects were already low, so we used the present values as the baseline. |
| Service Principals | 3,000 | These objects were already low, so we used the present values as the baseline. |
| Roles | 100 | These objects were already low, so we used the present values as the baseline. |
| TOTAL | 385,600 | Sum of the rows above. |
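Adding up the baseline and comparing it with the quota is simple arithmetic, but scripting it makes it easy to revisit when the assumptions change. A minimal sketch using the numbers from the baseline table above and the customer’s 500,000 quota:

```python
# Baseline object counts per type, taken from the table above
BASELINE = {
    "Users": 105_000,
    "Groups": 60_000,
    "Devices": 200_000,
    "Contacts": 16_000,
    "Applications": 1_500,
    "Service Principals": 3_000,
    "Roles": 100,
}
QUOTA = 500_000  # the customer's AAD quota limit

total = sum(BASELINE.values())
headroom = QUOTA - total
print(f"Baseline total: {total:,}")  # Baseline total: 385,600
print(f"Headroom: {headroom:,} ({headroom / QUOTA:.1%} of quota)")  # Headroom: 114,400 (22.9% of quota)
```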

We compared the expected baseline with their quota limit of 500,000 and came up with a cleanup strategy and a strategy to keep object counts in line with the baseline.

4. Increasing the Object Quota based on the baseline created if needed.

Their AAD quota limit was 500,000 objects, while our baseline indicated that they needed around 400,000 objects.

Hope this helps,

Zoheb