This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Apache Spark for Azure Synapse now provides descriptive Livy error codes. When an Azure Synapse Spark job fails, this new error-handling feature parses the logs on the backend to identify the root cause and displays it in the monitoring pane, along with the steps to take to resolve the issue.

What is Livy?

Livy is a service that enables easy interaction with a Spark cluster over a REST interface, covering job submission, result retrieval and Spark Context management. In the context of this discussion, if a Spark application fails, Livy receives the application's state from YARN and surfaces it, generally in the form of the `LIVY_JOB_STATE_DEAD` error code.
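To make the REST interaction concrete, here is a minimal Python sketch against the open-source Apache Livy batch API (`POST /batches` to submit, `GET /batches/{id}/state` to poll). The base URL, file path and the injectable `post_json`/`get_json` helpers are assumptions for illustration. The sketch omits authentication, which an Azure Synapse Livy endpoint requires, and it is not how Synapse itself talks to Livy.

```python
import json
import time
import urllib.request

# States this sketch treats as final for a Livy batch. "dead" appears to be
# the state behind the generic LIVY_JOB_STATE_DEAD code.
TERMINAL_STATES = {"success", "dead", "killed", "error"}


def _post_json(url, body):
    """POST a JSON body and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def _get_json(url):
    """GET a URL and decode the JSON response."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def submit_batch(livy_url, file, class_name=None, post_json=_post_json):
    """Submit a Spark application through Livy's POST /batches and return its id."""
    body = {"file": file}
    if class_name is not None:
        body["className"] = class_name
    return post_json(f"{livy_url}/batches", body)["id"]


def wait_for_batch(livy_url, batch_id, get_json=_get_json,
                   poll_seconds=5.0, sleep=time.sleep):
    """Poll GET /batches/{id}/state until Livy reports a final state."""
    while True:
        state = get_json(f"{livy_url}/batches/{batch_id}/state")["state"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
```

Against a local open-source Livy server, `livy_url` would typically be `http://localhost:8998`, Livy's default port. Passing the HTTP helpers in as parameters keeps the polling logic testable without a live cluster.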

Spark application failure scenarios

A Spark application can fail for a variety of reasons, ranging from user errors, such as reading data from an incorrect storage account or missing dependencies, to system errors, such as a Spark executor running out of memory. In all of these scenarios, Livy simply registered the application's status as failed, and it was up to you to figure out the cause and the resolution.

What problem does this feature solve?

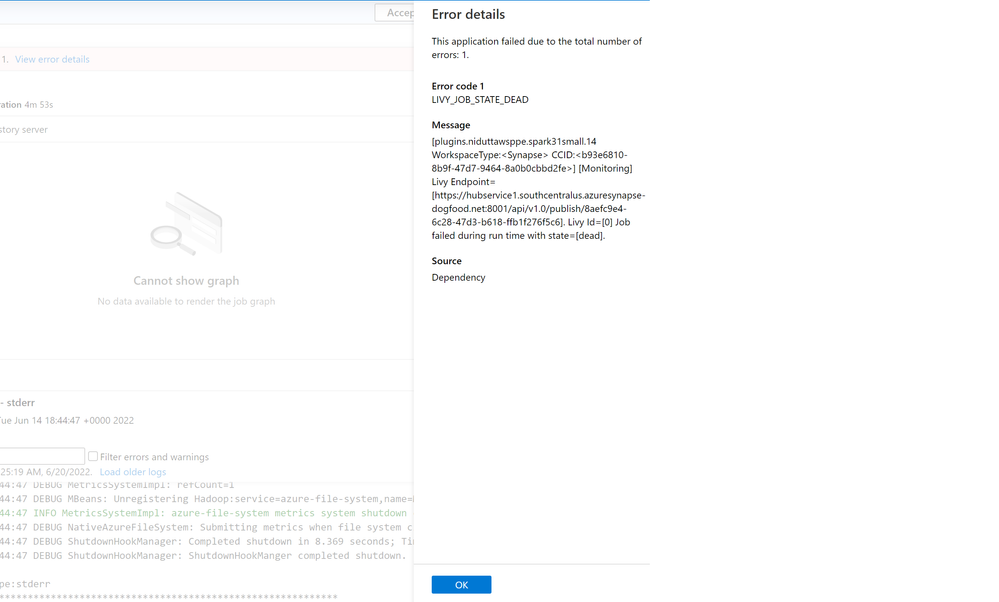

Previously, every failing job on Synapse surfaced the same generic error code, `LIVY_JOB_STATE_DEAD`, with no other information. As Figure 1 shows, this code gave no insight into why the job had failed, so identifying the root cause took significant effort: digging through the driver, executor, Spark event and Livy logs to find the source of the problem.

Figure 1 - Previous error codes when a Spark job fails

To make troubleshooting easier, we have introduced a more precise set of error codes that describe the cause of failure and replace the previous generic error code. The error codes are divided into four categories that classify the type of error:

- User: Indicating a user error

- System: Indicating a system error

- Ambiguous: Could be either user or system error

- Unknown: No classification yet, most probably because the error type isn't included in the model

Each error code also comes with a more detailed message to help you debug the issue.

Figure 2 - New error codes when a Spark job fails
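The four categories can be pictured as the output of a small rule-based classifier over the failure text. This is a hypothetical Python sketch: the regular expressions, the `EXAMPLE_*` codes and the messages are invented for illustration and are not Synapse's actual error codes or classification model. Only the four category names, and the example failure types (wrong storage path, missing dependency, out of memory), come from this post.

```python
import re
from dataclasses import dataclass
from enum import Enum


class ErrorCategory(Enum):
    USER = "User"
    SYSTEM = "System"
    AMBIGUOUS = "Ambiguous"
    UNKNOWN = "Unknown"


@dataclass(frozen=True)
class ClassifiedError:
    code: str
    category: ErrorCategory
    message: str


# Hypothetical rules: (pattern, code, category, message). Checked in order.
_RULES = [
    (re.compile(r"FileNotFoundException|Path does not exist", re.I),
     "EXAMPLE_USER_PATH_NOT_FOUND", ErrorCategory.USER,
     "An input path was not found; check the storage account and path."),
    (re.compile(r"ClassNotFoundException|ModuleNotFoundError", re.I),
     "EXAMPLE_USER_MISSING_DEPENDENCY", ErrorCategory.USER,
     "A class or module is missing; check the packages attached to the job."),
    (re.compile(r"java\.lang\.OutOfMemoryError", re.I),
     "EXAMPLE_SYSTEM_OUT_OF_MEMORY", ErrorCategory.SYSTEM,
     "A Spark process ran out of memory; consider larger executors."),
    (re.compile(r"(?:Connection|Read) timed out", re.I),
     "EXAMPLE_AMBIGUOUS_TIMEOUT", ErrorCategory.AMBIGUOUS,
     "A network call timed out; this may be transient or a misconfiguration."),
]


def classify(diagnostics: str) -> ClassifiedError:
    """Return the first matching rule's classification, or an Unknown result."""
    for pattern, code, category, message in _RULES:
        if pattern.search(diagnostics):
            return ClassifiedError(code, category, message)
    # No rule covers this failure yet.
    return ClassifiedError("EXAMPLE_UNKNOWN", ErrorCategory.UNKNOWN,
                           "Unclassified failure; inspect the driver and executor logs.")
```

Because the first match wins, more specific patterns belong before broader ones. Anything that matches no rule lands in the Unknown category, in the same spirit as errors the real model does not yet cover.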

It is important to note that this feature is currently disabled by default but will be enabled by default for all Spark applications shortly. To try this out now, all you have to do is set the following Spark configuration to true at the job or pool level:

`livy.rsc.synapse.error-classification.enabled`
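For a batch job submitted through Livy, one way to set this at the job level is the `conf` map of the request body. The Python sketch below builds such a body; the helper name and file path are hypothetical, the shape follows the open-source Livy `POST /batches` request, and a Synapse endpoint may require additional fields that are not shown here.

```python
import json

# Configuration key named in this post; Spark configuration values are strings.
ERROR_CLASSIFICATION_KEY = "livy.rsc.synapse.error-classification.enabled"


def batch_body_with_error_classification(file, class_name=None, extra_conf=None):
    """Return a Livy batch request body with error classification enabled (hypothetical helper)."""
    conf = dict(extra_conf or {})  # copy so the caller's dict is not mutated
    conf[ERROR_CLASSIFICATION_KEY] = "true"
    body = {"file": file, "conf": conf}
    if class_name is not None:
        body["className"] = class_name
    return body


if __name__ == "__main__":
    # Placeholder application path, for illustration only.
    body = batch_body_with_error_classification(
        "abfss://jobs@example.dfs.core.windows.net/app.py")
    print(json.dumps(body, indent=2))
```

Setting the same key in the Spark pool's configuration instead would apply it to every job on that pool.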

How this feature works

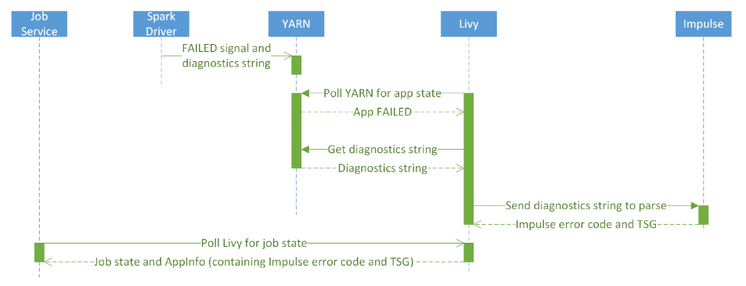

When a Spark application fails, the Spark driver generates a diagnostic string containing the reason for the failure and the exception stack trace, and sends it to YARN. Livy polls YARN for the application status and retrieves this diagnostic string, then uses our error classification library to generate the specific error code and a troubleshooting guide (TSG) to help resolve the error. Livy then returns these in its response.

Figure 3 - New Livy error code handling sequence diagram
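The sequence described above can be approximated with the open-source YARN ResourceManager REST API, whose `GET /ws/v1/cluster/apps/{appid}` response includes the application's `state`, `finalStatus` and `diagnostics`. In this Python sketch, `rm_url` is a placeholder and `classify` is a hypothetical stand-in for the Synapse error classification library. In Synapse this polling happens inside the service, so the code only illustrates the flow and is not Livy's implementation.

```python
import json
import time
import urllib.request

# YARN application states after which the application no longer changes.
YARN_TERMINAL_STATES = {"FINISHED", "FAILED", "KILLED"}


def _get_json(url):
    """GET a URL and decode the JSON response."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def poll_for_failure_reason(rm_url, app_id, classify, get_json=_get_json,
                            poll_seconds=5.0, sleep=time.sleep):
    """Poll YARN until the application ends; classify its diagnostics if it did not succeed.

    Returns None for a successful application, otherwise whatever `classify` returns.
    """
    url = f"{rm_url}/ws/v1/cluster/apps/{app_id}"
    while True:
        app = get_json(url)["app"]
        if app["state"] in YARN_TERMINAL_STATES:
            break
        sleep(poll_seconds)
    if app.get("finalStatus") == "SUCCEEDED":
        return None
    # The driver's failure reason and stack trace arrive in this diagnostics string.
    return classify(app.get("diagnostics", ""))
```

Checking `finalStatus` rather than `state` matters because an application can reach the `FINISHED` state while still reporting a `FAILED` final status.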

We are continually improving our classification model to capture more types of errors to make your debugging experience easy and effortless.

To learn more, please read "Interpret error codes in Synapse Analytics".