This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The goal of this post is to explain the Apache Spark options available inside Synapse and how the basic setup works.

Apache Spark is a parallel processing framework that supports in-memory processing. It can be added to a Synapse workspace and used to improve the performance of big data analytics projects. (Quickstart: Create a serverless Apache Spark pool using the Azure portal - Azure Synapse Analytics |...). Once added, it is billed by the minute while the cluster is online. So, if it is not in use, make sure the Spark cluster is stopped.

First things first: let's review the options available when adding an Apache Spark pool to the workspace.

Apache Spark Basic configuration inside Synapse:

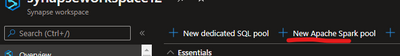

From the Synapse workspace portal click on the option: + New Apache Spark Pool (Fig 1).

Fig 1

When a Spark pool is created, it exists only as metadata; no resources are consumed.

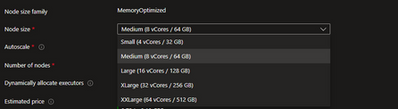

The next step is to choose the configurations (Fig 2):

Fig 2

Now, what do these options mean?

vCores

The number of vCores available to the pool must be defined. The right number depends on the kind of workload that will run against the pool. As general advice, start small to manage the cost, test, and scale up as needed.

If Spark runs out of capacity an error like this one will be displayed:

Failed to start session: [User] MAXIMUM_WORKSPACE_CAPACITY_EXCEEDED
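To reason about capacity before hitting this error, the total vCores of a pool can be estimated as the number of nodes multiplied by the vCores per node. The sketch below is only an illustration: the vCores per node size (4, 8, and 16 for Small, Medium, and Large) are assumed values that should be checked against the current Synapse documentation, and `workspace_quota` is a hypothetical number, not something defined in this post.

```python
# Assumed vCores per node size; verify against the current Synapse docs.
VCORES_PER_NODE = {"Small": 4, "Medium": 8, "Large": 16}


def pool_vcores(node_size: str, nodes: int) -> int:
    """Total vCores a pool would use with the given node size and node count."""
    return VCORES_PER_NODE[node_size] * nodes


def fits_quota(node_size: str, nodes: int, vcores_in_use: int, workspace_quota: int) -> bool:
    """True if starting these nodes stays within a (hypothetical) workspace vCore quota."""
    return vcores_in_use + pool_vcores(node_size, nodes) <= workspace_quota


if __name__ == "__main__":
    print(pool_vcores("Small", 3))            # 3 Small nodes
    print(fits_quota("Medium", 3, 40, 50))    # would exceed the assumed quota
```

If the check returns False, a smaller node size or fewer nodes would be the first things to try.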

Nodes and Autoscale

If enabled, Autoscale scales the number of nodes up or down, based on workload requirements, within the configured limits. When the pool is in use, the initial number of nodes is the configured minimum, and that number cannot be fewer than three. The metrics considered (checked every 30 seconds) when deciding to scale up or down are: the CPU and memory required to execute the pending jobs, the unused cores and memory on active nodes, and the memory in use per node.

When the following conditions are detected, Autoscale will issue a scale request:

| Scale-up | Scale-down |
| --- | --- |
| Total pending CPU is greater than total free CPU for more than 1 minute. | Total pending CPU is less than total free CPU for more than 2 minutes. |
| Total pending memory is greater than total free memory for more than 1 minute. | Total pending memory is less than total free memory for more than 2 minutes. |
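The conditions above can be sketched as a small decision function. This is not how Synapse implements Autoscale; it is a simplified model that assumes metrics arrive as one sample every 30 seconds, approximates "more than N minutes" by requiring the condition on enough consecutive samples, and treats the CPU and memory rows as independent triggers. The `Sample` type and function names are made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List

SAMPLE_INTERVAL_S = 30  # metrics are checked every 30 seconds


@dataclass
class Sample:
    pending_cpu: float
    free_cpu: float
    pending_memory: float
    free_memory: float


def held_for(samples: List[Sample], condition: Callable[[Sample], bool], seconds: int) -> bool:
    """True if `condition` holds on every sample covering the last `seconds`."""
    needed = seconds // SAMPLE_INTERVAL_S + 1  # e.g. 3 samples span 60 seconds
    recent = samples[-needed:]
    return len(recent) == needed and all(condition(s) for s in recent)


def autoscale_request(samples: List[Sample]) -> str:
    """Return 'scale-up', 'scale-down', or 'none' for samples ordered oldest first."""
    if (held_for(samples, lambda s: s.pending_cpu > s.free_cpu, 60)
            or held_for(samples, lambda s: s.pending_memory > s.free_memory, 60)):
        return "scale-up"
    if (held_for(samples, lambda s: s.pending_cpu < s.free_cpu, 120)
            or held_for(samples, lambda s: s.pending_memory < s.free_memory, 120)):
        return "scale-down"
    return "none"


if __name__ == "__main__":
    busy = Sample(pending_cpu=8, free_cpu=4, pending_memory=10, free_memory=20)
    print(autoscale_request([busy] * 3))
```

Note the asymmetry: scale-up needs one minute of pressure, while scale-down waits two minutes, which avoids releasing nodes during short pauses.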

Dynamic allocation of executors

If enabled, executors are allocated between the configured minimum and maximum. So if the Spark job requires only 2 executors, for example, it will use only 2, even if the maximum is 4. If it requires more, it will scale up to the maximum defined in the configuration.

In short, dynamic allocation, if enabled, lets the number of executors fluctuate within the configured range as the job scales up and down.
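As a rough illustration of that behaviour, the number of executors in use can be modelled as the job's requirement clamped to the configured range. The function names below are hypothetical; the property names in the second function are the standard Apache Spark dynamic allocation settings, shown here only as a plain dictionary rather than applied to a real session.

```python
def executors_in_use(required: int, min_executors: int, max_executors: int) -> int:
    """Clamp the executors a job asks for to the configured [min, max] range."""
    return max(min_executors, min(required, max_executors))


def dynamic_allocation_conf(min_executors: int, max_executors: int) -> dict:
    """Standard Spark properties that describe the same range."""
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }


if __name__ == "__main__":
    # The example from the text: the job needs 2 executors, the maximum is 4.
    print(executors_in_use(required=2, min_executors=1, max_executors=4))
    print(dynamic_allocation_conf(1, 4))
```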

Scenario: putting it all together

A Spark pool is created with Autoscale enabled, with the minimum set to 3 nodes and the maximum to 6. When a session starts or a job is submitted with 2 executors (the default), it will use 3 nodes (1 node is used by the driver). If necessary, it can scale up to 6 nodes and dynamically allocate executors as configured. When the job no longer needs the executors, it will decommission them, and if it does not need the node, it will free up the node. Once a node is in the decommissioning state, no executors can be launched on it. It can take 1 to 5 minutes for a scaling operation to complete.
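The node arithmetic in this scenario can be written down as a short sketch. It assumes one executor per node plus one node for the driver, which is what the 2 executors on 3 nodes example implies; real placement depends on the executor and node sizes chosen. The function name is made up for illustration.

```python
def nodes_needed(executors: int, min_nodes: int = 3, max_nodes: int = 6) -> int:
    """Nodes used by a session: one per executor plus one for the driver,
    kept within the pool's Autoscale limits (assumes one executor per node)."""
    wanted = executors + 1  # +1 node for the driver
    return max(min_nodes, min(wanted, max_nodes))


if __name__ == "__main__":
    for executors in (2, 4, 10):
        print(executors, "executors ->", nodes_needed(executors), "nodes")
```

With the default of 2 executors the session stays at the 3-node minimum; a request for 10 executors would be capped by the 6-node maximum.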

So what is a session?

Session and Spark instance:

A Spark instance is created when a connection to a Spark pool creates a session to run a job.

When a job is submitted, if there is enough capacity in the pool and in the instance, the existing instance will process the job; otherwise, another instance will be launched. If another job is submitted and no capacity is available, the job is rejected if it comes from a notebook and queued if it is a batch job.
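The admission rules just described can be summarised in a few lines of code. This is a simplified model for illustration only: capacity is expressed in vCores, `instance_free` is the spare capacity inside the existing Spark instance, `pool_free` is the pool capacity not yet allocated to any instance, and all names are hypothetical.

```python
from enum import Enum


class Outcome(Enum):
    RUN_ON_EXISTING_INSTANCE = "run on existing instance"
    LAUNCH_NEW_INSTANCE = "launch new instance"
    QUEUED = "queued"
    REJECTED = "rejected"


def admit(job_vcores: int, instance_free: int, pool_free: int, is_notebook: bool) -> Outcome:
    """Decide what happens to a submitted job under the rules in the text."""
    if job_vcores <= instance_free:
        return Outcome.RUN_ON_EXISTING_INSTANCE
    if job_vcores <= pool_free:
        return Outcome.LAUNCH_NEW_INSTANCE
    # No capacity left: notebooks are rejected, batch jobs wait in a queue.
    return Outcome.REJECTED if is_notebook else Outcome.QUEUED


if __name__ == "__main__":
    print(admit(job_vcores=12, instance_free=0, pool_free=8, is_notebook=True))
    print(admit(job_vcores=12, instance_free=0, pool_free=8, is_notebook=False))
```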

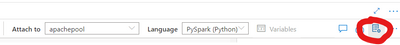

It is possible to define the maximum number of resources to be used per session execution. So, instead of using all resources available per pool configuration, they can be limited by session configuration (Fig 3).

Fig 3

At a high level:

When an Apache Spark pool is added to the workspace, a limit on the available resources is configured for the pool (and it can be changed later): https://docs.microsoft.com/en-us/azure/synapse-analytics/quickstart-create-apache-spark-pool-portal

A cap on resources can be configured per session: https://techcommunity.microsoft.com/t5/azure-synapse-analytics/livy-is-dead-and-some-logs-to-help/ba...
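Besides the Configure session dialog, a per-session cap can also be expressed in a notebook with the `%%configure` magic, which takes a JSON body. The helper below is a sketch that only builds the text of such a cell; the sizes used (4 cores and 28g per driver and executor, 2 executors) are example values chosen for illustration, not recommendations, and should be adapted to the node size of the pool.

```python
import json


def configure_cell(driver_cores: int, driver_memory: str,
                   executor_cores: int, executor_memory: str,
                   num_executors: int) -> str:
    """Build the text of a %%configure cell that limits session resources."""
    settings = {
        "driverCores": driver_cores,
        "driverMemory": driver_memory,
        "executorCores": executor_cores,
        "executorMemory": executor_memory,
        "numExecutors": num_executors,
    }
    # -f forces the session to restart so the new settings take effect.
    return "%%configure -f\n" + json.dumps(settings, indent=4)


if __name__ == "__main__":
    # Example values only; paste the printed text into the first notebook cell.
    print(configure_cell(4, "28g", 4, "28g", 2))
```

The generated cell is meant to run as the first cell of the notebook, before any Spark code starts the session.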

Notebooks:

Notebooks consist of cells, which are individual blocks of code or text that can be run independently or as a group. Synapse Studio, inside the workspace, can be used (Fig 3) to create a notebook and run code against the Apache Spark pool.

Notebooks inside Synapse Studio use AAD pass-through authentication unless the code is customized to work differently. In other words, the AAD user authenticated in the workspace is used to validate the permission to execute the code.

The code can be customized to use a managed identity (MSI) instead of the AAD user.
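One way this is commonly done is to point Spark at a linked service that authenticates with the workspace managed identity. The sketch below is an assumption-laden illustration rather than the original example from this post: the two property names and the token provider class are as I recall them from the Synapse documentation on linked-service-based credentials and should be verified before use, and `my_linked_service` is a hypothetical linked service name.

```python
# Token provider class name as recalled from the Synapse docs; verify before use.
TOKEN_PROVIDER = "com.microsoft.azure.synapse.tokenlibrary.LinkedServiceBasedTokenProvider"


def msi_storage_conf(linked_service_name: str) -> dict:
    """Spark settings that route storage access through a linked service
    (assumed to be configured to authenticate with managed identity)."""
    return {
        "spark.storage.synapse.linkedServiceName": linked_service_name,
        "fs.azure.account.oauth.provider.type": TOKEN_PROVIDER,
    }


if __name__ == "__main__":
    # Inside a Synapse notebook, where `spark` is predefined, the settings
    # would be applied like this:
    #   for key, value in msi_storage_conf("my_linked_service").items():
    #       spark.conf.set(key, value)
    print(msi_storage_conf("my_linked_service"))
```

With these settings in place, reads and writes against the storage account behind the linked service would use the identity configured on that linked service instead of the signed-in AAD user.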

Stopping the execution - IMPORTANT:

Note that once the Apache Spark pool is online, it takes around 30 minutes for an idle session to stop. If, for cost management reasons, you need the session or the pool to stop sooner, follow these 3 simple tips inside Synapse Studio:

1) Configure the notebook session inside Synapse Studio to stop before that time (Fig 4):

Synapse Studio -> Develop -> notebook -> properties (Fig 4) -> Configure Session

Session details (Fig 5):

Fig 5

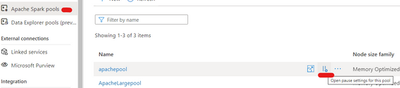

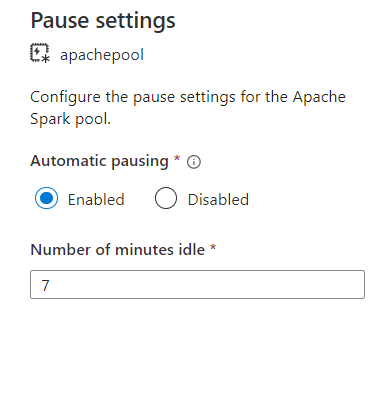

2) Synapse Studio -> Manage -> Apache Spark Pool -> Open pause settings for this pool (Fig 6 and 7).

Fig 6

Fig 7

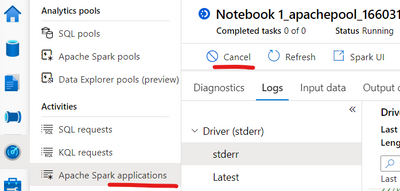

3) Synapse Studio -> Monitor -> Apache Spark Application -> Click on the Job that is running and Cancel it (Fig 8).

Fig 8.

Summary: This post covers the basics of Apache Spark pool setup for Synapse. I hope it helps you manage Synapse Spark pool environments properly.

That is it!

Liliam

UK Engineer

Reference:

Apache Spark core concepts - Azure Synapse Analytics | Microsoft Docs

Automatically scale Apache Spark instances - Azure Synapse Analytics | Microsoft Docs

How to use Synapse notebooks - Azure Synapse Analytics | Microsoft Docs