This post has been republished via RSS; it originally appeared at Microsoft Tech Community - Latest Blogs.

This blog is co-authored with Yufei Xia, Jinzhu Li, Sheng Zhao, Binggong Ding, Nick Zhao, and Deb Adeogba.

Azure Neural Text-to-Speech (Neural TTS) enables users to convert text to lifelike speech. It is used in various scenarios, including voice assistants, content read-aloud capabilities, accessibility tools, and more. During the past few months, Azure Neural TTS has made rapid progress in speech naturalness (see details), voice variety (see details), and language support (see details). In this blog, we introduce the latest updates to the vocoder.

A vocoder is a critical component in TTS that generates synthesized audio samples from linguistic or acoustic features. Thanks to the latest innovation in our HiFiNet vocoder, we are able to upgrade 400+ neural voices in the Azure TTS portfolio to 48kHz, which delivers an exceptional high-fidelity synthetic voice experience to users while keeping high efficiency and scalability.

48kHz voices

The fidelity of audio is an important element of text-to-speech voice quality. High-fidelity voices deliver a richer and more detailed sound with minimal distortion and artifacts. With a higher sampling rate, listeners can hear more subtle details and a timbre closer to the original voice. In sophisticated scenarios like video dubbing, gaming, and singing, where a cleaner and more enjoyable sound experience is strongly desired, higher-fidelity output such as a 48kHz sampling rate makes a world of difference.

We are glad to announce that Azure Neural TTS portfolio voices have been upgraded to 48kHz for the best audio fidelity.

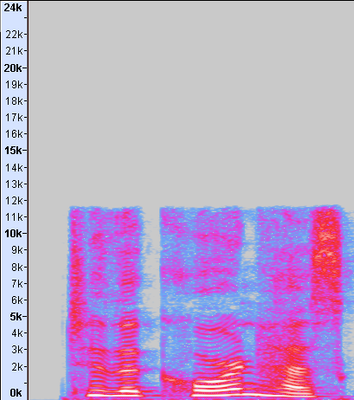

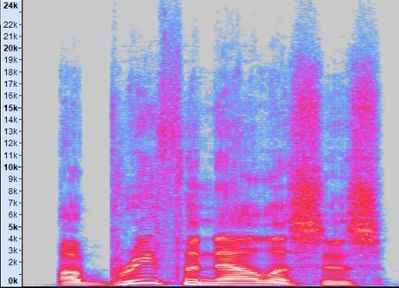

Below are two examples of the 48kHz voices, compared to their 24kHz counterparts. Listen with a hi-fi speaker or headset to hear the difference in audio fidelity between the TTS output at the 24kHz and 48kHz sampling rates. In each example, the audio spectrum is also provided to show how the frequency range differs between the 48kHz and 24kHz sampling rates.

| Speaker | 24kHz voice | 48kHz voice |
|---|---|---|
| Jessa | (audio sample) | (audio sample) |
| Guy | (audio sample) | (audio sample) |

Table 1. 24kHz voices vs 48kHz voices

This update covers more than 400 voices across over 140 languages and variants provided in the Azure Neural TTS portfolio. You can easily check out the voices and create hi-fi audio in 48kHz with the Speech Studio Audio Content Creation tool.

As shown in the images above, audio with a 48kHz sampling rate covers a wider frequency range, which preserves more of the subtle details and nuances of the sound. Such a high sampling rate creates challenges for both voice quality and inference speed. How are these challenges solved? In the sections below, we introduce the technology behind the upgrade: HiFiNet2.
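The difference in frequency range follows from the Nyquist limit: a sampled signal can only represent frequencies up to half its sampling rate. The snippet below is a minimal sketch of that arithmetic; the function name is ours and is not part of any Azure API.

```python
def nyquist_frequency_hz(sampling_rate_hz: float) -> float:
    """Return the highest frequency a given sampling rate can represent."""
    if sampling_rate_hz <= 0:
        raise ValueError("sampling rate must be positive")
    return sampling_rate_hz / 2.0


# Compare the two sampling rates discussed in this post.
for rate in (24_000, 48_000):
    limit = nyquist_frequency_hz(rate)
    print(f"{rate} Hz sampling can represent content up to {limit:.0f} Hz")
```

In other words, 24kHz audio carries content up to 12kHz, while 48kHz audio carries content up to 24kHz, which is the extra band visible in the spectra.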

HiFiNet2: a new-generation neural TTS vocoder

A vocoder is a major component in speech synthesis, or text-to-speech. It turns an intermediate form of the audio, known as acoustic features, into an audible waveform. A neural vocoder is a specific vocoder design that uses deep neural networks, and it is a critical module of Neural TTS.

In an earlier blog, we introduced the first generation of HiFiNet (HiFiNet1), a GAN-based model that supports 24kHz output with improved audio quality and reduced speech synthesis latency.

Figure 1. HiFiNet1 with the full bandwidth model

HiFiNet1 consists of a full-band Generator, which is used to create audio (‘Generated Wave’), and a Discriminator, which is used to identify the gap between the created audio and its training data (‘Real Wave’). HiFiNet1 takes a Mel spectrogram as the input feature. The main structure of the neural vocoder consists of several pre-net layers and upsampling layers. All of the full-band Mel spectrogram information is processed by a single neural vocoder. The output waves of HiFiNet1 are at the 24kHz sampling rate.

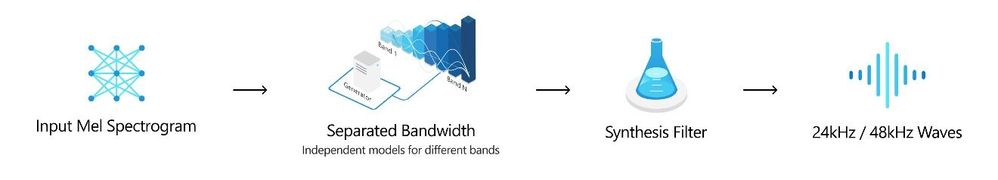

HiFiNet2 still takes a Mel spectrogram as the input feature. However, instead of using one full-band model structure, HiFiNet2 uses different model structures for different frequency bands, and a synthesis filter combines the resulting multiband signals. The output waves of HiFiNet2 can support both 24kHz and 48kHz sampling rates.

Figure 2. HiFiNet2 with the separated bandwidth model
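To illustrate what a synthesis filter does, here is a minimal sketch using a two-band Haar filter bank in plain Python. This post does not describe the actual filter used in HiFiNet2, so the Haar filter and the function names below are assumptions chosen for simplicity. In a vocoder, the sub-band signals would come from the generator networks; here an analysis step produces them only so there is something to recombine.

```python
import math

SQRT2 = math.sqrt(2.0)


def analyze(signal):
    """Split an even-length signal into low and high sub-bands at half the rate."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    low = [(signal[i] + signal[i + 1]) / SQRT2 for i in range(0, len(signal), 2)]
    high = [(signal[i] - signal[i + 1]) / SQRT2 for i in range(0, len(signal), 2)]
    return low, high


def synthesize(low, high):
    """Recombine two half-rate sub-bands into one full-rate signal."""
    if len(low) != len(high):
        raise ValueError("sub-bands must have the same length")
    output = []
    for l, h in zip(low, high):
        output.append((l + h) / SQRT2)  # even-indexed sample
        output.append((l - h) / SQRT2)  # odd-indexed sample
    return output


# Stand-in waveform; a real vocoder would generate the sub-bands directly.
wave = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
low_band, high_band = analyze(wave)
rebuilt = synthesize(low_band, high_band)
print(max(abs(a - b) for a, b in zip(wave, rebuilt)))  # reconstruction error
```

Each sub-band runs at half the output rate, which is why separate models of different sizes can be assigned to each band before the synthesis step merges them.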

HiFiNet2 is still a GAN-based vocoder. The major innovation is the new design of the generator. Unlike HiFiNet1, in which all frequency bands share one network structure, HiFiNet2 applies a different model structure to each band. The separated design makes the computation and inference cost more targeted and efficient.

For the low-frequency band, a sophisticated model structure ensures robust voice quality. For the high-frequency band, a lightweight model structure saves training cost and improves inference speed. As a result, the independent model design improves efficiency and makes the voice output scalable, while keeping the quality of the generated voice on par with the original recording.

Moreover, the separated-bandwidth design not only allows model capacity to be targeted per band, but also serves multiple application needs. Thanks to the HiFiNet2 framework design, the outputs of different models can be combined. For example, bands produced by a universal voice model and bands produced by a single-speaker voice model can be combined at the output layer to generate the final voice.

Benefits of HiFiNet2

With the updated design, HiFiNet2 brings higher voice quality to Azure Neural TTS, while making speech synthesis more efficient and scalable.

Higher voice quality

Compared to HiFiNet1, HiFiNet2 shows better quality. The HiFiNet2 voices show fewer DSATs (for example, glitches, shakiness, and robotic sounds), higher fidelity (at the 48kHz sampling rate), and better similarity (how closely the generated voice matches the original speaker’s timbre). The improvement in voice quality especially benefits scenarios like audiobooks, video dubbing, gaming, and singing, where a more engaging sound experience is expected.

To measure the benefit of HiFiNet2, we conducted several tests covering multiple aspects of voice quality, which yielded positive results. Our tests show that the HiFiNet2 vocoder noticeably improves voice quality compared to HiFiNet1, especially in fidelity. The improvements are measured by CMOS and SMOS (see the note below). With 48kHz features, we get an average CMOS gain of +0.1 and an average SMOS gain of +0.105 on the top 10 platform voices by traffic.

Note:

- CMOS (Comparative Mean Opinion Score) is a well-accepted method in the speech industry for comparing the voice quality of two TTS systems. A CMOS test is like an A/B test, where participants listen to pairs of audio samples generated by two systems and give their subjective opinions on how A compares to B. When the absolute value of a CMOS gap is <0.1, we consider systems A and B to be on par. When the absolute value of a CMOS gap is >=0.1, one system is reported as better than the other. If the absolute value of a CMOS gap is >=0.2, we say one system is significantly better than the other.

- SMOS (Similarity Mean Opinion Score) is a well-accepted method in the speech industry for measuring how similar generated waves are to the original recordings. When the absolute value of an SMOS gap is >=0.1, one system is reported as having higher similarity than the other.
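The CMOS thresholds in the note above can be written as a small helper. This is only a sketch of the interpretation rule as stated in this post; the function name and the returned labels are ours.

```python
def interpret_cmos_gap(gap: float) -> str:
    """Map a CMOS gap between two systems to the verdict used in this post.

    The sign of the gap says which system is preferred; only the
    magnitude decides how strong the verdict is.
    """
    magnitude = abs(gap)
    if magnitude >= 0.2:
        return "significantly better"
    if magnitude >= 0.1:
        return "better"
    return "on par"


# The average CMOS gain reported above for the 48kHz voices.
print(interpret_cmos_gap(0.1))
```

Under this rule, the reported average CMOS gain of +0.1 falls in the "better" band, at its lower boundary.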

Universal voice capability

The HiFiNet2 vocoder is universally applicable across speakers, which means that the vocoder is not speaker-specific. A universal vocoder can be applied to different speakers without any further training or refinement, which saves time and resources.

This capability is critical for some scenarios, for example, building a synthetic voice from just a few recordings (e.g., fewer than 50 recorded sentences) or from data collected with less professional devices. There are two major challenges in building a good vocoder in these situations:

- Limited number of recordings;

- Unprofessional recording quality.

Traditionally, training a neural vocoder from scratch requires a large volume of professional audio training data and takes a long time. A universal vocoder, which can synthesize any speaker’s voice with good and robust quality, makes it possible to build a robust voice in scenarios where the collected data is limited or lacks professional sound quality.

Thanks to HiFiNet2’s universal capability, Custom Neural Voice can support Lite projects (CNV Lite), with which customers can create voices with good sound quality using just 20-50 sentences recorded online as training data.

Faster speed and lower cost

With its multi-band targeted architecture, HiFiNet2 spends its computation budget more efficiently. The parallel model structure allows the model to train and run inference in parallel, which makes the best use of the available compute.

For the 24kHz sampling rate, HiFiNet2 reduces the inference cost by a further 20% compared to HiFiNet1. For the 48kHz sampling rate, it takes only an extra 10% inference cost, without any latency regression, compared with the current 24kHz voices in production.
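To put these percentages in perspective, the arithmetic below normalizes each baseline to 1.0 and works out the relative cost. It is a rough sketch under our own assumption that "inference cost" is measured per second of generated audio; the variable names are illustrative and the figures are the ones quoted above.

```python
# Each baseline is normalized to 1.0 (assumed: cost per second of audio).
HIFINET1_24K_COST = 1.0       # baseline for the 24kHz comparison
PRODUCTION_24K_COST = 1.0     # baseline for the 48kHz comparison

# 24kHz: 20% lower inference cost than HiFiNet1.
hifinet2_24k_cost = HIFINET1_24K_COST * (1 - 0.20)

# 48kHz: 10% higher inference cost than current 24kHz production voices.
hifinet2_48k_cost = PRODUCTION_24K_COST * (1 + 0.10)

# 48kHz audio contains twice as many samples per second as 24kHz audio,
# so the cost per generated sample drops even though the total rises.
samples_ratio = 48_000 / 24_000
cost_per_sample_48k = hifinet2_48k_cost / samples_ratio

print(f"24kHz cost vs HiFiNet1: {hifinet2_24k_cost:.2f}x")
print(f"48kHz cost vs 24kHz production: {hifinet2_48k_cost:.2f}x")
print(f"48kHz cost per sample vs 24kHz production: {cost_per_sample_48k:.2f}x")
```

Under that assumption, doubling the number of output samples for about 1.1x the cost means each generated sample costs roughly 0.55x as much as in the 24kHz production baseline.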

One framework for all scenarios

Previously, a separate vocoder was trained for each application need, such as single-speaker voices, universal voices, customer-trained voices, singing voices, and so on. As application needs grow rapidly, maintaining multiple different vocoder models is not ideal, so organizing and maintaining these models becomes an essential task. HiFiNet2, as a unified vocoder framework, is flexible and scalable enough to handle different challenging tasks, such as 24kHz voices, 48kHz voices, bandwidth extension, and singing. In the sections below, we describe two scenarios: bandwidth extension and singing.

Bandwidth extension

Bandwidth extension technology can help quickly build voices and upgrade existing voices to higher fidelity, and it greatly reduces the go-to-market time for voice upgrades. This is particularly useful for customers who have built their voices with lower-fidelity data and would like to improve the fidelity.

Another typical use case of the bandwidth extension method is audio super-resolution, which boosts lower-fidelity audio to higher fidelity.

Check out the Bandwidth Extension (BWE) demo below to hear how close the 48kHz audio generated with BWE from a 24kHz human recording is to the 48kHz human recording.

| 24kHz recording | 48kHz recording | 48kHz with BWE from 24kHz recording |
|---|---|---|
| (audio sample) | (audio sample) | (audio sample) |

Singing

Singing is a challenging task for speech vocoders, as it requires a stable pitch, accurate reconstruction of long sustained notes, and very high fidelity. In current industry practice and research, state-of-the-art (SOTA) vocoders for singing and for speech use different architectures. In HiFiNet2, the speech generation task and the singing task share one unified model. The separated-bandwidth modeling architecture can closely reproduce the quality of the original singing audio, while keeping the inference speed as fast as for a speech task.

Listen to the samples below to hear how the 24kHz and 48kHz singing voices sound, both generated by the HiFiNet2 vocoder.

| 24kHz sample generated with HiFiNet2 | 48kHz sample generated with HiFiNet2 |
|---|---|
| (audio sample) | (audio sample) |

Create a custom voice with 48kHz vocoder

The HiFiNet2 vocoder can also benefit the Custom Neural Voice capability, enabling organizations to create a unique brand voice in multiple languages for their unique scenarios, with a higher fidelity of 48kHz. Learn more about the process for getting started with Custom Neural Voice, and contact mstts [at] microsoft.com if you want to create your voice in 48kHz.

Get started

With these updates, we’re excited to be powering more natural and intuitive voice experiences for global customers. Users can choose from more than 400 pre-built voices or use our Custom Neural Voice service to create their own synthetic voice instead. To explore the capabilities of Neural TTS, please check out our interactive demo.

For more information:

- Read our documentation

- Check out our quickstarts

- Check out the code of conduct for integrating Neural TTS into your apps