This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

AKS Networking

Available Network Models for AKS

In AKS, you can deploy a cluster that uses one of the following network models, which provide network connectivity for your AKS clusters:

- Kubenet networking:

- The network resources are typically created and configured as the AKS cluster is deployed.

- Conserves IP address space:

- Each pod gets an IP address from a separate (virtual) CIDR range, which is only reachable from within the cluster and can be reused across multiple AKS clusters.

- The additional NAT step adds some network overhead and a small amount of latency; take this into account in scenarios where latency is critical.

- Because of NAT, traffic from pods to destinations outside the cluster uses the node IP as its source IP.

- Kubenet uses a custom route table to redirect requests to pods:

- Challenge #1: You have to manage and maintain user-defined routes (UDRs) yourself.

- Challenge #2: The cluster identity needs permissions on the route table, which you cannot grant in advance when using a system-assigned managed identity, because that identity is only created together with the cluster.

- The recommendation is to use pre-created identities (user-assigned managed identities or service principals).

- Uses Kubernetes internal or external load balancers to reach pods from outside the cluster.

- Maximum of 400 nodes per cluster.

- Azure Container Networking Interface (CNI) networking:

- The AKS cluster is connected to existing virtual network resources and configurations.

- More features are supported (e.g., Windows nodes, AGIC, virtual nodes).

- Each pod gets an IP address from the same address space as the AKS VNET.

- By default, IP addresses are pre-allocated per node based on the number of pods each node can run (the --max-pods parameter), which requires careful IP planning.

- The dynamic IP allocation feature allows better IP reuse across clusters and removes the IP pre-allocation requirement.

- Pods get full virtual network connectivity and can be directly reached via their private IP address from connected networks.

- Requires more IP address space.

The choice of network plugin for your AKS cluster is usually a balance between flexibility and advanced configuration needs. The considerations above help outline when each network model may be the most appropriate.
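To make the IP-planning difference concrete, here is a minimal Python sketch of subnet sizing. It assumes the sizing rule I recall from the AKS documentation for Azure CNI without dynamic IP allocation, (nodes + 1) + (nodes + 1) × max pods, where the extra node covers the surge node used during upgrades, and it assumes kubenet only consumes node IPs from the subnet. It also accounts for the 5 addresses Azure reserves in every subnet. The subnet and node counts are hypothetical; verify the formula against the current documentation before relying on it.

```python
import ipaddress

# Azure reserves 5 addresses in every subnet (network address, default gateway,
# two for Azure DNS, and the broadcast address).
AZURE_RESERVED_IPS = 5


def usable_ips(subnet_cidr: str) -> int:
    """Addresses in an Azure subnet that can actually be assigned."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AZURE_RESERVED_IPS


def required_ips_azure_cni(node_count: int, max_pods: int) -> int:
    """Subnet IPs consumed with Azure CNI in pre-allocation mode (assumed rule).

    Each node takes one IP for itself plus --max-pods IPs reserved for its pods,
    and one extra node is budgeted for the upgrade surge node.
    """
    nodes = node_count + 1
    return nodes + nodes * max_pods


def required_ips_kubenet(node_count: int) -> int:
    """With kubenet only the nodes (plus the surge node) take subnet IPs."""
    return node_count + 1


def max_nodes_azure_cni(subnet_cidr: str, max_pods: int) -> int:
    """Largest node count whose Azure CNI requirement still fits the subnet."""
    # (n + 1) * (max_pods + 1) <= usable  =>  n <= usable // (max_pods + 1) - 1
    return usable_ips(subnet_cidr) // (max_pods + 1) - 1


if __name__ == "__main__":
    subnet = "10.240.0.0/24"  # hypothetical node subnet
    print("usable IPs:", usable_ips(subnet))
    print("Azure CNI, 3 nodes x 30 pods:", required_ips_azure_cni(3, 30))
    print("kubenet, 3 nodes:", required_ips_kubenet(3))
    print("max Azure CNI nodes at 30 pods:", max_nodes_azure_cni(subnet, 30))
```

With these numbers, a /24 leaves 251 usable addresses: 3 Azure CNI nodes at 30 pods each already need 124 of them, while kubenet needs only 4.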

One more thing to keep in mind: although Microsoft tries to keep feature parity between Azure CNI and kubenet, new features are typically released for Azure CNI first and reach kubenet a little later. Some features, such as Windows node pools, are not supported with kubenet at all.

When you create an AKS cluster in kubenet mode, you can pass the --pod-cidr parameter at creation time. It configures the pod CIDR range, which is essentially the address range of the network overlay that kubenet uses. Pods therefore live in their own address range, separate from the VNET subnet that the cluster nodes use.
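As a rough illustration of how this overlay relates to kubenet's route table, the sketch below assumes that kubenet hands each node a /24 slice of --pod-cidr (which, as far as I know, is the default behavior) and derives one route per node: destination is the node's pod range, next hop is the node's VNET IP. The node IPs are hypothetical, and in a real cluster AKS performs this assignment; the code only mirrors the idea.

```python
import ipaddress
from typing import List, Tuple


def plan_kubenet_routes(pod_cidr: str, node_ips: List[str],
                        per_node_prefix: int = 24) -> List[Tuple[str, str]]:
    """Give each node its own pod range carved out of --pod-cidr.

    Every (pod range, node IP) pair is what a kubenet user-defined route expresses:
    "send traffic for this pod range to this node as the next hop".
    """
    per_node_ranges = ipaddress.ip_network(pod_cidr).subnets(new_prefix=per_node_prefix)
    # zip() stops at the shorter input, so a too-small --pod-cidr yields fewer routes.
    routes = [(str(rng), node_ip) for node_ip, rng in zip(node_ips, per_node_ranges)]
    if len(routes) < len(node_ips):
        raise ValueError(f"{pod_cidr} cannot give a /{per_node_prefix} to every node")
    return routes


if __name__ == "__main__":
    # Hypothetical node IPs from the VNET subnet; 10.244.0.0/16 is the usual kubenet default.
    for pod_range, next_hop in plan_kubenet_routes("10.244.0.0/16", ["10.240.0.4", "10.240.0.5"]):
        print(f"{pod_range} -> next hop {next_hop}")
```

Note how the pod addresses (10.244.x.x) never overlap the node subnet (10.240.x.x): that separation is what lets the same pod CIDR be reused across clusters.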

North / South Perspective - Protecting Cluster Subnet with Network Security Groups

From a network security perspective, there are a few important things to know. When you set up AKS, the agent nodes are placed in a subnet of a VNET that you specify. On that subnet, consider adding network security group (NSG) rules to lock down ingress and egress, specifying what is allowed to come into and go out of the subnet. This is the North / South perspective: network traffic entering and leaving the cluster.

You can do that with AKS. What you should not do, though, is set up any NSG rules on the network interface cards (NICs) of the agent nodes in your node pools; that is not supported by AKS. You may put NSGs on the subnet for both inbound and outbound traffic, but make sure you do not block the cluster's management traffic.

East / West Perspective - Securing Pod Traffic with Network Policies

The East / West perspective refers to traffic inside the cluster (i.e., pod-to-pod traffic between applications). You secure it with a network policy provider, which is another recommendation regarding AKS networking.

By default, when you create a cluster, all pods can send and receive traffic without any limitations, which might not be acceptable in some scenarios. To improve security, apply the principle of least privilege to how traffic can flow between pods within the cluster. You achieve this with “Network Policy”, the Kubernetes specification for defining access policies for communication between pods.

You can control both ingress and egress traffic this way. Isolating applications in namespaces (namespaces are how resources in Kubernetes are organized) provides some degree of isolation within the cluster itself, but it does not filter traffic between those namespaces.

You can create a network policy and apply it at the namespace level to secure traffic. For that, you must choose a network policy provider when you create the cluster, by passing the --network-policy parameter to the az aks create command. The provider cannot be enabled on an existing cluster, so if you decide to enable it later, you will have to recreate the cluster.

The possible options in Azure are:

- Calico:

- Open source and widely used in the industry.

- If using kubenet, this is the only supported option for this network model.

- Azure Network Policy Manager (NPM):

- Azure's own solution.

- Translates the Kubernetes network policy model into a set of allowed IP address pairs, which are then programmed as rules in the Linux kernel iptables module.

- These rules are applied to all packets going through the bridge.
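Because the provider is chosen at creation time, it can help to assemble the command in one place. This sketch builds the az aks create argument list and encodes the rule stated above that Calico is the only option with kubenet. The resource group and cluster names are hypothetical, and the script only prints the command rather than running it.

```python
import shlex
from typing import List, Optional

# The two network policy options discussed in this article.
SUPPORTED_POLICIES = {"azure", "calico"}


def aks_create_args(resource_group: str, cluster_name: str, network_plugin: str,
                    network_policy: str, pod_cidr: Optional[str] = None) -> List[str]:
    """Build an `az aks create` argument list with the network policy set up front."""
    if network_policy not in SUPPORTED_POLICIES:
        raise ValueError(f"unknown network policy: {network_policy}")
    if network_plugin == "kubenet" and network_policy != "calico":
        # Per this article, Calico is the only supported option with kubenet.
        raise ValueError("kubenet clusters only support the calico network policy")
    args = ["az", "aks", "create",
            "--resource-group", resource_group,
            "--name", cluster_name,
            "--network-plugin", network_plugin,
            "--network-policy", network_policy]
    if pod_cidr is not None:
        args += ["--pod-cidr", pod_cidr]  # overlay range used by kubenet
    return args


if __name__ == "__main__":
    # Hypothetical resource group and cluster names.
    cmd = aks_create_args("rg-aks-demo", "aks-demo", "kubenet", "calico", "10.244.0.0/16")
    print(shlex.join(cmd))
```

Failing fast on an unsupported combination is cheaper than discovering it after creation, when changing the provider would mean recreating the cluster.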

Once a network policy provider is enabled in your AKS cluster, you can define network policies and enforce communication rules between namespaces in Kubernetes. The general recommendation is to enable Calico, as it supports additional policy types such as global network policies. The AKS documentation includes a feature matrix comparing the capabilities of Azure NPM and Calico network policies.

Network policies by default work at Layer 3 / 4, so they can't be used to create Layer 7 rules. If you do not specify anything, no network policy provider is deployed. To check which network policy provider, if any, is enabled for your cluster, run the az aks show command and look at the networkPolicy value in the networkProfile section of its output.
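A minimal sketch of namespace isolation, written as a Python dict that serializes to a networking.k8s.io/v1 NetworkPolicy: every pod in a hypothetical backend namespace only accepts ingress traffic from pods in a hypothetical frontend namespace. It relies on the kubernetes.io/metadata.name label that recent Kubernetes versions add to every namespace automatically. Since kubectl accepts JSON manifests, the output can be piped to kubectl apply -f -.

```python
import json


def allow_from_namespace_policy(target_ns: str, allowed_ns: str) -> dict:
    """NetworkPolicy: pods in target_ns accept ingress only from pods in allowed_ns."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-from-{allowed_ns}", "namespace": target_ns},
        "spec": {
            "podSelector": {},           # empty selector = every pod in target_ns
            "policyTypes": ["Ingress"],  # this policy restricts ingress only
            "ingress": [{
                "from": [{
                    "namespaceSelector": {
                        "matchLabels": {"kubernetes.io/metadata.name": allowed_ns}
                    }
                }]
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespaces.
    print(json.dumps(allow_from_namespace_policy("backend", "frontend"), indent=2))
```

Once a pod is selected by a policy with the Ingress policy type, any ingress traffic that no policy explicitly allows is denied. That is what turns this allow rule into isolation between namespaces.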
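If you want to script that check, here is a small sketch that reads the JSON printed by az aks show --output json and extracts networkProfile.networkPolicy. The function names are hypothetical, the sample JSON in the main block is a trimmed, made-up illustration of the output shape, and treating the value "none" like an empty value is an assumption about newer API versions.

```python
import json
import subprocess
from typing import Optional


def network_policy_from_show(show_json: str) -> Optional[str]:
    """Extract the network policy provider from `az aks show --output json`."""
    profile = json.loads(show_json).get("networkProfile") or {}
    policy = profile.get("networkPolicy")
    # Missing, empty, or "none" is treated here as "no provider deployed".
    return None if policy in (None, "", "none") else policy


def current_network_policy(resource_group: str, cluster_name: str) -> Optional[str]:
    """Run `az aks show` (needs an authenticated Azure CLI) and parse its output."""
    result = subprocess.run(
        ["az", "aks", "show", "--resource-group", resource_group,
         "--name", cluster_name, "--output", "json"],
        check=True, capture_output=True, text=True,
    )
    return network_policy_from_show(result.stdout)


if __name__ == "__main__":
    # Trimmed, hypothetical sample of the JSON shape returned by `az aks show`.
    sample = ('{"name": "aks-demo", "networkProfile": '
              '{"networkPlugin": "kubenet", "networkPolicy": "calico"}}')
    print(network_policy_from_show(sample))
```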

Expose Kubernetes services with Ingress Controllers

One last note regarding Kubernetes networking: exposing every external Kubernetes service as a LoadBalancer-type service is not good practice, because it doesn't scale well. The recommended approach is to expose a single entry point to the cluster and then use Layer 7 load balancing rules to route traffic to the right service (of type ClusterIP).

For this in Kubernetes we use Ingress Controllers:

- The Kubernetes project itself supports and maintains only a few ingress controllers (AWS, GCE, and nginx); other ingress controllers are developed and maintained by third parties.

- The decision on which ingress controller to use depends on the workload requirements; each ingress controller supports different Layer 7 capabilities.

- In Azure, Microsoft provides the Application Gateway Ingress Controller (AGIC):

- It has some limitations in terms of capabilities, but also some advantages, such as removing network hops in the cluster, because Application Gateway sends traffic directly to the pod IPs.

- It is useful if you're planning to use Application Gateway with WAF enabled anyway.
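As an illustration of the single-entry-point pattern, here is a sketch that generates a networking.k8s.io/v1 Ingress routing two hypothetical paths to two hypothetical ClusterIP services. The ingress class name nginx is an assumption; the right value depends on which controller you install and how (AGIC, for example, registers its own ingress class).

```python
import json
from typing import Dict, Tuple


def path_based_ingress(name: str, namespace: str, host: str,
                       routes: Dict[str, Tuple[str, int]],
                       ingress_class: str = "nginx") -> dict:
    """One Ingress (the single entry point) fanning out to ClusterIP services by path."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    # Hypothetical host and services; the JSON can be piped to `kubectl apply -f -`.
    ingress = path_based_ingress(
        "shop", "shop", "shop.example.com",
        {"/api": ("orders-api", 8080), "/": ("web-frontend", 80)},
    )
    print(json.dumps(ingress, indent=2))
```

Only the ingress controller needs a LoadBalancer-type service; the backends stay ClusterIP, so adding another application means adding a path rule instead of another public IP.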

Private connectivity

In AKS, it is generally recommended to deploy a private cluster.

Enabling Private Clusters and Additional Considerations

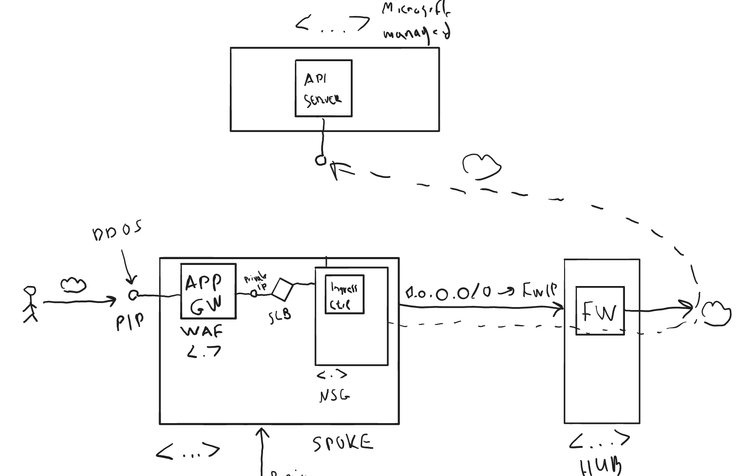

When you create a Kubernetes cluster in Azure, a couple of things happen. By default, a “system node pool” is created, and if needed you can deploy additional node pools, called “user node pools”. Those are the worker nodes where your application workloads run. The other part Kubernetes requires to function properly is the control plane, whose key component is the API server. The control plane is deployed and managed for you by Microsoft.

Once you create a cluster, your node pools are created inside your Azure VNET, and a Kubernetes API server, which plays the role of the control plane for your cluster, is deployed in a Microsoft-managed VNET. Your worker nodes, which run the kubelet agent in your VNET, communicate with the API server in the Microsoft-managed VNET. By default, this communication happens over the public internet, through a public FQDN exposed by the API server. Although access through this FQDN requires authentication, anyone on the internet can still attempt to connect to it.

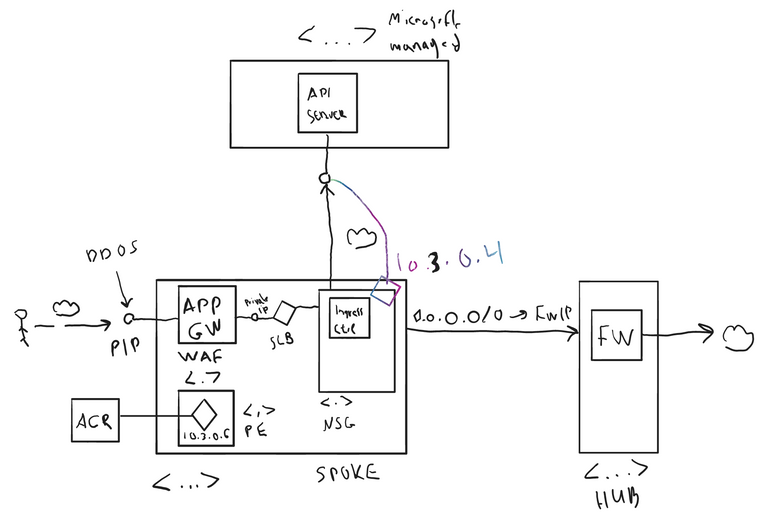

In the above example, all egress traffic from the cluster is configured to go through the firewall to reach any destination on the internet (as shown in the "Egress" section of the previous article in this blog post series), so traffic to the cluster's API server follows this path too. Keep in mind that, in this case, you must allow this egress traffic to the API server in the firewall.

If you open a deployed cluster in the portal, the overview blade of the AKS cluster shows the API server address, which, as mentioned above, is a public FQDN by default (the “API server address” value in the image below). Anyone on the internet can resolve it to its public IP, send requests to it, and so on.

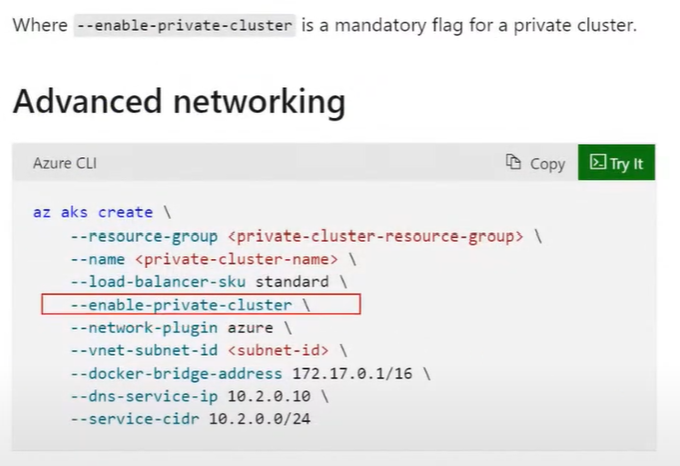

The alternative, and the recommendation here, is to use a private cluster instead. A private cluster does not expose the API server publicly; the API server can only be accessed from within the VNET where your AKS cluster is deployed. The API server is an extremely sensitive and highly privileged endpoint: anyone who manages to connect to it with sufficient credentials can do anything in the cluster.

To do that, pass the --enable-private-cluster parameter to az aks create when you create your cluster.

This uses the Azure Private Link service to connect the API server to the agent nodes running in your virtual network over a private endpoint, and no public endpoint is exposed for the API server. As a consequence, to manage the cluster you need a bastion server (i.e., jump box) connected to your virtual network (probably in the hub VNET), or some other way to connect to the AKS VNET or a VNET peered with it. You cannot connect to the API server from outside those networks, because the control plane's API server no longer has a public endpoint.

Additionally, for DevOps and CI/CD pipelines, you need self-hosted build agents running inside the AKS cluster's virtual network, or in a peered VNET, to do your deployments, because once again there is no exposed public endpoint. If, for example, you use Microsoft-hosted agents for your deployments, they will not work in the private cluster scenario.

Authorized IPs / IP ranges - Securing Public Clusters

If you do choose a publicly facing cluster, though, you absolutely want to lock down the public IP of your API server so that it can only be accessed from authorized IPs / IP ranges. To do that, pass the --api-server-authorized-ip-ranges option to the az aks create command when you create the cluster.

You can also apply this to an existing cluster by passing the same --api-server-authorized-ip-ranges option to the az aks update command.

In the above example (i.e., egress traffic configured to go through the firewall before reaching its destination), you would whitelist only the public IP(s) of the firewall, because those are the IPs your worker nodes use for egress. In addition, any developers who want to connect from their local machines must have their client IPs whitelisted.
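A small sketch of the allow-list logic: the is_authorized function mimics locally what an authorized-IP restriction does (a client is allowed if its IP falls inside any listed range; this is an illustration, not how the API server implements it), and aks_update_args builds the az aks update command with a validated, comma-separated list. The addresses are hypothetical documentation ranges standing in for the firewall's egress IP and a developer's client IP.

```python
import ipaddress
import shlex
from typing import Iterable, List


def normalize_ranges(ranges: Iterable[str]) -> str:
    """Validate CIDRs / single IPs and join them into a comma-separated list."""
    # ip_network() rejects malformed input and ranges with host bits set;
    # a bare IP address becomes a /32.
    return ",".join(str(ipaddress.ip_network(r)) for r in ranges)


def is_authorized(client_ip: str, ranges: Iterable[str]) -> bool:
    """True if client_ip falls inside any authorized range."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(r) for r in ranges)


def aks_update_args(resource_group: str, cluster_name: str,
                    ranges: Iterable[str]) -> List[str]:
    return ["az", "aks", "update",
            "--resource-group", resource_group,
            "--name", cluster_name,
            "--api-server-authorized-ip-ranges", normalize_ranges(ranges)]


if __name__ == "__main__":
    # Hypothetical: the firewall's egress IP and one developer's client IP.
    allowed = ["203.0.113.10", "198.51.100.25/32"]
    print(shlex.join(aks_update_args("rg-aks-demo", "aks-demo", allowed)))
    print(is_authorized("203.0.113.10", allowed))  # firewall egress IP: allowed
    print(is_authorized("192.0.2.50", allowed))    # any other client: rejected
```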

API Server VNET Integration (Preview)

Microsoft also has something new in the pipeline. In the previous case, you used the Private Link service to connect securely to the API server of the cluster's control plane. There is now also a feature in preview called “API Server VNET Integration”: instead of using the Private Link service, the API server is injected into a subnet of your own VNET, so all traffic flows within your VNET itself.

Use a Private DNS Zone for a private cluster

In a landing zone topology, the private DNS zone will probably be pre-provisioned and passed to az aks create through the --private-dns-zone parameter at cluster creation time, instead of letting AKS create one for you.

Disable public FQDN resolution for a private cluster

Explicitly disable public FQDN resolution using the --disable-public-fqdn parameter, either at cluster creation or on an existing cluster. Otherwise, the public FQDN resolves to the private IP of the API server, potentially leaking information about your network.
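Putting the private cluster parameters together, this sketch assembles an az aks create command with --enable-private-cluster, --private-dns-zone and --disable-public-fqdn. The names and the DNS zone resource ID are hypothetical placeholders (including the assumed privatelink.&lt;region&gt;.azmk8s.io zone name). Depending on your setup, further parameters, for example an identity with permissions on a custom zone, may be required; they are left out here.

```python
import shlex
from typing import List


def private_cluster_args(resource_group: str, cluster_name: str,
                         private_dns_zone: str = "system") -> List[str]:
    """`az aks create` arguments for a private cluster without public FQDN resolution.

    private_dns_zone is either "system" (AKS creates the zone) or the resource ID
    of a pre-provisioned private DNS zone, e.g. one supplied by a landing zone.
    """
    return ["az", "aks", "create",
            "--resource-group", resource_group,
            "--name", cluster_name,
            "--enable-private-cluster",
            "--private-dns-zone", private_dns_zone,
            "--disable-public-fqdn"]


if __name__ == "__main__":
    # Hypothetical resource ID of a landing-zone-provided private DNS zone.
    zone_id = ("/subscriptions/<subscription-id>/resourceGroups/rg-dns/providers/"
               "Microsoft.Network/privateDnsZones/privatelink.westeurope.azmk8s.io")
    print(shlex.join(private_cluster_args("rg-aks-demo", "aks-demo", zone_id)))
```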

Connect to other Azure PaaS services securely

Using Private Endpoints

Another interesting networking aspect to look at is connecting from your AKS cluster to other Azure PaaS services. For example, you will most probably want to connect to Azure Container Registry (ACR) to pull the images for your pods. Again, by default all that connectivity goes over the public internet: to connect to your ACR, you would use its public FQDN, which resolves to a public IP.

You want all those services to be accessible privately, only from within your VNET, and locked down as much as possible. In Azure, if you're using a public PaaS service like Azure SQL DB, Azure Key Vault, Azure Storage, or ACR, the way to do that is to connect to it privately from your applications whenever possible. So, leverage the “Private Endpoints” feature and the “Private Link” service as much as possible to lock down your Kubernetes cluster and everything it connects to.

To drill down on the ACR PaaS component: you would deploy a private endpoint inside the VNET where the AKS cluster resides (in a dedicated subnet for private endpoints). The ACR then gets a private IP address from the VNET range (e.g., 10.3.0.6), so connectivity between the AKS cluster and the ACR stays private to the VNET.

Microsoft has two kinds of services in Azure:

- Services that can be injected into your VNET:

- e.g., AKS Node Pools.

- This is possible because the VMs in a node pool are dedicated to you, so their network interfaces can be placed directly in your VNET.

- Multi-tenant services:

- Services whose underlying hosts run resources that belong to several customers.

- Microsoft cannot place these hosts into your VNET, so they are placed in a managed VNET that Microsoft owns.

- e.g., ACR, Key Vault, Service Bus, Azure Storage:

- In all those services, by default, communication with them happens across the public internet.

- But they all support private connectivity through private endpoints.

- So, for any multi-tenant service that you want to access from an AKS cluster, you can, and are recommended to, use private connectivity.

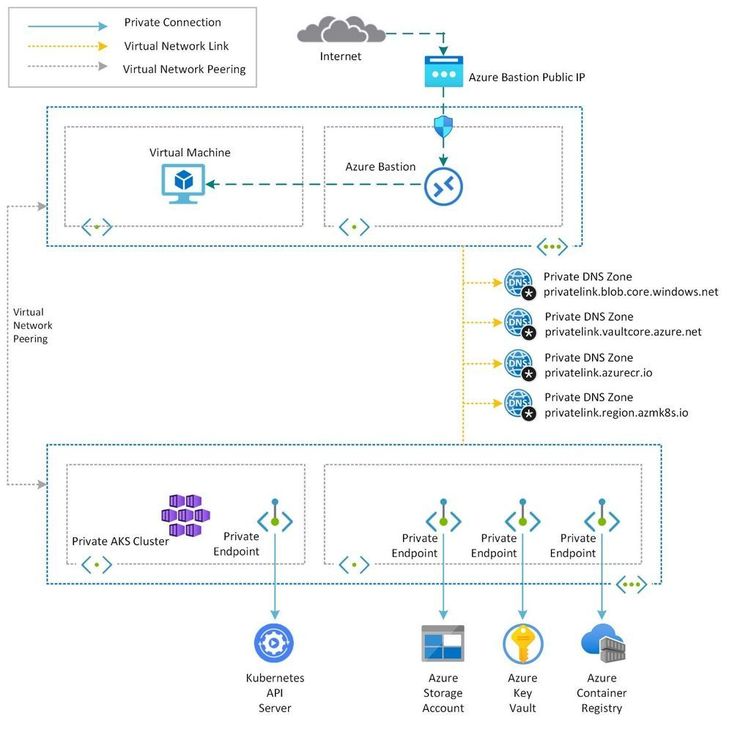

Once you create a private endpoint for a particular service in Azure, anything in the VNET where the private endpoint is placed uses that private IP to connect to the service. The issue begins with clients outside the VNET (e.g., developers, external users, or even build agents) that try to access the service through its public FQDN.

For example, in an AKS scenario a build agent needs to connect to ACR to push an image. A couple of prerequisites exist for the build agent to reach the ACR with a private endpoint configured:

- The build agent first needs a way to be routed to the ACR's private IP, either:

- Through a VPN Gateway / ExpressRoute connection, or

- By residing inside a peered VNET.

- The build agent needs to connect to the ACR using its public FQDN, and that FQDN must resolve to the ACR's private IP:

- If the public FQDN of the ACR is something like demo123.azurecr.io, it should resolve to its private IP (e.g., 10.3.0.6, as in the image above).

- This happens automatically inside the VNET where the private endpoint of the ACR is placed, because when you create the private endpoint, Azure typically also creates a “private DNS zone” and links it to the VNET.

- This private DNS zone is authoritative for the privatelink.azurecr.io zone. Public DNS answers a lookup for demo123.azurecr.io with a CNAME to demo123.privatelink.azurecr.io, so any attempt to resolve that FQDN from within the VNET where the private endpoint is located, and the private DNS zone is linked, ends up in this private DNS zone, which points to the private IP address of the ACR.

- Outside of the VNET, on the other hand, the same DNS resolution process by default ends up at the public IP of the ACR, which is blocked once public network access to the registry is disabled (as is usual when you provision a private endpoint for it).

- This, of course, needs to be changed so that the FQDN also resolves to the private IP of the service.
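To verify the DNS setup from a given machine (a build agent, a jump box, or a developer laptop), this quick sketch resolves a registry FQDN and reports whether the answers are private or public addresses. It uses only Python's standard socket and ipaddress modules; demo123.azurecr.io is the example name from above, and "private" here means an address Python's ipaddress module classifies as private (for example, 10.0.0.0/8).

```python
import ipaddress
import socket
import sys
from typing import List


def resolve_ipv4(fqdn: str) -> List[str]:
    """Resolve fqdn to its IPv4 addresses using this machine's own DNS settings."""
    infos = socket.getaddrinfo(fqdn, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def classify(addresses: List[str]) -> str:
    """'private' if every answer is a private IP, 'public' if none is, else 'mixed'."""
    if not addresses:
        return "unresolved"
    flags = [ipaddress.ip_address(a).is_private for a in addresses]
    if all(flags):
        return "private"
    if not any(flags):
        return "public"
    return "mixed"


if __name__ == "__main__":
    # Usage: python check_dns.py demo123.azurecr.io
    if len(sys.argv) > 1:
        name = sys.argv[1]
        answers = resolve_ipv4(name)
        print(name, answers, classify(answers))
```

Run inside the VNET (or from a peered network with working DNS forwarding), the expected result is "private"; a "public" result from a build agent means its DNS still resolves the registry to the blocked public endpoint.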

In the above diagram, you can see a bastion server running in a hub VNET, which is peered to a spoke VNET that contains the AKS cluster. Private endpoints are used, first, to connect to the API server, because this is a private cluster, and second, to connect to other services like Storage, Key Vault, and ACR. That is the architecture you want to push for: connecting to all of those services from your cluster privately.

Use of the internal Firewall Feature of the PaaS services

If, for any reason, you cannot or do not want to use private endpoints for these PaaS services that cannot be VNET-injected into your own network, keep in mind that most of them support a built-in firewall feature. If you still want to use public connectivity, you can configure that firewall to whitelist only your outbound IPs (if you know and control them), so that nothing else can reach their public endpoints.

Use of Service Endpoints

Service endpoints offer a simple alternative to private connectivity, but they're not recommended: a service endpoint gives your subnet a path to every instance of the target service, not only to yours, which leaves room for data exfiltration, and they also create some challenges when routing requests to the services. Still, they are easy to configure (no DNS nightmare) and could be used in certain scenarios (e.g., when there is no egress traffic control via a firewall).

AKS Design Review Series - Contents

- Part 1.1: Networking - Ingress / Egress

- Part 1.2: Networking - AKS Networking & Private Connectivity (current)