This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Authors:

At FastTrack for Azure, we assist customers in maximizing their success and efficiency when using Azure cloud. In addition to working directly with customers, we also create content to help other customers identify opportunities for improvement and document these in product features or documents. While it may seem that larger customers have more potential for impact, it is not always the case. The size of a customer does not necessarily have a direct correlation with the impact our team can have on their business.

Case in question – Afimilk (words from the customer)

Afimilk is a small independent software vendor (ISV) that provides solutions for dairy farms. Our development team is small, so we work with partners in areas where we do not have in-house expertise. One of our fundamental areas of focus is helping farmers determine the optimal time for cow insemination to increase milk production. We are also modernizing our solution by moving it from on-premises to the cloud. As a small company seeking to expand our customer base, we were looking for a cost-effective way to collect data from dairy farms, clean and organize it, and allow data scientists to use it to build better models.

Our success story of Afimilk

We have had the pleasure of working with Afimilk for the past few months, during which time we have focused on areas such as security, networking, and data partitioning. The customer has been open and proactive in sharing their concerns, resulting in many feedback items and content contributions. In fact, we have received over 12 feedback items, with most of them being accepted, and have also received a full end-to-end sample and this post. It has been a highly productive and collaborative partnership.

Afimilk requirements

Let us discuss the capabilities and features of a system – can these capabilities address the needs of other industries? (Spoiler alert – YES, they can)

- Thousands of similar data sources, with low change rate

- Over 3000 sources, with < 20 daily small files per location

- Cost effective

- Use serverless compute where possible

- Use low-cost data repositories

- Hourly refresh rate is sufficient

- Ease of operation, since the number of resources can increase, a solid operational model is required.

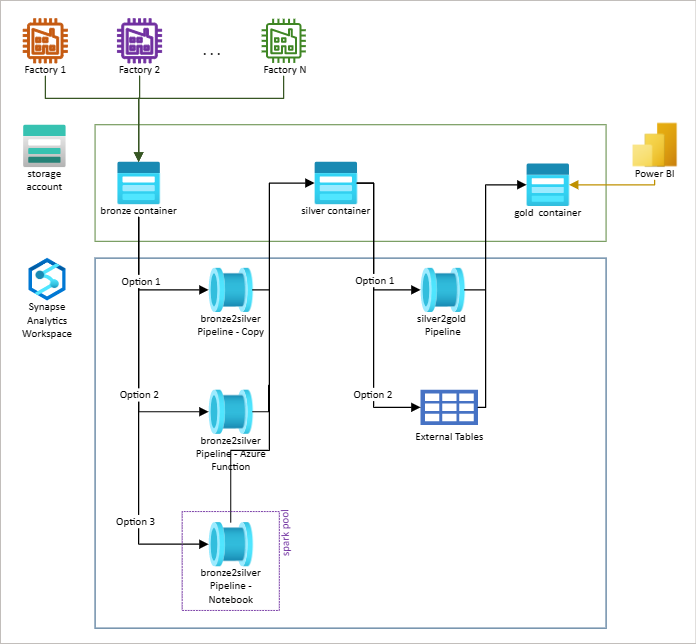

Our solution - Serverless Modern Data Warehouse

During our discussions with Afimilk (and other customers) it was evident that the need for a cost-effective solution would be beneficial for many more customers. We wanted to create a fully operational solution, addressing implementation areas such as building the environment using IaC (infrastructure as code), or using DevOps actions/activities to deploy the solution.

Technology stack

- Synapse pipelines - orchestrator

- Azure Storage with hierarchal namespace support – main data store

- Data Format – Parquet (original files were zipped JSON (JavaScript Object Notation) files

- Azure Function – provide cost-effective solution for individual file processing

- Power BI – data visualization

- DevOps – combination of Bicep scripts and GitHub Actions

Solution options

- Bronze to Silver

- Copy activity – A Copy activity will read a control table and will be used to move the unprocessed files in the bronze container to the silver container. Ideal when the is not any business logic being applied.

- Azure Function – An Azure function will read a control table and will be used to move the unprocessed files in the bronze container to the silver container after applying some complex business logic.

- Notebook – Same as azure function but ideal for large amount of data.

- Silver to Gold

- Store Procedure – A serverless stored procedure will be used to create the aggregated results.

- External Tables – External tables will be used to create the aggregated results.

To learn about the details of the solution, check out Serverless Modern Data Warehouse Sample using Azure Synapse Analytics and Power BI.

Key takeaways

- To minimize costs when using Synapse pipelines or Azure Data Factory pipelines, it is advisable to avoid individual (per file) activities and to repartition data into a minimal number of larger files (ideally, 200MB or larger).

- When working with many small files, performance may be improved on platforms such as Databricks and Serverless SQL by using larger files.

- Managed VNet (Virtual Network) Integration Runtime may not be the most efficient or secure option for all users, especially those with a lower volume of data and activities. In these cases, consider using self-hosted Integration Runtime, which allows for customization of VM (virtual machines) size, operational hours, and network security posture. Check out a quick start guide for setting up self-hosted runtime with Synapse.