This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

I have a customer with some very large storage accounts, and of course, as the size of an account grows, so does the cost. Customers can use Blob Lifecycle Management rules to control when blobs are moved to a lower tier (hot -> cool -> archive), and they can also use blob inventory rules to analyze the blobs contained in a storage account. Each blob has an access tier property that denotes which tier of storage the blob is stored in. This post automates the retrieval of those details and publishes them into a Log Analytics workspace for analysis and reporting.

The basic flow for this process is as follows:

- Enable blob inventory rules on the storage accounts you are interested in.

- Add an Event Grid subscription to each storage account to detect when the inventory has completed.

- Trigger an Azure Function to analyze the inventory report.

- Upload the results into Log Analytics.

As always, the code is available on GitHub for your use.

Enabling Blob Inventory Rules

The Azure Storage blob inventory feature provides an overview of your containers, blobs, snapshots, and blob versions within a storage account. These rules run once per day and can take several days to run for a large storage account. The resulting inventory report is then stored in CSV format in the storage account.

I use Azure Policy to deploy both a container to store the results in (the default container name is statistics) and the blob inventory rule itself. You can find the policy here and deploy it using any method. When you assign the policy, you can choose which storage accounts to target for the reports. This way, if you only want to target storage accounts that have lifecycle management enabled, you can.
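For reference, the inventory rule the policy deploys can be expressed as a policy body like the one built below. This is a minimal sketch based on the blob inventory policy JSON schema as I understand it, not the author's policy definition: the rule name `accesstier-inventory` is hypothetical, restricting the rule to block blobs is an assumption, and the field names should be checked against the current Azure Storage documentation.

```python
import json

# Hypothetical rule name; "statistics" is the default container name used by the policy.
RULE_NAME = "accesstier-inventory"
DESTINATION_CONTAINER = "statistics"


def build_inventory_policy(rule_name: str, destination: str) -> dict:
    """Build a blob inventory policy body that reports each blob's size and access tier.

    Field names follow the blob inventory policy schema as I understand it;
    verify them against the current Azure Storage documentation before use.
    """
    return {
        "enabled": True,
        "type": "Inventory",
        "rules": [
            {
                "enabled": True,
                "name": rule_name,
                "destination": destination,  # container that receives the reports
                "definition": {
                    "format": "Csv",
                    "schedule": "Daily",
                    "objectType": "Blob",
                    # Assumption: only block blobs, since access tiers apply to them.
                    "filters": {"blobTypes": ["blockBlob"]},
                    # Name is always reported; the other two fields feed the statistics.
                    "schemaFields": ["Name", "Content-Length", "AccessTier"],
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_inventory_policy(RULE_NAME, DESTINATION_CONTAINER), indent=2))
```

The printed JSON can be fed to whichever deployment tool you prefer if you want to test a rule by hand on a single account before assigning the policy.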

The image below shows the deployed inventory rule. The important part is the pair of fields I’m retrieving: Content Length and Access Tier.

Once the policy is deployed and the storage accounts have been remediated, the inventory reports will start to run. Expect to wait at least 24 hours for a small storage account, and possibly several days for accounts containing millions of items. When an inventory rule has run, it generates an event, which we capture with Event Grid to trigger the Azure Function.
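Because the function only needs Content Length and Access Tier, its core calculation is a sum of blob sizes per tier. Here is a minimal Python sketch of that step, assuming the CSV report uses the schema field names `Content-Length` and `AccessTier` as column headers; the sample rows are made up, and this is an illustration rather than the author's function code.

```python
import csv
import io
from collections import defaultdict


def totals_by_tier(csv_text: str) -> dict:
    """Sum Content-Length per AccessTier across the rows of one inventory CSV file."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Blobs without an explicit tier may report an empty AccessTier value.
        tier = row.get("AccessTier") or "Unknown"
        size = row.get("Content-Length") or "0"
        totals[tier] += int(size)
    return dict(totals)


# Made-up sample report with the two columns the rule retrieves.
sample = """Name,Content-Length,AccessTier
logs/a.json,1024,Hot
logs/b.json,2048,Cool
backup/c.bak,4096,Archive
logs/d.json,512,Hot
"""

print(totals_by_tier(sample))  # {'Hot': 1536, 'Cool': 2048, 'Archive': 4096}
```

For a large account the report is split across several files, so the per-file totals would be added together before publishing.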

Deploying the Azure Function

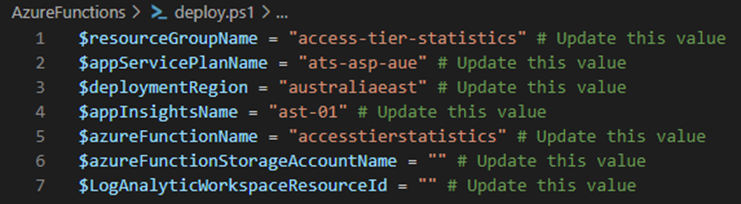

Before the Event Grid subscription is deployed, we need to deploy the Azure Function. I’ve included a script to provision it for you. Update the parameters at the top of the script so that the function is deployed to the correct resource group with the correct name. The script deploys the App Service plan and the Azure Function app, and then deploys the required code.

IMPORTANT NOTE: If you are going to take inventory of storage accounts that are accessed through private endpoints, you will need to deploy the App Service plan with virtual network integration enabled. This requires either a Functions Premium plan or at least a Basic App Service plan. This will increase the cost of the solution; however, network connectivity is required because the function must be able to access the data plane of the storage account. If you have an existing App Service plan or Functions plan that can host this solution, I would reuse it rather than create a new one.

Update the values in the deploy.ps1 file before running it. The script deploys a resource group, a storage account, an App Service plan and the function code, and it assigns the Log Analytics Contributor role on the Log Analytics workspace specified in the script.

Lastly, you must give the Azure Function’s managed identity the Storage Account Contributor role on every storage account for which you have enabled blob inventory rules. When the inventory completes, the function is triggered, and it must be able to read the inventory manifest and the associated report files to gather the statistics.
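To show what "read the inventory manifest" involves, here is a minimal Python sketch that turns a manifest into the list of report file URLs to download. It assumes the manifest fields `status`, `endpoint`, `destinationContainer` and `files[].blob` described in the blob inventory documentation; the account name and paths in the sample are hypothetical, and downloading the files is left out.

```python
import json


def report_urls(manifest_text: str) -> list:
    """Return the URL of every report file listed in a blob inventory manifest.

    Assumes the manifest fields `status`, `endpoint`, `destinationContainer`
    and `files[].blob` described in the blob inventory documentation.
    """
    manifest = json.loads(manifest_text)
    if manifest.get("status") != "Succeeded":
        raise ValueError(f"inventory run did not succeed: {manifest.get('status')!r}")
    base = manifest["endpoint"].rstrip("/")
    container = manifest["destinationContainer"]
    return [f"{base}/{container}/{entry['blob']}" for entry in manifest.get("files", [])]


# Hypothetical manifest for an account named "contosodata".
sample_manifest = json.dumps({
    "destinationContainer": "statistics",
    "endpoint": "https://contosodata.blob.core.windows.net",
    "files": [{"blob": "2024/01/15/run/report_0.csv", "size": 1234}],
    "status": "Succeeded",
})

print(report_urls(sample_manifest))
# ['https://contosodata.blob.core.windows.net/statistics/2024/01/15/run/report_0.csv']
```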

Deploying Event Grid Subscriptions

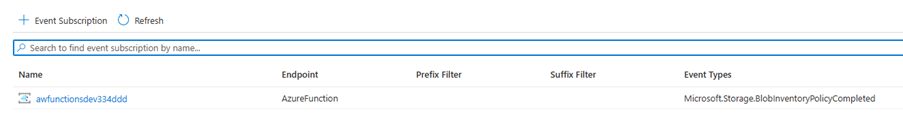

To trigger the Azure Function, we listen for the event that is generated when the inventory is complete. Again using Azure Policy, we create an Event Grid subscription that listens for the “Microsoft.Storage.BlobInventoryPolicyCompleted” event. You can deploy this policy using any method, and again it lets you target the specific storage accounts for which the subscription is created.
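On the receiving side, the function only needs a couple of fields from that event. A minimal Python sketch, assuming the Event Grid event schema in which the payload sits under `data` with `policyRunStatus` and `manifestBlobUrl`; the sample event is hypothetical and trimmed to the fields used.

```python
from typing import Optional

EXPECTED_EVENT_TYPE = "Microsoft.Storage.BlobInventoryPolicyCompleted"


def manifest_url_from_event(event: dict) -> Optional[str]:
    """Return the manifest URL from a successful inventory-completed event, or None.

    Assumes the Event Grid event schema, where the payload sits under "data"
    and carries `policyRunStatus` and `manifestBlobUrl`.
    """
    if event.get("eventType") != EXPECTED_EVENT_TYPE:
        return None  # some other storage event; ignore it
    data = event.get("data") or {}
    if data.get("policyRunStatus") != "Succeeded":
        return None  # failed or partial run; nothing reliable to analyze
    return data.get("manifestBlobUrl")


# Hypothetical event, trimmed to the fields used above.
event = {
    "eventType": EXPECTED_EVENT_TYPE,
    "data": {
        "accountName": "contosodata",
        "policyRunStatus": "Succeeded",
        "manifestBlobUrl": "https://contosodata.blob.core.windows.net/statistics/manifest.json",
    },
}
print(manifest_url_from_event(event))
```

Ignoring unsuccessful runs up front keeps a failed inventory from writing misleading totals into Log Analytics.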

When supplying a value for the “functionResourceId” parameter of the policy, make sure you provide the resource ID of the function itself rather than the function app. To find it, open the function app, select Functions, and then select the AccessTierStatistics function. You can then click JSON View and copy the resource ID.
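If you prefer to build the value rather than copy it, a function's resource ID is the function app's ID with `/functions/<name>` appended. The Python sketch below composes one and flags the common mistake of passing the function app's ID instead; the subscription, resource group and app names are hypothetical placeholders.

```python
import re

FUNCTION_ID_PATTERN = re.compile(
    r"^/subscriptions/[^/]+/resourceGroups/[^/]+"
    r"/providers/Microsoft\.Web/sites/[^/]+/functions/[^/]+$",
    re.IGNORECASE,
)


def function_resource_id(subscription_id: str, resource_group: str,
                         function_app: str, function_name: str = "AccessTierStatistics") -> str:
    """Compose the resource ID of a single function inside a function app."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Web/sites/{function_app}/functions/{function_name}")


def is_function_id(resource_id: str) -> bool:
    """True for a function's ID; False for, e.g., the function app's own ID."""
    return bool(FUNCTION_ID_PATTERN.match(resource_id))


# Hypothetical names; substitute your own.
fid = function_resource_id("00000000-0000-0000-0000-000000000000", "rg-stats", "func-accesstier")
print(fid)
print(is_function_id(fid))
```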

When the policy is deployed and has been remediated, it creates an Event Grid system topic called “accesstierstatistics” for each storage account, and then creates an Event Grid subscription with the same name as the storage account, like below.

All that is left to do is wait for the inventory rules to run and trigger the function. As mentioned earlier, this can take 24 hours or more, so be patient.

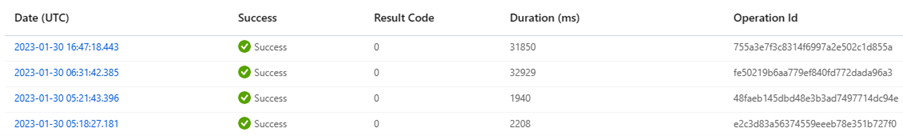

A successful inventory generates an event that triggers the Azure Function, so by reviewing the function executions you can follow the process through its steps.

Viewing the Results

After the wait, I can see several successful function executions, which tells me there should be some data in Log Analytics.

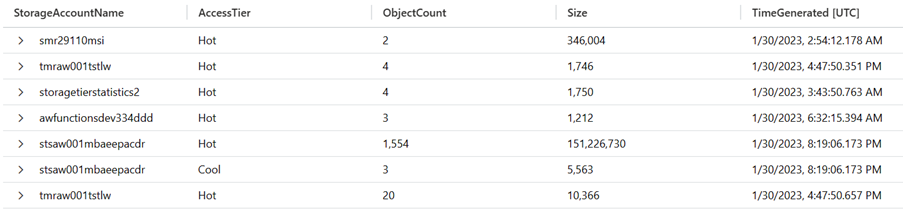

The data is written to a custom table called AccessTierStatistics_CL, and each record contains the total size of all objects grouped by access tier.
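The post doesn't spell out how the records reach the table. The `_CL` suffix is what the legacy Azure Monitor HTTP Data Collector API appends to custom log types, so here is a sketch of building per-tier records and signing a request for that API, under the assumption that it is the mechanism used. The column names (`StorageAccount`, `AccessTier`, `TotalBytes`), workspace ID and key are hypothetical placeholders, and Microsoft recommends the Logs Ingestion API for new solutions.

```python
import base64
import hashlib
import hmac
import json
import urllib.request
from email.utils import formatdate

LOG_TYPE = "AccessTierStatistics"  # the Data Collector API stores it as AccessTierStatistics_CL


def build_records(account: str, totals: dict) -> list:
    """One record per access tier for a storage account (hypothetical column names)."""
    return [
        {"StorageAccount": account, "AccessTier": tier, "TotalBytes": size}
        for tier, size in sorted(totals.items())
    ]


def signed_headers(workspace_id: str, shared_key: str, body: bytes, rfc1123_date: str) -> dict:
    """Build Data Collector API headers, signed with HMAC-SHA256 using the workspace key."""
    string_to_sign = (
        f"POST\n{len(body)}\napplication/json\nx-ms-date:{rfc1123_date}\n/api/logs"
    )
    digest = hmac.new(
        base64.b64decode(shared_key), string_to_sign.encode("utf-8"), hashlib.sha256
    ).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return {
        "Content-Type": "application/json",
        "Authorization": f"SharedKey {workspace_id}:{signature}",
        "Log-Type": LOG_TYPE,
        "x-ms-date": rfc1123_date,
    }


workspace_id = "00000000-0000-0000-0000-000000000000"       # placeholder
shared_key = base64.b64encode(b"placeholder-key").decode()   # placeholder, not a real key
body = json.dumps(build_records("contosodata", {"Hot": 1536, "Cool": 2048})).encode("utf-8")
headers = signed_headers(workspace_id, shared_key, body, formatdate(usegmt=True))
url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
request = urllib.request.Request(url, data=body, headers=headers, method="POST")
# urllib.request.urlopen(request) would send it; omitted here because the key is fake.
```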

This data can be used for reporting or for visualizations in a dashboard or workbook. As your Blob Lifecycle Management policies move blobs between storage tiers, you build up a timeline and a visual representation of how the blobs in each storage account are distributed across tiers. This data can also help when purchasing storage reservations or with cost management for storage accounts.
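Two numbers are especially handy in such a report: each tier's share of the stored bytes, and how much moved between two inventory runs. In a workbook you would likely compute these with a Log Analytics query; this Python sketch only illustrates the arithmetic, and the totals in it are made-up numbers.

```python
def tier_share(totals: dict) -> dict:
    """Percentage of stored bytes in each tier, rounded to one decimal place."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {tier: 0.0 for tier in totals}
    return {tier: round(100 * size / grand_total, 1) for tier, size in totals.items()}


def tier_change(previous: dict, current: dict) -> dict:
    """Bytes gained (+) or lost (-) per tier between two inventory runs."""
    tiers = set(previous) | set(current)
    return {tier: current.get(tier, 0) - previous.get(tier, 0) for tier in sorted(tiers)}


# Illustrative totals for two consecutive daily runs (made-up numbers).
yesterday = {"Hot": 6000, "Cool": 1680}
today = {"Hot": 1536, "Cool": 2048, "Archive": 4096}

print(tier_share(today))              # {'Hot': 20.0, 'Cool': 26.7, 'Archive': 53.3}
print(tier_change(yesterday, today))  # {'Archive': 4096, 'Cool': 368, 'Hot': -4464}
```

A negative value for Hot alongside positive values for Cool and Archive is the pattern you would expect once lifecycle rules start moving data.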

Troubleshooting

Because the inventory runs only once per day, troubleshooting this process can be a little time consuming, so I’ve listed below some of the issues I ran into to make them easier to diagnose.

- Azure Function identity permissions. The function’s managed identity must have Storage Account Contributor on each storage account and Log Analytics Contributor on the Log Analytics workspace.

- Firewalls on storage accounts. As mentioned earlier, if your storage accounts use firewalls or private endpoints, host the function on a plan that can be integrated into a virtual network, such as a Functions Premium plan or at least a Basic App Service plan.
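For the permissions issue, it can help to compare the identity's role assignments against what the function needs. This Python sketch assumes assignments in the shape returned by `az role assignment list --assignee <principal-id> --all` (objects with `roleDefinitionName` and `scope`); all resource IDs are hypothetical. It treats an assignment at a parent scope, such as a resource group, as covering the resource, because Azure RBAC assignments are inherited by child scopes.

```python
REQUIRED_STORAGE_ROLE = "Storage Account Contributor"
REQUIRED_WORKSPACE_ROLE = "Log Analytics Contributor"


def covers(scope: str, resource_id: str) -> bool:
    """True if an assignment at `scope` applies to `resource_id` (same or parent scope)."""
    if not scope:
        return False  # missing scope; never treat it as a match
    scope = scope.lower().rstrip("/")
    resource_id = resource_id.lower()
    return resource_id == scope or resource_id.startswith(scope + "/")


def has_role(assignments: list, role: str, resource_id: str) -> bool:
    return any(
        a.get("roleDefinitionName") == role and covers(a.get("scope", ""), resource_id)
        for a in assignments
    )


def missing_roles(assignments: list, storage_account_ids: list, workspace_id: str) -> list:
    """List the role assignments the function identity still needs."""
    gaps = [
        f"{REQUIRED_STORAGE_ROLE} missing on {sid}"
        for sid in storage_account_ids
        if not has_role(assignments, REQUIRED_STORAGE_ROLE, sid)
    ]
    if not has_role(assignments, REQUIRED_WORKSPACE_ROLE, workspace_id):
        gaps.append(f"{REQUIRED_WORKSPACE_ROLE} missing on {workspace_id}")
    return gaps


# Hypothetical resource IDs and assignments.
sub = "/subscriptions/00000000-0000-0000-0000-000000000000"
sa1 = f"{sub}/resourceGroups/rg-data/providers/Microsoft.Storage/storageAccounts/contosodata"
sa2 = f"{sub}/resourceGroups/rg-archive/providers/Microsoft.Storage/storageAccounts/contosoarchive"
ws = f"{sub}/resourceGroups/rg-monitor/providers/Microsoft.OperationalInsights/workspaces/law-stats"
assignments = [
    {"roleDefinitionName": REQUIRED_STORAGE_ROLE, "scope": f"{sub}/resourceGroups/rg-data"},
    {"roleDefinitionName": REQUIRED_WORKSPACE_ROLE, "scope": ws},
]
for gap in missing_roles(assignments, [sa1, sa2], ws):
    print(gap)
```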

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.