This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

Today, we are excited to announce the public preview of confidential containers on Azure Container Instances (ACI), which have been in limited preview since May 2022. Confidential containers on ACI give customers the ability to leverage the latest AMD SEV-SNP technology to secure their containerized workloads.

Azure customers are increasingly turning to cloud-native, container-based applications to support their workloads. However, these customers are also seeking cloud hosting options that offer the highest levels of data protection, which often require complex infrastructure management and expertise. To address these challenges, we developed confidential containers on Azure Container Instances. This serverless platform runs Linux containers within a hardware-based and attested Trusted Execution Environment (TEE), providing the simplicity of a serverless container platform with the enhanced security of confidential computing. TEEs are secured execution environments that provide runtime protection for your containers, helping to protect data in use and the code that is initialized.

Customers can lift and shift their containerized Linux applications or build new confidential computing applications without needing to adopt specialized programming models to achieve the benefits of confidentiality in a TEE. Confidential containers on ACI can protect data in use by processing it in encrypted memory. In addition to data confidentiality, ACI supports verifiable execution policies that enable customers to verify the integrity of their workloads, helping to prevent untrusted code from running. Because ACI is a fully managed serverless container platform, it makes confidential computing easier to adopt quickly while still providing features to build on concepts like remote attestation. Get started with a tutorial to deploy a confidential container on ACI.

ACI Trusted Execution Environment

Hardware-based and attested Trusted Execution Environment

Customers need assurance that the data being processed in their environment is protected. Confidential containers on ACI leverage memory encryption and integrity protection currently enabled by AMD EPYC™ processors. Data in use is protected in encrypted memory with a hardware-managed key unique to each container group, which helps provide protection against data replay, corruption, remapping, and aliasing-based attacks.

Verifiable container initialization policies

Customers want the ability to ensure that code executing in their environment is trusted and verified before beginning to process data. Confidential containers on ACI can run with verifiable initialization policies that enable customers to have control over what software and actions are allowed as part of the container launch. These execution policies help to protect against bad actors creating unexpected application modifications that could potentially leak sensitive data. Execution policies are authored by the customer through provided tooling and are verified through cryptographic proof.
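To make the idea of verifying a policy through cryptographic proof concrete, here is a minimal sketch. This post does not describe the proof format, so the sketch assumes that a SHA-256 digest of the policy text is the value a verifier compares against; the policy text and the function names `policy_digest` and `policy_matches` are hypothetical and are not part of the provided tooling.

```python
import hashlib
import hmac


def policy_digest(policy_text: str) -> str:
    """Return the SHA-256 hex digest of a policy document."""
    return hashlib.sha256(policy_text.encode("utf-8")).hexdigest()


def policy_matches(policy_text: str, reported_digest_hex: str) -> bool:
    """Compare a locally computed digest with a digest reported by the platform."""
    expected = policy_digest(policy_text)
    # Constant-time comparison avoids leaking how many leading characters match.
    return hmac.compare_digest(expected, reported_digest_hex.lower())


# Hypothetical policy text; real policies are generated by the provided tooling.
example_policy = 'allowed_images := ["example.azurecr.io/app:1.0"]'

# Stand-in for a digest that would be read from a signed attestation report.
reported = policy_digest(example_policy)

print(policy_matches(example_policy, reported))        # True
print(policy_matches(example_policy + " ", reported))  # False
```

Any change to the policy, even a single trailing space, produces a different digest, which is why a digest bound into a hardware-signed report can serve as evidence of exactly which policy was enforced at launch.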

Remote guest attestation

Confidential containers on ACI provide support for remote guest attestation, which is used to verify the trustworthiness of a container group. A client can review the attestation report to ensure they trust the application running in a container group before sending any sensitive data. Container groups generate an AMD SEV-SNP attestation quote, which is signed by the AMD hardware and includes information about the hardware it is running on and all the software components, including container configurations. Optionally, this hardware attestation quote can then be verified by the Microsoft Azure Attestation service via an open-source sidecar application before any sensitive data is processed in the container group.
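The client-side pattern described above, checking attestation results before releasing sensitive data, can be sketched as follows. This is an illustration under stated assumptions, not the sidecar's or the attestation service's API: the `AttestationClaims` fields are hypothetical placeholders, and the sketch assumes the report's signature has already been verified elsewhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationClaims:
    """Hypothetical claims, assumed to be signature-verified already."""
    tee_type: str
    policy_digest: str
    debug_enabled: bool


def is_trusted(claims: AttestationClaims, expected_tee: str, expected_policy_digest: str) -> bool:
    """Return True only if every claim matches what the client expects."""
    return (
        claims.tee_type == expected_tee
        and claims.policy_digest == expected_policy_digest
        and not claims.debug_enabled
    )


def send_if_trusted(claims, expected_tee, expected_policy_digest, payload, send):
    """Call send(payload) only after the claims pass the trust check."""
    if not is_trusted(claims, expected_tee, expected_policy_digest):
        raise PermissionError("attestation claims do not match expectations; refusing to send data")
    return send(payload)


# Usage with placeholder values; a list stands in for a network call.
sent = []
good = AttestationClaims(tee_type="sevsnp", policy_digest="abc123", debug_enabled=False)
send_if_trusted(good, "sevsnp", "abc123", b"sensitive", sent.append)
print(len(sent))  # 1
```

The check fails closed: if any claim differs from what the client expects, the payload is never passed to `send`.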

Scenarios for confidential containers on Azure Container Instances

Today, we see customers using ACI for a wide spectrum of scenarios including batch processing, data processing pipelines, and continuous integration. With confidential containers on ACI, we are excited to support new scenarios. Below are a few from customers who are leveraging confidential containers on ACI today.

Data clean rooms for multi-party data analytics and machine learning training

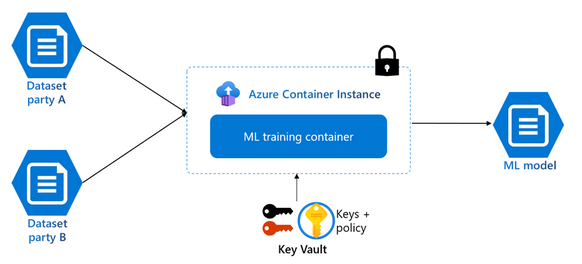

Business transactions and project collaborations often require sharing confidential data among multiple parties. This data may include personal information, financial information, and medical records, all of which need to be protected from unauthorized access. Confidential containers on ACI combine hardware-based, attested TEEs with remote attestation so that customers can process training data from multiple sources without exposing input data to other parties. This enables organizations to get more value from combined datasets while maintaining control over access to sensitive information, which makes confidential containers on ACI ideal for multi-party data analytics scenarios such as confidential machine learning.

A machine learning training model runs in a confidential clean room using sensitive data from multiple parties to generate a model.

Confidential inference

ACI provides fast and easy deployments, flexible resource allocation, and pay-per-use pricing, which positions it as a great platform for confidential inference workloads. With confidential containers on ACI, model developers and data owners can collaborate while protecting the intellectual property of the model developer and helping keep the data used for inferencing secure and private. Check out a sample deployment of confidential inference using confidential containers on ACI.

Machine learning inference model running in a Trusted Execution Environment.

Partner Testimonials

Get Started with Confidential Containers on Azure Container Instances

This confidential serverless offering is now available to all Azure customers! To learn more:

- Visit the Getting Started guide on Microsoft Docs.

- Learn more about pricing details on the Azure Container Instances pricing page.

- Try out the Machine Learning Inference Demo

- Leverage the ACI Confidential Sidecars

- Learn about Azure Confidential Computing

- Learn about Microsoft Azure Attestation