This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Azure Cognitive Service for Vision offers innovative AI models that bridge the gap between the digital and physical worlds. At the core of these services is Florence, a multi-modal foundation model. Florence is the backbone of Azure’s Vision Services: it converts images and video streams into structured data that businesses can build on across a wide range of scenarios. Trained on billions of text-image pairs, it supports image classification and object detection, and it can be customized to fit the needs of specific industries and domains.

Until now, model customization required large datasets, with hundreds of images per label, to reach production quality for vision tasks. With the Florence model, custom models can achieve high quality when trained with just a few images. This approach, called few-shot learning, lowers the bar for creating models that fit challenging use cases.

For example, you could create a custom object detection model that spots shipping containers stacked at height to identify safety risks, or a custom image classification model that checks how full crates are for loss prevention and restocking, or that inspects products for defects.

Step 1: Select the vision task

First, decide which vision task fits your use case, based on the output you need from the model. Will your model detect objects, and therefore return labeled regions inside the image, or will it classify the image as a whole?

Let’s take the example of training a custom model that detects commercial drones. Assume the model processes images collected by a camera installed at the location where the drones are expected to fly.

All of this is possible when you bring your own data to train the model, and thanks to few-shot learning, the model can achieve high quality with just a few images. I’ll show you how.

If you are distinguishing images that contain a drone from images where no drone is present, you can train an Image Classification model. Assume that the custom labels for this use case are ‘drone’ and ‘clear sky’, and that the model outputs one classification label for each processed image.

Alternatively, depending on how far away the drone is from the camera, you can train an object detection model, which returns the regions in the image where the drone appears.

For this use case, assume there is a single custom label, ‘drone’.

Step 2: Create a training dataset

To create a training dataset, associate the custom labels with your images. Few-shot learning makes it possible to train with as few as two images per label, but a variety of images is still recommended so that the model generalizes to the conditions it will encounter in production. Take lighting and weather conditions into account, and collect images at a range of distances between the camera and the object to improve the custom model’s accuracy. Ideally, capture the images with the same camera you plan to use for live streaming. Store the images in an Azure Blob Storage container.

To get started, go to the “Detect common objects in images” page in Vision Studio and select the Train a custom model link.

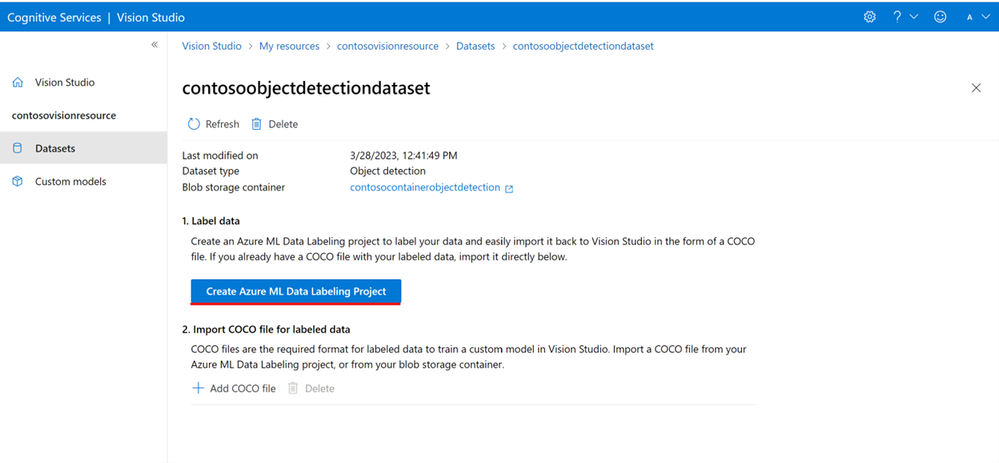

Create a dataset of type “Object Detection” and select the Azure Blob Storage container where your images are saved.

Step 3: Label the images in the training dataset

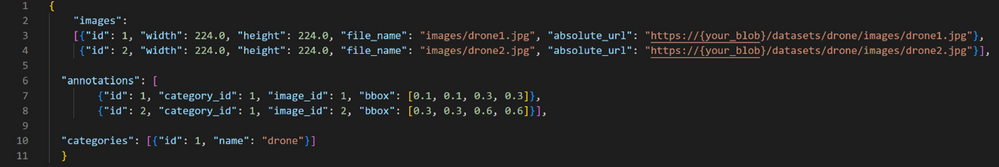

If you already have labels, you can provide them as a COCO file saved in the same Azure Blob Storage container as your training images.
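If you prepare the COCO file yourself, the sketch below shows the general shape of a COCO object detection file for the ‘drone’ label. It follows the standard COCO layout, with boxes given as pixel `[x, y, width, height]`. The helper names, file names, and image sizes are hypothetical, and the Vision documentation describes any additional fields that model customization expects (for example, URLs pointing at the images in your Blob Storage container), so treat the exact field set here as an assumption.

```python
import json


def build_coco(images, category_names):
    """Build a minimal COCO object detection dict (standard COCO fields only).

    `images` is a list of dicts with `file_name`, `width`, `height`, and `boxes`,
    where each box is a (label, x, y, width, height) tuple in pixels.
    """
    categories = [{"id": i, "name": name} for i, name in enumerate(category_names, start=1)]
    category_ids = {category["name"]: category["id"] for category in categories}
    coco = {"images": [], "annotations": [], "categories": categories}
    annotation_id = 1
    for image_id, image in enumerate(images, start=1):
        coco["images"].append({
            "id": image_id,
            "file_name": image["file_name"],
            "width": image["width"],
            "height": image["height"],
        })
        for label, x, y, w, h in image["boxes"]:
            coco["annotations"].append({
                "id": annotation_id,
                "image_id": image_id,
                # Raises KeyError if a box uses a label missing from category_names.
                "category_id": category_ids[label],
                "bbox": [x, y, w, h],
                "area": w * h,
                "iscrowd": 0,
            })
            annotation_id += 1
    return coco


def save_coco(coco, path):
    """Write the COCO dict to disk as JSON, ready to upload next to the images."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(coco, f, indent=2)
```

For instance, `build_coco([{"file_name": "sky-0001.jpg", "width": 1920, "height": 1080, "boxes": [("drone", 812, 240, 96, 54)]}], ["drone"])` yields one image, one ‘drone’ category, and one annotation.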

You can also use Azure Machine Learning to label your images. On the dataset details page, select Add a new Data Labeling project, give it a name, and select Create a new workspace. This opens a new Azure portal tab where you can create the Azure Machine Learning workspace.

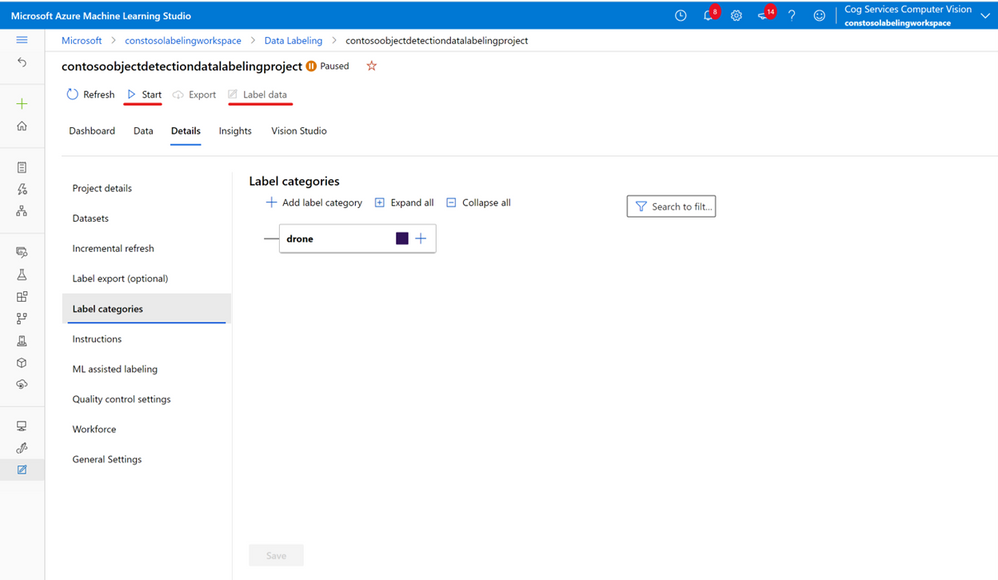

In your Azure Machine Learning labeling project, follow the Add label categories prompt to add label classes.

Once you've added the ‘drone’ label category, save it, select Start on the project, and then select Label data at the top.

Follow the prompts to label all of your images. In this example we show a label category of type Object detection.

When you're finished labeling your images in the dataset, return to the Vision Studio tab in your browser.

For more information about the steps required to create the training dataset, you can visit this documentation page.

Step 4: Import the labels into your dataset

In your training dataset, select Add COCO file, then select Import COCO file from an Azure ML Data Labeling project. This imports the labeled data from Azure Machine Learning.

Give the COCO file a name, select the same Azure Machine Learning labeling project, and import the COCO file into the Azure Blob Storage container for this dataset.
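Before training, it can help to sanity-check the COCO file locally: every annotation should point at an image and a category that exist, and each label should appear in at least two images, the few-shot minimum mentioned in Step 2. The check below is a hypothetical helper, not part of Vision Studio, and it assumes the file uses the standard COCO `images`, `annotations`, and `categories` fields.

```python
import json
from collections import defaultdict


def images_per_category(coco):
    """Count distinct images per category name, rejecting dangling references."""
    image_ids = {image["id"] for image in coco["images"]}
    names = {category["id"]: category["name"] for category in coco["categories"]}
    images_by_name = defaultdict(set)
    for annotation in coco["annotations"]:
        if annotation["image_id"] not in image_ids:
            raise ValueError(f"annotation {annotation['id']} refers to unknown image {annotation['image_id']}")
        if annotation["category_id"] not in names:
            raise ValueError(f"annotation {annotation['id']} refers to unknown category {annotation['category_id']}")
        images_by_name[names[annotation["category_id"]]].add(annotation["image_id"])
    # Categories that have no annotations at all are reported with a count of zero.
    return {name: len(images_by_name[name]) for name in names.values()}


def labels_below_minimum(coco, minimum=2):
    """Return the sorted label names that appear in fewer than `minimum` images."""
    counts = images_per_category(coco)
    return sorted(name for name, count in counts.items() if count < minimum)


def check_coco_file(path, minimum=2):
    """Load a COCO JSON file from disk and report under-represented labels."""
    with open(path, encoding="utf-8") as f:
        return labels_below_minimum(json.load(f), minimum)
```

An empty list from `check_coco_file("drone_train.coco.json")` (a hypothetical file name) means every label clears the minimum; more images per label than the minimum is still better for accuracy.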

Step 5: Train a custom model

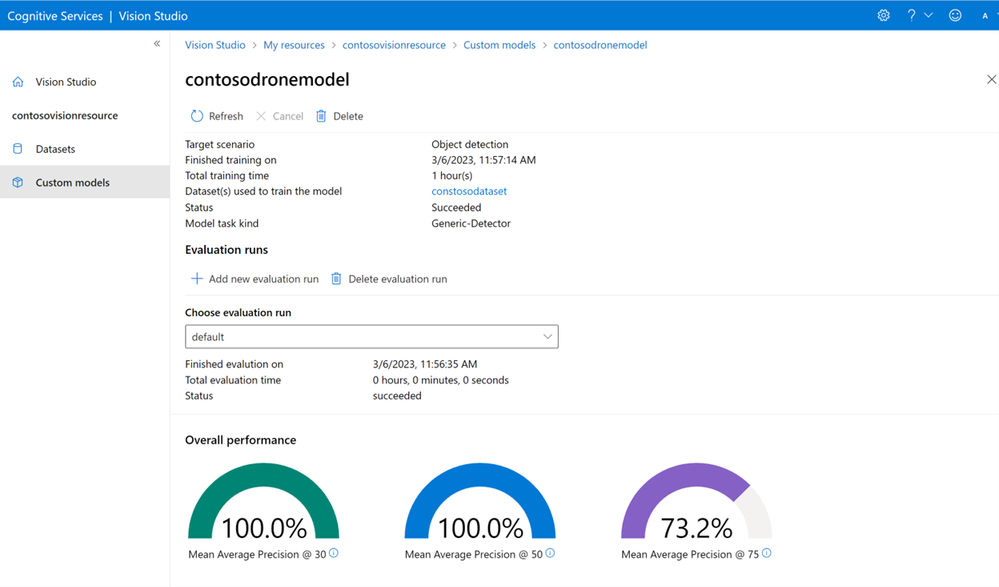

Once labeling is complete, you can use the training dataset to train your custom model. Start by entering a name for the model.

Next, select the training dataset that contains your labeled data.

Then select a training budget appropriate to the size of the training dataset. It is safe to set the budget higher than you expect to need: training stops once all images in the training dataset have been used, and you are charged only for the actual training time.

After training finishes, you can review the model’s accuracy metrics, which are calculated from the training dataset.

Use the model for inference

Once the model is trained, you can call it for inference through the Image Analysis API. For details on invoking the Image Analysis SDK with custom models, visit this page.
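As an alternative to the SDK, the sketch below calls the Image Analysis REST endpoint using only the Python standard library. The route, the `2023-02-01-preview` API version, the `model-name` query parameter, the `Ocp-Apim-Subscription-Key` header, and the `customModelResult` response fields are assumptions based on the preview REST API and should be verified against the current reference. The endpoint, key, and model name are placeholders, and the helper functions are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed preview API version; check the Image Analysis REST reference for the current one.
API_VERSION = "2023-02-01-preview"


def build_analyze_url(endpoint, model_name):
    """Build the assumed Image Analysis 'analyze' URL for a custom model."""
    query = urllib.parse.urlencode({"api-version": API_VERSION, "model-name": model_name})
    return f"{endpoint.rstrip('/')}/computervision/imageanalysis:analyze?{query}"


def analyze_image_url(endpoint, key, model_name, image_url):
    """POST an image URL the service can reach to the custom model; return parsed JSON."""
    request = urllib.request.Request(
        build_analyze_url(endpoint, model_name),
        data=json.dumps({"url": image_url}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def extract_detections(result, min_confidence=0.5):
    """Flatten object detection output into (label, confidence, (x, y, w, h)) tuples."""
    objects = result.get("customModelResult", {}).get("objectsResult", {}).get("values", [])
    detections = []
    for obj in objects:
        box = obj["boundingBox"]
        for tag in obj.get("tags", []):
            if tag["confidence"] >= min_confidence:
                detections.append((tag["name"], tag["confidence"], (box["x"], box["y"], box["w"], box["h"])))
    return detections


def top_tag(result):
    """Return (name, confidence) of the best classification tag, or None if absent."""
    tags = result.get("customModelResult", {}).get("tagsResult", {}).get("values", [])
    if not tags:
        return None
    best = max(tags, key=lambda tag: tag["confidence"])
    return best["name"], best["confidence"]
```

With a hypothetical object detection model named `drone-detector`, `extract_detections(analyze_image_url(endpoint, key, "drone-detector", image_url))` returns the drone boxes above the confidence threshold, while `top_tag(...)` suits the ‘drone’ / ‘clear sky’ classification model, which returns one label per image.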

You can use custom models to analyze videos as well as images: sample frames from the video and process each frame with the Image Analysis SDK, exactly as you would a single image.
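How often to sample is a trade-off between cost and how quickly a drone is noticed. A minimal sketch, assuming you know the video’s frame rate and frame count: the hypothetical `frames_to_sample` helper picks one frame every `every_seconds` seconds. Decoding and encoding the selected frames (for example with OpenCV) is left out; each selected frame is then sent to the model like any single image.

```python
def frames_to_sample(total_frames, fps, every_seconds):
    """Return (frame_index, timestamp_seconds) pairs, one frame per `every_seconds`."""
    if fps <= 0 or every_seconds <= 0:
        raise ValueError("fps and every_seconds must be positive")
    # Number of frames between samples, never less than one.
    step = max(1, round(fps * every_seconds))
    return [(index, index / fps) for index in range(0, total_frames, step)]
```

For a 10-second clip at 30 frames per second, `frames_to_sample(300, 30, 2)` selects frames 0, 60, 120, 180, and 240, so five calls to the model instead of 300.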

You can also try the model in Vision Studio on the “Detect common objects in images” page: select the model you trained from the drop-down menu, then bring an image to run inference and see the results.

Step 6: Migrate Custom Vision projects to the latest Model Customization

If you have existing models trained with the Azure Custom Vision Service, you can migrate their labeled data to train new models with the latest Model Customization. To do so, create an Azure Blob Storage container to receive the labeled images, with their labels converted to COCO format. Once the labeled images are migrated, follow the same steps listed above to train a new model. This article provides sample code for migrating the labeled images.
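The sample code in that article covers the full migration. At its core is a coordinate conversion: Custom Vision describes each region with `left`, `top`, `width`, and `height` normalized to the 0 to 1 range, while standard COCO boxes are in pixels. The hypothetical helpers below sketch only that step, assuming each region is a dictionary with those four keys plus a `tagName`, and that you already know each image’s pixel dimensions.

```python
def region_to_coco_bbox(region, image_width, image_height):
    """Convert a normalized Custom Vision-style region to a pixel COCO bbox [x, y, w, h]."""
    x = region["left"] * image_width
    y = region["top"] * image_height
    w = region["width"] * image_width
    h = region["height"] * image_height
    # Round to hundredths of a pixel to hide floating-point noise.
    return [round(x, 2), round(y, 2), round(w, 2), round(h, 2)]


def regions_to_annotations(regions, image_id, image_width, image_height, category_ids, first_annotation_id=1):
    """Build COCO annotation dicts for one image's regions.

    `category_ids` maps tag names (e.g. 'drone') to COCO category ids.
    """
    annotations = []
    for offset, region in enumerate(regions):
        bbox = region_to_coco_bbox(region, image_width, image_height)
        annotations.append({
            "id": first_annotation_id + offset,
            "image_id": image_id,
            "category_id": category_ids[region["tagName"]],
            "bbox": bbox,
            "area": round(bbox[2] * bbox[3], 2),
            "iscrowd": 0,
        })
    return annotations
```

For example, a region with `left=0.25`, `top=0.5`, `width=0.1`, `height=0.2` on a 1000 × 800 image becomes the COCO box `[250.0, 400.0, 100.0, 160.0]`.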

Get started today

Few-shot learning makes customizing Azure Cognitive Service for Vision a cost-effective and low-effort process. The Florence foundation model brings a new level of efficiency, accuracy, and accessibility to image and video processing, making it well suited to industries such as Media & Entertainment, Retail, and Manufacturing, in scenarios such as Safety & Security and Digital Content Management.

To get started and learn about the steps involved in training a custom model, visit the Learning Module for Cognitive Services for Vision. We can’t wait to see how you innovate.