This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

MLOps & GitHub Actions

You have heard about MLOps, read about it, and may even have seen it in action, but getting MLOps started in your organization seems harder than it should be.

Before discussing modules, identities, pipelines, and other concepts, let's begin with the basics: a working example that you can use as a starting point to build your MLOps. This blog post will show you how to use GitHub Actions to automate the process of training and deploying a machine learning model using the Azure Machine Learning service.

Skilling up - Learning MLOps

Azure Machine Learning SDK/CLI (V2) is the main platform used in this sample. In this article I will cover how to work with the Azure CLI, which I find is a great starting point, as it covers the most common (you may call it basic at times) capabilities. Later in this article, I compare the CLI with the SDK; you may decide to use the SDK to extend what the Azure CLI offers.

The learning approach I suggest and demonstrate in this repository is to understand each step, execute it locally and manually, and then turn these individual steps into a repeatable process. The process will be automated using GitHub Actions. So, what are the basic steps for MLOps?

Training, Deployment and Monitoring are your primary MLOps activities. Each activity has its own set of steps. For example, training a model requires you to prepare the data, train the model, and register the model. Deployment requires you to create an image, deploy the image, and test the deployment. In this article, I will cover the first two areas - creating a model & deploying it.

While this post focuses on two technologies: GitHub Actions and Azure Machine Learning, you can use the same approach to learn other platforms.

My sample repository contains both online and batch endpoints; in this blog post I will focus on the online endpoint.

Training - Data preparation, Model training, Model registration

You collected data, your product manager and data scientist worked together to define the problem, and you are ready to train your model. The following activities happen:

- Prepare the data. The data scientist usually does the data preparation.

- Train the model. The data scientist uses the prepared data to train the model.

- Register the model once it has been trained. The registered model will be used for deployment.

For each of these activities (or steps), you would have a set of scripts that you can run locally.

In many cases, this would be your folder structure:

Using Azure CLI

This section covers the individual steps required to fulfil the tasks of data preparation, training, and registering a model. It is assumed you are familiar with the Azure CLI (`az`) and have it installed. If not, you can follow the installation guide.

The first step is to log in to your Azure account:
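
A minimal sketch of the sign-in step. The function name `azure_login` and its subscription argument are illustrative assumptions; `az login` and `az account set` are standard Azure CLI commands.

```shell
# Sign in interactively, then select the subscription later commands should target.
# $1 is a placeholder for your own subscription ID or name.
azure_login() {
  az login &&
    az account set --subscription "$1"
}

# Example (hypothetical subscription ID):
#   azure_login "00000000-0000-0000-0000-000000000000"
```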

Confirm you have the latest required extension (ml):
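
One way to do this, sketched under the assumption that you are happy to install or upgrade the extension in one go. The function name `ensure_ml_extension` is hypothetical.

```shell
# Install the ml extension, or upgrade it when it is already installed,
# then print the installed version so you can confirm it.
ensure_ml_extension() {
  az extension add --name ml --upgrade --yes &&
    az extension show --name ml --query version --output tsv
}

# Example:
#   ensure_ml_extension
```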

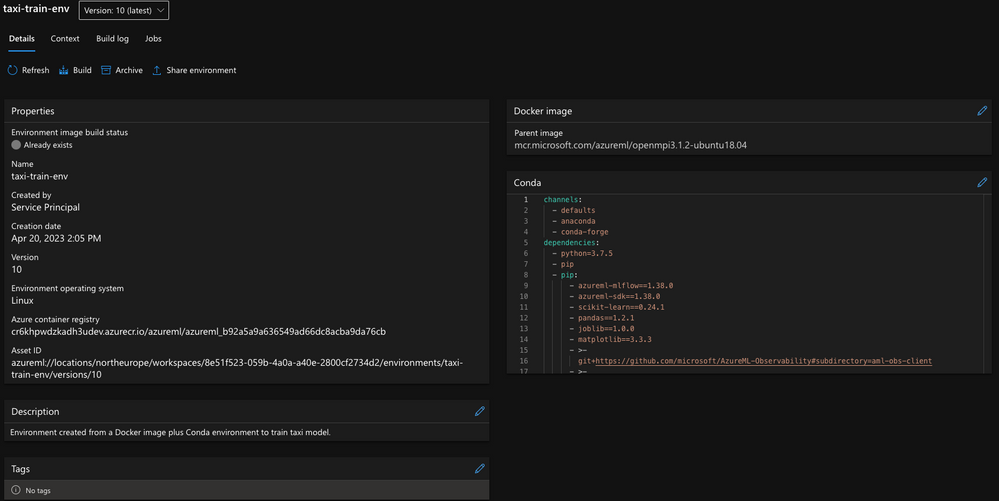

Let's register an environment (it creates a docker image with your required dependencies):

This is what the environment reference file looks like:

Note it points to the dependencies file, which looks like this:
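
As a rough illustration of how the two files relate, the sketch below writes a sample environment file and the conda dependencies file it points to. Every name, image tag, and package listed is a placeholder rather than the content of the sample repository; only the `$schema`, `image`, and `conda_file` keys follow the CLI (v2) environment schema.

```shell
# Write a sample environment definition plus the conda file it references.
# $1 is an optional target directory (defaults to the current one).
write_environment_files() {
  dir="${1:-.}"
  mkdir -p "$dir"
  # The environment file: a base image plus a pointer to the dependencies file.
  cat > "$dir/environment.yml" <<'EOF'
$schema: https://azuremlschemas.azureedge.net/latest/environment.schema.json
name: sample-training-env
image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
conda_file: conda.yml
description: Sample environment for model training
EOF
  # The dependencies file: placeholder packages, adjust to your model.
  cat > "$dir/conda.yml" <<'EOF'
name: sample-training-env
channels:
  - conda-forge
dependencies:
  - python=3.9
  - pip
  - pip:
      - scikit-learn
      - pandas
      - mlflow
      - azureml-mlflow
EOF
}

# Example:
#   write_environment_files ./environment
```

The `conda_file` path is resolved relative to the environment file, which is why both files are written to the same directory here.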

After cloning or forking my repository, run this locally:
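
A minimal sketch of the registration command. The function name and the file, resource group, and workspace arguments are placeholders for your own values.

```shell
# Register (or version) an environment from its YAML definition.
# $1 = environment file, $2 = resource group, $3 = workspace name.
register_environment() {
  az ml environment create \
    --file "$1" \
    --resource-group "$2" \
    --workspace-name "$3"
}

# Example (hypothetical names):
#   register_environment environment.yml my-resource-group my-workspace
```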

The above Azure CLI command will start an image build process. Note that this build is done by the platform. You can monitor the process and the artifacts it creates in the portal.

Now let's create the compute cluster, in case it was not created before. If the cluster already exists you can skip this step, although running it again will not create a second one. This compute will be used to train the model.

You can find what compute is already created by running the following command:
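
For example, assuming placeholder resource group and workspace names:

```shell
# List the compute targets that already exist in a workspace.
# $1 = resource group, $2 = workspace name.
list_compute() {
  az ml compute list \
    --resource-group "$1" \
    --workspace-name "$2" \
    --output table
}

# Example (hypothetical names):
#   list_compute my-resource-group my-workspace
```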

Or, run this to make sure the compute is created:
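
A hedged sketch of that step. The cluster name and the instance counts are assumptions, and the explicit `az ml compute show` check is an addition of this sketch, used here only to make the "create if missing" logic visible.

```shell
# Create the training cluster only when it does not exist yet.
# $1 = cluster name, $2 = resource group, $3 = workspace name.
ensure_compute() {
  name="$1"; rg="$2"; ws="$3"
  if az ml compute show --name "$name" \
       --resource-group "$rg" --workspace-name "$ws" >/dev/null 2>&1; then
    echo "Compute '$name' already exists"
  else
    # min-instances 0 lets the cluster scale down to zero when idle.
    az ml compute create --name "$name" --type amlcompute \
      --min-instances 0 --max-instances 4 \
      --resource-group "$rg" --workspace-name "$ws"
  fi
}

# Example (hypothetical names):
#   ensure_compute cpu-cluster my-resource-group my-workspace
```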

Now let's run a training job: submit the job, then open the web UI to monitor it. (The following command works on macOS/Linux.)
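
A minimal sketch of the two-step pattern, assuming a job definition file of your own. The function name and arguments are hypothetical; the job name returned by `az ml job create` is captured and passed to `az ml job show --web`.

```shell
# Submit a training job, capture its generated name, and open it in the studio UI.
# $1 = job YAML file, $2 = resource group, $3 = workspace name.
submit_training_job() {
  run_id="$(az ml job create --file "$1" \
    --resource-group "$2" --workspace-name "$3" \
    --query name --output tsv)" || return 1
  az ml job show --name "$run_id" --web \
    --resource-group "$2" --workspace-name "$3"
}

# Example (hypothetical names):
#   submit_training_job job.yml my-resource-group my-workspace
```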

For Windows, you can use the following PowerShell command:

If you followed along to this point, you have worked on your data, trained a model, and registered it. You can see the registered model in the portal:

Take a moment to reflect.

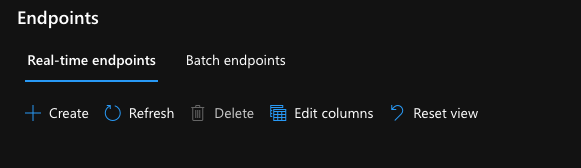

Deployment - Batch vs. Online

Once you have your model trained and registered, it is time to decide how to use it. Scoring a model can be done in two ways: batch and online. Use batch scoring when you have a large set of data that you want to score. Use online scoring when you want to score a single data point or a few data points. Batch scoring can use AML clusters, which may run on low-priority VMs to reduce cost. Online scoring can use either managed endpoints or Azure Kubernetes Service (AKS).

I will discuss the use of managed endpoints, and how to deploy a model to a managed endpoint. For AKS-specific deployment, you can refer to the AKS deployment article.

When using the AML CLI (v2) you must first create the endpoint, and then deploy to it. This is true for both batch and online scoring. For each model and deployment type pair you need two files: an endpoint file and a deployment file. Let’s examine their content, starting with the endpoint file:
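
As an illustration, the sketch below writes a small managed online endpoint definition. The endpoint name and the key-based `auth_mode` are placeholders chosen for this example, not values from the sample repository.

```shell
# Write a sample managed online endpoint definition.
# $1 is an optional target path (defaults to endpoint.yml).
write_endpoint_file() {
  cat > "${1:-endpoint.yml}" <<'EOF'
$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json
name: sample-online-endpoint
auth_mode: key
EOF
}

# Example:
#   write_endpoint_file endpoint.yml
```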

And the deployment file:
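
A comparable sketch for a managed online deployment. The deployment name, model and environment references, scoring script, and instance size are all hypothetical; replace them with the assets registered in your own workspace.

```shell
# Write a sample managed online deployment definition.
# $1 is an optional target path (defaults to deployment.yml).
write_deployment_file() {
  cat > "${1:-deployment.yml}" <<'EOF'
$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json
name: blue
endpoint_name: sample-online-endpoint
model: azureml:sample-model:1
code_configuration:
  code: ./src
  scoring_script: score.py
environment: azureml:sample-training-env:1
instance_type: Standard_DS3_v2
instance_count: 1
EOF
}

# Example:
#   write_deployment_file deployment.yml
```

The `endpoint_name` value ties the deployment to its endpoint, so it has to match the name used in the endpoint file.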

This is the second area of the repository folder structure. This can be used especially if you have multiple models to train:

Running the following command will create the endpoint:
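
A minimal sketch, with placeholder file, resource group, and workspace arguments:

```shell
# Create an online endpoint from its YAML definition.
# $1 = endpoint file, $2 = resource group, $3 = workspace name.
create_endpoint() {
  az ml online-endpoint create \
    --file "$1" \
    --resource-group "$2" \
    --workspace-name "$3"
}

# Example (hypothetical names):
#   create_endpoint endpoint.yml my-resource-group my-workspace
```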

This command takes a few moments as it allocates resources on your behalf. Once the endpoint is created, you can deploy the model to it:

Once this command is completed, you have an online endpoint to which you can send data and from which you get predictions. The last step is to route traffic to the new deployment. You can route all of the traffic, or only a percentage of it. The following command will route all traffic to the new deployment:

For traffic routing to work, the deployment name and the endpoint name must match the names you used when you created them.
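
Sketched with placeholder arguments, the deployment step could look like this:

```shell
# Create a deployment under an existing online endpoint.
# $1 = deployment file, $2 = resource group, $3 = workspace name.
create_deployment() {
  az ml online-deployment create \
    --file "$1" \
    --resource-group "$2" \
    --workspace-name "$3"
}

# Example (hypothetical names):
#   create_deployment deployment.yml my-resource-group my-workspace
```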
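
A hedged sketch of the traffic update. The endpoint and deployment names are placeholders; the `--traffic` value takes `deployment=percentage` pairs.

```shell
# Send 100% of an endpoint's traffic to a single deployment.
# $1 = endpoint name, $2 = deployment name, $3 = resource group, $4 = workspace name.
route_all_traffic() {
  az ml online-endpoint update \
    --name "$1" \
    --traffic "$2=100" \
    --resource-group "$3" \
    --workspace-name "$4"
}

# Example (hypothetical names):
#   route_all_traffic sample-online-endpoint blue my-resource-group my-workspace
```

For a gradual rollout, the same flag accepts a split such as `"blue=90 green=10"`.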

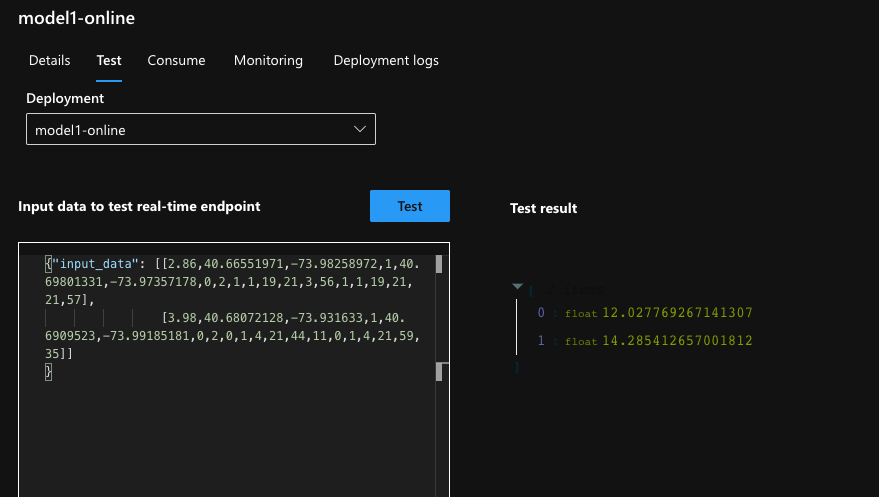

There are multiple ways you can test your work; using the Azure Portal is the most intuitive one. You can click the Test button to send data to the endpoint, as shown in the following picture:

The input data can be found under the data folder in the repo.
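
Besides the portal, the endpoint can also be tested from the command line. A minimal sketch, assuming a JSON request file of your own; the function name and all arguments are placeholders.

```shell
# Send a request file to an online endpoint and print the prediction.
# $1 = endpoint name, $2 = JSON request file, $3 = resource group, $4 = workspace name.
invoke_endpoint() {
  az ml online-endpoint invoke \
    --name "$1" \
    --request-file "$2" \
    --resource-group "$3" \
    --workspace-name "$4"
}

# Example (hypothetical names and file path):
#   invoke_endpoint sample-online-endpoint data/sample-request.json my-resource-group my-workspace
```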

Recap: We worked on our data, decided what packages and dependencies we should use, trained a model, registered it, created an endpoint, and deployed the model to it. We also routed all traffic to the new deployment, then tested and confirmed that the endpoint predicts as expected.

We did this by executing individual `cli` commands. In the next section, we will see how to automate this process using GitHub Actions.

GitHub Actions & Azure Machine Learning

I am using a user-assigned identity (UAI) to authorize GitHub workflows with Azure. This approach is recommended since it provides a higher level of security compared to other approaches. You are not required to store any credentials in the GitHub repository, which reduces the risk of exposing sensitive information. You also avoid the credential rotation issues that may arise from storing credentials in the GitHub repository. The Bicep code provided in this repository creates the required resources and assigns them the proper permissions. For further content you could visit this repository. Additionally, this is documented in the official documentation.

The information required to log in to Azure can be stored in the GitHub repository as secrets (although none of these values are considered secret). The following secrets are required:
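
One way to store such values is the GitHub CLI. The sketch below assumes the three identifiers commonly used for federated login (client ID, tenant ID, subscription ID); the secret names are an assumption of this example and must match whatever your workflows reference.

```shell
# Store the identifiers used for federated login as repository secrets.
# $1 = client ID of the identity, $2 = tenant ID, $3 = subscription ID.
# Run from inside a clone of the repository so gh knows which repo to target.
set_login_secrets() {
  gh secret set AZURE_CLIENT_ID --body "$1" &&
    gh secret set AZURE_TENANT_ID --body "$2" &&
    gh secret set AZURE_SUBSCRIPTION_ID --body "$3"
}

# Example (hypothetical values):
#   set_login_secrets "<client-id>" "<tenant-id>" "<subscription-id>"
```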

GitHub Actions / Workflows

The sample repository contains two variations of workflows: one uses composite workflows, and the other uses workflow steps. I will cover the workflow steps, as they provide a simple and intuitive way to create and understand workflows. If you would rather use composite workflows, my sample contains those as well.

Let's examine one workflow, which will help put all the pieces together. Without getting into too many details, each workflow in my repository has a few sections: the first defines how the workflow starts and what its input parameters are, the second (optional) holds environment variables, and the last is the jobs section. The jobs section is where the work is done. Each job can have multiple steps, and each step either runs commands or uses an action. The following is a sample workflow with a focus on the jobs section. You should be familiar with the actual commands from the previous sections. Assume that `${{inputs.name_of_parameter}}` is a parameter that is passed to the workflow, and that `$name_of_env_parameter` is an environment variable.

Per workflow, you can create specific environment variables and use them in the workflow. When working with federated credentials, the workflow also needs permissions for token creation (`id-token: write`) and content read (`contents: read`).

Here is what the 'jobs' section would look like:
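
The sketch below writes a sample workflow file that strings the earlier commands together. It is an illustration under stated assumptions, not the workflow from the sample repository: the file path, input names, environment variable values, secret names, and action versions are all placeholders.

```shell
# Write a sample workflow that creates an endpoint, deploys to it, and routes traffic.
# $1 is an optional target path for the workflow file.
write_deploy_workflow() {
  target="${1:-.github/workflows/deploy-online-endpoint.yml}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
name: deploy-online-endpoint
on:
  workflow_dispatch:
    inputs:
      endpoint_name:
        description: Name of the online endpoint
        required: true
        type: string
      deployment_name:
        description: Name of the deployment
        required: true
        type: string
permissions:
  id-token: write   # request an OIDC token for federated login
  contents: read    # check out the repository
env:
  RESOURCE_GROUP: my-resource-group
  WORKSPACE: my-workspace
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: azure/login@v1
        with:
          client-id: ${{ secrets.AZURE_CLIENT_ID }}
          tenant-id: ${{ secrets.AZURE_TENANT_ID }}
          subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
      - name: Install the ml extension
        run: az extension add --name ml --yes
      - name: Create the endpoint
        run: |
          az ml online-endpoint create --name ${{ inputs.endpoint_name }} \
            --file endpoint.yml \
            --resource-group $RESOURCE_GROUP --workspace-name $WORKSPACE
      - name: Create the deployment
        run: |
          az ml online-deployment create --name ${{ inputs.deployment_name }} \
            --endpoint-name ${{ inputs.endpoint_name }} \
            --file deployment.yml \
            --resource-group $RESOURCE_GROUP --workspace-name $WORKSPACE
      - name: Route all traffic to the new deployment
        run: |
          az ml online-endpoint update --name ${{ inputs.endpoint_name }} \
            --traffic "${{ inputs.deployment_name }}=100" \
            --resource-group $RESOURCE_GROUP --workspace-name $WORKSPACE
EOF
}

# Example:
#   write_deploy_workflow .github/workflows/deploy-online-endpoint.yml
```

The heredoc delimiter is quoted so that the shell leaves `${{ ... }}` and `$RESOURCE_GROUP` untouched; they are resolved later by GitHub Actions and by the runner's shell.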

To conclude: the above section executes, in sequence, the individual commands we learned in this article. It creates an endpoint, creates a deployment, and routes all traffic to the new deployment.

Azure CLI vs SDK

As these alternatives are available, you may be wondering which one to use. The following table summarizes the main differences between the V2 CLI and the Python SDK in Azure Machine Learning (AML):

| Aspect | V2 CLI | Python SDK |
|---|---|---|
| Interface | Command line | Code |
| Flexibility | Limited | High |
| Learning curve | Low | High |
| Integration with other tools | Limited | High |
| Compatibility | Windows, macOS, Linux | Python environment required |

The main differences between using the v2 CLI and the Python SDK in Azure Machine Learning (AML) are:

- Command line interface vs. code: The v2 CLI provides a command line interface to interact with AML, while the Python SDK allows you to use code to interact with AML.

- Flexibility and customizability: With the Python SDK, you have more flexibility and customizability compared to the v2 CLI. You can write your own code and use various Python libraries and frameworks to implement your machine learning workflows. On the other hand, the v2 CLI provides a set of pre-defined commands that you can use to execute common tasks in AML.

- Learning curve: The learning curve for the v2 CLI is lower compared to the Python SDK. This is because the v2 CLI is designed to be intuitive and easy to use, with a simple set of commands that can be learned quickly. The Python SDK, on the other hand, requires a higher level of expertise in Python programming.

- Integration with other tools: The Python SDK can be integrated with other tools and frameworks, such as Jupyter notebooks, PyCharm, Visual Studio, and many others. This allows you to seamlessly integrate your AML workflows with your existing development environment. The v2 CLI does not offer the same level of integration with other tools.

- Compatibility: The v2 CLI is compatible with Windows, macOS, and Linux operating systems, while the Python SDK requires a Python environment to be set up on your machine.

In summary, the choice between using the v2 CLI or the Python SDK in Azure Machine Learning depends on your specific needs and level of expertise in Python programming. If you prefer a simple and easy-to-use command line interface, then the v2 CLI may be the best choice for you. If you need more flexibility and customizability, or if you want to integrate your AML workflows with other tools and frameworks, then the Python SDK may be a better fit.