This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The idea of retrieval augmented generation is that when given a question you first do a retrieval step to fetch any relevant documents. You then pass those documents, along with the original question, to the language model and have it generate a response.

To do this, however, your documents first have to be in a format that can be queried this way.

This article goes over the high-level ideas behind those two steps:

(1) ingestion of documents into a queryable format, and then

(2) the retrieval augmented generation chain.

A lot of data and information is stored in tabular form, whether in CSVs, Excel sheets, or SQL tables. We will use the LangChain library to query our database using the Azure OpenAI GPT-3.5 model.

What is OpenAI GPT?

OpenAI GPT (Generative Pre-trained Transformer) is a family of LLMs (large language models) developed by OpenAI. A GPT model is a deep learning model that is pre-trained on large amounts of text data and can be fine-tuned for a variety of natural language processing tasks, such as text classification, sentiment analysis, and question answering. GPT is based on the transformer architecture, which allows it to process long sequences of text efficiently. GPT-3 has 175 billion parameters and was one of the largest LLMs available when it was released; GPT-3.5, which we use here, and GPT-4 are later models in the same family.

What are LLMs?

LLM stands for large language model. LLMs are a type of machine learning model that can be used for a variety of natural language processing tasks, such as text classification, sentiment analysis, and question answering. They are trained on large amounts of text data and can learn to generate human-like responses to natural language queries. Some popular examples of LLMs include GPT-3, GPT-4, BERT, and RoBERTa.

Most LLMs are trained on data with a cut-off date. For example, the OpenAI model used here only has information up to the year 2021. We are going to use the LLM's natural language processing (NLP) capabilities, connect it to your own private data, and search that data. This is called retrieval augmented generation.

What is Retrieval augmented generation?

Retrieval augmented generation is a technique used in natural language processing that combines two steps: retrieval and generation. The idea is to first retrieve relevant documents or information based on a given query, and then use that information to generate a response. This approach is often used with LLMs (large language models) like GPT, which can generate human-like responses to natural language queries. By combining retrieval and generation, the model can produce more accurate and relevant responses, since it has access to more information.

How to create a chatbot to query your Database

We will use the Azure SQL Adventure Works sample database. It contains products and orders, and we will use GPT-3.5 to query it in natural language. This database will be our index.

Now that we have an index, how do we use it to do generation? This can be broken into the following steps:

- Receive user question

- Lookup documents in the index relevant to the question

- Construct a PromptValue from the question and any relevant documents (using a PromptTemplate).

- Pass the PromptValue to a model

- Get back the result and return to the user.
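To make these steps concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions: the documents are invented, the word-overlap retrieval stands in for a real index, `echo_model` stands in for a real LLM call, and all function names are hypothetical rather than part of any library.

```python
import re
from typing import Callable, List

# Toy "index": invented documents standing in for real data.
DOCUMENTS = [
    "The logo cap is sold in one size.",
    "Mountain bikes are listed under the Bikes category.",
    "Orders are stored with one row per order line.",
]

# Plain string template playing the role of a prompt template.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
)


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents that share the most words with the question."""
    words = tokenize(question)
    scored = [(len(words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(question: str, docs: List[str]) -> str:
    """Fill the template with the question and the retrieved documents."""
    context = "\n".join(f"- {doc}" for doc in docs)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def answer(question: str, model: Callable[[str], str]) -> str:
    """Run the steps in order: retrieve, build the prompt, call the model."""
    docs = retrieve(question, DOCUMENTS)
    prompt = build_prompt(question, docs)
    return model(prompt)


def echo_model(prompt: str) -> str:
    """Placeholder for a real LLM call: only reports the prompt size."""
    return f"(model received {len(prompt)} characters of prompt)"


if __name__ == "__main__":
    print(answer("Which category are mountain bikes in?", echo_model))
```

In a real application the retrieval step would query the database or a search index, and `echo_model` would be replaced by a call to the deployed model.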

Luckily for us there is a library that does all that - LangChain.

What is the LangChain Library?

LangChain is a framework for developing applications powered by language models, and it is designed specifically to make those applications easier to build.

There are two main value props the LangChain framework provides:

- Components: LangChain provides modular abstractions for the components necessary to work with language models. LangChain also has collections of implementations for all these abstractions. The components are designed to be easy to use, regardless of whether you are using the rest of the LangChain framework or not.

- Use-Case Specific Chains: Chains can be thought of as assembling these components in particular ways in order to best accomplish a particular use case. These are intended to be a higher level interface through which people can easily get started with a specific use case. These chains are also designed to be customizable.

There are several main modules that LangChain provides support for:

- Models: The various model types and model integrations LangChain supports.

- Prompts: This includes prompt management, prompt optimization, and prompt serialization.

- Memory: Memory is the concept of persisting state between calls of a chain/agent. LangChain provides a standard interface for memory, a collection of memory implementations, and examples of chains/agents that use memory.

- Indexes: Language models are often more powerful when combined with your own text data - this module covers best practices for doing exactly that.

- Chains: Chains go beyond just a single LLM call, and are sequences of calls (whether to an LLM or a different utility). LangChain provides a standard interface for chains, lots of integrations with other tools, and end-to-end chains for common applications.

- Agents: Agents involve an LLM making decisions about which Actions to take, taking that Action, seeing an Observation, and repeating that until done. LangChain provides a standard interface for agents, a selection of agents to choose from, and examples of end to end agents.

- Callbacks: It can be difficult to track all that occurs inside a chain or agent - callbacks help add a level of observability and introspection.
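The agent loop described in the list above (decide on an action, run it, look at the observation, repeat until done) can be sketched without any library. This is a toy illustration, not LangChain's implementation: `scripted_policy` is a hypothetical stand-in for the LLM's decision making, and the two tools and the row count they return are invented.

```python
from typing import Callable, Dict, List, Tuple

# One recorded step: (action name, action input, observation).
Step = Tuple[str, str, str]

# Hypothetical tools the agent may call; each takes and returns a string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "list_tables": lambda _: "Product, SalesOrderDetail",
    "count_rows": lambda table: {"Product": "3"}.get(table, "unknown table"),
}


def scripted_policy(question: str, history: List[Step]) -> Tuple[str, str]:
    """Stand-in for the LLM: pick the next (action, input) from what was seen so far."""
    if not history:
        return "list_tables", ""
    if len(history) == 1:
        return "count_rows", "Product"
    return "finish", f"There are {history[-1][2]} products."


def run_agent(question: str, policy=scripted_policy, max_steps: int = 5) -> str:
    """Repeat decide -> act -> observe until the policy says it is finished."""
    history: List[Step] = []
    for _ in range(max_steps):
        action, action_input = policy(question, history)
        if action == "finish":
            return action_input
        observation = TOOLS[action](action_input)
        history.append((action, action_input, observation))
    return "Stopped: step limit reached."


if __name__ == "__main__":
    print(run_agent("How many products are there?"))
```

The step limit is there because a real model can keep choosing actions indefinitely; a cap keeps the loop from running forever.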

Let’s use the LangChain library

In our case we will use the SQLDatabaseToolkit that translates natural language questions to SQL.

We will first connect our Azure SQL DB to LangChain so it can “learn” the DB schema.

We will then use the SQLDatabaseToolkit to translate natural language questions into SQL.
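To give a feel for what that translation means, the sketch below pairs one natural language question with a SQL statement a model could plausibly produce for it, and runs that statement. Everything here is an assumption made for illustration: it uses an in-memory SQLite table instead of Azure SQL so it runs locally, the three rows are invented, and the SQL is hand-written rather than generated by the toolkit.

```python
import sqlite3

# Toy stand-in for a product table; the rows are invented for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Product (Name TEXT, Color TEXT, ListPrice REAL)")
conn.executemany(
    "INSERT INTO Product VALUES (?, ?, ?)",
    [
        ("Example Helmet", "Black", 30.0),
        ("Example Cap", "Multi", 9.0),
        ("Example Frame", "Black", 1400.0),
    ],
)

# Natural language question: "How many products are black?"
# A SQL statement a model could plausibly write for it (hand-written here):
generated_sql = "SELECT COUNT(*) FROM Product WHERE Color = 'Black'"

# Running the statement gives the number the chatbot would report back.
black_count = conn.execute(generated_sql).fetchone()[0]
print(black_count)  # prints 2 for the toy rows above
```

The toolkit's job is to produce a statement like `generated_sql` from the question and the schema, run it, and turn the result into a sentence.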

What do we need?

- An Azure SQL Adventure Works sample DB

- An Azure OpenAI deployment of GPT-3.5

- Python

- A web app with a Chatbot UI (optional)

Instructions

Grab your credentials and place them in a file called “.env”

Create a file called “sampleChat.py” or any file name you like and paste this Python code into it.
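As a rough sketch of how those credentials might be used, the function below builds a SQLAlchemy-style connection string from environment variables. The variable names (`SQL_USER`, `SQL_PASSWORD`, `SQL_SERVER`, `SQL_DATABASE`, `SQL_DRIVER`) are assumptions of mine, not names required by any library, and the driver default assumes the Microsoft ODBC driver is installed. A package such as python-dotenv can load the “.env” file into the environment before this runs.

```python
import os
from typing import Mapping
from urllib.parse import quote_plus

# Hypothetical .env contents (names are assumptions, values are placeholders):
#   SQL_SERVER=<your-server>.database.windows.net
#   SQL_DATABASE=<your-database>
#   SQL_USER=<your-user>
#   SQL_PASSWORD=<your-password>


def build_connection_uri(env: Mapping[str, str] = os.environ) -> str:
    """Build a mssql+pyodbc connection URI from environment-style settings."""
    # URL-encode the user and password so characters like "@" do not break the URI.
    user = quote_plus(env["SQL_USER"])
    password = quote_plus(env["SQL_PASSWORD"])
    server = env["SQL_SERVER"]
    database = env["SQL_DATABASE"]
    driver = quote_plus(env.get("SQL_DRIVER", "ODBC Driver 18 for SQL Server"))
    return f"mssql+pyodbc://{user}:{password}@{server}/{database}?driver={driver}"
```

Keeping the credentials in the environment rather than in the script means the script itself can be shared without leaking secrets.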

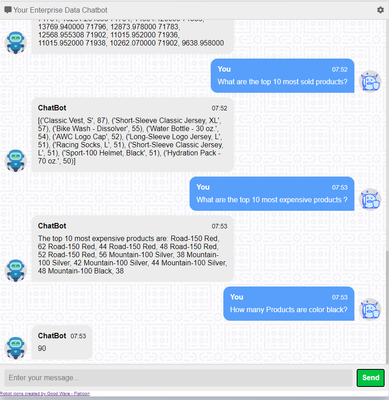
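For readers who cannot see the image, a script along these lines might look like the sketch below. It is not a transcription of the code in the image. It assumes a 2023-era LangChain release (the import paths have moved in later versions) plus the `openai` and `pyodbc` packages, and the environment variable names are hypothetical.

```python
import os


def build_sql_agent(connection_uri: str):
    """Create a SQL agent over the database at connection_uri (2023-era LangChain assumed)."""
    # Imported here so this file can be loaded even where LangChain is not installed.
    from langchain.agents import create_sql_agent
    from langchain.agents.agent_toolkits import SQLDatabaseToolkit
    from langchain.chat_models import AzureChatOpenAI
    from langchain.sql_database import SQLDatabase

    # Chat model backed by an Azure OpenAI deployment; the variable names are assumptions.
    llm = AzureChatOpenAI(
        deployment_name=os.environ["AZURE_OPENAI_DEPLOYMENT"],
        openai_api_base=os.environ["AZURE_OPENAI_ENDPOINT"],
        openai_api_key=os.environ["AZURE_OPENAI_KEY"],
        openai_api_version=os.environ["AZURE_OPENAI_API_VERSION"],
        temperature=0,
    )

    # Connecting lets LangChain inspect the tables and columns of the database.
    db = SQLDatabase.from_uri(connection_uri)
    toolkit = SQLDatabaseToolkit(db=db, llm=llm)

    # verbose=True prints each intermediate step to the console.
    return create_sql_agent(llm=llm, toolkit=toolkit, verbose=True)
```

With the environment set up, something like `build_sql_agent(uri).run("How many products are in the Adventure Works database?")` would send the question through the agent, where `uri` is the connection string for your database.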

We can ask questions like:

- List the tables in the database

- How many products are in the Adventure Works database?

- How many products are black?

- How many SalesOrderDetail rows are for the product AWC Logo Cap?

- What are the top 10 most expensive products?

- What are the top 10 highest grossing products in the Adventure Works database?

Please note that you have the ability to ask about tables and fields using natural language. This library is truly remarkable - the first time I used it, I was amazed.

What is more amazing is to see how this library “thinks”.

For example, for the question: How many products are in the Adventure Works database?

Here is the console log:

Here is my UI, showing the answers I get:

I hope you enjoyed this tutorial.

Cheers

Denise