This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Community Hub.

The Java Engineering Group (JEG) optimized the Microsoft Build of OpenJDK for Azure's first Ampere Altra Arm-based VM families as an essential part of this launch. The Ampere Altra CPUs are based on Arm’s Neoverse N1 architecture, so the JEG focused its optimizations on Neoverse N1-specific CPU features: hardware support for a variety of cryptographic algorithms, the Large System Extension (LSE) for better scalability on large server systems, and an entire physical core per vCPU. This helped ensure we could maximize the scaling factor for Java Virtual Machine (JVM) based applications and take advantage of other optimizations.

Test Metrics and Goals

The Azure Compute Arm-based VM families include:

| VM Series | vCPU Sizes | Memory Sizes |
| --- | --- | --- |
| Dps_v5 | 2 – 64 | 4GiB per vCPU ratio, 8GiB to 208GiB sizes |
| Dpls_v5 | 2 – 64 | 2GiB per vCPU ratio, 4GiB to 128GiB sizes |
| Eps_v5 | 2 – 32 | 8GiB per vCPU ratio, 16GiB to 208GiB sizes |

Table 1: Azure Compute's Arm64 Offerings

We tracked allocations and CPU + system memory scaling numbers for the above SKUs. We also baselined and gathered scaling numbers for various CPU features introduced with Azure's Arm64 offerings.

The JEG used the Microsoft Build of OpenJDK 17.0.3+7 LTS, with Parallel GC and G1 GC for all our tests[1].

Test Group 1: CPU Features - Related Tests

The Azure Arm64-based SKUs released in Sept 2022 offer a different feature set than x86-64-based processors, with Arm64 processors also having features tied to specific Instruction Set Architectures (ISAs). As a result, the JEG wanted to ensure that the Microsoft Build of OpenJDK considered these new features for the ARMv8.2 ISA supported by the Azure Arm64-based SKUs.

Goal

The purpose was to highlight the new CPU's scaling implications and to introduce features, such as new intrinsics for the algorithms most used by the Azure SDK (for example, MD5), into the Microsoft Build of OpenJDK.

Test Details

The tests consisted of simple microbenchmarks executed using the Java Microbenchmark Harness (JMH) as well as the SPECJVM2008 suite. These were specifically designed to stress and highlight the new CPU features listed below.

LSE/Atomics

We modified the JMH benchmarks to gather data on scaling both with and without the UseLSE switch. The UseLSE switch (LSE stands for Large System Extension) toggles the JVM's use of single-instruction atomic memory operations (such as CAS, SWP, and LDADD) when running on Arm processors. Additionally, the JEG measured the impact of the Load-Link/Store-Conditional (LL/SC) alternative, which implements atomic read-modify-write operations with exclusive load-acquire/store-release instruction pairs (LDAXR/STLXR) and is what the JVM uses when the UseLSE flag is false.
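To make the LSE discussion concrete, here is a minimal sketch of the kind of contended atomic update that the UseLSE switch affects. It is not the JEG's JMH benchmark: the class and method names are made up for illustration, and it uses plain threads rather than JMH so it stays self-contained. On an AArch64 JVM it could be run once with -XX:+UseLSE and once with -XX:-UseLSE to compare timings; other architectures are unlikely to recognize the flag.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Illustrative only: contended atomic increments on one shared counter. */
final class AtomicContention {

    /** Starts `threads` workers that each increment a shared counter `perThread` times. */
    static long run(int threads, int perThread) {
        AtomicLong counter = new AtomicLong();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int n = 0; n < perThread; n++) {
                    counter.incrementAndGet(); // atomic read-modify-write on a shared location
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        int threads = Runtime.getRuntime().availableProcessors();
        long start = System.nanoTime();
        long total = run(threads, 1_000_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(threads + " threads, " + total + " increments, " + elapsedMs + " ms");
    }
}
```

Scaling the thread count with the vCPU count, as the tests did, is what exposes the difference between a single atomic instruction and an LL/SC retry loop under contention.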

Crypto

The JEG used SPECJVM2008's Crypto with the following two sub-benchmark programs:

- Encryption/Decryption - With crypto.aes (AES and DES algorithms) and crypto.rsa (RSA)

- Sign Verification - With crypto.signverify (MD5withRSA, SHA1withRSA, SHA1withDSA, and SHA256withRSA).
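For readers unfamiliar with what a sign/verify workload exercises, the following is a small sketch using the standard java.security API. It is not SPECJVM2008 code; the class name, message contents, and 2048-bit key size are assumptions chosen for illustration, and SHA1withDSA is left out because it needs a DSA key pair.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.Signature;

/** Illustrative only: sign a message with RSA and verify the signature. */
final class SignVerifySketch {

    /** Signs `signed` with a fresh RSA key, then verifies the signature against `presented`. */
    static boolean roundTrip(String algorithm, byte[] signed, byte[] presented) {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(2048); // assumed key size
            KeyPair keys = generator.generateKeyPair();

            Signature signer = Signature.getInstance(algorithm);
            signer.initSign(keys.getPrivate());
            signer.update(signed);
            byte[] signature = signer.sign();

            Signature verifier = Signature.getInstance(algorithm);
            verifier.initVerify(keys.getPublic());
            verifier.update(presented);
            return verifier.verify(signature);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(algorithm + " unavailable or failed", e);
        }
    }

    public static void main(String[] args) {
        byte[] message = "order #42".getBytes(StandardCharsets.UTF_8);
        byte[] tampered = "order #43".getBytes(StandardCharsets.UTF_8);
        for (String algorithm : new String[] {"MD5withRSA", "SHA1withRSA", "SHA256withRSA"}) {
            System.out.println(algorithm
                    + ": genuine=" + roundTrip(algorithm, message, message)
                    + ", tampered=" + roundTrip(algorithm, message, tampered));
        }
    }
}
```

The digest step (MD5, SHA-1, SHA-256) is where CPU-specific intrinsics can matter, which is why these algorithms are useful for highlighting the cryptographic features of a new processor.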

Compression

We used SPECJVM2008's Compress benchmark based on the Lempel-Ziv-Welch (LZW) algorithm to test compression.

SPECJVM2008 and JMH Benchmarks Common Configuration

To ensure accurate measurement of CPU features and scalability, the JEG took the following steps:

- Tuned the benchmarks to eliminate any interference from garbage collection.

- Accounted for warmup time and the total runtime.

- Scaled benchmark thread counts as vCPUs were incremented.

By conducting these tests, the JEG was able to gain insights into the new CPU features and their impact on performance, allowing for more informed decision-making in terms of hardware and software optimizations.

Test Group 2: Scaling - CPU vs. System Memory

As mentioned, these Arm-based systems offer an entire physical core per vCPU. Hence, we wanted to study how we could optimize and fully utilize these physical cores to scale up and scale out different workloads on the Microsoft Build of OpenJDK.

Goal

The purpose was to discover any scaling implications on Microsoft's Build of OpenJDK when working with the various processor vs. memory configurations offered by the Ampere Altra-based SKUs. The JEG used several benchmarks/test harnesses (SPECjbb2015, HyperAlloc, YCSB/Cassandra), with the criterion being to design CPU vs. system memory scaling stress tests for each benchmark.

Test Details

The JEG used industry benchmarks designed to drive load in various shapes on the JVM. We also ensured that the JVM utilizes the available CPU and memory for each configuration offering for our Arm64-based SKUs.

Benchmark/Harness Variables

The JEG utilized common practices to quieten the operating system and increase run-to-run repeatability before tests, including, but not limited to:

- sync – force pending disk writes.

- echo 3 > /proc/sys/vm/drop_caches – clear out the memory caches.

- echo madvise > /sys/kernel/mm/transparent_hugepage/enabled – ensures that the OS lets the JVM choose to use Transparent Huge Pages (THP) for the heap and not the stack or other parts of the JVM. From the JVM side, we must enable the option on the command line: -XX:+UseTransparentHugePages.

- Enable -XX:+AlwaysPreTouch for the JVM heap.

- Set ParallelGC threads equal to the number of cores available to the JVM.

- For ParallelGC, we turned off AdaptiveSizePolicy by specifying -XX:-UseAdaptiveSizePolicy on the command line.

- We also turned off perf data gathering by specifying -XX:-UsePerfData on the command line for all benchmarking efforts.

Enable physical CPU binding and local allocation for all runs using numactl --physcpubind=$cpurange --localalloc, where $cpurange consists of the cores under test.
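As a rough illustration of how the JVM-side settings above combine, the sketch below assembles a Parallel GC launch command from the flags named in this section. The class name, the helper method, and the benchmark.jar argument are hypothetical; the OS-level steps (sync, drop_caches, the THP setting) need root and are deliberately left out.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: builds a launch command from the flags listed in this section. */
final class BenchmarkCommandLine {

    /**
     * @param cpuRange cores under test, in numactl syntax (for example "0-15")
     * @param cores    number of cores available to the JVM
     * @param jar      hypothetical benchmark jar to launch
     */
    static List<String> build(String cpuRange, int cores, String jar) {
        List<String> cmd = new ArrayList<>();
        cmd.add("numactl");
        cmd.add("--physcpubind=" + cpuRange);  // bind to the physical cores under test
        cmd.add("--localalloc");               // allocate memory on the local node
        cmd.add("java");
        cmd.add("-XX:+UseParallelGC");
        cmd.add("-XX:ParallelGCThreads=" + cores);
        cmd.add("-XX:-UseAdaptiveSizePolicy");
        cmd.add("-XX:+UseTransparentHugePages");
        cmd.add("-XX:+AlwaysPreTouch");
        cmd.add("-XX:-UsePerfData");
        cmd.add("-jar");
        cmd.add(jar);
        return cmd;
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", build("0-15", 16, "benchmark.jar")));
    }
}
```

The returned list can be handed to ProcessBuilder as-is, which keeps each flag a separate argument and avoids shell quoting issues.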

Each benchmark also had its specific configuration.

HyperAlloc

The HyperAlloc benchmark is a part of the Heapothesys toolset developed by the Amazon Corretto team to help analyze and optimize the JVM’s garbage collectors. HyperAlloc is a multi-threaded benchmark that measures memory allocation performance in Java applications. It uses a scalable allocation scheme that reduces contention on shared resources and can handle large numbers of threads.

For our scaling tests, we specified the following:

- Long-lived Dataset Size (LDS) auto-calculated to be 50% of the Java heap size for each scaling experiment.

- Allocation Rates of 4GBps, 8GBps and 16GBps

- Allocation Span by supplying a range (128 bytes to ~16MB) of object sizes.

- The max object size can be < 16MB (to count as non-humongous for G1) or > 16MB to count as `humongous` for G1.

- Medium Data Set (MDS) is 25% of the Java heap size.

- MDS is transient data that lives past the aging threshold and gets promoted into the old generation. MDS superficially applies similar pressures (as long-lived data) to the generations and inflates the heap occupancy.

- Short-lived transients should not feed the LDS or the MDS.

- The run script benchmarked the following configurations (note: humongous testing was essential for stressing G1 GC)

- Humongous without MDS

- Regular-sized objects without MDS

- Humongous with MDS

- Regular-sized objects with MDS
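The sizing rules above reduce to simple arithmetic, sketched below. The class and method names are hypothetical and this is not HyperAlloc's own code. The humongous check assumes G1 treats objects larger than half a region as humongous and that the region size is 32MB, which would put the threshold at the 16MB mentioned above; the exact boundary case is glossed over.

```java
/** Illustrative only: data set sizing and a G1 humongous check under stated assumptions. */
final class HyperAllocSizing {
    static final long MB = 1024L * 1024L;
    static final long GB = 1024L * MB;

    /** Long-lived data set: 50% of the Java heap. */
    static long ldsBytes(long heapBytes) {
        return heapBytes / 2;
    }

    /** Medium data set: 25% of the Java heap. */
    static long mdsBytes(long heapBytes) {
        return heapBytes / 4;
    }

    /** Assumption: an object larger than half a G1 region counts as humongous. */
    static boolean isHumongous(long objectBytes, long regionBytes) {
        return objectBytes > regionBytes / 2;
    }

    public static void main(String[] args) {
        long heap = 64 * GB;      // example heap size
        long region = 32 * MB;    // assumed G1 region size
        System.out.println("LDS bytes: " + ldsBytes(heap));
        System.out.println("MDS bytes: " + mdsBytes(heap));
        System.out.println("8MB humongous?  " + isHumongous(8 * MB, region));
        System.out.println("24MB humongous? " + isHumongous(24 * MB, region));
    }
}
```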

SPECjbb2015

SPECjbb2015 is an industry-standard benchmark designed to measure the performance and scalability of Java-based business applications. We have worked extensively with SPECjbb2015 over the past decade to optimize the performance of the entire software-hardware stack. The benchmark consists of a simulated enterprise application that emulates the behavior of a complete e-commerce system. It includes various workloads and scenarios, such as order placement, inventory management, and payment processing. These workloads and scenarios stress different system performance aspects by simulating a high-volume online transaction processing (OLTP) workload.

SPECjbb2015 includes several key metrics for evaluating scalability, such as sustained throughput and throughput under response time SLA constraints. These metrics make SPECjbb2015 an invaluable tool for identifying and addressing performance bottlenecks and validating hardware and software configurations for scaling in the cloud. The JEG has worked with OSG-Java for many years. We deeply understand the different Java-based benchmarks and their nuances and best practices. We can leverage this knowledge to help achieve optimal performance and scalability.

We used the run_composite and run_multi scripts to execute the SPECjbb2015 benchmark for our scaling tests.

The run_composite script is a single JVM – single host script used to launch all the benchmark components:

- The controller - the execution director.

- The transaction injectors - issue requests and services to the backend and measure response times for them.

- The backend - the actual business logic code that serves requests and services and processes transactions, and provides notifications.

This script is easy to use, and the community uses this for comparative learning and scaling experiments.

The run_multi script is a low-level script that provides more fine-grained control over the benchmark execution. It allows you to specify the controller outside of the measurement domain. The transaction injector and the backend then form a group. Depending on the scaling tests, we can have a 1:1 relationship between them or scale at 2:1 to drive more load towards the backend. The groups themselves can scale such that the backends can also have some inter-process communication with other backends sitting in other groups. You can also stress different parameters, such as the number of warehouses, the number of JVM instances, and the number of virtual users, among others. The run_multi script is ideal for more advanced users who need to customize the benchmark execution to suit their specific scaling needs.

For all the scripts, we standardized the following:

- Initial and max heap were set to around 87% of system memory available to the JVM.

- The rule we followed was to have a scaling multiple like the core-to-memory scaling available for each SKU. So, for Dps_v5, where we have a 4GB per vCPU ratio, we kept the initial and max heap size at 3.5GB per vCPU.

- For cases that needed a bigger nursery, the size was set to 10% of the max heap size.
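As a worked example of the heap sizing rule, the sketch below derives heap and nursery sizes from a SKU's vCPU count and memory-per-vCPU ratio. It assumes "around 87%" means the 7/8 fraction implied by 3.5GB out of every 4GB, and it expresses the results as -Xms, -Xmx, and -Xmn values. The class and method names are hypothetical.

```java
/** Illustrative only: heap and nursery sizing from the per-vCPU memory ratio. */
final class HeapSizing {

    /** Heap in MB: 7/8 of the memory available per vCPU (3.5GB of every 4GB). */
    static long heapMb(int vcpus, int gibPerVcpu) {
        return (long) vcpus * gibPerVcpu * 1024L * 7 / 8;
    }

    /** Nursery in MB: 10% of the max heap, rounded down. */
    static long nurseryMb(long heapMb) {
        return heapMb / 10;
    }

    /** Initial and max heap are set to the same value; the nursery flag is optional. */
    static String flags(int vcpus, int gibPerVcpu, boolean biggerNursery) {
        long heap = heapMb(vcpus, gibPerVcpu);
        String flags = "-Xms" + heap + "m -Xmx" + heap + "m";
        if (biggerNursery) {
            flags += " -Xmn" + nurseryMb(heap) + "m";
        }
        return flags;
    }

    public static void main(String[] args) {
        // A 16-vCPU Dps_v5 size at 4GiB per vCPU.
        System.out.println(flags(16, 4, true));
    }
}
```

For a 16-vCPU Dps_v5 size this works out to 16 x 3.5GB = 56GB of heap, with a nursery of roughly 5.6GB when the larger nursery is needed.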

Our baseline configuration just had the standard configuration listed above. For the minimally “tuned” runs, we tested different configurations as listed here:

- We tested changing the byte offset (object alignment) to 16 and enabling compressed oops and compressed class pointers for max heap sizes >= 32GB but < 64GB.

- Different fork-join pool configurations for different tiers offered by the benchmark.
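The 32GB and 64GB bounds in the first item follow from how compressed oops are encoded: a 32-bit reference scaled by the object alignment. The sketch below works through that arithmetic. It assumes the 16-byte setting refers to HotSpot's object alignment (the post does not name the flag), and it ignores the small amount of address space the JVM reserves, so real limits sit slightly below these round numbers. The names are hypothetical.

```java
/** Illustrative only: upper bound on the heap that a 32-bit compressed oop can address. */
final class CompressedOopsLimit {
    static final long GIB = 1L << 30;

    /** 2^32 encodable values, each scaled by the object alignment in bytes. */
    static long maxHeapBytes(int objectAlignmentInBytes) {
        return (1L << 32) * objectAlignmentInBytes;
    }

    /** Simplification: a heap fits if it is strictly below the encodable range. */
    static boolean fits(long heapBytes, int objectAlignmentInBytes) {
        return heapBytes < maxHeapBytes(objectAlignmentInBytes);
    }

    public static void main(String[] args) {
        long heap = 48 * GIB;
        System.out.println("8-byte alignment limit (GiB):  " + maxHeapBytes(8) / GIB);
        System.out.println("16-byte alignment limit (GiB): " + maxHeapBytes(16) / GIB);
        System.out.println("48GiB heap fits with 8-byte alignment?  " + fits(heap, 8));
        System.out.println("48GiB heap fits with 16-byte alignment? " + fits(heap, 16));
    }
}
```

The trade-off is that a coarser alignment pads every object to a larger boundary, so the saving from narrower references has to outweigh the extra padding.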

JVM-specific tuning:

These tunings are an essential aspect of performance engineering. Apart from the standard JVM tunings and settings mentioned earlier, the JEG also conducted a scale analysis on software prefetch distance, type profile width, and small code inline sizes to understand how different options can affect the utilization of resources and benchmark scores.

- Software prefetch distance - how far ahead of the current access, in bytes, data is prefetched in anticipation of upcoming memory accesses. A larger prefetch distance can improve performance by hiding memory access latency. However, it can also lead to increased cache pollution and contention.

- Type profile width - refers to the number of distinct receiver types the VM records per call site in its profile, which determines whether it can treat a call as monomorphic, bimorphic, or megamorphic when optimizing virtual method dispatch. A wider type profile can improve performance by allowing the VM to generate more optimized code. However, it can also increase compilation times and memory usage.

- Small code inline sizes - refers to the maximum size of a method the VM will inline (i.e., replace a method call with its body at the call site). Larger inline sizes can improve performance by reducing call overhead. However, they can also increase code size and compilation times.

By carefully tuning these parameters, the JEG was able to understand the nuances of the Armv8.2 system and how these options tracked for the architecture and the benchmark.
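To illustrate why type profile width matters, here is a small sketch of a single virtual call site that sees one, two, or three receiver types. The classes are made up for illustration. HotSpot's TypeProfileWidth is, to our knowledge, 2 by default, so a site that sees three types would overflow the profile; treat that default as an assumption and check it with -XX:+PrintFlagsFinal.

```java
/** Illustrative only: one call site, fed with a varying number of receiver types. */
final class CallSiteShapes {

    interface Shape {
        double area();
    }

    static final class Circle implements Shape {
        final double r;
        Circle(double r) { this.r = r; }
        public double area() { return Math.PI * r * r; }
    }

    static final class Square implements Shape {
        final double side;
        Square(double side) { this.side = side; }
        public double area() { return side * side; }
    }

    static final class Rect implements Shape {
        final double w, h;
        Rect(double w, double h) { this.w = w; this.h = h; }
        public double area() { return w * h; }
    }

    /** The s.area() call below is the single call site whose receiver types get profiled. */
    static double totalArea(Shape[] shapes) {
        double sum = 0.0;
        for (Shape s : shapes) {
            sum += s.area();
        }
        return sum;
    }

    public static void main(String[] args) {
        Shape[] oneType = { new Square(2), new Square(3) };
        Shape[] twoTypes = { new Square(2), new Rect(2, 3) };
        Shape[] threeTypes = { new Square(2), new Rect(2, 3), new Circle(1) };
        System.out.println(totalArea(oneType));
        System.out.println(totalArea(twoTypes));
        System.out.println(totalArea(threeTypes));
    }
}
```

In a real measurement each variant would run in its own JVM, since once the shared call site has seen all three types its profile stays polluted for the rest of the process.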

Appendix I – Benchmark Coverage

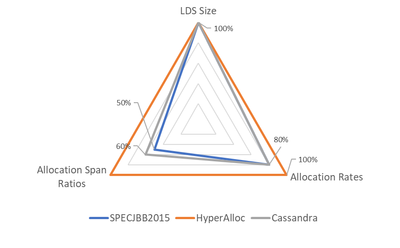

In Figure 1 below, you can see the coverage (as a percentage range over the axes) achieved by the benchmarks. Each benchmark stressed different Live Data Set (LDS) sizes over various allocation rates. HyperAlloc could stress 100%, whereas SPECjbb2015 and Cassandra could only stress 50-80% depending on the allocation rate and spans (e.g., allocation span ratios). Since Cassandra and SPECjbb2015 filled similar needs, we only needed to dive more deeply into one after the initial analysis.

Figure 1: Benchmark Selection criteria coverage offered by each benchmark

Definitions

Allocation Span for Parallel GC

Allocation Span for Parallel GC refers to the allocation behavior of the Parallel GC collector. The fast path allocations fall into the thread-local allocation buffers (TLABs), which are special areas within the Eden space. Objects that don’t fit the TLAB size requirements are allocated outside the TLABs, directly into the Eden space. For objects larger than a certain threshold, the collector performs a Large Object Allocation (LOA) directly into the tenured or old generation space. The tenured or old generation is used to store objects that have survived the young collection cycles and have aged past the preset tenuring threshold.

Allocation Span for G1GC

Allocation Span for G1GC is similar to the Parallel GC case above, except that we also consider the humongous objects, which are allocated directly into humongous regions taken out of the old generation space.

Allocation Span Ratios

Allocation span refers to the space in the Java heap where an object is allocated. By measuring the span of allocations, we can gain insight into the allocation behavior of a benchmark or an application and identify potential bottlenecks in the heap. To calculate span ratios, we can compare the number of objects allocated in different heap areas. For example, we can calculate the ratio of objects allocated in the Eden space vs. those allocated in the Old generation space. We can also separately calculate the ratio of objects allocated within the TLAB versus those allocated outside the TLAB area. These ratios can determine whether an application is experiencing memory pressure in specific areas of the heap and guide optimizations to improve memory usage.
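The ratio calculation described above can be sketched as follows for the Parallel GC case. The size thresholds passed in are hypothetical inputs rather than values the JVM exposes in this form, and in practice the counts would come from GC logs or a profiler rather than from a list of object sizes.

```java
/** Illustrative only: bucket allocations by span and compute ratios between buckets. */
final class AllocationSpanRatios {

    enum Span { TLAB, EDEN_OUTSIDE_TLAB, OLD }

    /** Classifies one allocation by size, using caller-supplied (hypothetical) thresholds. */
    static Span classify(long size, long tlabLimit, long largeObjectThreshold) {
        if (size >= largeObjectThreshold) {
            return Span.OLD;               // large object allocated directly in the old generation
        }
        if (size > tlabLimit) {
            return Span.EDEN_OUTSIDE_TLAB; // too big for a TLAB, still allocated in Eden
        }
        return Span.TLAB;                  // fast path
    }

    /** Returns allocation counts indexed by Span.ordinal(). */
    static long[] countBySpan(long[] sizes, long tlabLimit, long largeObjectThreshold) {
        long[] counts = new long[Span.values().length];
        for (long size : sizes) {
            counts[classify(size, tlabLimit, largeObjectThreshold).ordinal()]++;
        }
        return counts;
    }

    /** Plain ratio; a zero denominator yields Infinity or NaN rather than an exception. */
    static double ratio(long numerator, long denominator) {
        return (double) numerator / denominator;
    }

    public static void main(String[] args) {
        long[] sizes = { 64, 128, 4096, 1L << 20 };
        long[] c = countBySpan(sizes, 1024, 512 * 1024);
        long eden = c[Span.TLAB.ordinal()] + c[Span.EDEN_OUTSIDE_TLAB.ordinal()];
        System.out.println("TLAB : outside TLAB = "
                + ratio(c[Span.TLAB.ordinal()], c[Span.EDEN_OUTSIDE_TLAB.ordinal()]));
        System.out.println("Eden : Old = " + ratio(eden, c[Span.OLD.ordinal()]));
    }
}
```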

[1] Please note that Microsoft Build of OpenJDK has had security updates since then. Please download the latest from https://www.microsoft.com/openjdk/download.