This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Introduction

In the world of large language models, model customization is key. It's what transforms a standard model into a powerful tool tailored to your business needs.

Let’s explore three techniques to customize a Large Language Model (LLM) for your organization: prompt engineering, retrieval augmented generation (RAG), and fine-tuning.

In this blog you will learn how to:

- Optimize prompts to help a model produce more accurate responses.

- Retrieve information from external sources for better accuracy.

- Teach a model new skills and tasks.

- Teach a model new information from a custom dataset.

When to Use Prompt Engineering

“Prompt engineering” is the art and science of designing prompts that lead LLMs to generate responses aligned with your organization's objectives. It is a popular technique, adopted by customers like ASOS, Siemens, and KPMG. The following are a couple of common prompt engineering techniques.

Few-Shot Learning

Few-shot learning (FSL) is a technique best suited for tasks where a few examples are sufficient for the model to understand the desired problem. FSL contrasts with zero-shot learning (ZSL), where the prompt contains only a description of the task, without examples. That is, you describe what you want the model to do (“you’re a SQL expert” or “write friendly responses”) but provide no examples.

Let’s look at a concrete example. A study of few-shot learning techniques found that FSL improved performance on SAT analogies: GPT-3 achieved an accuracy of 53.7% using ZSL and 65.2% using FSL.

A sample zero-shot prompt for this task:

“Complete the following analogy. Food is to hunger as water is to (a) thirst, (b) longing, (c) pain, (d) mirth.”

A sample few-shot prompt, with examples incorporated into the input prompt:

“Complete the following analogies.

Analogy: Legs are to walking as eyes are to (a) chewing, (b) standing, (c) seeing, (d) jumping

Answer: C

Analogy: Up is to down as left is to (a) right, (b) sideways, (c) across, (d) outside

Answer: A

Analogy: Food is to hunger as water is to (a) thirst, (b) longing, (c) pain, (d) mirth

Answer:”

The model is expected to complete the final line with A (thirst).

Because FSL pads the prompt with examples, prompts can become long, which may drive up both cost and latency. FSL is therefore recommended for simple, low-volume inference tasks where the prompt stays reasonably short.
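To make the zero-shot versus few-shot difference concrete, here is a minimal sketch that assembles both prompts for the analogy task. The helper name `build_prompt` and the exact prompt layout are our own illustrative assumptions, not a specific library API, and no model is called. Word counts stand in very roughly for tokens.

```python
# Illustrative sketch: the helper name and prompt layout are assumptions,
# not a specific library API. No model is called here.

INSTRUCTION = "Complete the following analogies."

# Worked examples for the few-shot prompt: (analogy, correct answer letter).
EXAMPLES = [
    ("Legs are to walking as eyes are to (a) chewing, (b) standing, (c) seeing, (d) jumping", "C"),
    ("Up is to down as left is to (a) right, (b) sideways, (c) across, (d) outside", "A"),
]

QUERY = "Food is to hunger as water is to (a) thirst, (b) longing, (c) pain, (d) mirth"


def build_prompt(query: str, examples: list[tuple[str, str]]) -> str:
    """Build a prompt; passing no examples gives a zero-shot prompt."""
    lines = [INSTRUCTION, ""]
    for analogy, answer in examples:
        lines += [f"Analogy: {analogy}", f"Answer: {answer}", ""]
    # The final answer is left blank for the model to complete.
    lines += [f"Analogy: {query}", "Answer:"]
    return "\n".join(lines)


zero_shot = build_prompt(QUERY, [])
few_shot = build_prompt(QUERY, EXAMPLES)

# Whitespace-separated words are only a rough proxy for tokens, but the
# comparison shows how every added example lengthens (and costs) each request.
print(len(zero_shot.split()), len(few_shot.split()))  # 22 60
```

Each example adds a fixed chunk of text to every single request, which is why FSL cost grows with inference volume.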

Chain-of-Thought (CoT) Prompting

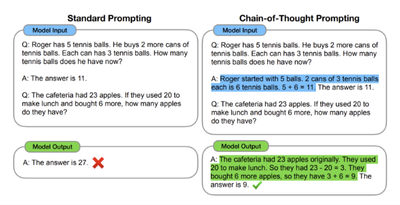

Chain-of-thought (CoT) prompting elicits the model to “think step by step” or otherwise break down its inferencing process into logical units. Just as humans can improve decision-making and accuracy by thinking methodically, LLMs can show gains in accuracy from a similar approach. This is where Chain-of-thought (CoT) prompting elicits the model to “think step by step” or otherwise break down its inferencing process into logical units.

Here’s an example from a seminal study on CoT: Chain-of-Thought Prompting Elicits Reasoning in Large Language Models:

CoT is best suited for tasks that require complex reasoning. Note that because the model outputs its intermediate reasoning steps as part of the completion, the response becomes longer, which affects latency, throughput, and cost.
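A minimal sketch of few-shot CoT prompting, assuming a plain-text completion model: a worked exemplar whose answer spells out its reasoning (modeled on the well-known tennis-ball exemplar from the CoT study) is prepended to the new question, and a small parser pulls the final number out of the step-by-step completion. The function names are hypothetical, and the completion below is hand-written for illustration, not real model output.

```python
import re
from typing import Optional

# Worked exemplar whose answer shows its reasoning before the final
# "The answer is N." line, so the model imitates that structure.
COT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11.\n\n"
)


def build_cot_prompt(question: str) -> str:
    """Hypothetical helper: prepend the step-by-step exemplar to a new question."""
    return COT_EXEMPLAR + f"Q: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Hypothetical helper: read the final answer, assuming the exemplar's phrasing."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt(
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?"
)

# Hand-written stand-in for a model completion (no model is called here).
completion = (
    "They started with 23 apples and used 20, leaving 23 - 20 = 3. "
    "They bought 6 more, so 3 + 6 = 9. The answer is 9."
)
print(extract_answer(completion))  # 9
```

The reasoning sentences before "The answer is 9." are exactly the extra output tokens that raise latency and cost.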

When to Use Retrieval-Augmented Generation (RAG)

In traditional (non-LLM) retrieval-based methods, responses are produced by selecting pre-existing responses from a database based on the input query. This approach can yield accurate and contextually appropriate responses but may lack flexibility and creativity.

Generative models, on the other hand, generate responses from scratch based on learned patterns and probabilities. While these models can produce more diverse and creative responses, they may sometimes generate inaccurate or irrelevant information.

RAG combines these two approaches: a retriever identifies relevant passages or documents from a large corpus of text, and a generative model then produces a response based on the retrieved information. This allows for more accurate and contextually relevant responses while maintaining the flexibility and creativity of generative models.
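The retrieve-then-generate flow can be sketched in a few lines. This is a toy under stated assumptions: the three documents are made up, bag-of-words cosine similarity stands in for the embedding-based vector search production RAG systems typically use, and the generation step (sending the prompt to an LLM) is left out. All function names are hypothetical.

```python
import math
import re
from collections import Counter

# Made-up corpus standing in for an organization's documents.
DOCUMENTS = [
    "Orders ship within 2 business days from our Seattle warehouse.",
    "Items can be returned within 30 days of delivery with a receipt.",
    "Gift cards never expire and can be used online or in store.",
]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retriever: rank documents by similarity to the query, keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Ground the generator by placing the retrieved passages in its prompt."""
    context = "\n".join(f"- {p}" for p in retrieve(query, docs, k))
    return (
        "Answer the question using only the sources below.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


print(build_rag_prompt("Can items be returned after 30 days?", DOCUMENTS))
```

Updating what the model "knows" here only means editing `DOCUMENTS` (or reindexing a real store); the model itself is untouched.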

RAG is often the easiest way to use an LLM effectively with new knowledge. Customers like Meesho have used RAG to improve the accuracy of their models and ensure users get the right results.

When to Fine-Tune

Fine-tuning refers to the process of customizing a pre-trained model by further training it on labelled data to improve its performance on specific tasks; this can also reduce the computational resources needed at inference time. Two of the most common use cases for fine-tuning are reducing costs (by shortening prompts or improving the performance of cheaper models) and teaching the model new skills. You can also check out our AI Show to learn more about when to fine-tune and when not to.

Reducing Costs

Inference cost is a function of the number of tokens in the prompt and the response. Fine-tuning can help reduce the length of both.

Consider the following prompt-completion pair for Tweet sentiment classification using a base (not fine-tuned) LLM:

Prompt:

Determine the sentiment of the following Tweet. Write your answer as a single word, either 'positive' or 'negative'. We'll first give two examples and then the actual tweet to classify.

Example #1: “Yay! My photo shows up now!” Classification: positive

Example #2: “I need more followers…” Classification: negative

Tweet: “I’m stuck on the motorway”

Response: Classification: Negative

After fine-tuning, the model no longer needs instructions or examples. During training, the model is provided with examples of prompts and appropriate responses. It learns that each prompt is the text of a Tweet, and that the completion should always be “positive” or “negative” based on the sentiment of the text.
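Preparing that training data might look like the sketch below, which writes prompt/response pairs as JSON Lines in the chat "messages" format used for fine-tuning chat models such as GPT-35-Turbo. Assumptions to check against the current Azure OpenAI fine-tuning documentation: the file name and helper names are hypothetical, the optional system message is omitted so the prompt really is just the tweet, and two examples are far too few for a real job.

```python
import json

# The two labelled tweets from the base-model prompt; a real training set
# needs many more examples (placeholder for your own labelled data).
LABELLED_TWEETS = [
    ("Yay! My photo shows up now!", "positive"),
    ("I need more followers…", "negative"),
]


def to_chat_example(tweet: str, label: str) -> dict:
    """Hypothetical helper: one training record with no instructions or few-shot
    examples; the user turn is just the tweet, the assistant turn just the label."""
    return {"messages": [
        {"role": "user", "content": tweet},
        {"role": "assistant", "content": label},
    ]}


def write_jsonl(path: str, rows: list[tuple[str, str]]) -> None:
    """Write one JSON object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for tweet, label in rows:
            f.write(json.dumps(to_chat_example(tweet, label), ensure_ascii=False) + "\n")


write_jsonl("tweet_sentiment_train.jsonl", LABELLED_TWEETS)
```

Because the instructions live in the training data rather than in every prompt, inference requests shrink to the tweet itself.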

Fine-Tuned Model Prompt: “I’m stuck on the motorway.”

Fine-Tuned Model Response: “Classification: Negative”

In this example, fine-tuning shortens the prompt by a factor of nearly 10x, from 78 tokens in the first example to 8 in the second. Shorter prompts are cheaper, and they may also be faster.
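The arithmetic behind that saving is simple, since prompt-side cost scales linearly with tokens per request and request volume. In this sketch only the 78 and 8 token counts come from the example; the daily volume and prices are hypothetical placeholders. The two prices are kept as separate parameters because fine-tuned deployments can be billed at different rates (and may carry hosting charges), so equal prices are an assumption, not a given.

```python
# Token counts from the tweet example.
BASE_PROMPT_TOKENS = 78   # instructions + two examples + tweet, sent to the base model
TUNED_PROMPT_TOKENS = 8   # just the tweet, sent to the fine-tuned model

# Hypothetical figures: substitute your own traffic and your deployments' actual rates.
REQUESTS_PER_DAY = 1_000_000
BASE_PRICE_PER_1K = 0.0015
TUNED_PRICE_PER_1K = 0.0015


def daily_prompt_cost(tokens_per_request: int, price_per_1k: float, requests: int) -> float:
    """Prompt-side cost: tokens per request x price per token x number of requests."""
    return tokens_per_request / 1000 * price_per_1k * requests


ratio = BASE_PROMPT_TOKENS / TUNED_PROMPT_TOKENS
print(f"{ratio:.2f}x fewer prompt tokens")  # 9.75x fewer prompt tokens
print(f"base: ${daily_prompt_cost(BASE_PROMPT_TOKENS, BASE_PRICE_PER_1K, REQUESTS_PER_DAY):.2f}/day")
print(f"fine-tuned: ${daily_prompt_cost(TUNED_PROMPT_TOKENS, TUNED_PRICE_PER_1K, REQUESTS_PER_DAY):.2f}/day")
```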

Another way fine-tuning can reduce costs is by fine-tuning a smaller, cheaper model, like GPT-35-Turbo, to perform as well as a more powerful general-purpose model on a specific task.

New Skills on In-Domain Tasks

Fine-tuning truly excels when you need to tailor the model's behavior for in-domain tasks, that is, tasks where all the necessary information is already present in the model's pretraining data.

Customizing the model’s behavior (i.e., teaching it a new skill) often entails either (1) a change in how the model reasons about things it already knows or (2) a change in how it formats its responses.

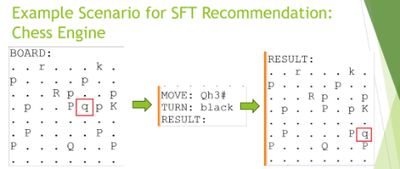

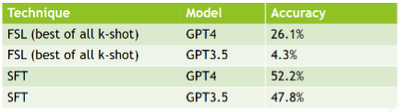

To demonstrate using fine tuning to teach an LLM new skills, we taught GPT-35-Turbo and GPT-4 how to play chess. In our training data, we provided the model with prompts containing a text grid representation of a chess board, and responses containing a move in algebraic chess notation and the resulting board state. Our fine-tuned model was effectively a chess engine: given a board state, it learned to recommend the best next move.
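The post doesn't specify the exact text-grid format used in the chess experiment, so the sketch below assumes one: eight rows with rank 8 first, `.` for empty squares, uppercase for White and lowercase for Black. It builds a single hypothetical chat-format training record whose prompt is the board and whose response is a move in algebraic notation plus the resulting board. The move is chosen by hand, the helper names are hypothetical, and `move_piece` does no legality checking; it only illustrates the data shape.

```python
# Hypothetical text-grid format: rank 8 first, '.' = empty square,
# uppercase = White pieces, lowercase = Black pieces.
START = [
    "rnbqkbnr",
    "pppppppp",
    "........",
    "........",
    "........",
    "........",
    "PPPPPPPP",
    "RNBQKBNR",
]


def square_to_index(square: str) -> tuple[int, int]:
    """Map an algebraic square like 'e2' to (row, col), with row 0 = rank 8."""
    file, rank = square[0], int(square[1])
    return 8 - rank, ord(file) - ord("a")


def move_piece(board: list[str], src: str, dst: str) -> list[str]:
    """Return a new board with the piece moved; no legality checks (illustration only)."""
    grid = [list(row) for row in board]
    r1, c1 = square_to_index(src)
    r2, c2 = square_to_index(dst)
    grid[r2][c2] = grid[r1][c1]
    grid[r1][c1] = "."
    return ["".join(row) for row in grid]


def render(board: list[str]) -> str:
    return "\n".join(board)


def make_training_example(board: list[str], san: str, src: str, dst: str) -> dict:
    """Hypothetical record: board in, move in algebraic notation + resulting board out."""
    after = move_piece(board, src, dst)
    return {"messages": [
        {"role": "user", "content": render(board)},
        {"role": "assistant", "content": f"{san}\n{render(after)}"},
    ]}


example = make_training_example(START, "e4", "e2", "e4")
print(example["messages"][1]["content"])
```

The assistant turn carries both the move and the new board, which is the "novel format" the model has to learn to produce from chess knowledge it already has.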

The best LLMs are already familiar with chess logic and concepts; we simply need to guide the model to output the board in a novel format (here, our model “customization”) according to logic it already knows. We put this scenario to the test with a few hundred training examples, and supervised fine-tuning vastly outperformed FSL, even when the few-shot prompt was filled to the maximum context length. Because this task doesn’t involve new data, techniques like RAG are a poor fit here.

Deciding between RAG and Fine-Tuning

Even if RAG and other techniques like supervised fine-tuning (SFT) achieve equal performance, RAG is the more appropriate choice when the underlying knowledge corpus is frequently updated, because reindexing new documents is far cheaper than repeating the SFT procedure. A daily news chatbot is one example: fine-tuning every day would be impractical.
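As a compact recap, the guidance in this post can be condensed into a rough decision heuristic. This is a deliberately simplified sketch of our own, not an official decision procedure: the `Scenario` fields and `suggest_technique` are hypothetical names, and the techniques are not mutually exclusive in practice.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    # Hypothetical fields summarizing the questions raised in this post.
    needs_new_knowledge: bool        # facts the base model hasn't seen
    knowledge_changes_often: bool    # e.g. a daily news corpus
    needs_new_skill_or_format: bool  # in-domain behavior change, like the chess example
    few_examples_suffice: bool       # task is learnable from a handful of examples


def suggest_technique(s: Scenario) -> str:
    """Hypothetical heuristic condensing this post's guidance."""
    # New or frequently changing knowledge: retrieval keeps answers current,
    # and reindexing documents is cheaper than repeating fine-tuning.
    if s.needs_new_knowledge or s.knowledge_changes_often:
        return "RAG"
    # In-domain skill or output-format change that a few examples can't teach.
    if s.needs_new_skill_or_format and not s.few_examples_suffice:
        return "fine-tuning"
    # Otherwise start with prompt engineering (zero-shot, few-shot, or CoT).
    return "prompt engineering"


news_bot = Scenario(needs_new_knowledge=True, knowledge_changes_often=True,
                    needs_new_skill_or_format=False, few_examples_suffice=False)
print(suggest_technique(news_bot))  # RAG
```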

Additional Resources

- Introduction to prompt engineering

- Introduction to Retrieval Augmented Generation

- Customize a Model with Fine-tuning

- Using your data with Azure OpenAI Service (Microsoft Learn)

- Customize a model with Azure OpenAI Service (Microsoft Learn)

- To Finetune or Not Finetune, That's the Question