This post has been republished via RSS; it originally appeared at: AI Customer Engineering Team articles.

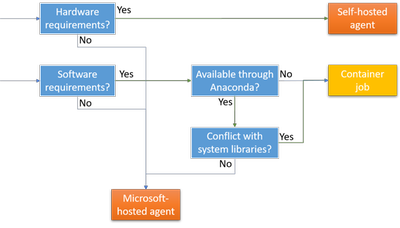

In a previous blog post, I provided an overview of how Azure DevOps can be used to reduce the friction between app developers and data scientists. In this blog post, I’m going to address a more advanced scenario, where we ditch the standard build environment of Azure DevOps and instead create a custom environment that satisfies a complex set of hardware and software dependencies. Figure 1 shows the decision process. The sections that follow describe the components and the rationale for selecting one path over another.

Figure 1: How to decide when to go with a self-hosted agent and when to use a container job.

Microsoft-hosted build agents

For a lot of scenarios, Azure DevOps users may be able to rely on Microsoft-hosted build agents. Let’s refer to this as the standard build environment. In these cases, a short YAML definition is all you need to create an Azure build pipeline.

Such a pipeline could, for example, be triggered every time a commit is pushed to the master branch of your repository, and run on an Ubuntu 16.04 virtual machine to build a Python wheel of your source code.
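As a rough sketch of such a pipeline (not the original definition from this post), the YAML could look like the following. The Python version and the presence of a `setup.py` at the repository root are assumptions made for illustration.

```yaml
# Minimal build pipeline sketch; assumes a setup.py at the repository root.
trigger:
- master                      # run on every push to the master branch

pool:
  vmImage: 'ubuntu-16.04'     # Microsoft-hosted image named in the text; substitute a currently offered image

steps:
- task: UsePythonVersion@0
  inputs:
    versionSpec: '3.6'        # assumed Python version
- script: |
    python -m pip install --upgrade pip setuptools wheel
    python setup.py bdist_wheel
  displayName: 'Build Python wheel'
```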

If your build pipeline requires software dependencies, it will often be sufficient to create and activate a conda environment at the beginning of your pipeline, using the CondaEnvironment task of Azure DevOps.
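A step along these lines might create and activate the environment before the build runs. The environment name and package list are hypothetical placeholders, and the sketch assumes Conda is available on the agent.

```yaml
# Sketch only: environment name and package specs are illustrative assumptions.
steps:
- task: CondaEnvironment@1
  inputs:
    createCustomEnvironment: true
    environmentName: 'build-env'        # hypothetical environment name
    packageSpecs: 'python=3.6 numpy'    # assumed dependencies
    updateConda: false
- script: python setup.py bdist_wheel   # runs with the environment active
  displayName: 'Build Python wheel in Conda environment'
```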

However, in some scenarios you will hit a wall with this approach. Let’s use a concrete scenario: say you created a custom neural network architecture in PyTorch, including C++ extensions to the PyTorch backend. You decided to implement these extensions in C++, instead of extending the PyTorch Function or Module in Python, because you want these operations to execute very quickly or because your extensions interact with other C++ libraries. In this case, you need complete control over the hardware and software, and having control over the operating system of your build environment would certainly make things easier. That is, it might be time to switch to using container jobs, self-hosted agents, or both.

Container Jobs

Container jobs give you more control over the build environment than you get from creating a Conda environment on a build agent, because containers offer isolation from the host operating system. This allows you to pin versions of software that is not available as a Conda package or that is better installed system-wide (e.g. the CUDA toolkit). You can even run a different operating system inside your container than the one running on the host (e.g. run an Ubuntu Linux container on a Windows 10 VM). This can be immensely useful, not only if your build environment has a complex set of dependencies, but also if you want to preserve the reproducibility of your pipeline for a long time into the future.

Executing your build pipeline inside your custom-made container is straightforward. You simply include a section towards the top of your pipeline YAML file that specifies which container to use.

In that section, we provide the registry (my private Azure Container Registry in this example), the name and tag of the container image, an endpoint for a secure connection for pulling the image, and optional arguments for how to start the container. In this case, we want the container to have access to all NVIDIA CUDA-capable GPUs on the build agent. With this section in place, each step of our pipeline runs inside the container.
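A container section of this kind might be sketched as follows. The registry, image name, tag, and service connection name are hypothetical, and the `--gpus all` option is an assumption that presumes a recent Docker version with the NVIDIA container runtime installed on the agent. Older setups may need a different option.

```yaml
# Sketch only: all names below are hypothetical placeholders.
container:
  image: myregistry.azurecr.io/pytorch-build:1.0   # registry/name:tag of the image
  endpoint: my-acr-service-connection              # Docker registry service connection used to pull the image
  options: --gpus all                              # assumed flag to expose all NVIDIA GPUs to the container

steps:
- script: python setup.py bdist_wheel              # this step now runs inside the container
  displayName: 'Build inside container'
```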

You may ask: How would one build a custom Docker image suitable for Azure DevOps? In my opinion, you have at least three great options:

- Start with one of the Dockerfiles offered by the Azure Machine Learning service in its AzureML-Containers repository. Simply add your dependencies to one of those Dockerfiles.

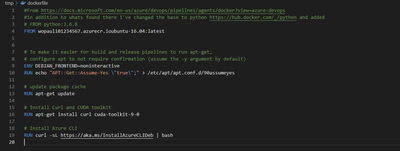

- Alternatively, you could use either a container image that you previously used for deployment, or one that you used for training your model on AML compute. You would then create your own Dockerfile but specify that image as the base image to start from (see Figure 2).

- Of course, the cleanest solution would be to create your own Dockerfile from scratch. This allows you complete control over what is installed in the image, potentially reducing a lot of overhead.

Figure 2: A simple Dockerfile that pulls an existing base image from the Azure Container Registry and adds the dependencies needed for our project (CUDA toolkit and Azure CLI).

Self-hosted agents

Very often Microsoft-hosted agents will be the most convenient option because you don’t have to worry about upgrades and maintenance. Every time you run your build pipeline you get a fresh virtual machine with all upgrades taken care of.

However, in our use case, we need a VM with an NVIDIA GPU so that we can compile our C++ extensions for PyTorch with CUDA support. This means we will have to use a self-hosted agent. One good option is to configure a Data Science VM to be your self-hosted agent. The instructions for creating a self-hosted agent are straightforward and should only take a couple of minutes to complete. After you have set up your agent and added it to an agent pool, you only need to change the pool specification in your pipeline definition so that it points to that pool.
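The pool change could look roughly like this. The pool name is a hypothetical placeholder for whatever pool you registered your agent in, and the demand is an optional assumption that restricts the job to Linux agents.

```yaml
# Sketch only: replaces the Microsoft-hosted 'vmImage' pool with a self-hosted pool.
pool:
  name: 'MyGpuAgentPool'        # hypothetical name of the self-hosted agent pool
  demands:
  - agent.os -equals Linux      # optional: only run on Linux agents in that pool
```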

Summary

While Microsoft-hosted agents in combination with a Conda environment will often be the most convenient option, Azure gives you a lot of options to configure the build environment to meet your needs. In this blog post, I outlined a scenario that demonstrates the limitations of the standard Azure DevOps environment, in which the build pipeline is executed directly on a Microsoft-hosted build agent, and showed how to get around these limitations. To get more control over software dependencies and the operating system, you can use container jobs. For additional control over hardware, you can use a self-hosted build agent. Note that the decision whether to run your pipeline inside a container and the decision whether to use a self-hosted agent are independent. You can run your pipeline directly on a Microsoft-hosted agent or a self-hosted agent, and you can run it inside a container on either kind of agent.

As always, please leave comments and questions below, or email me. I’d be especially interested to hear whether you have run into other situations in which you had to go with a container job or a self-hosted agent!