This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

Introduction

Zero Trust is defined by Microsoft as a model that “assumes breach and verifies each request as though it originates from an open network. Regardless of where the request originates or what resource it accesses, Zero Trust teaches us to ‘never trust, always verify.’ Every access request is fully authenticated, authorized, and encrypted before granting access. Micro-segmentation and least privileged access principles are applied to minimize lateral movement.”

Therefore, Windows 10 devices that leverage a Zero Trust model are typically off-network and use only Internet connectivity to securely authenticate. In this case, authentication is with Azure Active Directory only.

Microsoft Intune is the premier management interface and control plane of Zero Trust devices. Intune provides a significant number of configuration profiles and administrative templates that are the same as or similar to Group Policy to manage Windows 10 devices across the enterprise. However, a complete one-to-one match between Intune policy and Group Policy does not exist at the time of this blog post, leaving some organizations in a potentially less secure posture than using Group Policy, and potentially exposing them to open audit findings. Group Policy can continue to be leveraged in Hybrid environments where the Windows 10 devices have access to a domain controller.

In a Zero Trust environment with no connectivity back to traditional on-premises or Azure-hosted domain controllers, and no way to VPN into such an environment, the security gap between Intune and Group Policy could be a roadblock to more organizations leveraging the power of the cloud. Azure AD does not provide Group Policy capabilities, nor is it designed to. Intune, through the Windows 10 MDM channel, can provide constant enforcement of policy much like Group Policy does. But what is an organization to do about the policy gaps that cannot yet be managed through the MDM channel? Enter Azure Automation Desired State Configuration (DSC).

Desired State Configuration for Windows 10 devices

Desired State Configuration (DSC) can be used to monitor and enforce that a company’s computer systems are in a desired configuration. This could be to ensure certain services are started, certain registry keys exist, certain features are added, certain permissions are present and enforced, etc. These tasks are performed using both Microsoft-produced and community-provided PowerShell modules. DSC is built into all modern Windows operating systems and is also available for Linux.

Azure Automation contains a DSC pull server as part of the basic deployment. In this configuration, computer systems (called nodes within the DSC blade) join the Azure Automation DSC service, pull configurations down, and apply the settings. The configurations are compiled as part of a separate process and contain the elements of the desired configuration. The nodes need either direct outbound Internet access or a configured proxy. They request the configuration from the DSC pull service in Azure over port 443, in the region where the Azure Automation account is deployed.

The general breakdown of the involved pieces is as follows:

1. Set up an Azure Automation account

2. (Optional) Link a Log Analytics workspace

3. Deploy a desired state configuration to Azure Automation DSC

a. Author, Deploy, and then Compile the configuration in Azure Automation DSC

b. Author and Compile while Deploying the configuration to Azure Automation DSC

4. Deploy a PowerShell script to nodes that generates a meta.MOF file to be used to register with Azure Automation DSC

5. Monitor status

6. Where to begin troubleshooting

Be aware that there are costs associated with using Azure Automation DSC on any device, which could be up to $6/node/month. However, there is some pricing flexibility available.

Set up an Azure Automation Account

It is important to determine which region the Azure Automation account will be deployed in. If a Log Analytics workspace is also desired, pay special attention to the region mappings. The mappings matter if Update Management (which at this time is only supported on Windows Server), Inventory Collection, or Change Tracking is intended to be used.

Follow the quick-start to set up an Azure Automation account, but skip the section on running a runbook.
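
For those who prefer scripting over the portal, the account can also be created with the Az PowerShell module. The following is a minimal sketch rather than the quick-start's own method; the resource group name, account name, and region are placeholders chosen for illustration, and the signed-in identity is assumed to already hold the required permissions.

```powershell
# Requires the Az.Accounts, Az.Resources, and Az.Automation modules.
# All names and the region below are illustrative placeholders.
$resourceGroup     = 'rg-dsc-example'
$automationAccount = 'aa-dsc-example'
$location          = 'eastus2'   # pick the region with the Log Analytics mappings in mind

# Sign in interactively.
Connect-AzAccount

# Create a resource group to hold the account.
New-AzResourceGroup -Name $resourceGroup -Location $location

# Create the Automation account itself.
New-AzAutomationAccount -ResourceGroupName $resourceGroup -Name $automationAccount -Location $location
```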

Note

This document does not address the RBAC permissions and considerations necessary to complete the creation and operation of an Azure Automation account.

Set up a Log Analytics Workspace

Create a Log Analytics workspace in the Azure portal. Once it is created, link the automation account and the workspace by browsing to the Azure Automation account and configuring one or more of the Update Management, Inventory Collection, or Change Tracking features.

Monitoring runbooks and DSC node status in a Log Analytics workspace is possible without setting up Update Management, Inventory Collection, or Change Tracking. To do so, go to Diagnostic Settings within the automation account and add a diagnostic setting that selects the desired logs and metrics and sends them to the appropriate Log Analytics workspace. Diagnostic settings can also be configured to send data to an Event Hub or a Storage Account.

Deploy a Desired Configuration to Azure Automation DSC

A configuration file is saved as a .ps1 PowerShell file and contains resources for the node to add, remove, or enforce, such as registry entries, services, files and directories, scheduled tasks, etc. DSC contains a number of built-in resources. Custom resources can be added by searching for and importing them from the Azure Automation Module Gallery, or by uploading them as a custom .zip file. These are then referenced in the configuration file with the Import-DscResource keyword, which makes the resources available to the configuration.
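
To make the shape of such a file concrete, here is a minimal sketch that uses only built-in resources. It is not taken from the example file referenced in this post: the configuration name BaselineExample, the node name Windows10, and the specific registry value, service, and folder are assumptions picked purely for illustration.

```powershell
# BaselineExample.ps1 - hypothetical configuration, for illustration only.
Configuration BaselineExample
{
    # Built-in resources (Registry, Service, File) ship in this module.
    Import-DscResource -ModuleName 'PSDesiredStateConfiguration'

    # The node name becomes the suffix of the compiled node configuration,
    # i.e. "BaselineExample.Windows10".
    Node 'Windows10'
    {
        # Enforce an HKEY Local Machine registry value (disables AutoRun on all drive types).
        Registry DisableAutoRun
        {
            Ensure    = 'Present'
            Key       = 'HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\Explorer'
            ValueName = 'NoDriveTypeAutoRun'
            ValueType = 'Dword'
            ValueData = '255'
        }

        # Ensure a service is running and starts automatically.
        Service EventLogService
        {
            Name        = 'EventLog'
            State       = 'Running'
            StartupType = 'Automatic'
        }

        # Ensure a directory exists.
        File ScriptsFolder
        {
            Ensure          = 'Present'
            Type            = 'Directory'
            DestinationPath = 'C:\ProgramData\ExampleScripts'
        }
    }
}
```

Each block names a resource type, an instance name, and the properties that describe the desired state. What happens on drift depends on the ConfigurationMode the node registers with.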

An example configuration file is available on GitHub and contains a number of configurations related to:

- HKEY Local Machine registry entries

- Services

- Audit policies

- User rights assignments

- File/Directory creations

- NTFS Permissions and inheritance

- Environment variables

- PowerShell execution policy

- Robocopy-like synchronization with an Azure Storage account

- Windows Optional Features

- Scheduled Tasks

- One of the scheduled tasks is designed to apply local group policy settings on a recurring basis to configure HKEY Current User registry keys. PowerShell DSC by itself was not designed to handle Current User registry keys. This may be an acceptable workaround. Please see the ApplyLockedLGPO.ps1 file for additional information.

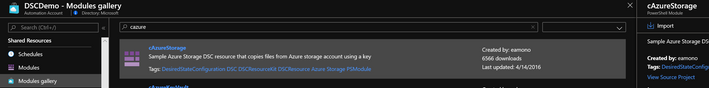

Use the example configuration file above as a template and modify it to suit the intended business purposes, removing or commenting out what’s not necessary and adding or modifying other settings as needed. The example configuration file uses several custom modules. If they are to be used on nodes, they must be imported into the Azure Automation account from the gallery; otherwise, comment them out or remove them from the configuration file. To import them, review the import module documentation, or navigate to the Modules gallery blade in the Azure Automation account, search for and select a particular module, and import it.
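
A gallery module can also be imported from PowerShell. The sketch below rests on two assumptions: the resource group and account names are placeholders, and the content link points at the PowerShell Gallery package endpoint, which is expected to resolve to the latest published version when no version is appended. Verify the module name and version before relying on it.

```powershell
# Placeholders: replace with the real resource group and Automation account.
$resourceGroup     = 'rg-dsc-example'
$automationAccount = 'aa-dsc-example'
$moduleName        = 'cAzureStorage'

# Ask Azure Automation to download and import the module package.
# Assumption: the unversioned gallery URL serves the latest version;
# append "/<version>" to the URL to pin a specific one.
New-AzAutomationModule -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -Name $moduleName `
    -ContentLinkUri "https://www.powershellgallery.com/api/v2/package/$moduleName"

# The import runs asynchronously; check its state before compiling a configuration.
Get-AzAutomationModule -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -Name $moduleName |
    Select-Object Name, Version, ProvisioningState
```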

One of the custom modules used in the example configuration file is cAzureStorage. This module takes an Azure Storage account name, key, and container name and provides robocopy-like synchronization with nodes. Setting up the storage account is outside the scope of this document. Upload any files that are required on nodes to the storage account; they are downloaded to a node only if they are new or have changed. Files are needed on nodes when, for example, Scheduled Tasks run PowerShell scripts that install software in a user context or manage HKEY Current User registry keys via LGPO. Including this module is intended to effectively replace on-premises file shares, which may not be accessible in a Zero Trust scenario.

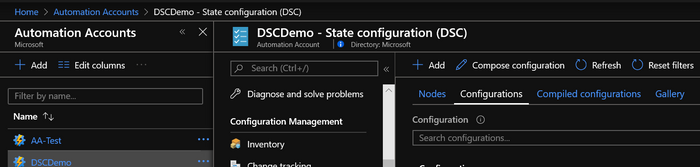

To upload the .ps1 configuration file that contains the desired configurations to be enforced on a node, open the Azure Automation blade in the Azure portal, select the appropriate automation account, and then select State Configuration (DSC). Select the Configurations tab, select +Add, choose the .ps1 configuration file, and select OK at the bottom. Any modification to the configuration file requires re-uploading and recompiling it within the automation account before nodes can use it.

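Alternatively, the configuration can be added to Azure Automation programmatically: Import-AzAutomationDscConfiguration uploads the .ps1 configuration file, while Import-AzAutomationDscNodeConfiguration uploads a node configuration (.mof) that has already been compiled locally.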
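
As a sketch of the programmatic route: to the best of my understanding, Import-AzAutomationDscConfiguration uploads a .ps1 configuration script, whereas Import-AzAutomationDscNodeConfiguration uploads an already compiled .mof file. The resource names, file paths, and the configuration name BaselineExample below are placeholders, not values from the example repository.

```powershell
# Placeholders: replace with real values.
$resourceGroup     = 'rg-dsc-example'
$automationAccount = 'aa-dsc-example'

# Option A: upload the configuration script; it still has to be compiled in Azure Automation.
Import-AzAutomationDscConfiguration -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -SourcePath 'C:\DSC\BaselineExample.ps1' `
    -Published `
    -Force

# Option B: upload a node configuration that was compiled locally into a .mof file.
Import-AzAutomationDscNodeConfiguration -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -ConfigurationName 'BaselineExample' `
    -Path 'C:\DSC\BaselineExample\Windows10.mof' `
    -Force
```

Option A corresponds to authoring, deploying, and then compiling in Azure Automation; Option B corresponds to compiling before deployment.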

Compile the configuration in Azure Automation DSC

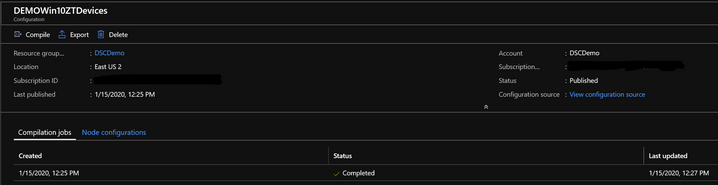

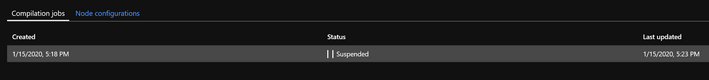

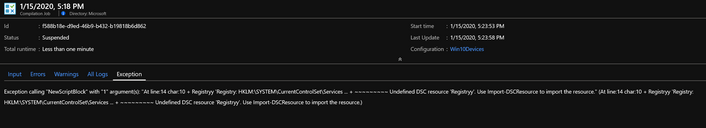

Select the newly added configuration file that appears below the search bar. On the next blade, ensure the Last published date is approximately the date and time that the configuration file was added, then select Compile, and select Yes. After a few minutes, the configuration will show as either completed or suspended.

Select suspended configurations to drill into the exception reason on the exception tab. The last successfully completed configuration becomes the one that is applied to nodes.
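
The same compilation can be started and watched from PowerShell. This is a sketch that follows the polling pattern commonly shown for Az.Automation; the resource names and the configuration name BaselineExample are placeholders.

```powershell
# Placeholders: replace with real values.
$resourceGroup     = 'rg-dsc-example'
$automationAccount = 'aa-dsc-example'

# Start a compilation job for an already uploaded configuration.
$job = Start-AzAutomationDscCompilationJob -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -ConfigurationName 'BaselineExample'

# Poll until the job ends or reports an exception.
while ($null -eq $job.EndTime -and $null -eq $job.Exception)
{
    Start-Sleep -Seconds 5
    $job = $job | Get-AzAutomationDscCompilationJob
}

# Show the final state and any output streams (useful for suspended jobs).
$job | Select-Object ConfigurationName, Status, Exception
$job | Get-AzAutomationDscCompilationJobOutput -Stream Any
```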

Register Nodes with Azure Automation DSC Using PowerShell

Another PowerShell script needs to be deployed to any devices (nodes) that need the desired configuration applied. This script can be deployed by manual means or a software deployment mechanism such as System Center Configuration Manager or Intune.

This script contains variables near its end that need to be adjusted depending on the desired automation account and the check-in times that the nodes should adhere to. Notable parameters:

- RegistrationUrl: Copied from the Keys section within the Azure Automation account

- RegistrationKey: Copied from the primary or secondary access key from the Keys section

- NodeConfigurationName: The name of the configuration combined with the name of the node from the configuration .PS1 file with a period in between. See example in script below.

- RefreshFrequencyMins: Provide a number between 30 and 10080. This specifies how often in minutes the node should obtain the configuration from the Azure Automation DSC service.

- ConfigurationModeFrequencyMins: Provide a number between 15 and 10080. This specifies how often in minutes the node ensures the local configuration is in the desired state.

- AllowModuleOverwrite: Specifies if new modules are downloaded and overwrite old ones.

- ConfigurationMode: Values are ApplyOnly (once), ApplyAndMonitor (Once w/ drift reported), or ApplyAndAutoCorrect (Applied repeatedly upon drift w/ reporting).

- ActionAfterReboot: Values are ContinueConfiguration (continue config after reboot, default) or StopConfiguration (stop config after reboot).

Additionally, this script creates a unique Managed Object Format (MOF) file, known as a meta.MOF file. MOF is the format used to describe Common Information Model (CIM) classes. Both are industry standards, which gives flexibility in working with DSC. After using the MOF file, the script cleans up after itself and deletes the file for security reasons, because it contains the registration key.
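
The core of such a registration script can be sketched as follows. This is a trimmed illustration modeled on the commonly documented meta-configuration pattern, not a copy of Run-MetaMofDeployment.ps1. The URL and key placeholders, the node configuration name BaselineExample.Windows10, and the output folder are assumptions to be replaced with real values.

```powershell
# Run in an elevated Windows PowerShell 5.1 session on the node.
[DscLocalConfigurationManager()]
Configuration DscMetaConfigs
{
    param
    (
        [Parameter(Mandatory = $true)] [string] $RegistrationUrl,
        [Parameter(Mandatory = $true)] [string] $RegistrationKey,
        [Parameter(Mandatory = $true)] [string] $NodeConfigurationName
    )

    Node 'localhost'
    {
        # Local Configuration Manager behavior (see the parameter list above).
        Settings
        {
            RefreshMode                    = 'Pull'
            RefreshFrequencyMins           = 30
            ConfigurationModeFrequencyMins = 15
            ConfigurationMode              = 'ApplyAndAutoCorrect'
            AllowModuleOverwrite           = $true
            RebootNodeIfNeeded             = $false
            ActionAfterReboot              = 'ContinueConfiguration'
        }

        # Where to pull the node configuration from.
        ConfigurationRepositoryWeb AzureAutomationStateConfiguration
        {
            ServerUrl          = $RegistrationUrl
            RegistrationKey    = $RegistrationKey
            ConfigurationNames = @($NodeConfigurationName)
        }

        # Where to pull required modules from.
        ResourceRepositoryWeb AzureAutomationStateConfiguration
        {
            ServerUrl       = $RegistrationUrl
            RegistrationKey = $RegistrationKey
        }

        # Where to send status reports.
        ReportServerWeb AzureAutomationStateConfiguration
        {
            ServerUrl       = $RegistrationUrl
            RegistrationKey = $RegistrationKey
        }
    }
}

# Generate localhost.meta.mof in a temporary folder (placeholder values shown).
$outputPath = Join-Path $env:TEMP 'DscMetaConfigs'
DscMetaConfigs -RegistrationUrl '<URL from the Keys section>' `
    -RegistrationKey '<primary or secondary key>' `
    -NodeConfigurationName 'BaselineExample.Windows10' `
    -OutputPath $outputPath

# Apply the meta-configuration, which registers the node with the pull service.
Set-DscLocalConfigurationManager -Path $outputPath -Verbose

# Remove the generated file, since it contains the registration key in clear text.
Remove-Item -Path $outputPath -Recurse -Force
```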

An example script for generating the meta.MOF file and registering with Azure Automation DSC is located at Run-MetaMofDeployment.ps1, and is a slightly modified version of the official one. No other modifications to the script are necessary.

Monitor Status

The status of the nodes that have registered with the Azure Automation DSC service is shown on the Nodes tab.

Node statuses:

- Failed: An error occurred applying one or more configurations on a node.

- Not compliant: Drift has occurred on a node and should be reviewed more closely if systemic.

- Unresponsive: Node has not checked in for more than 24 hours.

- Pending: Node has a new configuration to apply and the pull server is awaiting node check in.

- In progress: Node is applying configuration and the pull server is awaiting status.

- Compliant: Node has a valid configuration and no drift is occurring presently.
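
Node status can also be queried from PowerShell, which is convenient for periodic reporting. A brief sketch, assuming placeholder resource names:

```powershell
# Placeholders: replace with real values.
$resourceGroup     = 'rg-dsc-example'
$automationAccount = 'aa-dsc-example'

# List every registered node with its current status and last check-in time.
Get-AzAutomationDscNode -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount |
    Sort-Object Status |
    Format-Table Name, Status, LastSeen, NodeConfigurationName

# Narrow the list to nodes that need attention.
Get-AzAutomationDscNode -ResourceGroupName $resourceGroup `
    -AutomationAccountName $automationAccount `
    -Status NotCompliant
```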

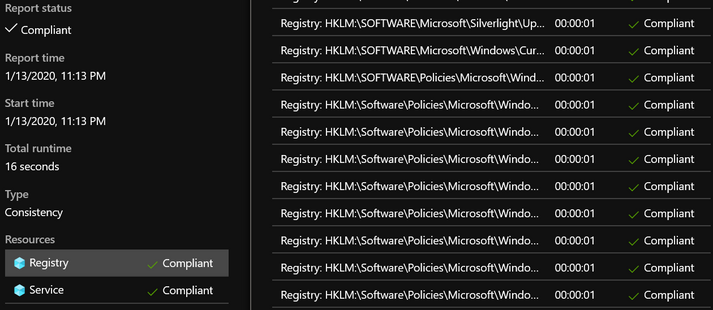

Select a node to get its consistency history. Select a consistency item for detailed reporting status, any errors, and the compliance of the resources. Select a compliant or non-compliant resource to view more granular resource reporting.

Troubleshooting

While new causes surface periodically, most troubleshooting happens while testing and first getting nodes to apply their configurations. When a configuration does not apply cleanly, the node ends up in either a non-compliant or a failed status. Non-compliance generally indicates temporary drift on the node, which is corrected automatically if the ConfigurationMode is set to ApplyAndAutoCorrect. In both cases, however, it is necessary to have a node available to dig deeper.
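
A reasonable first step on such a node is to ask the Local Configuration Manager what it thinks is going on. The built-in DSC cmdlets below are a starting point rather than a complete procedure.

```powershell
# Run in an elevated Windows PowerShell 5.1 session on the node.

# Confirm the node is registered in pull mode with the expected settings.
Get-DscLocalConfigurationManager |
    Select-Object RefreshMode, ConfigurationMode, RefreshFrequencyMins, ConfigurationModeFrequencyMins, LCMState

# Review the outcome of the most recent configuration run.
Get-DscConfigurationStatus |
    Select-Object Status, StartDate, Type, Mode, ResourcesNotInDesiredState

# Check the current state against the applied configuration, resource by resource.
Test-DscConfiguration -Detailed

# Force an immediate pull and apply, with verbose output to spot the failing resource.
Update-DscConfiguration -Wait -Verbose
```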

On the testing/problem node, detailed PowerShell logging is beneficial. This can be enabled via the Local Group Policy Editor by creating a new local GPO and browsing to Computer Configuration > Policies > Administrative Templates > Windows Components > Windows PowerShell. The two policies to enable are Turn on PowerShell Script Block Logging and Turn on Module Logging. The latter policy can log specific modules, or all modules by using * in both fields. Alternatively, the same settings can be enabled through the registry on the node(s).
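
The registry route can be sketched as follows. These are the policy registry locations that the two Group Policy settings write to; the commands are an illustration rather than the original script from this post, and they log all modules by using * as both the value name and the value data.

```powershell
# Run in an elevated PowerShell session on the node.
$base = 'HKLM\SOFTWARE\Policies\Microsoft\Windows\PowerShell'

# Turn on PowerShell Script Block Logging.
reg.exe add "$base\ScriptBlockLogging" /v EnableScriptBlockLogging /t REG_DWORD /d 1 /f

# Turn on Module Logging.
reg.exe add "$base\ModuleLogging" /v EnableModuleLogging /t REG_DWORD /d 1 /f

# Log every module: a string value named * with data *.
reg.exe add "$base\ModuleLogging\ModuleNames" /v '*' /t REG_SZ /d '*' /f
```

Consider removing these values once troubleshooting is finished, since both settings can generate a large volume of events.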

Module logging streams more detailed events to the Applications and Services Logs > Windows PowerShell section in the Event Viewer on the node. Events with ID 800 generally contain valuable data. Once the offending resource appears to have been found, look at a few events preceding and following that 800 event.

Script block logging streams more detailed events to the Applications and Services Logs > Microsoft > Windows > PowerShell > Operational section in the Event Viewer on the node.
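
Both logs can also be read from PowerShell instead of the Event Viewer. In this sketch, event ID 4104 is the ID under which script block logging normally records script blocks, and the event count of 20 is an arbitrary choice.

```powershell
# Run in an elevated PowerShell session on the node.

# Module logging: pipeline execution details (event ID 800) in the classic log.
Get-WinEvent -FilterHashtable @{ LogName = 'Windows PowerShell'; Id = 800 } `
    -MaxEvents 20 -ErrorAction SilentlyContinue |
    Format-List TimeCreated, Id, Message

# Script block logging: recorded script blocks (event ID 4104) in the operational log.
Get-WinEvent -FilterHashtable @{ LogName = 'Microsoft-Windows-PowerShell/Operational'; Id = 4104 } `
    -MaxEvents 20 -ErrorAction SilentlyContinue |
    Format-List TimeCreated, Id, Message
```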

Conclusion

Thanks for reading! Hopefully this helps in maintaining security and enforcement on the Zero Trust journey.