This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

When developing Service Fabric services, you might have noticed that you can specify two parameters for reliable stateful services: "TargetReplicaSetSize" and "MinReplicaSetSize".

The official documentation explains these two attributes in a way that seems very straightforward:

MinReplicaSetSize defines the minimum number of replicas that Service Fabric will keep in its view of the replica set for a given partition. For example, if the TargetReplicaSetSize is set to five, then normally (without failures) there will be five replicas in the view of the replica set. However, this number will decrease during failures. For example, if the TargetReplicaSetSize is five and the MinReplicaSetSize is three, then three concurrent failures will leave three replicas in the replica set's view (two up, one down).
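The shrinking rule in this quote fits in a few lines of Python. This is a minimal sketch built on simplifying assumptions, not Service Fabric's actual reconfiguration logic. It assumes failures happen one at a time, each failure is fully processed before the next one, and no spare node is available to host a replacement replica. The function name `view_after_failures` is hypothetical.

```python
from __future__ import annotations


def view_after_failures(target: int, minimum: int, failures: int) -> tuple[int, int, int]:
    """Return (replicas in view, replicas up, replicas down) for one partition.

    Hypothetical, simplified model: failures arrive one at a time, each is
    fully handled before the next, and no spare node can host a new replica.
    """
    if not 1 <= minimum <= target:
        raise ValueError("expected 1 <= minimum <= target")
    if not 0 <= failures <= target:
        raise ValueError("expected 0 <= failures <= target")

    in_view, down = target, 0
    for _ in range(failures):
        if in_view > minimum:
            # Above the minimum: the failed replica is dropped from the view.
            in_view -= 1
        else:
            # At the minimum: the view cannot shrink, so the replica stays as "down".
            down += 1
    return in_view, in_view - down, down


# The example from the documentation: Target=5, Min=3, three failures.
print(view_after_failures(5, 3, 3))  # (3, 2, 1): three in view, two up, one down
```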

However, developers often ask how the two attributes work together and what the recommended way to set them is.

Let’s walk through some experiments.

Scenario 1. What happens when TargetReplicaSetSize is different from MinReplicaSetSize?

We use a five-node cluster and deploy a stateful service into it with the following configuration:

TargetReplicaSetSize=5

MinReplicaSetSize=3

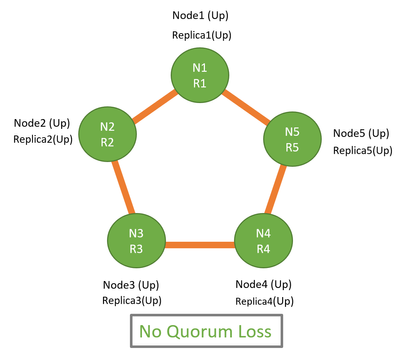

This means that at steady state the service has 5 replicas, one on each node. For simplicity, we assume this stateful service has only one partition. The view of that partition's replicas is shown below.

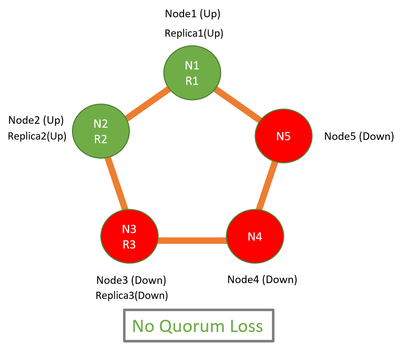

Now suppose nodes 5, 4, and 3 go down, in that order. The number of replicas in the view is reduced to 3, because MinReplicaSetSize is set to 3. When failures happen, Service Fabric shrinks the replica set, but never below MinReplicaSetSize. The replicas on nodes 5 and 4 are removed from the view. When node 3 fails, the set is already at the minimum, so the replica on node 3 stays in the view as down. As a result, the replicas on nodes 1, 2, and 3 remain in Service Fabric's view.

At this point, 2 (out of 3) replicas in the view are still up. The partition therefore still has a majority quorum, and the service remains available.

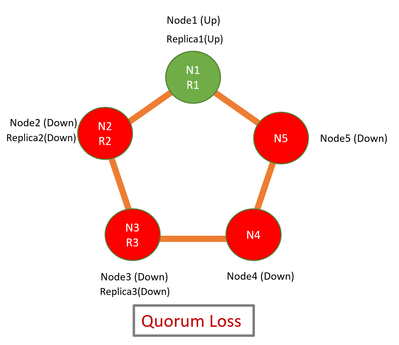
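To follow this walk-through at the node level, the sketch below replays the failures of nodes 5, 4, and 3. It then checks whether a majority of the replicas in the view is still up. The model assumes one replica per node, failures handled one at a time, and no spare nodes. `apply_failures` and `has_quorum` are hypothetical helpers, and quorum is modeled as a strict majority of the replicas in the view.

```python
from __future__ import annotations


def has_quorum(view: list[int], down: set[int]) -> bool:
    """True if a strict majority of the replicas in the view is up."""
    up = [node for node in view if node not in down]
    return len(up) >= len(view) // 2 + 1


def apply_failures(view: list[int], minimum: int, failed: list[int]) -> tuple[list[int], set[int]]:
    """Fail nodes in order; shrink the view while it is above `minimum`."""
    view, down = list(view), set()
    for node in failed:
        if node not in view:
            continue  # this node hosts no replica in the partition's view
        if len(view) > minimum:
            view.remove(node)  # replica dropped from the view
        else:
            down.add(node)  # view is at the minimum: replica stays, marked down
    return view, down


# Scenario 1: one replica per node on nodes 1..5, MinReplicaSetSize=3.
view, down = apply_failures([1, 2, 3, 4, 5], minimum=3, failed=[5, 4, 3])
print(view, down, has_quorum(view, down))  # [1, 2, 3] {3} True
```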

Now suppose node 2 also goes down. The partition is now in quorum loss, because a majority of the replicas in its view (2 out of 3) are down. At this point the service is unavailable: additional writes to the partition are prevented until a quorum of replicas is restored.

Now suppose nodes 4 and 5 come back up. Three nodes (out of 5) are up, but the service still does not recover, because only 1 of the 3 replicas in the view (on node 1) is up. Nodes 4 and 5 are no longer in the replica set's view, so it doesn’t matter whether they come up or not. Node 2 or node 3 must come back for the partition to recover from quorum loss.

Alternatively, suppose nodes 3 and 4 come back up instead. Again 3 nodes (out of 5) are up. This time the service recovers, because 2 of the 3 replicas in the view (on nodes 1 and 3) are up, which achieves quorum.

That’s why, when TargetReplicaSetSize and MinReplicaSetSize differ, the order in which nodes recover becomes very important.
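The recovery-order effect can be checked with a small sketch. It starts from the state after node 2 also failed: the view holds nodes 1, 2, and 3, and only node 1 is up. It then tests which sets of returning nodes restore a majority. `has_quorum` is a hypothetical helper. As described above, the model assumes a returning node only helps if its replica is still in the view.

```python
from __future__ import annotations


def has_quorum(view: list[int], down: set[int]) -> bool:
    """True if a strict majority of the replicas in the view is up."""
    up = [node for node in view if node not in down]
    return len(up) >= len(view) // 2 + 1


# State after nodes 5, 4, 3 and then 2 failed (Scenario 1): the view kept
# the replicas on nodes 1, 2 and 3, and only node 1 is still up.
view = [1, 2, 3]
down = {2, 3}

candidates = [{4, 5}, {3, 4}, {2, 5}]
outcomes = {}
for returned in candidates:
    # Nodes 4 and 5 host no replica in the view, so they never leave `down` smaller.
    still_down = down - returned
    outcomes[tuple(sorted(returned))] = has_quorum(view, still_down)

for nodes, ok in outcomes.items():
    print(nodes, "->", "recovered" if ok else "still in quorum loss")
```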

Scenario 2. What happens when TargetReplicaSetSize equals MinReplicaSetSize?

Now suppose TargetReplicaSetSize equals MinReplicaSetSize, for example with both set to 5. The stateful service partition then always keeps five replicas in its view. The replica set never shrinks, even when replicas fail.

TargetReplicaSetSize=5

MinReplicaSetSize=5

If nodes 5, 4, and 3 go down, 3 of the 5 replicas in the partition are down, so the partition immediately goes into quorum loss. (In Scenario 1, the same three failures left the service available.)

In this case, bringing back any one of the three failed nodes restores quorum (3 out of 5 replicas up and running). Recovery is much easier and quicker than in Scenario 1, because it doesn’t matter which of the failed nodes comes back.
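Here is a similar sketch for Scenario 2, using a hypothetical `has_quorum` helper that checks for a strict majority of the replicas in the view. The view never shrinks below five replicas, so any single failed node that comes back is enough to restore quorum.

```python
from __future__ import annotations


def has_quorum(view: list[int], down: set[int]) -> bool:
    """True if a strict majority of the replicas in the view is up."""
    up = [node for node in view if node not in down]
    return len(up) >= len(view) // 2 + 1


# Scenario 2: Target = Min = 5, so all five replicas stay in the view.
view = [1, 2, 3, 4, 5]
down = {5, 4, 3}
print("after failures:", has_quorum(view, down))  # False: only 2 of 5 up

# Bringing back any single failed node gives a 3-of-5 quorum.
for node in sorted(down):
    print(f"node {node} back:", has_quorum(view, down - {node}))
```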

Conclusion

It’s a tradeoff between fault tolerance and quick recovery.

For quick recovery

- We recommend setting TargetReplicaSetSize = MinReplicaSetSize.

For fault tolerance

- We recommend setting MinReplicaSetSize lower than TargetReplicaSetSize, according to your needs. This is more fault tolerant, because the partition can lose more replicas before it goes into quorum loss. However, recovery is much more difficult, because specific nodes must come back.

Basically, we suggest favoring easier recovery, since you usually want to recover as fast as possible.
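To put rough numbers on this tradeoff, the sketch below counts how many one-at-a-time failures a partition with TargetReplicaSetSize=5 survives before quorum loss. It does this for the two MinReplicaSetSize values used in the scenarios. It is a simplified model (one failure at a time, no spare nodes, quorum as a strict majority of the view), and `tolerated_failures` is a hypothetical name. The extra tolerance of the lower value comes with the recovery cost described above: once quorum is lost, only the nodes still in the view can help.

```python
def tolerated_failures(target: int, minimum: int) -> int:
    """Largest number of one-at-a-time failures that still leaves a quorum."""
    for failures in range(1, target + 1):
        # The view shrinks by one per failure until it reaches `minimum`.
        in_view = max(minimum, target - failures)
        # Failures beyond the shrinkable ones stay in the view as "down".
        down = failures - (target - in_view)
        if in_view - down < in_view // 2 + 1:
            return failures - 1
    return target


for minimum in (5, 3):
    print(f"Target=5, Min={minimum}: survives {tolerated_failures(5, minimum)} failures")
```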

Additional notes

System Stateful Services

You can also set TargetReplicaSetSize and MinReplicaSetSize for system stateful services by changing the cluster configuration. For example, for EventStoreService:

```json
"FabricSettings": [
  {
    "Name": "EventStoreService",
    "Parameters": [
      {
        "Name": "TargetReplicaSetSize",
        "Value": "3"
      },
      {
        "Name": "MinReplicaSetSize",
        "Value": "1"
      }
    ]
  }
]
```

See the official documentation here: https://docs.microsoft.com/en-us/azure/service-fabric/service-fabric-cluster-fabric-settings#eventstoreservice.
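If you generate cluster configurations from a script, the same section can be built programmatically. This is a minimal Python sketch, and `replica_settings_section` is a hypothetical helper. Its check that MinReplicaSetSize does not exceed TargetReplicaSetSize is a sanity check assumed here, not a rule quoted from the linked documentation.

```python
import json


def replica_settings_section(service: str, target: int, minimum: int) -> dict:
    """Build one FabricSettings entry for a system service (hypothetical helper)."""
    if minimum > target:
        # Assumed sanity check: the minimum view size should not exceed the target.
        raise ValueError("MinReplicaSetSize should not exceed TargetReplicaSetSize")
    return {
        "Name": service,
        "Parameters": [
            # Values are written as strings, matching the fragment above.
            {"Name": "TargetReplicaSetSize", "Value": str(target)},
            {"Name": "MinReplicaSetSize", "Value": str(minimum)},
        ],
    }


fabric_settings = [replica_settings_section("EventStoreService", target=3, minimum=1)]
print(json.dumps({"FabricSettings": fabric_settings}, indent=2))
```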

How Service Fabric handles stateful service failures

Below is some background on how a Service Fabric cluster handles stateful service failures:

In a stateful service, incoming data is replicated between replicas (the primary and any active secondaries). If a majority of the replicas receive the data, data is considered quorum committed. (For five replicas, three will be a quorum.) This means that at any point, there will be at least a quorum of replicas with the latest data. If replicas fail (say two out of five), we can use the quorum value to calculate if we can recover. (Because the remaining three out of five replicas are still up, it's guaranteed that at least one replica will have complete data.)
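The guarantee in the last sentence is a pigeonhole argument. Any 3 replicas that acknowledged a write and any 3 replicas that are still up must share at least one replica, because 3 + 3 > 5. The Python sketch below checks this exhaustively for five replicas. The variable names are illustrative.

```python
from itertools import combinations

replicas = range(1, 6)           # five replicas, numbered 1..5
quorum = len(replicas) // 2 + 1  # majority of five is 3

# For every quorum that may have acknowledged a write, and every quorum of
# replicas that is still up, check that the two sets share a replica.
always_overlap = all(
    set(committed) & set(survivors)
    for committed in combinations(replicas, quorum)
    for survivors in combinations(replicas, quorum)
)
print(quorum, always_overlap)  # 3 True
```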

When a quorum of replicas fail, the partition is declared to be in a quorum loss state. Say a partition has five replicas, which means that at least three are guaranteed to have complete data. If a quorum (three out of five) of replicas fail, Service Fabric can't determine if the remaining replicas (two out of five) have enough data to restore the partition. In cases where Service Fabric detects quorum loss, its default behavior is to prevent additional writes to the partition, declare quorum loss, and wait for a quorum of replicas to be restored.
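Conversely, with only two survivors there is always some 3-replica quorum that excludes both of them. A committed write may therefore exist only on failed replicas. Here is a short exhaustive check for five replicas, with replicas 1 and 2 as the (illustrative) survivors:

```python
from itertools import combinations

replicas = range(1, 6)           # five replicas, numbered 1..5
quorum = len(replicas) // 2 + 1  # majority of five is 3

# With only two survivors, list every committed quorum that excludes both.
survivors = {1, 2}
missed = [c for c in combinations(replicas, quorum) if not set(c) & survivors]
print(missed)  # [(3, 4, 5)]
```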

More details can be found in the official documentation: https://docs.microsoft.com/en-us/azure/service-fabric/service-fabric-disaster-recovery#stateful-services