This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

From time to time, a Service Fabric cluster may run into issues where the reported error or warning message indicates that one or more specific nodes do not have enough disk space. This can happen for different reasons. This blog covers the common causes of this issue and their solutions.

Possible root causes:

There are many possible root causes for the not-enough-disk-space issue. In this blog, we'll mainly talk about the following four:

- Diagnostic log files (.trace and .etl) consume too much space

- The paging file consumes too much space

- Too many application packages exist on the node

- Too many container images exist (only for clusters that use containers)

To identify which one matches your scenario, check the following descriptions:

- For the log files, we can RDP into the node that reports not enough disk space and check the size of the folder D:\SvcFab\Log. If this folder is bigger than expected, we can reconfigure the cluster to lower the size limit of the diagnostic log files.

- The paging file is a built-in feature of Windows. For a detailed introduction, please check this document. To verify whether we have this issue, we can RDP into the node and look for the hidden file D:\pagefile.sys. If it exists, the node is using part of disk D as an extension of RAM. We can consider configuring the paging file to be saved on disk C instead of disk D.

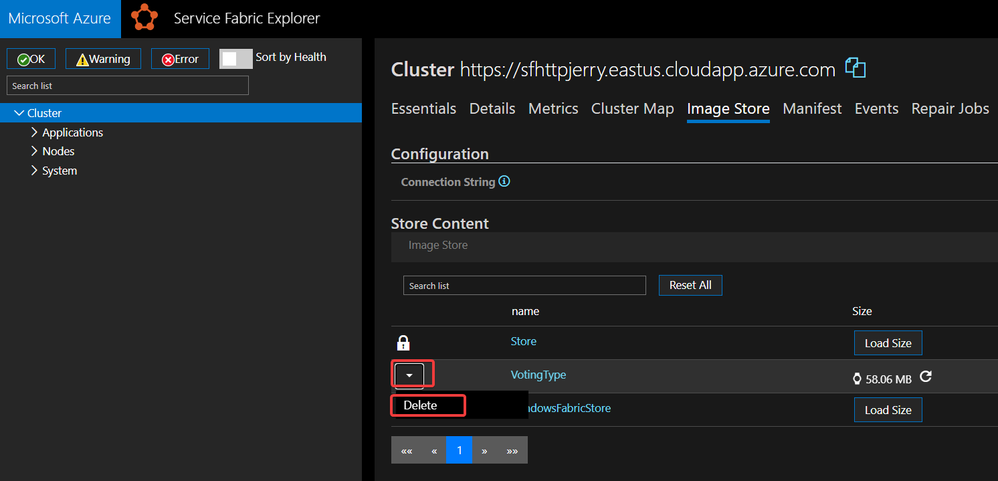

- Application packages that pile up on a node can consume a lot of disk space, and we can verify this in Service Fabric Explorer (SFX). After opening SFX from the Service Fabric overview page in the Azure Portal, go to the Image Store page of the cluster and check whether there is any record with a name other than Store and WindowsFabricStore. If so, click the Load Size button to check its size.

- Too many images can only be the cause when our Service Fabric cluster runs with the container feature. We can RDP into the node and use the command docker image ls to list all images on this node. Images that were used before but never removed or pruned keep consuming disk space, and image files are normally huge. For example, the Windows Server Core image is more than 10 GB.
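The node-level checks above can also be run from a PowerShell window on the node. This is a minimal sketch, assuming the D:\SvcFab\Log location mentioned above and, for the last two commands, that Docker is installed on the node. `Win32_PageFileUsage` is the standard Windows CIM class that reports active paging files.

```powershell
# Size of the Service Fabric diagnostic log folder, in GB.
$logPath = 'D:\SvcFab\Log'
$bytes = (Get-ChildItem -Path $logPath -Recurse -File -Force -ErrorAction SilentlyContinue |
    Measure-Object -Property Length -Sum).Sum
'{0}: {1:N2} GB' -f $logPath, ($bytes / 1GB)

# Location and size (in MB) of every active paging file on this node.
Get-CimInstance -ClassName Win32_PageFileUsage |
    Select-Object Name, AllocatedBaseSize, CurrentUsage

# Container nodes only: list local images and summarize Docker disk usage.
docker image ls
docker system df
```

If the paging file check lists D:\pagefile.sys, the second root cause applies to this node.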

Possible solutions:

Now let's talk about the solutions for the four kinds of issue above.

1. To reconfigure the size limit of the diagnostic log files, we need to open a PowerShell command window with the Az module installed. Please refer to the official document for how to install it. After logging in successfully, we can use the following command to set the expected size limit.

Please remember to replace the resource group name, Service Fabric cluster name and size limit with your own values before running the command.
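A minimal sketch of such a command, assuming the `Set-AzServiceFabricSetting` cmdlet from the Az.ServiceFabric module and the `MaxDiskQuotaInMB` parameter of the `Diagnostics` settings section. The resource group and cluster names are placeholders, not values from a real environment.

```powershell
# Placeholders: replace with your own resource group and cluster name.
$resourceGroup = 'my-resource-group'
$clusterName   = 'my-sf-cluster'

# Sign in to Azure (interactive prompt).
Connect-AzAccount

# Cap the diagnostic log files at 25600 MB (25 GB).
Set-AzServiceFabricSetting -ResourceGroupName $resourceGroup `
    -Name $clusterName `
    -Section 'Diagnostics' `
    -Parameter 'MaxDiskQuotaInMB' `
    -Value '25600'
```

The cmdlet triggers a cluster configuration upgrade, so it can take a while to return.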

Once this command has run successfully, it may not take effect immediately. The Service Fabric cluster scans the size of the diagnostic logs periodically, so we need to wait until the next scan is triggered. If the diagnostic log files are then bigger than the configured limit (25600 MB = 25 GB in my example), the cluster will automatically delete some log files to release disk space.

2. To change the location of the paging file, we can follow these steps:

- Check the status of our Service Fabric cluster in Service Fabric Explorer to make sure every node, service and application is healthy.

- RDP into the VMSS node

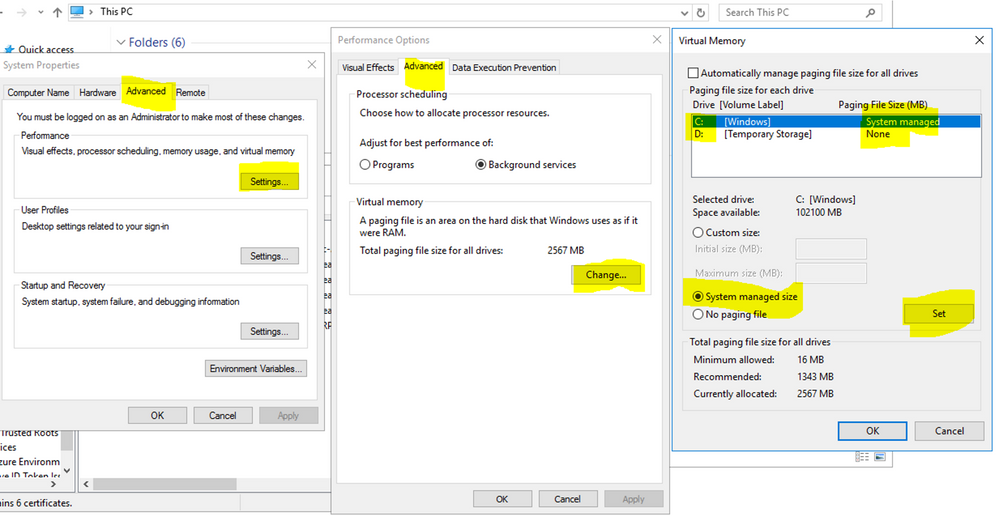

- In the search bar, type "Advanced System Settings". Then choose Advanced -> Advanced -> Change. Next, set the D drive to "No paging file" and the C drive to "System managed size".

- This change requires a reboot of the VMSS node to take effect. Please reboot the node and wait until everything is back to a healthy status in Service Fabric Explorer before connecting to the next node over RDP.

- Repeat the above steps for all nodes.

3. Cleaning up application packages is easy to do in SFX. Open SFX and go to the same Image Store page that we used to check for this root cause. On the left side, there is a menu to delete the unneeded package.

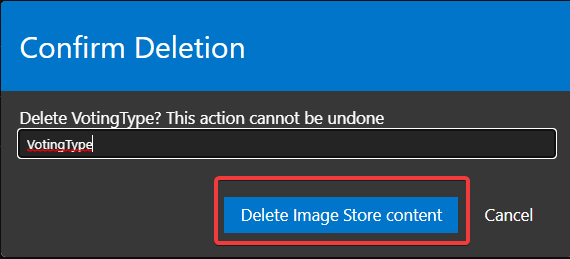

After we type the name in the confirmation window and select Delete Image Store content, the cluster will automatically delete the unneeded application package from every node.
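The same cleanup can be scripted instead of clicking through SFX. This is a sketch of an alternative route, not the SFX procedure itself. It assumes an existing connection made with `Connect-ServiceFabricCluster` and the `fabric:ImageStore` connection string used by clusters with the native image store. `MyAppPackage` is a hypothetical package name.

```powershell
# Assumes Connect-ServiceFabricCluster has already been run in this session.
$imageStore = 'fabric:ImageStore'

# List the top-level content of the image store.
Get-ServiceFabricImageStoreContent -ImageStoreConnectionString $imageStore

# Delete one uploaded package; 'MyAppPackage' is a hypothetical name.
Remove-ServiceFabricApplicationPackage -ApplicationPackagePathInImageStore 'MyAppPackage' `
    -ImageStoreConnectionString $imageStore
```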

4. For the issue caused by too many images, we can configure the cluster to automatically delete unused images. The detailed configuration can be found in this document, and the way to update the cluster configuration is as follows:

a. Visit Azure Resource Explorer in Read/Write mode, log in and find the Service Fabric cluster.

b. Click the Edit button and modify the JSON cluster configuration as needed. For this solution, that means adding some configuration to the fabricSettings part.

c. Send out the request to save the new configuration by clicking the green PUT button and wait until the provisioning status of this cluster becomes Succeeded.
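As an illustration of step b, the following sketch builds the JSON entry that could be added to fabricSettings. It assumes the `PruneContainerImages` and `ContainerImagesToSkip` parameters of the `Hosting` settings section. The image name in the skip list is only an example value.

```powershell
# Build the Hosting section to add under "fabricSettings".
$hosting = [ordered]@{
    name       = 'Hosting'
    parameters = @(
        # Let Service Fabric remove images that no application uses.
        [ordered]@{ name = 'PruneContainerImages'; value = 'True' }
        # Images listed here are never pruned; '|' separates entries.
        [ordered]@{ name = 'ContainerImagesToSkip'; value = 'mcr.microsoft.com/windows/servercore' }
    )
}

# Render the entry as JSON, ready to paste into Resource Explorer.
$hostingJson = $hosting | ConvertTo-Json -Depth 3
$hostingJson
```

Keeping base images in the skip list avoids downloading them again after every prune.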

To make this solution work, there is one more thing we need to do: unregister all unnecessary and unused applications. This can also be done with the command documented here. Since the parameters ApplicationTypeName and ApplicationTypeVersion are both required, each run of the command unregisters only one version of one application type. Because you may have many application types with many versions, here are two possible ways:

- If there are versions of some application types that you want to keep registered for future use in this cluster, unregister only the unnecessary versions by running the command Unregister-ServiceFabricApplicationType -ApplicationTypeName VotingType -ApplicationTypeVersion 1.0.1 (remember to replace the ApplicationTypeName and ApplicationTypeVersion, and use step 2.e to connect to the cluster first).

- If there isn't any version of any application type that you specifically want to keep, which means we only need to keep the application types used by running applications, then we can use step 2.e to connect to the cluster and run the following script:
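A minimal sketch of such a script, assuming the session is already connected to the cluster. `Select-UnusedApplicationType` is a hypothetical helper introduced here for illustration. It compares the registered types against the types of the running applications by name and version.

```powershell
# Returns the registered types that no running application uses.
function Select-UnusedApplicationType {
    param(
        [object[]]$RegisteredTypes,
        [object[]]$RunningApplications
    )
    # Build "name|version" keys for every type that is currently in use.
    $inUse = @($RunningApplications | ForEach-Object {
        '{0}|{1}' -f $_.ApplicationTypeName, $_.ApplicationTypeVersion
    })
    $RegisteredTypes | Where-Object {
        ('{0}|{1}' -f $_.ApplicationTypeName, $_.ApplicationTypeVersion) -notin $inUse
    }
}

# Query the cluster and pick the type versions that nothing runs on.
$unused = Select-UnusedApplicationType `
    -RegisteredTypes (Get-ServiceFabricApplicationType) `
    -RunningApplications (Get-ServiceFabricApplication)

# Unregister them one version at a time.
$unused | ForEach-Object {
    Unregister-ServiceFabricApplicationType `
        -ApplicationTypeName $_.ApplicationTypeName `
        -ApplicationTypeVersion $_.ApplicationTypeVersion -Force
}
```

It is worth printing `$unused` and reviewing it before running the last loop, since unregistering cannot be undone without uploading and registering the package again.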

In addition to the above four possible causes and solutions, there are three more possible solutions for the "Not enough disk space" issue. They are explained below.

Scale out VMSS:

Sometimes scaling out, which means increasing the number of nodes in the Service Fabric cluster, also helps to mitigate the disk full issue. This operation not only relieves CPU and memory usage, but also rebalances the distribution of services among nodes, which improves disk usage. With Silver or higher durability, we can scale out by increasing the instance count of the VMSS directly.
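Scaling out can be sketched as follows, assuming the Az.Compute cmdlets `Get-AzVmss` and `Update-AzVmss`. The resource group and scale set names are placeholders.

```powershell
# Placeholders: replace with your resource group and scale set (node type) name.
$resourceGroup = 'my-resource-group'
$vmssName      = 'nt1vm'

# Read the current scale set model, add one instance, and push the change.
$vmss = Get-AzVmss -ResourceGroupName $resourceGroup -VMScaleSetName $vmssName
$vmss.Sku.Capacity += 1
Update-AzVmss -ResourceGroupName $resourceGroup -VMScaleSetName $vmssName -VirtualMachineScaleSet $vmss
```

After the new instance joins, it should appear as an additional node in SFX.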

Scale up VMSS:

This one is easy to understand. Since the issue is a full disk, we can simply change the VM SKU to a bigger size to get more disk space. But please check all the above solutions first to make sure that everything is reasonable and that we really do need more disk space to handle more data. For example, if our application has stateful services and the disk fills up because those services save too much data, then we should consider improving the code logic first rather than scaling up the VMSS. Otherwise, even with a bigger VM SKU, the issue will reproduce sooner or later.

To scale up the VMSS, there are two ways:

- We can use the command Update-AzVmss to update the state of a VMSS. This is the simple way, but it is not recommended because there is a small risk of data loss or instances going down. With Silver or higher durability, the risk is mitigated because those tiers support repair tasks.

- The second way to upgrade the size of the SF primary node type is to add a new node type with the bigger SKU. This option is much more difficult than the first one, but it is officially recommended, and you can check the document for more information.
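For reference, the first (not recommended) way might look like the sketch below. It assumes the same Az.Compute cmdlets as before. The names and the target size `Standard_D4s_v3` are hypothetical values for illustration only.

```powershell
# Placeholders: replace with your resource group and scale set (node type) name.
$resourceGroup = 'my-resource-group'
$vmssName      = 'nt1vm'

# Change the VM size in the scale set model and push the change.
$vmss = Get-AzVmss -ResourceGroupName $resourceGroup -VMScaleSetName $vmssName
$vmss.Sku.Name = 'Standard_D4s_v3'   # hypothetical target size
Update-AzVmss -ResourceGroupName $resourceGroup -VMScaleSetName $vmssName -VirtualMachineScaleSet $vmss
```

Because instances restart when they are resized, the data loss caveat from the first bullet applies to this sketch.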

Reconfigure the ReplicatorLog size:

Please be aware that the ReplicatorLog folder does not hold log files in the usual sense. It holds important data of both the Service Fabric cluster and the applications, so deleting this folder may cause data loss. The size of this folder is fixed to the configured size, 8 GB by default, and stays the same no matter how much data is saved.

It's NOT recommended to modify this setting. It carries a risk of data loss, so only do it if absolutely required.

To change the ReplicatorLog size, the key point is to add a customized ktlLogger setting to the Service Fabric cluster. To do that, we need to:

a. Visit Azure Resource Explorer in Read/Write mode, log in and find the Service Fabric cluster.

b. Add the ktlLogger setting to the fabricSettings part. The expected entry looks like the following:

KTlogger setting in resource explorer
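A sketch of what that fabricSettings entry might look like, built here as JSON from PowerShell. It assumes the `SharedLogSizeInMB` parameter of the `KtlLogger` settings section. The value 4096 is only an example that halves the 8 GB default.

```powershell
# Build the KtlLogger section to add under "fabricSettings".
$ktlLogger = [ordered]@{
    name       = 'KtlLogger'
    parameters = @(
        # Example value: 4096 MB instead of the 8192 MB default.
        [ordered]@{ name = 'SharedLogSizeInMB'; value = '4096' }
    )
}

# Render the entry as JSON, ready to paste into Resource Explorer.
$ktlLoggerJson = $ktlLogger | ConvertTo-Json -Depth 3
$ktlLoggerJson
```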

c. Send out the request to save the new configuration by clicking the green PUT button and wait until the provisioning status of this cluster becomes Succeeded.

d. Visit SFX and check the status to make sure everything is in healthy state.

e. Open a PowerShell command window on a computer where the cluster certificate is installed. If the Service Fabric module is not installed yet, please refer to our document to install it first. Then run the following command to connect to the Service Fabric cluster. The thumbprint is that of the cluster certificate, and remember to replace the cluster name with the correct URL.

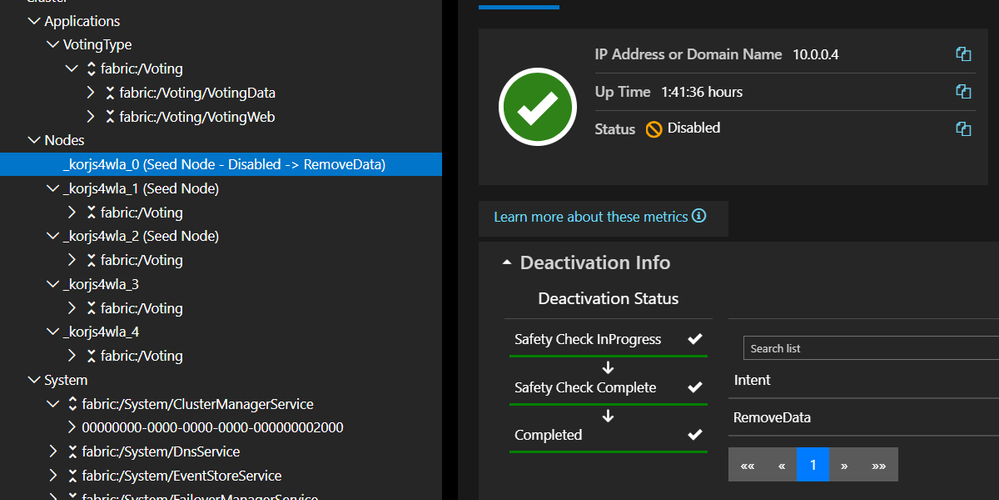

f. Run the command to disable one node of the Service Fabric cluster (_nodetype1_0 in the example code).

g. Monitor in SFX until the node from the last command has the status Disabled.

h. RDP into this node and manually delete the D:\SvcFab\ReplicatorLog folder. Attention! This operation removes everything in ReplicatorLog. Please double-check whether any content there is still needed before deleting it.

i. Use the following command to enable the disabled node. Monitor until the node has the status Up.

j. Wait until everything is healthy in SFX, then repeat step f to step i on every node. After that, the ReplicatorLog folder on each node will have the new customized size.
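Steps e through i can be sketched for a single node as follows. The endpoint and thumbprint are placeholders. The `RemoveData` intent is an assumption, chosen because the data on the node is deleted in step h. The polling loops stand in for watching SFX by hand.

```powershell
# Placeholders: cluster endpoint, certificate thumbprint, and node name.
$endpoint   = 'mycluster.westus.cloudapp.azure.com:19000'
$thumbprint = '<cluster certificate thumbprint>'
$nodeName   = '_nodetype1_0'

# Step e: connect using the cluster certificate from the current user's store.
Connect-ServiceFabricCluster -ConnectionEndpoint $endpoint `
    -X509Credential `
    -ServerCertThumbprint $thumbprint `
    -FindType FindByThumbprint -FindValue $thumbprint `
    -StoreLocation CurrentUser -StoreName My

# Steps f and g: disable the node and wait until it reports Disabled.
Disable-ServiceFabricNode -NodeName $nodeName -Intent RemoveData -Force
while ((Get-ServiceFabricNode -NodeName $nodeName).NodeStatus -ne 'Disabled') {
    Start-Sleep -Seconds 15
}

# Step h is manual: pause here until the folder has been deleted over RDP.
Read-Host 'Delete D:\SvcFab\ReplicatorLog on the node, then press Enter'

# Step i: re-enable the node and wait until it is Up again.
Enable-ServiceFabricNode -NodeName $nodeName
while ((Get-ServiceFabricNode -NodeName $nodeName).NodeStatus -ne 'Up') {
    Start-Sleep -Seconds 15
}
```

Running this for one node at a time, with a health check in SFX in between, matches the order described in step j.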