This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

This article shows how to deploy an Azure Kubernetes Service (AKS) cluster with API Server VNET Integration. AKS clusters with API Server VNET Integration provide several advantages. For example, public network access or private cluster mode can be enabled or disabled without redeploying the cluster. You can find the companion code in this GitHub repo.

Prerequisites

- An active Azure subscription. If you don't have one, create a free Azure account before you begin.

- Visual Studio Code installed on one of the supported platforms along with the Bicep extension.

Architecture

The companion Azure code sample provides a Bicep and an ARM template to deploy a public or a private AKS cluster with API Server VNET Integration with Azure CNI network plugin and Dynamic IP Allocation. In a production environment, we strongly recommend deploying a private AKS cluster with Uptime SLA. For more information, see Private AKS cluster with a Public DNS address. Alternatively, you can deploy a public AKS cluster and secure access to the API server using authorized IP address ranges.

Both the Bicep and ARM templates deploy the following Azure resources:

- Microsoft.ContainerService/managedClusters: A public or private AKS cluster composed of:

  - a `system` node pool in a dedicated subnet. The default node pool hosts only critical system pods and services. The worker nodes have a node taint, which prevents application pods from being scheduled on this node pool.

  - a `user` node pool hosting user workloads and artifacts in a dedicated subnet.

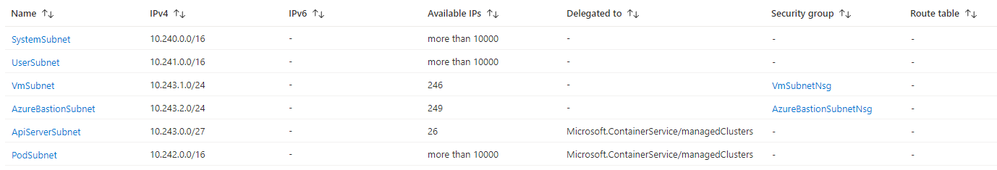

- Microsoft.Network/virtualNetworks: a new virtual network with six subnets:

  - `SystemSubnet`: this subnet is used for the agent nodes of the `system` node pool.

  - `UserSubnet`: this subnet is used for the agent nodes of the `user` node pool.

  - `PodSubnet`: this subnet is used to allocate private IP addresses to pods dynamically.

  - `ApiServerSubnet`: API Server VNET Integration projects the API server endpoint directly into this delegated subnet in the virtual network where the AKS cluster is deployed.

  - `AzureBastionSubnet`: a subnet for the Azure Bastion Host.

  - `VmSubnet`: a subnet for a jump-box virtual machine used to connect to the (private) AKS cluster and for the private endpoints.

- Microsoft.ManagedIdentity/userAssignedIdentities: a user-assigned managed identity used by the AKS cluster to create additional resources like load balancers and managed disks in Azure.

- Microsoft.Compute/virtualMachines: Bicep modules create a jump-box virtual machine to manage the private AKS cluster.

- Microsoft.Network/bastionHosts: a separate Azure Bastion is deployed in the AKS cluster virtual network to provide SSH connectivity to both agent nodes and virtual machines.

- Microsoft.Storage/storageAccounts: this storage account is used to store the boot diagnostics logs of the jump-box virtual machine. Boot Diagnostics is a debugging feature that allows you to view console output and screenshots to diagnose virtual machine status.

- Microsoft.ContainerRegistry/registries: an Azure Container Registry (ACR) to build, store, and manage container images and artifacts in a private registry for all container deployments.

- Microsoft.KeyVault/vaults: an Azure Key Vault used to store secrets, certificates, and keys that can be mounted as files by pods using Azure Key Vault Provider for Secrets Store CSI Driver. For more information, see Use the Azure Key Vault Provider for Secrets Store CSI Driver in an AKS cluster and Provide an identity to access the Azure Key Vault Provider for Secrets Store CSI Driver.

- Microsoft.Network/privateEndpoints: an Azure Private Endpoint is created for each of the following resources:

  - Azure Container Registry

  - Azure Key Vault

  - Azure Storage Account

  - API Server when deploying a private AKS cluster.

- Microsoft.Network/privateDnsZones: an Azure Private DNS Zone is created for each of the following resources:

  - Azure Container Registry

  - Azure Key Vault

  - Azure Storage Account

  - API Server when deploying a private AKS cluster.

- Microsoft.Network/networkSecurityGroups: subnets hosting virtual machines and Azure Bastion Hosts are protected by Azure Network Security Groups that are used to filter inbound and outbound traffic.

- Microsoft.OperationalInsights/workspaces: a centralized Azure Log Analytics workspace is used to collect the diagnostics logs and metrics from all the Azure resources:

  - Azure Kubernetes Service cluster

  - Azure Key Vault

  - Azure Network Security Group

  - Azure Container Registry

  - Azure Storage Account

- Microsoft.Resources/deploymentScripts: a deployment script is used to run the `install-helm-charts.sh` Bash script, which installs a set of packages on the AKS cluster via Helm. For more information on deployment scripts, see Use deployment scripts in Bicep.

NOTE

You can find the `architecture.vsdx` file used for the diagram under the `visio` folder.

What is Bicep?

Bicep is a domain-specific language (DSL) that uses a declarative syntax to deploy Azure resources. It provides concise syntax, reliable type safety, and support for code reuse. Bicep offers the best authoring experience for your infrastructure-as-code solutions in Azure.

API Server VNET Integration

An Azure Kubernetes Service (AKS) cluster configured with API Server VNET Integration projects the API server endpoint directly into a delegated subnet in the virtual network where the AKS cluster is deployed. This enables network communication between the API server and the cluster nodes without requiring a private link or tunnel. The API server will be available behind a Standard Internal Load Balancer VIP in the delegated subnet, which the agent nodes will be configured to utilize. The Internal Load Balancer is called kube-apiserver and is created in the node resource group, which contains all of the infrastructure resources associated with the cluster.

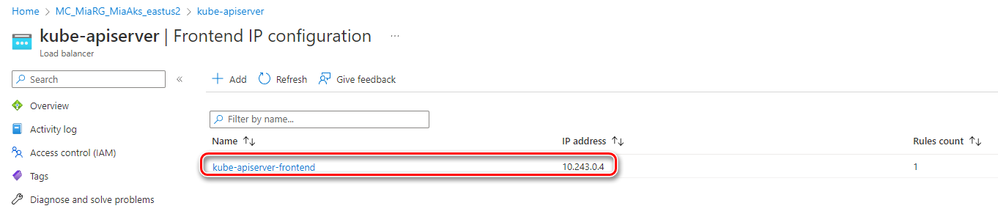

By using API Server VNET Integration, you can guarantee that the network traffic between your API server and your node pools remains in the virtual network. The control plane or API server is in an Azure Kubernetes Service (AKS)-managed Azure subscription. Your AKS cluster and node pools are instead in your Azure subscription. The agent nodes of your cluster can communicate with the API server through the API server VIP and pod IPs projected into the delegated subnet. The following figure shows the kube-apiserver-frontend frontend IP configuration of the kube-apiserver Internal Load Balancer used by agent nodes to invoke the API server in a cluster with API Server VNET Integration.

The kube-apiserver Internal Load Balancer has a backend pool called kube-apiserver-backendpool which contains the private IP address of the API Server pods projected in the delegated subnet.

API Server VNET Integration is supported for public or private clusters, and public access can be added or removed after cluster provisioning. Unlike non-VNET integrated clusters, the agent nodes always communicate directly with the private IP address of the API Server Internal Load Balancer (ILB) without using DNS. If you open an SSH session to any of the AKS cluster agent nodes via Azure Bastion Host and run the `sudo cat /var/lib/kubelet/kubeconfig` command to see the kubeconfig file, you will notice that the cluster server field contains the private IP address of the kube-apiserver load balancer instead of the FQDN of the API Server, as it would in a non-VNet integrated cluster.

kubeconfig of an AKS cluster with API Server VNET Integration

kubeconfig of an AKS cluster without API Server VNET Integration

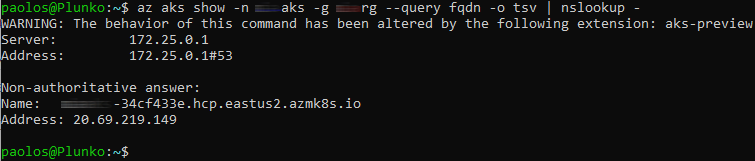
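If you only want the server address rather than the whole file, a small filter is enough. The sketch below is not part of the companion code: `kubeconfig_server` is a hypothetical helper name, and it assumes the kubeconfig uses the usual `server:` key on its own line.

```shell
# Hypothetical helper: print the value of every "server:" entry read from stdin.
# awk splits on whitespace, so indentation in the YAML does not matter.
kubeconfig_server() {
  awk '$1 == "server:" { print $2 }'
}

# Usage on an agent node (the path is the one mentioned in this article):
#   sudo cat /var/lib/kubelet/kubeconfig | kubeconfig_server
```

On a VNET-integrated cluster you would expect the printed URL to contain a private IP address, and on a non-integrated cluster the API server FQDN.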

All node-to-API-server traffic is kept on private networking, and no tunnel is required for API-server-to-node connectivity. Out-of-cluster clients needing to communicate with the API server can do so normally if public network access is enabled. If the AKS cluster is public and you are allowed to access the API Server from your machine, you can run the `az aks show -n <cluster-name> -g <cluster-resource-group> --query fqdn -o tsv | nslookup -` command to run nslookup against the FQDN of the API server, as shown in the following figure:

If you need to access the API server from a virtual machine located in the same virtual network as the AKS cluster, for example via kubectl, you can use the private IP address of the kube-apiserver-frontend frontend IP configuration of the kube-apiserver Internal Load Balancer to keep the traffic within the virtual network. If you use the API Server FQDN instead, the virtual machine will communicate with the API Server via a public IP. If public network access is disabled, any virtual machine in the cluster virtual network, or any peered virtual network, should follow the same private DNS setup methodology as a standard private AKS cluster. For more information, see Create a private AKS cluster with API Server VNET Integration using bring-your-own VNET.
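One way to look up that private IP address is with the Azure CLI. This is a minimal sketch, not taken from the companion repo: `apiserver_private_ip` is a hypothetical function name, the load balancer and frontend names are the ones described in this article, and the `privateIPAddress` query path is an assumption about the CLI's JSON output that you may need to adjust.

```shell
# Hypothetical helper: print the private IP of the API server load balancer frontend.
# Arguments: <cluster-resource-group> <cluster-name>
apiserver_private_ip() {
  local resource_group="$1" cluster_name="$2" node_rg
  # The kube-apiserver load balancer lives in the node resource group.
  node_rg="$(az aks show --resource-group "$resource_group" --name "$cluster_name" \
    --query nodeResourceGroup --output tsv)"
  # Assumption: the frontend's private address is exposed as privateIPAddress.
  az network lb frontend-ip show \
    --resource-group "$node_rg" \
    --lb-name kube-apiserver \
    --name kube-apiserver-frontend \
    --query privateIPAddress \
    --output tsv
}

# Usage: apiserver_private_ip <cluster-resource-group> <cluster-name>
```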

When using Bicep to deploy an AKS cluster with API Server VNET Integration, you need to proceed as follows:

- Create a dedicated subnet for the API Server. This subnet will be delegated to the `Microsoft.ContainerService/managedClusters` resource type and should not contain other Azure resources. In the companion Bicep code, this subnet is identified by `apiServerSubnetName`.

- Set the `enableVnetIntegration` property to `true` to enable API Server VNET Integration.

- Set the `subnetId` to the resource id of the delegated subnet where the API Server VIP and Pod IPs will be projected.
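For comparison, the same steps can be sketched with the Azure CLI instead of Bicep. This is not the companion code: the function names and argument order are hypothetical, and depending on your Azure CLI version the API Server VNET Integration flags may require the `aks-preview` extension.

```shell
# Step 1: create the API server subnet and delegate it to AKS.
# Arguments: <resource-group> <vnet-name> <subnet-name> <address-prefix>
create_apiserver_subnet() {
  az network vnet subnet create \
    --resource-group "$1" \
    --vnet-name "$2" \
    --name "$3" \
    --address-prefixes "$4" \
    --delegations Microsoft.ContainerService/managedClusters
}

# Steps 2 and 3: enable VNET integration and point it at the delegated subnet.
# Arguments: <resource-group> <cluster-name> <node-subnet-id>
#            <apiserver-subnet-id> <managed-identity-resource-id>
create_vnet_integrated_cluster() {
  az aks create \
    --resource-group "$1" \
    --name "$2" \
    --network-plugin azure \
    --vnet-subnet-id "$3" \
    --enable-apiserver-vnet-integration \
    --apiserver-subnet-id "$4" \
    --assign-identity "$5"
}
```

The `--enable-apiserver-vnet-integration` and `--apiserver-subnet-id` flags play the roles of `enableVnetIntegration` and `subnetId` in the Bicep template.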

Convert an existing AKS cluster to API Server VNET Integration

Existing AKS public clusters can be converted to API Server VNET Integration clusters by supplying an API server subnet that meets the following requirements:

- The supplied subnet needs to be in the same virtual network as the cluster nodes

- Network Contributor permissions need to be granted to the AKS cluster identity

- Subnet CIDR size needs to be at least /28

- The subnet should not contain other Azure resources.

This is a one-way migration; clusters cannot have API Server VNET Integration disabled after enabling it. This upgrade will perform a node-image version upgrade on all agent nodes. All the workloads will be restarted as all nodes undergo a rolling image upgrade.

Converting a cluster to API Server VNET Integration will result in a change of the API Server IP address, though the hostname will remain the same. If the IP address of the API server has been configured in any firewalls or network security group rules, those rules may need to be updated.
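The conversion itself can be sketched as a single `az aks update` call guarded by the subnet size requirement listed above. Both function names are hypothetical, and the check only looks at the prefix length of a CIDR string that you pass in; it does not query Azure.

```shell
# Succeeds when the CIDR prefix is /28 or larger (prefix length 28 or less).
# Expects CIDR notation such as 10.0.4.0/28.
subnet_is_large_enough() {
  local prefix_len="${1##*/}"
  [ "$prefix_len" -le 28 ]
}

# Arguments: <resource-group> <cluster-name> <apiserver-subnet-id> <apiserver-subnet-cidr>
convert_to_vnet_integration() {
  local resource_group="$1" cluster_name="$2" subnet_id="$3" subnet_cidr="$4"
  if ! subnet_is_large_enough "$subnet_cidr"; then
    echo "API server subnet $subnet_cidr is smaller than /28" >&2
    return 1
  fi
  # One-way migration: this triggers a rolling node image upgrade.
  az aks update \
    --resource-group "$resource_group" \
    --name "$cluster_name" \
    --enable-apiserver-vnet-integration \
    --apiserver-subnet-id "$subnet_id"
}
```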

Enable or disable private cluster mode on an existing cluster with API Server VNET Integration

AKS clusters configured with API Server VNET Integration can have public network access/private cluster mode enabled or disabled without redeploying the cluster. The API server hostname will not change, but public DNS entries will be modified or removed as appropriate.

Enable private cluster mode
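With the Azure CLI, enabling private cluster mode on a VNET-integrated cluster is an `az aks update` call. The wrapper function below is only a hypothetical convenience around that command.

```shell
# Arguments: <resource-group> <cluster-name>
enable_private_cluster() {
  az aks update \
    --resource-group "$1" \
    --name "$2" \
    --enable-private-cluster
}
```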

Disable private cluster mode
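Disabling private cluster mode follows the same pattern with the opposite flag. As before, the wrapper function name is hypothetical.

```shell
# Arguments: <resource-group> <cluster-name>
disable_private_cluster() {
  az aks update \
    --resource-group "$1" \
    --name "$2" \
    --disable-private-cluster
}
```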

Limitations

- Existing AKS private clusters cannot be converted to API Server VNET Integration clusters at this time.

- Private Link Service will not work if deployed against the API Server injected addresses at this time, so the API server cannot be exposed to other virtual networks via private link. To access the API server from outside the cluster network, utilize either VNet peering or AKS run command.
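As an illustration of the AKS run command option, the sketch below wraps `az aks command invoke` so that a kubectl command runs inside the cluster without direct network access to the API server. The `run_in_cluster` name is hypothetical.

```shell
# Arguments: <resource-group> <cluster-name> <command words...>
run_in_cluster() {
  local resource_group="$1" cluster_name="$2"
  shift 2
  # Everything after the first two arguments is passed as one command string.
  az aks command invoke \
    --resource-group "$resource_group" \
    --name "$cluster_name" \
    --command "$*"
}

# Usage: run_in_cluster <resource-group> <cluster-name> kubectl get nodes
```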

Deploy the Bicep modules

You can deploy the Bicep modules in the bicep folder using the deploy.sh Bash script in the same folder. Specify a value for the following parameters in the deploy.sh script and main.parameters.json parameters file before deploying the Bicep modules.

- `prefix`: specifies a prefix for the AKS cluster and other Azure resources.

- `authenticationType`: specifies the type of authentication when accessing the Virtual Machine. `sshPublicKey` is the recommended value. Allowed values: `sshPublicKey` and `password`.

- `vmAdminUsername`: specifies the name of the administrator account of the virtual machine.

- `vmAdminPasswordOrKey`: specifies the SSH Key or password for the virtual machine.

- `aksClusterSshPublicKey`: specifies the SSH public key for AKS cluster agent nodes.

- `aadProfileAdminGroupObjectIDs`: when deploying an AKS cluster with Azure AD and Azure RBAC integration, this array parameter contains the list of Azure AD group object IDs that will have the admin role of the cluster.

- `keyVaultObjectIds`: specifies the object ID of the service principals to configure in Key Vault access policies.

We suggest reading sensitive configuration data such as passwords or SSH keys from a pre-existing Azure Key Vault resource. For more information, see Use Azure Key Vault to pass secure parameter value during Bicep deployment.
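The exact contents of `deploy.sh` are in the companion repo and are not reproduced here. As a rough sketch of what a resource-group-scoped Bicep deployment looks like with the Azure CLI, the function below passes a parameters file plus an inline override for `prefix`. The function name and the `main.bicep` file name in the usage line are assumptions.

```shell
# Arguments: <resource-group> <template-file> <parameters-file> <prefix>
deploy_bicep() {
  az deployment group create \
    --resource-group "$1" \
    --template-file "$2" \
    --parameters "@$3" \
    --parameters prefix="$4"
}

# Usage (file names are assumptions):
#   deploy_bicep <resource-group> main.bicep main.parameters.json <prefix>
```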

Review deployed resources

Use the Azure portal, Azure CLI, or Azure PowerShell to list the deployed resources in the resource group.

Azure CLI
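A minimal way to list the deployed resources with the Azure CLI is `az resource list` scoped to the resource group. The wrapper function name is hypothetical.

```shell
# Arguments: <resource-group>
list_resources() {
  az resource list --resource-group "$1" --output table
}
```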

PowerShell

Azure Portal

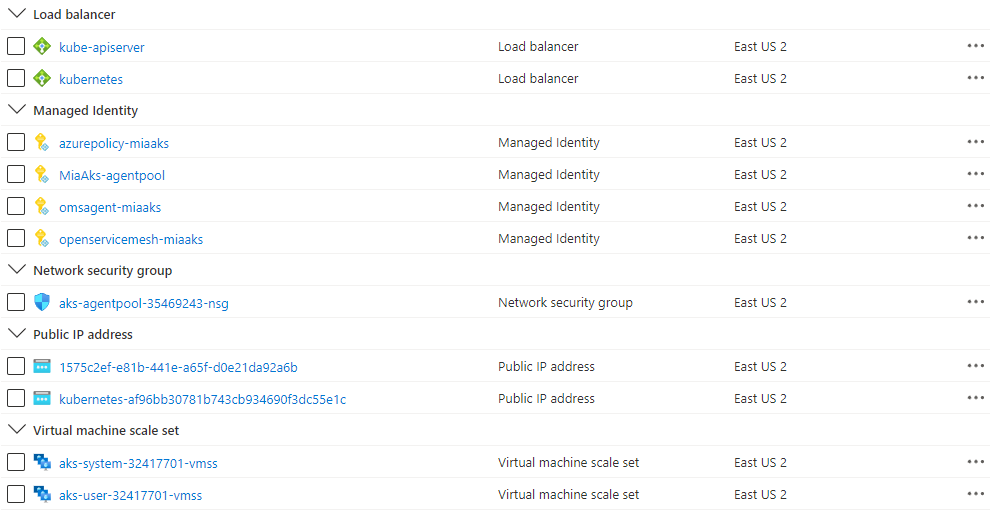

Figure: Azure Resources in the resource group.

Figure: Azure Resources in the node resource group.

Figure: Subnets in the BYO virtual network.

Clean up resources

When you no longer need the resources you created, just delete the resource group. This will remove all the Azure resources.
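With the Azure CLI, deleting the resource group can be sketched as follows. The function name is hypothetical; `--yes` skips the confirmation prompt and `--no-wait` returns without waiting for the deletion to finish, so double-check the resource group name before running it.

```shell
# Arguments: <resource-group>
delete_resource_group() {
  az group delete --name "$1" --yes --no-wait
}
```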

Next Steps

For more information, see Create an Azure Kubernetes Service cluster with API Server VNET Integration