This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs.

Introduction

There are many good reasons to enable Azure Diagnostics on your Azure PaaS resources: auditing who has been accessing a Key Vault, troubleshooting failed requests to a Storage Account, performing a forensic analysis of a compromised Azure SQL Server, and so on. Azure resource logging is recommended as part of the Operational Excellence and Security pillars of the Well-Architected Framework. Furthermore, you’ll also increase your Azure Secure Score, as enabling auditing and logging is one of the assessed controls of your security posture.

Azure customers mostly prefer to enable Azure Diagnostics at scale and ensure recommended best practices are always applied. Among the several deployment options, implementing Azure Policy is the preferable strategy. There are many built-in DeployIfNotExists policies for the different Azure resource types, so the effort involved is almost negligible.

“OK, I got it, but how much will this cost?”, you may ask. This is a perfectly valid question, and it’s something my customers ask over and over, each time I recommend enabling Azure Diagnostics at scale for a specific resource type. This post is an attempt to teach you how to estimate your Azure Diagnostics costs before you deploy them at scale.

Strategy

Azure Diagnostics is priced differently depending on the type of destination you select for your logs: Log Analytics, Storage Account, Event Hubs or a partner solution. For the sake of simplicity, and assuming Log Analytics is the preferred destination type (the easiest and most integrated way of consuming your logs), this post focuses on Log Analytics ingestion costs. The principles described here apply to the other destination types as well because, in the end, your Azure Diagnostics costs will always be proportional to the volume of data sent to the chosen destination.

Because Log Analytics is billed for ingestion volume (in GB) and retention volume (GB/month after 31 days of free retention), we must first determine the typical size of a log entry for the resource on which we are going to enable Azure Diagnostics. Secondly, by leveraging Azure Monitor metrics or another metrics provider, we calculate how many log entries our resources will potentially generate per month. Depending on the resource type, we need to identify which metric gives us this information. With the estimated entries per month and the log entry size at hand, we can easily get a rough estimate of the costs with this generic formula: entries per month * approximate log entry size * (ingestion cost + non-free retention cost), where the non-free retention cost is the monthly retention price multiplied by the number of billable retention months.

As examples, we’ll use Key Vault, AKS and SQL Server audit events. The principles described here apply to other Azure resource types as well. All the cost estimates in this article use the West Europe PAYG retail prices in EUR from the Azure Monitor Pricing page.
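The generic formula can be sketched as a small Python helper. This is only an illustration under stated assumptions: the function and field names are made up for this example, 1 GB is taken as 1,024³ bytes, the number of billable retention months is passed in directly, and the prices in the usage line are sample values that you should replace with the current ones for your region.

```python
from dataclasses import dataclass

BYTES_PER_GB = 1024 ** 3  # assumption: binary gigabytes


@dataclass
class CostEstimate:
    volume_gb: float        # data ingested per month
    ingestion_cost: float   # cost of ingesting that volume
    retention_cost: float   # cost of keeping it beyond the free period

    @property
    def total(self) -> float:
        return self.ingestion_cost + self.retention_cost


def estimate_monthly_cost(entries_per_month, entry_size_bytes,
                          ingestion_price_per_gb,
                          retention_price_per_gb_month,
                          billable_retention_months):
    """entries * size * (ingestion price + retention price * billable months)."""
    volume_gb = entries_per_month * entry_size_bytes / BYTES_PER_GB
    return CostEstimate(
        volume_gb=volume_gb,
        ingestion_cost=volume_gb * ingestion_price_per_gb,
        retention_cost=(volume_gb * billable_retention_months
                        * retention_price_per_gb_month),
    )


# Sanity check: 1,048,576 entries of 1,024 bytes each is exactly 1 GB.
example = estimate_monthly_cost(
    entries_per_month=1_048_576,
    entry_size_bytes=1024,
    ingestion_price_per_gb=2.955,        # sample price, EUR per GB
    retention_price_per_gb_month=0.129,  # sample price, EUR per GB per month
    billable_retention_months=2,
)
print(example, round(example.total, 3))
```

Keeping the volume, ingestion and retention figures separate makes it easier to see which part of the bill reacts to a change in retention settings and which part reacts to a change in log volume.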

Key Vault audit logs cost

Let’s start with the simplest one! Azure Key Vault currently supports two different log categories and we’re looking here at the most used one – Audit Logs.

How do we know the typical size of a Key Vault Audit Log entry? This article gives you a hint about the approximate size, but expect some variation, as your Key Vault may generate slightly larger or smaller entries, depending on the Key Vault name, resource group, client agent or request string lengths. The best way to estimate a log entry size is to enable Diagnostic Settings in a single representative Key Vault and then run this query in the Log Analytics workspace you chose as destination:

AzureDiagnostics

| where Category == 'AuditEvent' and TimeGenerated > ago(30d)

| summarize percentile(_BilledSize, 95), round(avg(_BilledSize))

You’ll get results close to this:

This means that 95% of the audit log entries of the past 30 days were at most 931 bytes in size and that a log entry is on average 912 bytes long. Depending on how conservative you are, you’ll choose one or the other value (or somewhere in the middle). You may ask how I knew which Log Analytics table and Diagnostic Logs category to query. With some exceptions, Azure Diagnostics logs are written to the AzureDiagnostics table. The log categories for each resource type are documented here.

Now, let’s calculate the number of Key Vault audit log entries our environment potentially generates each month. In the Metrics blade of a Key Vault resource, we have several metrics available. One of them is “Total Service Api Results”, which gives you the number of requests to your Key Vault, each of which ultimately generates a log entry (see an example in the picture below, where a specific Key Vault got 2.54 K requests in the last month).

While this approach is viable when you have only a few resources, it does not scale well when you have tens or hundreds of resources. An alternative, more scalable solution is to look at the Key Vault Insights blade in Azure Monitor. You can see per-subscription aggregates and thus easily get an idea of how many Key Vault requests your environment gets overall every month (81, in the example below).

OK, we know each log entry is about 912 bytes long. Assuming our environment gets a hypothetical 1 million requests per month across all Key Vaults, we have 1M * 912 bytes = 912,000,000 bytes ≈ 0.85 GB of data ingested into Log Analytics per month (taking 1 GB as 1,024³ bytes). Assuming we’ve set the Log Analytics retention to 90 days, which leaves roughly 2 billable months after the 31 free days, we have:

- Ingestion costs: 0.85 * 2.955 EUR = 2.51 EUR

- Retention costs (31 days free): 0.85 * 2 months * 0.129 EUR = 0.22 EUR

As you see, it’s an easy estimate to do and, unless you have lots of Key Vaults being hit hard, the Log Analytics costs will normally be low.
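To see how much the choice between the average and the 95th percentile entry size matters, the Key Vault arithmetic can be repeated for both values. The sketch below is illustrative only: the function name is invented, 1 GB is assumed to be 1,024³ bytes, and the prices and the 2 billable retention months are the ones used in this section.

```python
BYTES_PER_GB = 1024 ** 3            # assumption: 1 GB = 1,024^3 bytes
INGESTION_EUR_PER_GB = 2.955        # price used in this article
RETENTION_EUR_PER_GB_MONTH = 0.129  # price used in this article
BILLABLE_RETENTION_MONTHS = 2       # 90 days minus 31 free days, rounded


def key_vault_costs(requests_per_month, entry_size_bytes):
    """Return (GB per month, ingestion EUR, retention EUR), rounded to cents."""
    volume_gb = requests_per_month * entry_size_bytes / BYTES_PER_GB
    ingestion = volume_gb * INGESTION_EUR_PER_GB
    retention = (volume_gb * BILLABLE_RETENTION_MONTHS
                 * RETENTION_EUR_PER_GB_MONTH)
    return round(volume_gb, 2), round(ingestion, 2), round(retention, 2)


# 1 million requests per month, sized with the average and with the p95.
average_case = key_vault_costs(1_000_000, 912)
conservative_case = key_vault_costs(1_000_000, 931)

print("average size (912 B):", average_case)
print("p95 size (931 B):    ", conservative_case)
```

For Key Vault the two sizes are so close that the estimates differ by only a few cents, so the request count is by far the more important input to get right.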

Azure Kubernetes Service (AKS) audit events cost

AKS diagnostic logs are divided into several categories with different purposes, ranging from logs of the requests handled by the Kubernetes API Server to scheduler or storage driver logs. You can find more details in the AKS documentation. Estimating the costs of each log category is not a simple task. To make things a bit less difficult, we’re focusing on one of the most popular categories, kube-audit, which logs the audit events exported from the Kubernetes API Server and is especially relevant from the point of view of cost analysis and estimation.

If we had an Azure Monitor metric for AKS that exposed the total number of audit events generated by the API Server, our estimates would be very simple to achieve. But, alas, that’s not the case. The only metric available for the AKS API Server is “Inflight Requests”, which gives us an idea of the load on the API Server but is not helpful in extrapolating the number of audit events at a given time interval. Unfortunately, the Azure Monitor Containers Insights solution is not of help either, as it does not surface API Server metrics.

We therefore have to use other tools to directly access the metrics exposed by the Kubernetes API Server. For example, we can collect those metrics with the help of Prometheus (see this article for a very simple and quick Prometheus deployment on your AKS cluster) and query for the apiserver_audit_event_total counter.

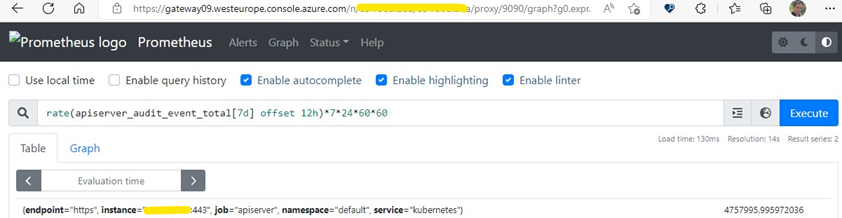

After setting up Prometheus in my cluster and letting it collect metrics for at least one week, I port-forwarded the Prometheus Graph endpoint (see instructions in the above-mentioned article) and wrote the PromQL query below to get the approximate number of audit events that occurred in a 7-day timeframe.

rate(apiserver_audit_event_total[7d] offset 12h) *7*24*60*60

In the query above, I had to use the rate function to account for counter resets, and I added a 12-hour offset to be sure my recent unusual activity in the cluster wasn’t influencing the results. The rate function gives me the per-second rate of the counter, so I had to multiply the result by the number of seconds in a week.

However, correlating this number with what we can expect to have as log entries in Log Analytics is not straightforward, because it depends on the Prometheus configuration and on the Kubernetes Audit policy that is in place. Using the default Prometheus setup described in the article above and enabling kube-audit Azure Diagnostics for a few days in my AKS clusters, I consistently got a 70-80% ratio between the audit events measured by Prometheus and the Log Analytics log entries. For example, for every 10K Log Analytics entries, I had 7-8K audit events measured by Prometheus. Please do not blindly follow these numbers; run your own diagnostics tests for a short period of time in a representative cluster. If you can’t access API Server metrics, you can simply try to extrapolate the numbers you got from the diagnostics test and assume that other AKS clusters have potentially X or Y percent more or fewer audit events. This approach is much more error-prone, of course.
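The conversion from a per-second rate to an event count is plain multiplication, and the same rate can be stretched to a 30-day month for the cost estimate. The snippet below is a minimal sketch: the rate value is hypothetical, standing in for whatever number the PromQL rate expression returns in your cluster, and the 30-day month is an assumption.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def events_in_window(rate_per_second, days):
    """Turn an average per-second event rate into a count over `days` days."""
    return rate_per_second * days * SECONDS_PER_DAY


# Hypothetical value returned by rate(apiserver_audit_event_total[7d] offset 12h).
observed_rate = 4.0

weekly_events = events_in_window(observed_rate, 7)    # same as rate * 7*24*60*60
monthly_events = events_in_window(observed_rate, 30)  # assumes a 30-day month

print(f"per week:  {weekly_events:,.0f}")
print(f"per month: {monthly_events:,.0f}")
```

Extrapolating one week to a month assumes the sampled week is representative, so it is worth sampling a period that includes the cluster's usual deployment and batch activity.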

Finally, having at hand the number of audit events per month, we must do the usual math, based on the average size of each log entry. In my lab, using a cluster without any workload other than Prometheus, the average log entry size was about 2 KB.

For example, suppose Prometheus reports 10 M audit events per month for a cluster, which corresponds to 12.5 M Log Analytics entries, and that I have 5 AKS clusters in total, all showing the same audit event counts. This means I will have 62.5 M log entries * 2 KB ≈ 119 GB per month, and thus, with the same 90-day retention as before:

- Ingestion costs: 119 * 2.955 EUR = 351.65 EUR

- Retention costs (31 days free): 119 * 2 months * 0.129 EUR = 30.70 EUR
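The whole AKS chain, from Prometheus audit events to euros, can be sketched in a few lines. The function name is invented for illustration, 2 KB is taken as 2,048 bytes and 1 GB as 1,024³ bytes, and the 0.8 ratio is the upper end of the 70-80% range I measured, so treat it as an assumption to validate in your own clusters. With these assumptions, 62.5 M entries come to roughly 119 GB per month.

```python
BYTES_PER_GB = 1024 ** 3            # assumption: 1 GB = 1,024^3 bytes
INGESTION_EUR_PER_GB = 2.955        # price used in this article
RETENTION_EUR_PER_GB_MONTH = 0.129  # price used in this article


def aks_audit_estimate(prometheus_events_per_month, events_to_entries_ratio,
                       clusters, entry_size_bytes, billable_retention_months):
    """Return (total entries, GB per month, ingestion EUR, retention EUR)."""
    # Prometheus saw only a fraction of what Log Analytics receives,
    # so divide by the measured ratio to get log entries per cluster.
    entries_per_cluster = round(prometheus_events_per_month
                                / events_to_entries_ratio)
    total_entries = entries_per_cluster * clusters
    volume_gb = total_entries * entry_size_bytes / BYTES_PER_GB
    ingestion = volume_gb * INGESTION_EUR_PER_GB
    retention = (volume_gb * billable_retention_months
                 * RETENTION_EUR_PER_GB_MONTH)
    return total_entries, volume_gb, ingestion, retention


total_entries, volume_gb, ingestion, retention = aks_audit_estimate(
    prometheus_events_per_month=10_000_000,
    events_to_entries_ratio=0.8,   # assumption: measure this in your cluster
    clusters=5,
    entry_size_bytes=2 * 1024,     # about 2 KB per entry
    billable_retention_months=2,   # 90 days minus 31 free days, rounded
)
print(f"{total_entries:,} entries, {volume_gb:.1f} GB per month")
print(f"ingestion {ingestion:.2f} EUR, retention {retention:.2f} EUR")
```

Because the code does not round the volume to whole gigabytes before pricing, its results can differ from a hand calculation by a fraction of a euro.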

SQL Server Auditing cost

This is one of the toughest, but we won’t give up, as it’s always possible to produce some overall estimate, even if it is highly error-prone. And why is it so difficult to estimate SQL Server Auditing costs? For two main reasons: 1) each SQL Database has a different schema and is subject to a different load, with different querying behavior; 2) there isn’t a reliable metric or set of metrics in SQL Server that can help us identify how many auditable operations are happening at each moment or over a given timespan.

Azure SQL Server Auditing logs can be very verbose, as they include by default many details of the operations, including the SQL statements. You can and should review the auditing policy that is defined in the Azure SQL Server instance, so that you have more control over what is logged.

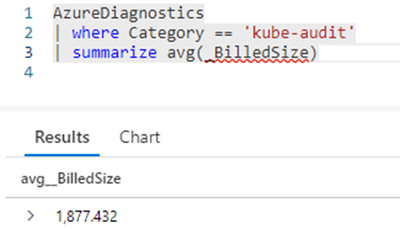

Having said that, the only (still unreliable) option for producing an estimate is to enable SQL Auditing on an Azure SQL Server instance or database for a few days and then try to extrapolate the log volume to the other instances in your environment.
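Such an extrapolation can be sketched as follows. Everything here is hypothetical: the function name, the sample figures and the load factors are placeholders, and the sampled volume is assumed to have been measured in the Log Analytics workspace (for instance by summing _BilledSize for the audit entries collected during the test). Given how unreliable the method is, it seems safer to produce a range than a single number.

```python
def extrapolate_monthly_gb(sample_gb, sample_days, instances,
                           load_factor=1.0, days_per_month=30):
    """Scale a short audit-log sample from one instance to a monthly total.

    load_factor expresses how much busier (or quieter) the other instances
    are assumed to be compared with the sampled one.
    """
    daily_gb_per_instance = sample_gb / sample_days
    return daily_gb_per_instance * days_per_month * instances * load_factor


# Hypothetical sample: 1.5 GB of audit logs collected in 3 days on one
# instance, extrapolated to 10 instances.
expected = extrapolate_monthly_gb(1.5, 3, instances=10)
low = extrapolate_monthly_gb(1.5, 3, instances=10, load_factor=0.5)
high = extrapolate_monthly_gb(1.5, 3, instances=10, load_factor=2.0)

print(f"monthly volume: {expected:.0f} GB (range {low:.0f} to {high:.0f} GB)")
```

The resulting volume can then be priced with the same ingestion and retention arithmetic used for Key Vault and AKS.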

Conclusion

Accurately estimating Azure Diagnostics costs can be either very easy or almost impossible. It will depend on the resource type you are monitoring. I hope this article gave you some references and food for thought for your estimations! Happy monitoring!

A special thanks to Alexandre Vieira for reviewing this article.