This post has been republished via RSS; it originally appeared at: Microsoft Tech Community - Latest Blogs - .

Identity & Access Control

The first topic in Identity & Access Control is cluster and operator identity: access control for the cluster itself and for the people (or pipelines) that interact with the cluster's API server. The other important part is pod identity.

Cluster Identity

Let’s start with identity access control of the cluster itself. When you are creating an AKS cluster, the cluster will get an identity in Azure. This identity is the one that the control plane of the cluster uses to interact with Azure resources and manage cluster resources, including ingress load balancers and AKS managed public IPs, Cluster Autoscaler, Azure Disk & File CSI drivers. It is used in a lot of different operations that the control plane nodes running in the cluster need to perform against the Azure management plane.

Use a managed identity for the control plane identity in AKS

There are two kinds of identities that the cluster can use. The old way of configuring a cluster identity was to pass a service principal at AKS cluster creation time. This is not recommended.

The recommendation would be to use a managed identity in Azure, which is an identity that is fully managed by Azure. That means you don’t need to care about credentials, you don’t need to store the password for that identity anywhere. Even more, depending on the type of the managed identity that you use, the lifecycle of each identity can also be fully managed by Azure.

You can assign a managed identity when you create an AKS cluster by passing the --enable-managed-identity parameter to the az aks create command; this is the default option.

System-assigned VS User-assigned Managed Identity for the Cluster Identity

If you choose to use a managed identity (MI) you have two choices in Azure. It can be either a system-assigned MI or a user-assigned MI.

In the case of a system-assigned MI, Azure is fully responsible for handling both the credentials and the lifecycle of this identity. The identity is bound to the lifecycle of the service it is tied to, which in this case is the AKS cluster: Azure creates it together with the cluster, and you can then start assigning RBAC permissions to it so that it can access other Azure resources. If some day you no longer need the cluster and delete it, the identity is deleted as well.

The other type of MI you can use is a user-assigned MI. In this case, the lifecycle of the identity is controlled by you: you create it beforehand as a separate Azure resource inside your resource group and assign RBAC permissions to it so that it can access other Azure resources. Even if you delete the resource the identity is associated with, the identity remains. You still do not have to take care of the identity's credentials, as these are managed by Azure.

Back to the AKS cluster scenario: when you pass the --enable-managed-identity flag, it defaults to a system-assigned MI for the control plane identity, with default Contributor role permissions on the cluster's resource group, which is created and maintained by Azure. You can also specify another parameter called --assign-identity to give the AKS cluster a user-assigned MI that you have pre-created. Both options (system-assigned / user-assigned MI) are equally fine.

One scenario where you want to use a user-assigned MI is when you pre-provision the cluster by providing a subnet, where you want to deploy the cluster. In this case, the identity that gets created will need to have certain permissions on those Azure resources. For example, if you configure a private cluster, with private DNS zones, it will require certain permissions over specific resources. If you have this identity pre-created, then you can make sure that it has all the necessary permissions beforehand.

Another scenario where you would want a user-assigned managed identity instead of a system-assigned one is when you expect to recreate your cluster. A system-assigned managed identity is tied to the lifecycle of the cluster, so it is deleted along with it. With a user-assigned managed identity you can recreate the cluster using the same identity and do not have to reassign all the permissions it needs.
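
To make the two options concrete, here is a minimal sketch that only assembles (and prints) the az aks create command line for each case; it does not call Azure. The helper name aks_create_args, the resource group, the cluster name and the identity resource ID are all hypothetical placeholders, while the flags are the ones discussed above.

```python
import shlex
from typing import List, Optional


def aks_create_args(resource_group: str, cluster_name: str,
                    control_plane_identity_id: Optional[str] = None) -> List[str]:
    """Assemble (but do not run) an `az aks create` call for either identity mode."""
    args = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--enable-managed-identity",
    ]
    if control_plane_identity_id:
        # User-assigned MI: pass the resource ID of the pre-created identity.
        args += ["--assign-identity", control_plane_identity_id]
    return args


# Hypothetical resource ID, for illustration only.
identity_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-identities/providers"
    "/Microsoft.ManagedIdentity/userAssignedIdentities/id-aks-control-plane"
)

# System-assigned MI (no --assign-identity), then user-assigned MI.
print(shlex.join(aks_create_args("rg-demo", "aks-demo")))
print(shlex.join(aks_create_args("rg-demo", "aks-demo", identity_id)))
```

Because the user-assigned identity exists before the cluster does, its role assignments (for example on a pre-provisioned subnet) can be put in place before the command is ever run.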

Bring your own identity in AKS

In AKS, when you create a cluster, by default, two identities are going to be created: Control plane and Kubelet identity. For the control plane identity, as mentioned above, a system-assigned MI is going to be created.

The kubelet identity is created as a user-assigned one, but by default it is managed by Azure and is created inside the managed MC_{resourceGroupName}_{aksClusterName}_{aksClusterLocation} resource group that Azure creates automatically.

An alternative is to provide your own identity, which lives somewhere else (in a different resource group and not in the managed MC_{resourceGroupName}_{aksClusterName}_{aksClusterLocation} one that Azure automatically creates), and which you are then responsible for managing. This is why this scenario is called "Bring your own identity in AKS" as opposed to "user-assigned identities": the default one (for kubelet at least) is also a user-assigned identity.

Summary of managed identities in AKS (Control plane & Kubelet)

In this summary of managed identities table, you can find all the different identities that you can have in an AKS cluster, which the cluster uses for built-in services and add-ons. The most important and mandatory ones are:

- Control plane identity:

  - Used by the AKS control plane to do things like joining VMs to VNETs or scaling node pools up / down.

- Kubelet identity:

  - Each node pool is essentially a VM scale set (VMSS) under the hood:

    - Each VMSS in Azure can have multiple identities attached to it, and any VM in that scale set can use those identities.

  - By default, a kubelet identity is attached to each node pool and any VM can request a token for that identity:

    - At a minimum, this identity is used on any cluster to connect to an Azure Container Registry (ACR).

    - When you pass another parameter called --attach-acr at cluster creation time, you give the kubelet identity image pull permissions on your container registry:

      - This is the bare minimum permission that a kubelet identity needs.

      - You can also do this after cluster creation by going to the ACR resource and giving the AcrPull role to the kubelet identity, but if you pass the --attach-acr parameter during cluster creation, this happens by default.

      - Ideally, the kubelet identity should not have any more permissions than this one.

There are also many other identities, most of which do not support “bring your own identity”; which ones exist depends on the AKS add-ons you decide to enable. For example, there is the “omsagent” MI, which is used to connect to Log Analytics and send AKS metrics to Azure Monitor, and which needs to be assigned the Monitoring Metrics Publisher role.

Only the first two (control plane & kubelet) are mandatory, and for these two you can bring your own identity by specifying the --assign-identity and --assign-kubelet-identity parameters of the az aks create command during cluster creation.

With --assign-identity parameter, you are essentially providing a user-assigned instead of a system-assigned MI for the control plane. And with --assign-kubelet-identity, you are just overriding the user-assigned MI that Azure creates by default with one user-assigned MI you have already pre-created. You are not letting Azure generate this by default.
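
As a rough illustration of how these parameters combine, the sketch below builds only the identity-related flags for az aks create. The function name byo_identity_flags and all resource IDs are hypothetical. The check that a custom kubelet identity needs a user-assigned control plane identity reflects my understanding of the AKS requirements and is worth verifying against the current documentation.

```python
import shlex
from typing import List, Optional


def byo_identity_flags(control_plane_id: Optional[str] = None,
                       kubelet_id: Optional[str] = None,
                       acr_name: Optional[str] = None) -> List[str]:
    """Return the extra `az aks create` flags for bring-your-own identities."""
    if kubelet_id and not control_plane_id:
        # Assumption (verify in the AKS docs): a pre-created kubelet identity
        # is only accepted together with a user-assigned control plane identity.
        raise ValueError("kubelet identity requires a user-assigned control plane identity")
    flags: List[str] = []
    if control_plane_id:
        flags += ["--assign-identity", control_plane_id]
    if kubelet_id:
        flags += ["--assign-kubelet-identity", kubelet_id]
    if acr_name:
        # Gives the kubelet identity image pull access on the registry.
        flags += ["--attach-acr", acr_name]
    return flags


# Hypothetical identifiers, for illustration only.
base = "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-identities"
provider = "/providers/Microsoft.ManagedIdentity/userAssignedIdentities/"
print(shlex.join(byo_identity_flags(
    control_plane_id=base + provider + "id-aks-control-plane",
    kubelet_id=base + provider + "id-aks-kubelet",
    acr_name="demoregistry",
)))
```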

Operator Identity

The second important thing is the operator identity, meaning the people who are interacting with the API server of the cluster. Traditionally this could be a human (e.g., developer) operator, or maybe a DevOps pipeline that is used to deploy the different things in the AKS cluster.

Authenticating to AKS Control Plane’s API Server

Use Azure AD Integration for Authenticating to AKS Control Plane’s API Server

Those persons / machines will also need an identity. In AKS, when you create a cluster, you get a system node pool, by default, which comes in the form of VMSS. You also get an Azure managed control plane, which exposes an API server. The API server is exposed either publicly over the internet, or privately and that API is where you connect and do all those things to a cluster from a management point of view. This API server component is the one which the kubectl CLI command connects to.

Let's examine how someone (or something) can be authenticated to this API server. By default, when you create a cluster, if you are an Azure user that has access permissions to the AKS resource itself, you can try to connect to the API server, depending on your level of permissions in the underlying resource group / subscription / management group that the AKS cluster belongs to. The way you would do that is by running a command like az aks get-credentials -g {resource-group-name} -n {aks-cluster-name}, after you have been authenticated with your credentials using the az login command.

This command connects to the Azure management plane and downloads a local kubeconfig file, which is where Kubernetes credentials are stored. The format of this file depends on how authentication is configured in the AKS cluster. By default, Kubernetes uses service accounts, and when you run the az aks get-credentials command it downloads a kubeconfig file that contains a certificate-based credential from the local service account in Kubernetes. Once you have this certificate-based config file mapped to a service account, any time you run a kubectl command you will be impersonating that service account.

In Azure, identity in cloud scenarios is handled by Azure Active Directory (AAD). Ideally, people should avoid downloading local certificate-based credentials in kubeconfig files, because these completely bypass the Azure AD authentication provider. AKS has a way to change this default behavior. If you pass the --enable-aad parameter to the az aks create command when you create a cluster, you can authenticate not only with Kubernetes service accounts but also with Azure AD as your authentication provider. This is the recommended way to go, as you get all the Azure AD features (e.g., logging, MFA, conditional access policies).

But most importantly, if you connect to an AKS cluster using Azure AD, what you get in your kubeconfig file is not a certificate-based token but an OAuth2.0 token. This means you get things like a short default expiration (on the order of an hour, rather than a year) and a refresh token. A certificate-based token, on the other hand, has a default validity of 1 year, so if it were somehow compromised, it would be extremely difficult for you to find out and to revoke it.

If you enable the AAD feature for the cluster, then when you try to run a kubectl command against the API server, you get an interactive Azure AD login experience, and that way you authenticate yourself to the API server of your cluster using Azure AD OAuth tokens.

Bootstrap the cluster with --aad-admin-group-object-ids for your cluster admins

Another important thing to keep in mind is, even if you have Azure AD enabled, you can still login using a service account. For example, if you run something like:

az aks get-credentials -g {resource-group-name} -n {cluster-name} --admin

You can bypass the Azure AD enabled login mechanism and log in using certificate-based cluster admin credentials. This is not recommended, because this service account has cluster admin permissions and its credential is signed with the same certificate that the cluster service account uses.

The reason Microsoft had this was based on the following scenario. At the beginning you enabled Azure AD for your cluster and now you could connect to the API server using your Azure AD tenant account. Now, you wanted to configure something at the cluster level, which are called cluster role bindings. Cluster role bindings map user identities to Kubernetes roles. For example, let’s say that your account is {someUsername}@microsoft.com. You could create a cluster role binding that binds the Azure AD account to a cluster admin role. Once you had that cluster role binding created, then you could connect using your “@microsoft” Azure AD account credentials and you would be a cluster admin.

The problem here is that when the cluster was created, you enabled Azure AD from the beginning, but there was no way for you to bootstrap this cluster role binding creation. The only way you could create those was if you used the --admin login option. Then you would temporarily be a cluster admin, create all necessary cluster role bindings, and after that you would ideally try to limit or block access to that option, as this is a big security risk.

Now, you no longer need to use the --admin option, because there is another property that you can configure when you create the cluster with az aks create command and this is the parameter called --aad-admin-group-object-ids.

When you create a cluster, you can optionally use this property to pass the ID(s) of the group(s) in Azure AD. Under the hood, Microsoft pre-creates these cluster role bindings for you, so that all members of these groups can log in directly with their Azure AD credentials and be cluster admins.
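
For readers who have not written one by hand, the sketch below generates a cluster role binding of the kind described above: it binds Azure AD groups, identified by object ID, to the built-in cluster-admin cluster role. It illustrates the shape of such an object and is not a copy of what AKS creates internally. The binding name and the group object ID are hypothetical. The output is JSON, which kubectl apply -f accepts just like YAML.

```python
import json
from typing import Dict, List


def cluster_admin_binding(binding_name: str, group_object_ids: List[str]) -> Dict:
    """Build a ClusterRoleBinding that makes Azure AD groups cluster admins."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": binding_name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        # With Azure AD integration, a group subject is named by its object ID.
        "subjects": [
            {"apiGroup": "rbac.authorization.k8s.io", "kind": "Group", "name": oid}
            for oid in group_object_ids
        ],
    }


# Hypothetical binding name and group object ID.
manifest = cluster_admin_binding(
    "aad-cluster-admins", ["11111111-1111-1111-1111-111111111111"]
)
print(json.dumps(manifest, indent=2))
```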

Disable Local Accounts

But, even using the --aad-admin-group-object-ids option you are still able to use the --admin flag of the az aks get-credentials command and login to the API server using local accounts, because those are still enabled by default.

For that reason, it is also best to disable local Kubernetes accounts, when integrating it with Azure AD and do all RBAC level permissions through Azure AD accounts which are auditable, and cloud integrated. This way, there is no back door admin access to your cluster. With Azure AD integration, Kubernetes local accounts are no longer needed and to disable them, you can pass the --disable-local-accounts option at cluster creation time. When you disable local accounts, this also disables the ability to use the --admin option at the end of the az aks get-credentials command.

If you need to enable local accounts for some scenario, make sure you limit access to your cluster configuration file by assigning the appropriate roles. Azure provides you with built in RBAC roles, like AKS Cluster Admin role or AKS Cluster User role and you can select appropriate role as needed or create a custom role as well.

To summarize, the recommended approach for operator identity is:

- Enable AAD with --enable-aad.

- Bootstrap the cluster with --aad-admin-group-object-ids.

- Pass the --disable-local-accounts option during cluster creation, or even during an update of an existing cluster, which disables the --admin flag of the az aks get-credentials command.
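
Put together, the three recommendations translate into a single cluster creation call. The sketch below only assembles and prints the command lines; the helper names, resource group, cluster name and group object ID are hypothetical placeholders.

```python
import shlex
from typing import List


def aad_cluster_args(resource_group: str, cluster_name: str,
                     admin_group_object_ids: List[str]) -> List[str]:
    """Assemble (but do not run) `az aks create` with the three recommended flags."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--enable-aad",
        # Comma-separated Azure AD group object IDs that become cluster admins.
        "--aad-admin-group-object-ids", ",".join(admin_group_object_ids),
        "--disable-local-accounts",
    ]


def user_credentials_args(resource_group: str, cluster_name: str) -> List[str]:
    """Fetch the Azure AD based kubeconfig; note the absence of --admin."""
    return ["az", "aks", "get-credentials", "-g", resource_group, "-n", cluster_name]


# Hypothetical names and group object ID.
print(shlex.join(aad_cluster_args(
    "rg-demo", "aks-demo", ["11111111-1111-1111-1111-111111111111"]
)))
print(shlex.join(user_credentials_args("rg-demo", "aks-demo")))
```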

Use kubelogin for authenticating automated DevOps / Build Agents to your cluster’s API Server

There is one issue though, which has to do with automated agents like DevOps or build agents. When you enable AAD, and you try to run a kubectl command, you get an Azure AD device login experience, which is an interactive browser-based login experience. This works well for end users, but not for automated processes, because this interactive login experience is not supported by most of them. The recommendation for that scenario, is that all DevOps / build agents should use something that is called kubelogin.

kubelogin can be installed on a build / DevOps agent to implement a non-interactive login experience. With it, you can use, for example, a device code login or a non-interactive service principal login, and override the interactive login experience for your build agents or any other automated processes that need to connect to the API server of your cluster.
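
A build agent step for the service principal case could look roughly like the sketch below. It prepares the kubelogin command and the environment it reads, without executing anything. The convert-kubeconfig -l spn invocation and the two environment variable names are taken from the kubelogin documentation as I remember it, so check them against the kubelogin version you install; the function name and the credential values are hypothetical placeholders.

```python
import os
from typing import Dict, List, Tuple


def kubelogin_spn_step(client_id: str,
                       client_secret: str) -> Tuple[List[str], Dict[str, str]]:
    """Prepare the command and environment for a service principal login."""
    # Rewrites the current kubeconfig so kubectl obtains tokens via kubelogin.
    command = ["kubelogin", "convert-kubeconfig", "-l", "spn"]
    env = dict(os.environ)
    # Assumed variable names; kubelogin reads the credentials from these.
    env["AAD_SERVICE_PRINCIPAL_CLIENT_ID"] = client_id
    env["AAD_SERVICE_PRINCIPAL_CLIENT_SECRET"] = client_secret
    return command, env


# Placeholder values; a real agent would read these from its secret store.
command, env = kubelogin_spn_step("<client-id>", "<client-secret>")
print(" ".join(command))
# On the agent, the step would then be executed with something like:
#   subprocess.run(command, env=env, check=True)
# followed by ordinary kubectl commands in the same environment.
```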

Authorization for the operators while accessing the AKS cluster’s API Server

After having authenticated the operators, then we need to authorize them, meaning to figure out what they are allowed to do.

Kubernetes RBAC with Azure AD integration

We have two ways of defining authorization in an AKS cluster, which are complementary:

- Use Azure RBAC for Kubernetes authorization

- Use Kubernetes RBAC with Azure AD integration

  - This is the default and the recommended way:

    - Now that there is a proper authentication mechanism in place, you need to use a Kubernetes model for authorizing users.

    - A Kubernetes model consists of cluster roles, cluster role bindings, roles, and role bindings:

      - A role is what a user can do.

      - A role binding is a mapping between a role and a particular user or group of users via an Azure AD object ID.
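
The relationship between roles and role bindings can be sketched with a toy model. This is not how the Kubernetes authorizer is implemented; it only mirrors the idea that a role lists what is allowed and a binding attaches that role to an Azure AD group in a namespace. All class names, role names and object IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Role:
    name: str
    namespace: str
    verbs: FrozenSet[str]
    resources: FrozenSet[str]


@dataclass(frozen=True)
class RoleBinding:
    role_name: str
    namespace: str
    group_object_id: str  # Azure AD group object ID


def is_allowed(groups: Set[str], verb: str, resource: str, namespace: str,
               roles: List[Role], bindings: List[RoleBinding]) -> bool:
    """Return True if any binding for the caller's groups grants the request."""
    for binding in bindings:
        if binding.namespace != namespace or binding.group_object_id not in groups:
            continue
        for role in roles:
            if (role.name == binding.role_name and role.namespace == namespace
                    and verb in role.verbs and resource in role.resources):
                return True
    return False


roles = [Role("pod-reader", "team-a",
              frozenset({"get", "list", "watch"}), frozenset({"pods"}))]
bindings = [RoleBinding("pod-reader", "team-a", "group-object-id-readers")]
caller_groups = {"group-object-id-readers"}

print(is_allowed(caller_groups, "get", "pods", "team-a", roles, bindings))
print(is_allowed(caller_groups, "delete", "pods", "team-a", roles, bindings))
```

The second call is denied because nothing grants the delete verb: permissions in this model are purely additive, which is also how Kubernetes RBAC behaves.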

Azure RBAC for Kubernetes authorization

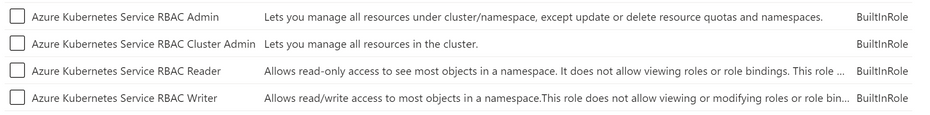

On top of Kubernetes RBAC with Azure AD integration, Microsoft released something called “Azure RBAC for Kubernetes authorization”, and this is basically to make authorization easier from a management point of view. If you do not want to have to create your own cluster roles and bindings and define your own RBAC model from scratch, Microsoft has pre-created certain permissions in Kubernetes that you could use if they suit your needs.

If you look at an AKS cluster in the Azure Portal and you navigate to the Access Control (IAM) blade, you can find various built-in roles like the following:

The roles above are Azure permissions that, at a very high level, you can think of as being mapped directly to roles in Kubernetes. For example, if you go to this section in the portal and add somebody to the Azure Kubernetes Service RBAC Reader role, this user automatically gets read-only access to all namespace resources inside the Kubernetes cluster. You do not have to create either a specific cluster role or a cluster role binding for that user. Just by adding the user to this role in Azure, all those permissions are granted.

To be able to use the “Azure RBAC for Kubernetes Authorization” you need to enable it. You can do this by passing the parameter --enable-azure-rbac in the az aks create command.
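
The same assignment that the portal performs can also be scripted. The sketch below assembles an az role assignment create call that grants the built-in Azure Kubernetes Service RBAC Reader role on a cluster, without running it. The helper name, the assignee object ID and the cluster resource ID are hypothetical placeholders.

```python
import shlex
from typing import List


def rbac_reader_assignment_args(assignee_object_id: str,
                                cluster_resource_id: str) -> List[str]:
    """Assemble (but do not run) a role assignment for the built-in reader role."""
    return [
        "az", "role", "assignment", "create",
        "--role", "Azure Kubernetes Service RBAC Reader",
        "--assignee", assignee_object_id,
        # Scope is the Azure resource ID of the AKS cluster.
        "--scope", cluster_resource_id,
    ]


# Hypothetical IDs, for illustration only.
cluster_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-demo/providers"
    "/Microsoft.ContainerService/managedClusters/aks-demo"
)
print(shlex.join(rbac_reader_assignment_args(
    "22222222-2222-2222-2222-222222222222", cluster_id
)))
```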

Which authorization option to use

Kubernetes RBAC with Azure AD integration allows you to be a lot finer grained regarding the cluster roles and cluster role bindings that you define, and lets you tune your own RBAC model to your requirements. That is why this is the default and recommended approach. If you want, you can complement it with the built-in roles of Azure RBAC for Kubernetes authorization, but those are less flexible. If, for example, you assign a user the Azure Kubernetes Service RBAC Reader role on the cluster, the user gets read permissions to all namespaces and all resources in your Kubernetes cluster, and you cannot adjust what the built-in role itself allows.

You can also take advantage of both authorization models, by creating your own cluster roles and cluster role bindings and at the same time use the Azure RBAC for Kubernetes authorization built-in roles inside the portal.

To summarize, always make sure you have an RBAC access model that makes sense for your requirements and that you can identify the different personas that interact with the API server of the Kubernetes cluster. Connectivity to the API server is a highly privileged operation, so make sure that you follow the least-privilege approach and that all your users have access only to what they really need.

Pod Identity

Your applications run in pods, and they will probably need some sort of identity. If you have integrated your cluster with Azure AD (which you really should), try to avoid passwords, or managing credentials yourself, wherever possible. This is feasible because a managed identity assigned to the cluster can be used by your application to pick up an Azure AD identity and acquire an OAuth2.0 token. The application can then use that token to connect to an Azure SQL database, a Key Vault, a Storage Account, or any other Azure service that trusts Azure AD as its authentication provider.

AAD Pod Identity (Deprecated)

The traditional way to do that with AKS has been “AAD Pod Identity”, which Microsoft has had available in preview for a couple of years. It allows you to set up your pods to acquire their own managed identity and use a token to securely connect to other Azure resources. That way, every application that you deploy to your cluster can be individually secured as to what it can and cannot access, giving you least privilege across your whole deployment. The drawback of pod identity is that it is proprietary to Azure and AKS: you cannot use it and then take your deployment and run it on another Kubernetes cluster outside of AKS.

Azure AD Workload Identity (Preview)

For that reason, Microsoft has deprecated this technology (it will still be supported, though) and now recommends a solution that is more open to other clouds: “Azure AD Workload Identity”. It leverages an Azure AD feature called “Workload Identity Federation”, which allows you to access Azure AD protected resources without needing to manage secrets. It integrates with Kubernetes and uses native Kubernetes features to federate with external identity providers, so the same approach works in other clouds as well as on-premises.

In this model, the Kubernetes cluster becomes a token issuer. Kubernetes signs a token for a service account, the token is sent to Azure AD and exchanged for an AAD token, and eventually you will be able to use it with a managed identity. Currently, it does not work with managed identities, only with service principals in AAD. Support for managed identities is coming and will be in public preview first and Generally Available (GA) after that.

What you'll then be able to do is map your Kubernetes service accounts to managed identities for each individual pod. Each pod will take on an identity and can connect to other Azure resources essentially without having to have any secrets, or not having to go to Key Vault to fetch connection strings or passwords. All that will go away, and you'll be able to flow OAuth2.0 tokens all the way to other services.
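
The trust check at the heart of this exchange can be sketched as a toy model. On the Azure AD side, a federated credential records which issuer (the cluster), which subject (the service account) and which audience it will accept; a service account token is only exchanged if its claims match. The real flow also verifies the token signature against the issuer's published keys, which is omitted here. The class and function names and the issuer URL are hypothetical; the subject format and the api://AzureADTokenExchange audience follow Kubernetes and Azure AD conventions as I understand them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class FederatedCredential:
    issuer: str    # OIDC issuer URL of the cluster
    subject: str   # system:serviceaccount:<namespace>:<name>
    audience: str


def service_account_subject(namespace: str, name: str) -> str:
    """Subject claim Kubernetes puts into a service account token."""
    return f"system:serviceaccount:{namespace}:{name}"


def token_matches(claims: Dict[str, object], cred: FederatedCredential) -> bool:
    """Check issuer, subject and audience only (no signature validation)."""
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    return (claims.get("iss") == cred.issuer
            and claims.get("sub") == cred.subject
            and cred.audience in audiences)


# Hypothetical issuer URL and service account.
cred = FederatedCredential(
    issuer="https://oidc.example.invalid/cluster-1/",
    subject=service_account_subject("payments", "api"),
    audience="api://AzureADTokenExchange",
)
good_claims = {
    "iss": "https://oidc.example.invalid/cluster-1/",
    "sub": "system:serviceaccount:payments:api",
    "aud": ["api://AzureADTokenExchange"],
}
print(token_matches(good_claims, cred))
print(token_matches({**good_claims, "sub": service_account_subject("default", "api")}, cred))
```

Only the pod running under the payments/api service account can obtain the Azure AD token, which is what gives each workload its own identity without any stored secret.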

Use Azure Key Vault for communicating with services (Azure or non-Azure) that do not support Azure AD authentication

Regarding the topic of interaction between different Azure services, there are some different options here. In many cases, service to service communication in Azure can be implemented using OAuth2.0 and Azure AD tokens. This is always the preferred way. For example, if you have an app service and want to connect it with Azure SQL DB, the preferred way to do that is via OAuth2.0 protocol, using Azure AD as your token provider and configuring permissions on both ends.

Some services do not support Azure AD authentication, though, and rely on things like SAS tokens or username / password credentials. Azure SQL DB can work in that mode, and Storage Accounts and Event Hubs can also use SAS tokens, which you need to manage, store, and handle as secrets. You may also want to communicate with non-Azure resources, such as an on-premises component. For secrets that do not use the OAuth2.0 protocol, the recommendation is to store them in Azure Key Vault, which does support the Azure AD OAuth2.0 authentication flow. Once all your secrets are in the Key Vault, you can use the OAuth2.0 authentication flow to unlock the Key Vault and get access to them.

In this scenario, if you have things like workload pod identity, you can use that workload pod identity, to unlock the Key Vault, and get all those secrets that your application uses. To achieve something like this, in AKS, the recommendation would be to use something called the “CSI secret driver” for Key Vault.

When you deploy this driver into a cluster, you can then say, whenever this pod starts, it is going to need those secrets from this Key Vault. Then, the CSI secret driver unlocks the Key Vault, gets the secrets, and mounts them as files or environment variables inside the pod.

You have several options for the identity that the CSI secret driver will use to unlock the Key Vault:

- Use the cluster identity:

  - This is the kubelet identity.

- Bring your own identity:

  - Use one identity which you specifically provide to the CSI secret driver.

- Use the workload pod identity:

  - If you have workload identities enabled, it is recommended to use those, because the secret driver will try to unlock the Key Vault using the pod’s credentials.

  - Otherwise, if you use something like the kubelet identity, then all pods in the cluster will be able to use this identity to unlock the Key Vault.
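
What the driver needs to know is declared in a SecretProviderClass object. The sketch below generates one for the first option (a managed identity attached to the nodes, such as the kubelet identity) and prints it as JSON. The parameter names follow the Azure Key Vault provider for the Secrets Store CSI Driver as I understand it and should be checked against the provider version you deploy; the object name, vault name, tenant ID, client ID and secret name are hypothetical.

```python
import json
from typing import Dict, List


def secret_provider_class(name: str, key_vault_name: str, tenant_id: str,
                          secret_names: List[str],
                          identity_client_id: str) -> Dict:
    """Build a SecretProviderClass that reads Key Vault secrets with a node identity."""
    # The provider expects `objects` as a YAML document embedded in a string.
    objects = "array:\n" + "".join(
        f"  - |\n    objectName: {secret}\n    objectType: secret\n"
        for secret in secret_names
    )
    return {
        "apiVersion": "secrets-store.csi.x-k8s.io/v1",
        "kind": "SecretProviderClass",
        "metadata": {"name": name},
        "spec": {
            "provider": "azure",
            "parameters": {
                # Use a managed identity attached to the node VMSS.
                "useVMManagedIdentity": "true",
                "userAssignedIdentityID": identity_client_id,
                "keyvaultName": key_vault_name,
                "tenantId": tenant_id,
                "objects": objects,
            },
        },
    }


# Hypothetical values, for illustration only.
manifest = secret_provider_class(
    name="app-secrets",
    key_vault_name="kv-demo",
    tenant_id="33333333-3333-3333-3333-333333333333",
    secret_names=["db-password"],
    identity_client_id="44444444-4444-4444-4444-444444444444",
)
print(json.dumps(manifest, indent=2))
```

A pod then references this object by name in a CSI volume, and the listed secrets appear as files under the mount path when the pod starts.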

AKS Design Review Series - Contents

- Part 1.1: Networking - Ingress / Egress

- Part 1.2: Networking - AKS Networking & Private Connectivity

- Part 2.1: Identity & Access Control - Cluster, Operator & Pod Identity (current)