This post has been republished via RSS; it originally appeared at: New blog articles in Microsoft Tech Community.

We are happy to introduce Azure Container Apps, a Preview feature that enables you to run microservices and containerized applications on a serverless platform.

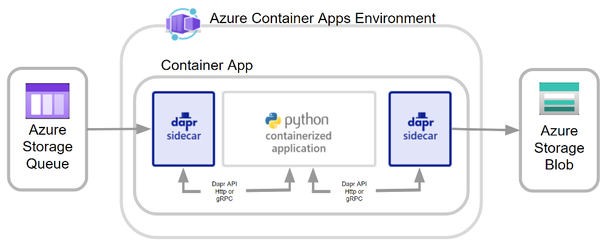

Because microservices are distributed, a system composed of them must account for failures, retries, and timeouts. While Container Apps provides the building blocks for running microservices, Dapr (Distributed Application Runtime) provides an even richer microservices programming model.

Azure Container Apps offers a fully managed version of the Dapr APIs when building microservices. When you use Dapr in Azure Container Apps, you can enable sidecars to run next to your microservices that provide a rich set of capabilities. Available Dapr APIs include Service to Service calls, Pub/Sub, Event Bindings, State Stores, and Actors.

In this blog, we demonstrate a sample Dapr application deployed in Azure Container Apps, which can:

- Read an input message from an Azure Storage Queue using the Dapr input binding feature

- Process the message using a Python application running inside a Docker container

- Write the result to Azure Storage Blob using the Dapr output binding feature

Prerequisites

- Azure account with an active subscription

- Azure CLI

  Ensure you're running the latest version of the CLI via the upgrade command:

  az upgrade

- Docker Hub account to store your Docker image

Deployment Steps Overview

The complete deployment can be separated into 4 steps:

- Create required Azure resources

- Build a Docker image that contains the Python application and Dapr components

- Deploy an Azure Container App with the Docker image and enable the Dapr sidecar

- Run a test to confirm your Container App can read messages from the Storage Queue and push the processed result to Storage Blob

Create required Azure resources

Create a PowerShell script named CreateAzureResourece.ps1 with the following Azure CLI commands:

This script will:

- Install the Azure CLI Container App extension

- Create a Resource Group

- Create a Log Analytics workspace

- Create Container App Environment

- Create Storage Account

- Create Storage Queue (Dapr input binding source)

- Create Storage Container (Dapr output binding target)

Note: Define your own values for the following variables in the first 7 lines of the script:

- $RESOURCE_GROUP

- $LOCATION (during the Preview stage, only northeurope and canadacentral can be used)

- $CONTAINERAPPS_ENVIRONMENT

- $LOG_ANALYTICS_WORKSPACE

- $AZURE_STORAGE_ACCOUNT

- $STORAGE_ACCOUNT_QUEUE

- $STORAGE_ACCOUNT_CONTAINER

Run the PowerShell script.

Build a Docker image that contains the Python application and Dapr components

All the source code is saved in the following structure:

    |- Base Folder
       |- Dockerfile
       |- startup.sh
       |- requirements.txt
       |- app.py

Dockerfile

startup.sh

daprcomponents.yaml

The <AZURE_STORAGE_ACCOUNT>, <STORAGE_ACCOUNT_CONTAINER>, and <STORAGE_ACCOUNT_QUEUE> values are the ones you defined in your CreateAzureResourece.ps1 script.

The <AZURE_STORAGE_KEY> value can be retrieved with:

$AZURE_STORAGE_KEY=(az storage account keys list --resource-group $RESOURCE_GROUP --account-name $AZURE_STORAGE_ACCOUNT --query '[0].value' --out tsv)
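The placeholder substitution described above can also be scripted. The following is a minimal sketch, assuming the component file contains the placeholders literally in the form <NAME>. The function name fill_placeholders and the sample fragment are hypothetical and do not reproduce the real daprcomponents.yaml layout.

```python
import re

# Matches tokens such as <AZURE_STORAGE_ACCOUNT>
PLACEHOLDER = re.compile(r"<([A-Z_]+)>")


def fill_placeholders(template: str, values: dict) -> str:
    """Replace each <NAME> token with values[NAME]; fail loudly on unknown names."""

    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for <{name}>")
        # Returning from a function avoids backslash-escape processing in keys
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


# Illustrative fragment only (hypothetical), not the actual component file
sample = "account=<AZURE_STORAGE_ACCOUNT> queue=<STORAGE_ACCOUNT_QUEUE>"
print(fill_placeholders(sample, {
    "AZURE_STORAGE_ACCOUNT": "mystorageaccount",
    "STORAGE_ACCOUNT_QUEUE": "myqueue",
}))
```

Raising on a missing name, rather than leaving the token in place, helps catch a forgotten value before the file is deployed.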

requirements.txt

app.py

It uses the following code to receive input from the Dapr queueinput binding.

    @app.route("/queueinput", methods=['POST'])
    def incoming():
        # The raw request body is the message Dapr read from the Storage Queue
        incomingtext = request.get_data().decode()
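To make the contract between the sidecar and the application concrete, here is a minimal sketch of the same endpoint written with the standard library's http.server instead of the web framework the original app.py uses. It assumes Dapr probes the route named after the binding with an OPTIONS request and then delivers each queue message as a POST body, and that a 200 response acknowledges the message. The names BindingHandler, make_server, and received are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

BINDING_ROUTE = "/queueinput"  # must match the input binding name
received = []  # messages kept in memory for illustration only


class BindingHandler(BaseHTTPRequestHandler):
    def do_OPTIONS(self):
        # Assumption: a 2xx reply to the probe tells Dapr the app accepts this binding
        self._reply(200 if self.path == BINDING_ROUTE else 404)

    def do_POST(self):
        if self.path != BINDING_ROUTE:
            self._reply(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        received.append(self.rfile.read(length).decode())
        self._reply(200)  # acknowledge the message

    def _reply(self, status):
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the console quiet in this sketch


def make_server(port=6000, host="0.0.0.0"):
    # Port 6000 is the port the application in this post listens on
    return HTTPServer((host, port), BindingHandler)
```

Calling make_server().serve_forever() starts the listener. The real application would process the message where this sketch appends it to a list.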

It uses the following code to write output to the Dapr bloboutput binding.

    # daprPort holds the Dapr sidecar's HTTP port as a string
    url = 'http://localhost:' + daprPort + '/v1.0/bindings/bloboutput'
    uploadcontents = '{ "operation": "create", "data": "' + base64_message + '", "metadata": { "blobName": "' + outputfile + '" } }'
    requests.post(url, data=uploadcontents)
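Building the JSON body by string concatenation breaks if the blob name contains a quote or backslash. The following is a minimal sketch of the same request built with json.dumps. It assumes the sidecar port is available in the DAPR_HTTP_PORT environment variable, falling back to Dapr's default of 3500. The helper names build_blob_request and post_to_binding are hypothetical, and urllib stands in for requests so that the sketch needs only the standard library.

```python
import base64
import json
import os
import urllib.request


def build_blob_request(content: bytes, blob_name: str, binding: str = "bloboutput"):
    """Return (url, json_body) for a Dapr output binding 'create' operation."""
    port = os.getenv("DAPR_HTTP_PORT", "3500")  # assumption: set by the sidecar
    url = f"http://localhost:{port}/v1.0/bindings/{binding}"
    payload = {
        "operation": "create",
        # Base64-encode the content, mirroring base64_message in the original code
        "data": base64.b64encode(content).decode("ascii"),
        "metadata": {"blobName": blob_name},
    }
    return url, json.dumps(payload)  # json.dumps handles all escaping


def post_to_binding(url: str, body: str, timeout: float = 10.0) -> int:
    """POST the JSON body to the sidecar and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Inside the container, usage would look like url, body = build_blob_request(data, outputfile) followed by post_to_binding(url, body).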

Use the following Docker commands to build the Docker image and push it to Docker Hub.

Deploy an Azure Container App with the Docker image and enable the Dapr sidecar

Use the "az containerapp create" command to create an app in your Container App environment.

After you successfully run the containerapp create command, you should be able to see a new App Revision being provisioned.

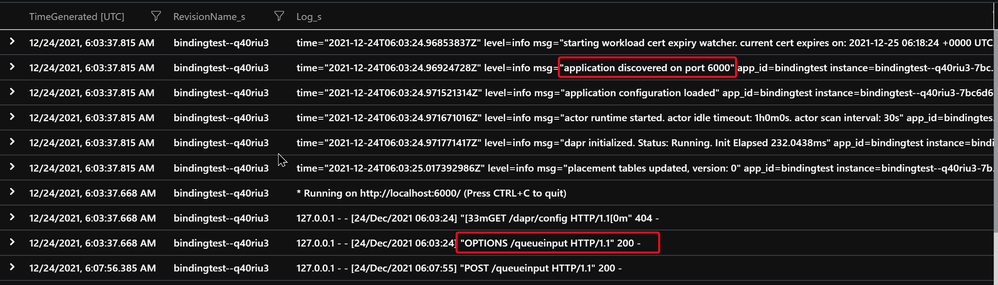

Go to Container App --> Logs in the portal and run the following query:

    ContainerAppConsoleLogs_CL
    | where RevisionName_s == "<your app revision name>"
    | project TimeGenerated, RevisionName_s, Log_s

In the log, we should see:

- Successful init for the output binding bloboutput (the name is defined in daprcomponents.yaml)

- Successful init for the input binding queueinput (the name is defined in daprcomponents.yaml)

- Application discovered on port 6000 (as defined in the Python application code)

- Dapr sending an OPTIONS request to /queueinput and receiving an HTTP 200 response, which means the application can receive messages from the input Storage Queue

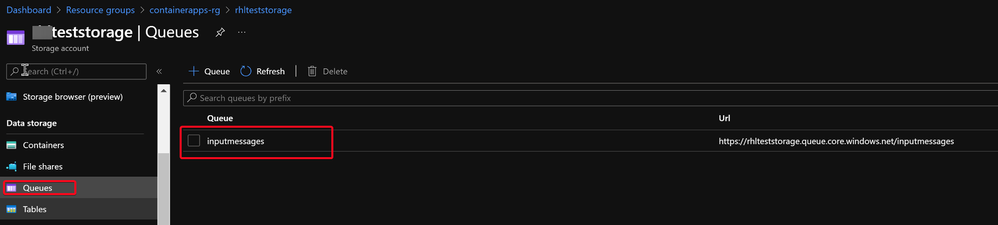

Run a test to confirm your Container App can read messages from the Storage Queue and push the processed result to Storage Blob

Now, let's add a new message to the Storage Queue.

After the application has run successfully, we can find the output audio file in the output Storage Blob container.

To check the application log, go to Container App --> Logs in the portal and run the following query:

    ContainerAppConsoleLogs_CL
    | where RevisionName_s == "<your app revision name>"
    | project TimeGenerated, RevisionName_s, Log_s